Accelerating AI model training with transfer learning

Humans possess an extraordinary ability to transfer knowledge. When faced with a new problem or challenge, we naturally tap into our reservoir of past experiences and apply relevant expertise to resolve it. It’s like having a powerful tool that allows us to navigate tasks with ease and efficiency. For example, if you know how to ride a bicycle and want to learn to ride a motorbike, your experience with the bicycle helps you with balancing and steering, making the learning process smoother than starting from scratch.

The idea of transfer learning in machine learning was inspired by this human approach to learning. Transfer learning is a technique in which knowledge gained from training a model on one task or domain is used to improve the model’s performance on a different task or domain. The approach is most beneficial when the second task shares similarities with the first, or when only limited data is available for the second task.

The fundamental idea behind transfer learning is to start from a deep learning network that has already been trained on a related problem, where it has learned general features and patterns from the data. Initializing a network with these learned weights provides a starting point for the new task, and the network can then be fine-tuned or further trained on a smaller dataset specific to the new problem. Building on this foundation can significantly reduce the training time required for the new but related problem.

In this article, we will explore the concept of transfer learning, its mechanics, and the reasons and circumstances under which it should be applied. Furthermore, we will delve into the various approaches to implementing transfer learning.

- What is transfer learning?

- Features of transfer learning

- Why use transfer learning?

- When to use transfer learning?

- How does transfer learning work?

- Types of transfer learning

- Transfer learning techniques

- Common approaches to transfer learning

- How to train a CNN for image classification using transfer learning?

- Benefits of transfer learning

- Applications of transfer learning

What is transfer learning?

Transfer learning is a machine learning approach that uses knowledge acquired from one task to improve performance on a different but related task. For example, a model trained to recognize backpacks in pictures has already learned visual features that can be reused to help identify other objects, such as sunglasses, a cap or a table.

The main idea behind transfer learning is to use what the model already knows from solving a task with labeled data and apply that knowledge to a new task that doesn’t have much data. Instead of starting from scratch, we begin with the patterns and information the model learned from a similar task. Transfer learning is commonly used in tasks like analyzing images or understanding language. It’s helpful because it allows us to take advantage of the hard work already done by pre-trained models, which saves time and computational resources.

The term “transfer learning” is inspired by human psychology; for instance, someone who knows how to play the piano can more readily learn to play the violin without starting from scratch.

Features of transfer learning

The features of transfer learning include:

Reusing a model

Transfer learning’s pivotal feature is the ability to repurpose a developed model for another task. For instance, when dealing with the detection of early-stage Parkinson’s disease, a lack of data may hinder the direct training of a deep neural network. Here, a reasonable approach would be to first train the neural network on a related, data-rich task, such as detecting advanced stages of the disease. The learned knowledge can then be applied to the more nuanced early-stage detection task.

The choice to use the entire model or specific components depends on the unique requirements of your task. Employing the entire model for new predictions is plausible if both tasks utilize the same input data. Alternatively, fine-tuning specific parts of the model, like the output and specialized layers, allows for customization to meet the distinctive needs of the new task. This method optimizes the application of existing knowledge to the specific challenges of early-stage detection.

Using a pre-trained model

Another effective feature entails harnessing models that have already undergone training (pre-trained models). A variety of such pre-existing models are available, necessitating thorough research to identify the most suitable pre-trained model. The optimal combination of fresh, trainable and reusable layers is contingent on the specific problem’s intricacies.

For instance, prominent platforms like Keras, Model Zoo, and TensorFlow offer a spectrum of pre-trained models that cater to diverse needs, such as feature extraction, transfer learning, predictive tasks, and fine-tuning exercises.

Feature extraction

Machine learning models work through feature extraction: they detect patterns and features in the input data and produce output accordingly. Earlier, experts manually designed features for machine learning, but with deep learning, machines can figure out useful features on their own. Still, deciding which features the system should pay attention to is important. Neural networks can learn which features matter during training, and they can quickly find the right mix of features, even for complicated tasks that would normally need a lot of human effort.

These learned features can be used for other tasks, too. To make this work, you use the early layers of the network to obtain a representation of the input, but not the final output, which is too specific to the original task. Feed the input data into the network and treat one of the intermediate layers as the output layer. This intermediate output then holds the essence of the original data, which can be very useful for different purposes.
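As a minimal sketch of this idea in PyTorch, the snippet below treats an intermediate layer of a small network as the output. The toy network, its layer sizes, and the names `source_model` and `feature_extractor` are illustrative assumptions, and the weights here are random rather than pre-trained.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for a trained network; in practice the weights
# would come from training on the source task.
source_model = nn.Sequential(
    nn.Linear(16, 32),
    nn.ReLU(),
    nn.Linear(32, 8),   # intermediate representation
    nn.ReLU(),
    nn.Linear(8, 3),    # task-specific output layer (3 source classes)
)

# Keep the first four modules and drop the task-specific head, so the
# intermediate activations become the output of the extractor.
feature_extractor = source_model[:4]
feature_extractor.eval()

batch = torch.randn(5, 16)            # 5 example inputs with 16 raw features
with torch.no_grad():
    features = feature_extractor(batch)

print(features.shape)                 # torch.Size([5, 8])
```

The resulting 8-dimensional vectors could then be fed to a new, smaller model trained for the target task.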

Why use transfer learning?

The utilization of transfer learning in deep learning brings forth various advantages, making it an attractive approach. The primary benefits include saving training time, improving neural network performance, and reducing the need for extensive amounts of data.

Training a neural network from scratch often demands a substantial amount of data. However, in many scenarios, access to such data is limited or not readily available. This is where transfer learning proves to be useful. Instead of training a model from scratch for a new task, transfer learning allows us to leverage pre-trained models that have already learned representations from large amounts of data. This is particularly advantageous in natural language processing, where constructing large labeled datasets requires expert knowledge and significant resources.

Furthermore, transfer learning reduces training time significantly. Training a deep neural network from scratch on a complex task can be time-consuming, sometimes taking days or weeks. However, the initial training time can be minimized by utilizing transfer learning since the pre-trained model already has important features and patterns, saving valuable time and computational resources. Thus, transfer learning addresses the challenges of limited data availability and lengthy training times, allowing the creation of effective machine learning models with smaller datasets and reducing the time required for model training.

When to use transfer learning?

Transfer learning is particularly useful in the following situations:

Data scarcity

When dealing with limited data, training a model from scratch often leads to underwhelming performance due to overfitting. In such cases, leveraging a pre-trained model can significantly improve model accuracy by capitalizing on previously learned features and patterns.

Time constraints

Developing some machine learning models from scratch can be highly time-intensive. Utilizing a pre-trained model is practical and efficient when time is of the essence, as it allows practitioners to build upon previously acquired knowledge and expedite the development process.

Computational constraints

Training models for complex tasks usually requires extensive computational resources. Employing a pre-trained model can substantially reduce the computational burden, enabling the efficient execution of machine learning tasks even with limited resources.

Domain similarity

Transfer learning is highly effective when the source and target tasks share similarities, allowing the transfer of knowledge gained from one domain to enhance performance in a related one.

Enhanced generalization

Transfer learning can also be beneficial in scenarios where enhanced model generalization is required, as models trained on diverse and extensive data from a source task tend to generalize better to unseen data in the target task.

Rapid prototyping

When quick model prototyping is essential, transfer learning enables the swift development of models, facilitating immediate testing and refinement.

How does transfer learning work?

Before delving into the operational mechanics of transfer learning, it is essential to understand the fundamentals of deep learning. A deep neural network comprises numerous weights that connect the layers of neurons within the network. These weights, usually real values, are adjusted during training and are applied to the inputs, including the outputs of intermediate layers, as data is fed forward through the network to produce an output classification. Transfer learning starts from a network whose weights have already been set by training on a similar problem. By leveraging this network, the training duration for the new but related problem can be significantly reduced while achieving comparable performance.

When employing transfer learning, we encounter the concept of layer freezing. A layer, whether a Convolutional Neural Network (CNN) layer, hidden layer, or a subset of layers, is considered frozen when it is no longer trainable, meaning its weights remain unchanged during the training process. Conversely, unfrozen layers undergo regular training updates.

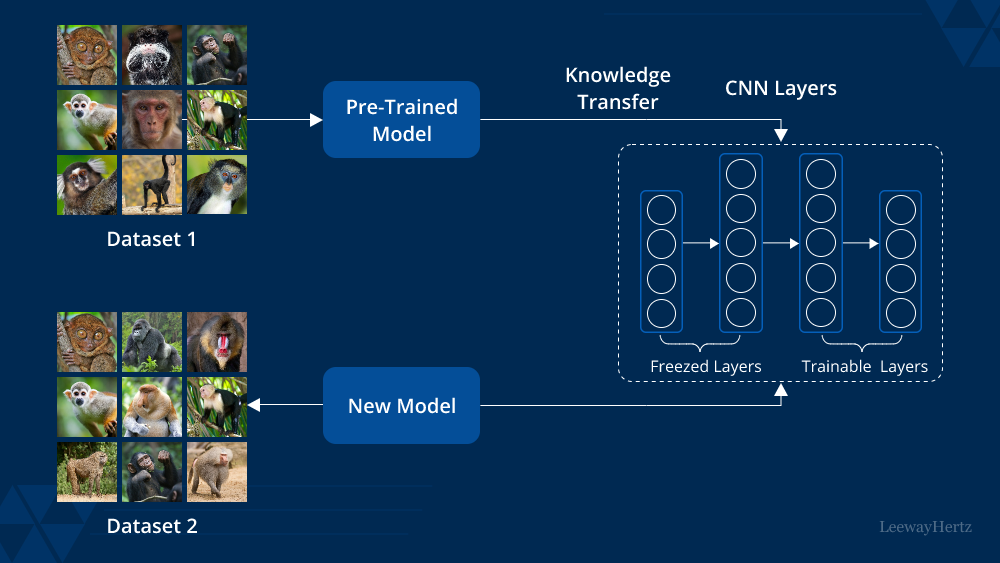

When utilizing transfer learning to address a problem, we select a pre-trained model as our base model. There are two possible approaches to incorporating knowledge from the pre-trained model. The first approach involves freezing certain layers of the pre-trained model and training the remaining layers on our new dataset for the specific task. The second approach entails creating a new model and extracting selected features from the pre-trained layers to incorporate them into the newly constructed model. In both cases, only the shared features are taken from the pre-trained model, while the rest of the model is adapted to the new dataset through training.

Which layers to freeze and which to train depends on how much you want to inherit from the pre-trained model. The more of the pre-trained model’s features you want to reuse, the more layers you should consider freezing.

Let’s now consider a scenario where the pre-trained model specializes in detecting humans in images, and our objective is to leverage this knowledge to detect cars, which involves an entirely different dataset. In this case, freezing many layers would not be ideal, because it would retain not only low-level features but also high-level features like noses and eyes, which are irrelevant to the new dataset (car detection). Instead, we would keep only the low-level features from the early layers of the base network and train the rest of the network on the new dataset.

Therefore, the decision on which layers to freeze and which layers to train depends on the similarity of features between the pre-trained model and the new dataset, ensuring that we extract relevant features while adapting the model to the specific requirements of the new task.
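Layer freezing can be sketched in PyTorch as follows. This is an illustration under stated assumptions, not a prescribed recipe: the small `base_model` stands in for a real pre-trained network, and the choice to freeze only the first convolution and to use a two-class head is hypothetical.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical base network standing in for a pre-trained model.
base_model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),   # low-level features
    nn.ReLU(),
    nn.Conv2d(8, 16, kernel_size=3, padding=1),  # higher-level features
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(16, 10),                           # original 10-class head
)

# Freeze only the first convolution: its weights receive no gradient updates.
for param in base_model[0].parameters():
    param.requires_grad = False

# Replace the head for a new two-class task (for example, car / no car).
base_model[6] = nn.Linear(16, 2)

# Hand only the trainable parameters to the optimizer.
trainable = [p for p in base_model.parameters() if p.requires_grad]
optimizer = torch.optim.SGD(trainable, lr=0.01, momentum=0.9)

# One training step on random data to show the effect of freezing.
frozen_before = base_model[0].weight.clone()
inputs = torch.randn(4, 3, 32, 32)
labels = torch.tensor([0, 1, 0, 1])
loss = nn.CrossEntropyLoss()(base_model(inputs), labels)
loss.backward()
optimizer.step()

# The frozen layer is unchanged after the update step.
print(torch.equal(frozen_before, base_model[0].weight))  # True
```

Freezing more layers would simply mean extending the loop that sets `requires_grad = False` to cover more of the early modules.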

Launch your project with LeewayHertz!

Supercharge your ML projects with our extensive AI development expertise coupled with our deep knowledge of transfer learning and other vital ML techniques.

Types of transfer learning

The different types of transfer learning in deep learning are:

Domain adaptation

Domain adaptation is a concept within the broader field of transfer learning, which involves leveraging knowledge gained from one or more source domains to improve the performance of a target domain. In domain adaptation, the focus is on situations where there are differences between the source and target domains in terms of the types of data they contain and the patterns in those data.

Imagine you have a machine learning model that works well on a specific task in a certain context (the source domain), but you want to apply it to a related task in a different context (the target domain). However, the data in the target domain might look different from what the model has seen before. This could be due to variations in the data collection process, different sensors, diverse user behaviors, or other factors. As a result, the model’s performance might degrade when applied directly to the target domain due to these differences.

Domain adaptation aims to overcome this challenge by aligning the source and target domains as closely as possible. The goal is to make the distribution of data in the target domain more similar to that of the source domain, even though they might not be identical. This alignment reduces the “domain gap” between the two domains, allowing the knowledge gained from the source domain to be more effectively transferred to the target domain.

Domain confusion

Different layers identify features of varying complexities in neural networks. To achieve better transfer of knowledge across different domains, it’s advantageous to develop algorithms that ensure these features are consistent across various contexts. This is known as domain invariance.

To attain domain invariance, making the feature representations between source and target domains as similar as possible is crucial. A strategy to accomplish this involves introducing a concept called domain confusion. The idea is to add an objective to the neural network during training that confuses the network itself regarding whether a feature belongs to the source or target domain.

This is where the domain confusion loss comes into play. It’s a key part of the training process, pushing the network to align the distributions of features from both domains. This loss function encourages the network to make it hard for a domain classifier to predict a given feature’s origin (source or target).

The process involves a dual optimization: minimizing the classification loss for source data and minimizing the domain confusion loss for all data samples (source and target). This way, the network learns to extract informative features for the main task and is adaptable across different domains. Ultimately, this helps the network perform well on its primary task while being robust to domain differences, leading to improved performance when faced with new and unseen domains.
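The dual objective can be illustrated with plain Python. The sketch below assumes one common formulation, in which the confusion loss is the cross-entropy between the domain classifier’s prediction and a uniform distribution over domains; the function names and the `weight` trade-off parameter are hypothetical. In a real setup the classification term would be computed on labeled source samples only.

```python
import math

def cross_entropy(probs, target_index):
    """Negative log-probability assigned to the correct class."""
    return -math.log(probs[target_index])

def domain_confusion_loss(domain_probs):
    """Cross-entropy between the domain classifier's prediction and a
    uniform distribution over domains; smallest when the classifier
    cannot tell source from target."""
    k = len(domain_probs)
    return -sum(math.log(p) for p in domain_probs) / k

def total_loss(class_probs, label, domain_probs, weight=0.1):
    """Classification loss plus weighted confusion loss
    (weight is a hypothetical trade-off hyperparameter)."""
    return cross_entropy(class_probs, label) + weight * domain_confusion_loss(domain_probs)

confident = domain_confusion_loss([0.99, 0.01])  # domains easy to tell apart
confused = domain_confusion_loss([0.5, 0.5])     # domains indistinguishable
print(confident > confused)  # True
```

Minimizing the second term therefore pushes the feature extractor toward representations on which the domain classifier outputs roughly equal probabilities for both domains.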

Multi-task learning

In multi-task learning, multiple tasks within the same domain are learned simultaneously, without distinguishing between source and target tasks.

We learn a set of tasks, t(1), t(2), …, t(n), co-learning them all at once.

This approach facilitates knowledge transfer across scenarios and enables the development of a comprehensive feature vector from diverse scenarios within the same domain. The learner optimizes learning and performance across all n tasks by leveraging shared knowledge.
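A shared encoder with one output head per task is a common way to realize this. The following PyTorch sketch assumes two classification tasks and made-up layer sizes; the class name `MultiTaskNet` and the unweighted sum of losses are illustrative choices.

```python
import torch
import torch.nn as nn

class MultiTaskNet(nn.Module):
    """Shared encoder with one small head per task (sizes are illustrative)."""

    def __init__(self, in_features=20, hidden=16, task_classes=(3, 2)):
        super().__init__()
        self.shared = nn.Sequential(nn.Linear(in_features, hidden), nn.ReLU())
        self.heads = nn.ModuleList([nn.Linear(hidden, c) for c in task_classes])

    def forward(self, x):
        z = self.shared(x)                    # common feature vector
        return [head(z) for head in self.heads]

torch.manual_seed(0)
model = MultiTaskNet()
x = torch.randn(8, 20)
targets = [torch.randint(0, 3, (8,)), torch.randint(0, 2, (8,))]

criterion = nn.CrossEntropyLoss()
outputs = model(x)

# Sum the per-task losses so that every task shapes the shared encoder.
loss = sum(criterion(out, tgt) for out, tgt in zip(outputs, targets))
loss.backward()
```

Because the gradient of every task flows through `model.shared`, the encoder ends up with features that serve all n tasks at once.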

One-shot learning

One-shot learning refers to a classification task where the model is provided with only one or a few examples to learn from, and it is then expected to classify a larger number of new examples in the future. This scenario often arises in face recognition, where the model must correctly classify faces under varying conditions, such as different facial expressions, lighting conditions, accessories, and hairstyles, with only one or a few template photos as initial input.

In one-shot learning, the model heavily relies on knowledge transfer from a base model trained on a limited number of examples for each class. The effectiveness of the model’s performance in this context hinges on its ability to generalize from the limited available training samples and make accurate predictions on new, unseen examples.
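One simple way to picture this is nearest-template matching on embeddings. In the sketch below, the embedding vectors are invented for illustration and are assumed to come from a pre-trained encoder; the function names are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def classify_one_shot(query, templates):
    """Return the label whose single template embedding is most similar."""
    return max(templates, key=lambda label: cosine_similarity(query, templates[label]))

# One hypothetical embedding per person, as produced by a pre-trained encoder.
templates = {
    "alice": [0.9, 0.1, 0.2],
    "bob": [0.1, 0.8, 0.3],
}

query = [0.85, 0.2, 0.1]   # embedding of a new photo of an unknown person
predicted = classify_one_shot(query, templates)
print(predicted)  # alice
```

The quality of such a classifier depends almost entirely on how well the transferred encoder separates classes it has seen only once.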

Zero-shot learning

Zero-shot learning is a strategy in which transfer learning is employed without relying on labeled data samples from a specific class. Unlike other learning approaches, zero-shot learning does not require instances of a class during training. Instead, it relies on additional data to understand unseen data during training.

In zero-shot learning, the emphasis is placed on three key components: the traditional input variable (x), the traditional output variable (y), and a task-specific random variable that describes the task. By leveraging these components, zero-shot learning enables the model to handle scenarios where labeled data for the target classes is unavailable. This becomes particularly useful in applications like machine translation, where labeled data in the target language may not exist.

Transfer learning techniques

Transfer learning techniques vary based on the specific application domain, task, and available data. Before deciding on a transfer learning technique, it’s important to answer the following questions:

- What knowledge from the source task can be transferred to enhance the performance of the target task?

- When should knowledge be transferred, and when should it not be, to improve the target task without compromising results?

- Considering the current domain and task requirements, how can the knowledge acquired from the source model be effectively transferred?

Transfer learning techniques can be categorized into three main groups, depending on the task domain and the presence of labeled or unlabeled data.

Inductive transfer learning

Inductive transfer learning is a technique that assumes the source and target domains are the same, even though the tasks performed by the model are different. The algorithms aim to utilize the knowledge acquired from the source model to enhance performance on the target task. By leveraging a pre-trained model that has already learned the domain-specific features, the model starts at a more advantageous position than training from scratch. Inductive transfer learning can be divided into two subcategories based on whether the source domain has labeled data: multi-task learning, when labeled source data is available, and self-taught learning, when it is not.

Unsupervised transfer learning

Unsupervised transfer learning shares similarities with inductive transfer learning. The main distinction lies in the type of tasks and datasets involved. In unsupervised transfer learning, the algorithms primarily focus on unsupervised tasks and utilize unlabeled datasets in both the source and target tasks.

While inductive transfer learning relies on labeled data being available for the target task, unsupervised transfer learning takes a different approach. It leverages unsupervised learning techniques, where the algorithms learn from unlabeled data without explicit supervision or labels.

The objective of unsupervised transfer learning is to extract meaningful patterns, representations, or features from the unlabeled data in the source domain and apply this knowledge to improve the performance of the target task, which also consists of unlabeled data. Doing so aims to transfer the learned knowledge and enhance the model’s ability to generalize and perform well on the target task without relying on explicit labels.

Transductive transfer learning

Transductive transfer learning is employed in scenarios where the domains of the source and target tasks are not exactly the same but are interconnected. This strategy involves identifying similarities between the source and target tasks.

In such cases, the source domain often has a significant amount of labeled data, while the target domain primarily consists of unlabeled data. The goal is to leverage the labeled data from the source domain to improve the performance of the target task, even though the domains themselves are not identical.

Transductive transfer learning focuses on using the available labeled data in the source domain to extract valuable insights and patterns that can be applied to the unlabeled data in the target domain. This aims to enhance the model’s performance on the target task by leveraging the similarities and interconnections between the two domains.

Common approaches to transfer learning

In the context of transfer learning, another way to categorize strategies is based on the similarity of the domains, independent of the type of data samples available for training.

Homogeneous transfer learning

Homogeneous transfer learning addresses scenarios where domains share the same feature space. It focuses on handling slight differences in marginal distributions between domains. These approaches effectively adapt the domains by addressing sample selection bias or covariate shift. Let’s understand this by breaking it down:

Instance transfer

This scenario often occurs when a large amount of labeled data is available in the source domain but only a limited number of labeled instances in the target domain. The two domains share a feature space and differ mainly in their marginal distributions.

To illustrate, let’s consider building a model for cancer diagnosis in a specific region where the elderly population is predominant. Although limited labeled instances may be available in the target region, relevant data from another region where the majority are young people could be accessible. However, directly transferring all the data from the other region may not be effective due to the existing marginal distribution difference, as the elderly are at a higher risk of cancer compared to younger individuals.

In such a scenario, it becomes natural to consider adapting the marginal distributions. The instance-based transfer allows the model to effectively incorporate knowledge from the source domain while addressing the marginal distribution difference, thereby improving the performance of cancer diagnosis in the target region with limited labeled data.
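One way to adapt the marginal distributions is to reweight source instances by the ratio of target to source proportions. The sketch below applies this to the age example; the population shares are invented for illustration, and `importance_weights` is a hypothetical helper.

```python
def importance_weights(source_share, target_share):
    """Weight for each group = target proportion / source proportion."""
    return {group: target_share[group] / source_share[group] for group in source_share}

# Hypothetical age mix in each region (fractions sum to 1).
source_share = {"young": 0.8, "elderly": 0.2}     # data-rich source region
target_share = {"young": 0.25, "elderly": 0.75}   # elderly-dominated target region

weights = importance_weights(source_share, target_share)

# Per-sample weights for a small source batch; these would be passed to a
# weighted loss so that elderly patients count more during training.
source_batch = ["young", "young", "elderly", "young", "elderly"]
sample_weights = [weights[group] for group in source_batch]
print(sample_weights)
```

With these weights, the reweighted source data resembles the age distribution of the target region more closely than the raw source data does.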

Parameter transfer

Parameter-based transfer learning approaches focus on transferring knowledge at the model/parameter level. This approach involves transferring learned knowledge through the shared parameters of the source and target domain models. One common method is to create multiple source learner models and then optimally combine them, similar to ensemble learning, to form an enhanced target learner.

The underlying idea behind parameter-based methods is that a well-trained model on the source domain has acquired a well-defined structure, which can be transferred to the target model if the two tasks are related. In deep learning models, there are typically two ways to share weights: soft weight sharing and hard weight sharing.

In soft weight sharing, the model is expected to be close to the already learned features and is often penalized if its weights deviate significantly from a given set. This encourages the model to retain the learned features while adapting to the target task.

In hard weight sharing, the exact weights are shared among different models. This approach imposes the constraint that the shared weights remain unchanged during the transfer learning process.
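Soft weight sharing can be expressed as a penalty on the distance between the current weights and the pre-trained ones. The snippet below is a minimal sketch using a squared L2 distance; the weight values, the `strength` coefficient, and the task loss of 0.4 are all made-up numbers.

```python
def soft_sharing_penalty(weights, pretrained, strength=0.01):
    """L2 penalty on the distance from the pre-trained weights."""
    return strength * sum((w - p) ** 2 for w, p in zip(weights, pretrained))

pretrained = [0.5, -1.2, 0.3]   # weights learned on the source task
close = [0.6, -1.1, 0.3]        # small adaptation to the target task
far = [2.0, 0.5, -1.0]          # large drift from the source solution

task_loss = 0.4                 # hypothetical loss on target data
loss_close = task_loss + soft_sharing_penalty(close, pretrained)
loss_far = task_loss + soft_sharing_penalty(far, pretrained)
print(loss_close < loss_far)    # True
```

Hard weight sharing corresponds to the limiting case in which the shared weights are used as they are, with no deviation allowed.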

Feature-representation transfer

Feature-representation transfer learning approaches involve transforming the original features to create a new feature representation. This category can be divided into two subcategories: asymmetric and symmetric feature-based transfer learning.

- Asymmetric approaches focus on transforming the source features to align them with the target feature space. In other words, the features from the source domain are adjusted and mapped to fit the target feature space. However, this transformation may result in some information loss due to the marginal differences between the domains’ feature distributions.

- Symmetric approaches aim to find a common latent feature space where the source and target features can be transformed into a unified representation. By establishing this common feature space, the features from both domains can be effectively aligned and mapped to a shared representation. This symmetric transformation helps preserve the relevant information from both domains while mitigating the impact of marginal distribution differences.

Both asymmetric and symmetric feature-based transfer learning approaches offer valuable techniques for leveraging the information contained in the features and enabling effective knowledge transfer between different domains.
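To make the symmetric case concrete, the sketch below standardizes a single feature separately in each domain so that both land on a common scale. This is a deliberately simple stand-in for a learned latent space, not a specific published method, and the feature values are invented.

```python
from statistics import mean, pstdev

def standardize(values):
    """Map one domain's feature values to zero mean and unit variance."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

# Hypothetical single feature measured on different scales in each domain.
source_feature = [10.0, 12.0, 14.0, 16.0]
target_feature = [100.0, 130.0, 160.0, 190.0]

# Symmetric: both domains are transformed into the same shared scale,
# rather than mapping the source onto the target.
source_aligned = standardize(source_feature)
target_aligned = standardize(target_feature)

for s, t in zip(source_aligned, target_aligned):
    print(round(s, 3), round(t, 3))
```

An asymmetric approach would instead leave the target features untouched and transform only the source features to match them.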

Relational-knowledge transfer

Relational-based transfer learning approaches primarily concentrate on learning and leveraging the relationships between the source and target domains. The main objective is to extract the logical relationships or rules acquired from the source domain and apply them in the target domain.

By transferring the knowledge of these relationships, these approaches aim to enhance the understanding and inference capabilities in the target domain. For instance, if the relationships between various elements of speech are learned from a male voice in the source domain, this knowledge can be beneficial in analyzing sentences in a different voice in the target domain.

Relational-based transfer learning techniques emphasize understanding and utilizing the underlying connections and patterns between elements or entities in the source and target domains. By leveraging this relational knowledge, it becomes possible to improve the performance and generalization of models in various tasks and domains.

Heterogeneous transfer learning

Transfer learning typically focuses on extracting meaningful features from a pre-trained network to apply them to a related task using new samples. However, it often overlooks that the feature spaces between the source and target domains can differ significantly. Collecting labeled data in the source domain that matches the feature space of the target domain can be challenging. To address this limitation, heterogeneous transfer learning methods have been developed.

Heterogeneous transfer learning aims to tackle the issue of differing feature spaces between the source and target domains and other challenges, such as variations in data distributions and label spaces. This approach is particularly useful in cross-domain tasks like cross-language text categorization and text-to-image classification, where the feature spaces of the source and target domains may not align. Considering the heterogeneity between domains, these methods enable effective knowledge transfer and improve performance in cross-domain scenarios.

How to train a CNN for image classification using transfer learning?

Training a convolutional neural network (ConvNet) from scratch is rare in practice, because datasets large enough to do so are seldom available. Instead, a common approach is to pre-train a ConvNet on a massive dataset like ImageNet, which contains millions of images across numerous categories. The pre-trained ConvNet can then serve either as an initialization for the new task or as a fixed feature extractor.

We will train a model to classify ants and bees using a dataset of around 120 images for each class. Our goal is to develop an accurate classification system for these fascinating creatures. We are using Python for this. Let us see how this is done:

Step-1: Import libraries

We will import the necessary libraries and set up the environment for training a deep-learning model using PyTorch. Here is the code for this:

    from __future__ import print_function, division

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torch.optim import lr_scheduler
    import torch.backends.cudnn as cudnn
    import numpy as np
    import torchvision
    from torchvision import datasets, models, transforms
    import matplotlib.pyplot as plt
    import time
    import os
    import copy

    cudnn.benchmark = True
    plt.ion()   # interactive mode

Step-2: Load the data

To load the data, we will utilize torchvision and torch.utils.data packages.

Our task is to train a model that can accurately classify images of ants and bees. We have a limited dataset of approximately 120 training images for each class and 75 validation images per class. Given the small size of this dataset, training a model from scratch might result in poor generalization. However, we can expect the model to generalize reasonably well by employing transfer learning techniques.

It is important to note that this dataset is a small subset of the larger ImageNet dataset.

    # Data augmentation and normalization for training
    # Just normalization for validation
    data_transforms = {
        'train': transforms.Compose([
            transforms.RandomResizedCrop(224),
            transforms.RandomHorizontalFlip(),
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
        ]),
        'val': transforms.Compose([
            transforms.Resize(256),
            transforms.CenterCrop(224),
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
        ]),
    }

    data_dir = 'data/hymenoptera_data'
    image_datasets = {x: datasets.ImageFolder(os.path.join(data_dir, x),
                                              data_transforms[x])
                      for x in ['train', 'val']}
    dataloaders = {x: torch.utils.data.DataLoader(image_datasets[x], batch_size=4,
                                                  shuffle=True, num_workers=4)
                   for x in ['train', 'val']}
    dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'val']}
    class_names = image_datasets['train'].classes

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

Step-3: Visualize a few images

To visualize some training images and better understand the data augmentations, you can use the following code:

    def imshow(inp, title=None):
        """Display image for Tensor."""
        inp = inp.numpy().transpose((1, 2, 0))
        mean = np.array([0.485, 0.456, 0.406])
        std = np.array([0.229, 0.224, 0.225])
        inp = std * inp + mean   # undo the normalization applied when loading
        inp = np.clip(inp, 0, 1)
        plt.imshow(inp)
        if title is not None:
            plt.title(title)
        plt.pause(0.001)  # pause a bit so that plots are updated


    # Get a batch of training data
    inputs, classes = next(iter(dataloaders['train']))

    # Make a grid from batch
    out = torchvision.utils.make_grid(inputs)

    imshow(out, title=[class_names[x] for x in classes])

This code will show a grid of training images with their respective class names. It helps provide a visual representation of the data and the applied data augmentations, allowing you to gain insights into the dataset’s characteristics.

Step-4: Training the model

Now, we will create a general function for training a model. This function will demonstrate two important aspects:

- Scheduling the learning rate.

- Saving the best model.

In the code below, the scheduler parameter represents an LR scheduler object from torch.optim.lr_scheduler. This function will be used to train our model efficiently.

def train_model(model, criterion, optimizer, scheduler, num_epochs=25):
    since = time.time()

    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0

    for epoch in range(num_epochs):
        print(f'Epoch {epoch}/{num_epochs - 1}')
        print('-' * 10)

        # Each epoch has a training and validation phase
        for phase in ['train', 'val']:
            if phase == 'train':
                model.train()  # Set model to training mode
            else:
                model.eval()   # Set model to evaluate mode

            running_loss = 0.0
            running_corrects = 0

            # Iterate over data.
            for inputs, labels in dataloaders[phase]:
                inputs = inputs.to(device)
                labels = labels.to(device)

                # zero the parameter gradients
                optimizer.zero_grad()

                # forward
                # track history if only in train
                with torch.set_grad_enabled(phase == 'train'):
                    outputs = model(inputs)
                    _, preds = torch.max(outputs, 1)
                    loss = criterion(outputs, labels)

                    # backward + optimize only if in training phase
                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                # statistics
                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == labels.data)

            if phase == 'train':
                scheduler.step()

            epoch_loss = running_loss / dataset_sizes[phase]
            epoch_acc = running_corrects.double() / dataset_sizes[phase]

            print(f'{phase} Loss: {epoch_loss:.4f} Acc: {epoch_acc:.4f}')

            # deep copy the model
            if phase == 'val' and epoch_acc > best_acc:
                best_acc = epoch_acc
                best_model_wts = copy.deepcopy(model.state_dict())

        print()

    time_elapsed = time.time() - since
    print(f'Training complete in {time_elapsed // 60:.0f}m {time_elapsed % 60:.0f}s')
    print(f'Best val Acc: {best_acc:.4f}')

    # load best model weights
    model.load_state_dict(best_model_wts)
    return model
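The copy.deepcopy call in the training function matters because state_dict() returns tensors that share memory with the live parameters, so a plain reference would keep changing as training continues. The sketch below shows the difference, using a tiny nn.Linear as a stand-in for the real network and an in-place addition as a stand-in for an optimizer update.

```python
import copy

import torch
import torch.nn as nn

# Tiny stand-in model; the article's code trains a ResNet-18.
model = nn.Linear(2, 1)
original = model.weight.detach().clone()

alias = model.state_dict()                    # shares storage with the parameters
snapshot = copy.deepcopy(model.state_dict())  # independent copy of the values

# Simulate an optimizer update by changing the weights in place.
with torch.no_grad():
    model.weight.add_(1.0)

alias_follows_update = torch.equal(alias["weight"], model.weight.detach())
snapshot_kept_old = torch.equal(snapshot["weight"], original)

# Loading the snapshot brings back the earlier weights.
model.load_state_dict(snapshot)
restored = torch.equal(model.weight.detach(), original)

print(alias_follows_update, snapshot_kept_old, restored)
```

Without the deep copy, the "best" weights would silently track whatever the model looked like at the end of training rather than at its best validation epoch.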

Step-5: Visualizing the model predictions

We will now define a generic function to display predictions for a few images. This function will help us visualize the model’s predictions on input images.

def visualize_model(model, num_images=6):
    was_training = model.training
    model.eval()
    images_so_far = 0
    fig = plt.figure()

    with torch.no_grad():
        for i, (inputs, labels) in enumerate(dataloaders['val']):
            inputs = inputs.to(device)
            labels = labels.to(device)

            outputs = model(inputs)
            _, preds = torch.max(outputs, 1)

            for j in range(inputs.size()[0]):
                images_so_far += 1
                ax = plt.subplot(num_images//2, 2, images_so_far)
                ax.axis('off')
                ax.set_title(f'predicted: {class_names[preds[j]]}')
                imshow(inputs.cpu().data[j])

                if images_so_far == num_images:
                    model.train(mode=was_training)
                    return
        model.train(mode=was_training)

Step-6: Finetuning the ConvNet

To load a pre-trained model and reset the final fully connected layer, you can use the following code:

model_ft = models.resnet18(weights='IMAGENET1K_V1')
num_ftrs = model_ft.fc.in_features
# Here the size of each output sample is set to 2.
# Alternatively, it can be generalized to ``nn.Linear(num_ftrs, len(class_names))``.
model_ft.fc = nn.Linear(num_ftrs, 2)

model_ft = model_ft.to(device)

criterion = nn.CrossEntropyLoss()

# Observe that all parameters are being optimized
optimizer_ft = optim.SGD(model_ft.parameters(), lr=0.001, momentum=0.9)

# Decay LR by a factor of 0.1 every 7 epochs
exp_lr_scheduler = lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)
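The StepLR settings above amount to a simple staircase schedule. Here is a plain-Python sketch of the rule, assuming the scheduler is stepped once per epoch as in the training function. The helper name step_lr is hypothetical and is not a PyTorch function.

```python
def step_lr(base_lr: float, gamma: float, step_size: int, epoch: int) -> float:
    """Learning rate in effect during `epoch` under a step-decay schedule."""
    return base_lr * gamma ** (epoch // step_size)


# Values taken from the fine-tuning setup: lr=0.001, step_size=7, gamma=0.1.
schedule = [step_lr(0.001, 0.1, 7, epoch) for epoch in range(25)]

for epoch in (0, 7, 14, 21):
    print(f"epoch {epoch:2d}: lr = {schedule[epoch]:.0e}")
```

Under these settings the rate drops tenfold at epochs 7, 14, and 21, so the last few of the 25 epochs make only very small updates.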


Step-7: Train and evaluate

The training process will take approximately 15-25 minutes on a CPU. However, using a GPU significantly reduces the training time to less than a minute.

model_ft = train_model(model_ft, criterion, optimizer_ft, exp_lr_scheduler,
                       num_epochs=25)
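The loss that train_model prints for each epoch is averaged over samples, not over batches, which is why the loop multiplies each batch loss by inputs.size(0). The plain-Python sketch below uses made-up batch values (illustrative numbers, not results from this dataset) and assumes the criterion returns the mean loss per batch, which is the default for nn.CrossEntropyLoss. It shows why the distinction matters when the last batch is smaller than the rest.

```python
# Hypothetical (mean_batch_loss, batch_size) pairs; the last batch is short.
batches = [(0.50, 4), (0.30, 4), (0.90, 1)]

# What train_model does: weight each batch's mean loss by its size ...
running_loss = sum(loss * size for loss, size in batches)
dataset_size = sum(size for _, size in batches)
# ... then divide by the total number of samples.
epoch_loss = running_loss / dataset_size

# Naive alternative: average the batch means, which overweights the short batch.
naive_loss = sum(loss for loss, _ in batches) / len(batches)

print(f"per-sample average: {epoch_loss:.4f}")
print(f"per-batch average:  {naive_loss:.4f}")
```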

Step-8: ConvNet as a fixed feature extractor

In this step, we will freeze all the network layers except the final one. To achieve this, we will set requires_grad = False for the parameters of the frozen layers. This ensures that the gradients are not computed during the backward pass, effectively freezing the parameters.

model_conv = torchvision.models.resnet18(weights='IMAGENET1K_V1')
for param in model_conv.parameters():
    param.requires_grad = False

# Parameters of newly constructed modules have requires_grad=True by default
num_ftrs = model_conv.fc.in_features
model_conv.fc = nn.Linear(num_ftrs, 2)

model_conv = model_conv.to(device)

criterion = nn.CrossEntropyLoss()

# Observe that only parameters of final layer are being optimized as
# opposed to before.
optimizer_conv = optim.SGD(model_conv.fc.parameters(), lr=0.001, momentum=0.9)

# Decay LR by a factor of 0.1 every 7 epochs
exp_lr_scheduler = lr_scheduler.StepLR(optimizer_conv, step_size=7, gamma=0.1)
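To see what freezing does in practice, it can help to count which parameters still require gradients. The following is a minimal sketch that uses a small stand-in network in place of ResNet-18 so that nothing has to be downloaded. The layer sizes and the helper count_trainable are hypothetical.

```python
import torch.nn as nn


def count_trainable(module: nn.Module) -> int:
    """Number of scalar parameters that will receive gradients."""
    return sum(p.numel() for p in module.parameters() if p.requires_grad)


# Stand-in "backbone + head"; the article's code uses ResNet-18 and its fc layer.
model = nn.Sequential(
    nn.Linear(8, 16),   # plays the role of the pre-trained layers
    nn.ReLU(),
    nn.Linear(16, 4),   # plays the role of the original classifier head
)

# Freeze everything, as in the loop over model_conv.parameters().
for param in model.parameters():
    param.requires_grad = False

# Replace the head; parameters of new modules have requires_grad=True by default.
model[2] = nn.Linear(16, 2)

trainable = count_trainable(model)
total = sum(p.numel() for p in model.parameters())
print(f"trainable parameters: {trainable} of {total}")
```

Only the new head's weights and biases remain trainable, which mirrors why optimizer_conv is given model_conv.fc.parameters() rather than all of the model's parameters.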

Step-9: Train and evaluate

This process is expected to take about half the time on the CPU compared to the previous scenario. This is because the gradients do not need to be computed for most of the network, which reduces the computational load. However, the forward pass must still be computed to generate the predictions.

model_conv = train_model(model_conv, criterion, optimizer_conv,
                         exp_lr_scheduler, num_epochs=25)

Benefits of transfer learning

Transfer learning holds immense value in the realm of machine learning and artificial intelligence, offering the following benefits:

Enhanced initial model

Transfer learning provides a superior starting point by leveraging prior knowledge and insights, eliminating the need to start from scratch, as is common with other learning methods.

Accelerated learning

Because the model has already been trained on a related task, it adapts to the new task in fewer training steps. Note that this refers to how quickly the model learns, not to the learning-rate hyperparameter.

Improved post-training accuracy

Because it starts from a robust foundation and learns faster, a transfer-learned model often reaches higher accuracy than the same model trained from scratch, particularly when the target dataset is small.

Reduced training time

Employing pre-trained models reduces the time required to reach good performance, making transfer learning more efficient than training a model from scratch.

Resource efficiency

Transfer learning requires fewer computational resources and less data, making it a cost-effective and efficient solution, especially in scenarios with limited resources.

Enhanced generalization

Models trained with transfer learning often exhibit better generalization to unseen data due to the diverse and extensive data encountered during initial training.

Mitigation of overfitting

In scenarios with limited labeled data, transfer learning helps in mitigating overfitting by leveraging the knowledge acquired from abundant data in the source task.

Broader application scope

Transfer learning enables the application of models across different domains and tasks, expanding the possibilities and scope of machine learning applications.

Applications of transfer learning

The key applications of transfer learning are as follows:

Natural Language Processing (NLP)

Natural language processing focuses on developing systems that can understand and analyze human language in audio or text form. Its applications include voice assistants, speech recognition software, language translation, and more, all aimed at improving human-machine interaction.

Transfer learning plays a crucial role in enhancing NLP models. It allows models to be trained simultaneously on various language elements, dialects, phrases, or vocabularies. This enables the models to adapt to different languages by leveraging pre-trained models’ knowledge of linguistic structures. For instance, models trained in English can be retrained and adapted for similar languages or specific tasks.

One example of transfer learning in NLP is Google Neural Machine Translation (GNMT), which facilitates cross-lingual translation. The model uses a pivot or common language as an intermediary to translate between two distinct languages. For instance, to translate from Russian to Korean, the model first translates Russian to English and then English to Korean. By learning the translation mechanism from data, the model improves its ability to translate between language pairs accurately.

Computer vision

Computer vision is a field that focuses on enabling systems to understand and interpret visual data, such as images and videos. Machine learning algorithms are trained on large datasets of images to improve their ability to recognize and classify objects within images. In this context, transfer learning plays a crucial role by leveraging pre-trained models and reusable components of computer vision algorithms.

Transfer learning allows using models trained on large datasets and applying them to smaller image sets. For example, it can be used to identify the sharp edges of objects in a given collection of images. Additionally, specific layers in the model responsible for detecting edges can be identified and further trained, adapting the model to specific requirements. This enables more efficient and effective training of computer vision models, even with limited data.

Neural networks

Neural networks play a fundamental role in deep learning, mimicking the functions of the human brain. However, training these networks can be computationally demanding due to the models’ complexity. Transfer learning offers a solution to mitigate this resource requirement and improve efficiency in the training process.

Transfer learning involves transferring valuable features learned from one network to another, allowing for fine-tuning and accelerating the model development process. By applying knowledge gained from one task to another, transfer learning enables the efficient use of neural networks across different tasks, enhancing the models’ overall learning capability and performance.

Endnote

Transfer learning has streamlined and enhanced machine learning capabilities by leveraging pre-existing knowledge and models, making model processing faster and more efficient. This has reduced capital investment and time spent on building models from scratch. Consequently, businesses across various industries are increasingly adopting transfer learning to improve performance, save time, and reduce costs.

Looking ahead, it will be interesting to see how businesses embrace transfer learning in machine learning to maintain competitiveness in the market. With its potential to drive innovation and efficiency, transfer learning holds promise for further advancements in various domains and industries.
