What is deep learning and how does it empower enterprises?

In today’s data-driven world, enterprises face numerous challenges in extracting insights from data for informed decision making. Traditional approaches often fall short when handling the complexity and scale of data modern businesses generate. This is where deep learning, a subset of artificial intelligence, steps in.

Enterprises that rely only on traditional methods face several challenges. First, managing large volumes of diverse data is difficult, which makes it hard to extract the insights needed for decision-making and growth. Second, manual tasks and manual analysis consume time and resources, slowing decisions and eroding competitive advantage. Third, traditional algorithms often struggle to capture complex, non-linear relationships in data such as images, audio, and text, which can lead to inaccurate predictions, missed opportunities, and potential financial losses.

Fortunately, deep learning helps address these challenges. Its algorithms can analyze massive datasets, uncover intricate patterns, and surface insights hidden in the data. This helps enterprises make data-driven decisions with greater precision and speed. Deep learning also enables automation, which frees up resources for core competencies. Furthermore, deep-learning-based predictive models can provide businesses with accurate forecasts, enabling proactive decision-making and a competitive edge in the market.

In this article, we will delve deeper into the workings of deep learning, exploring its practical applications across industries. We will highlight the transformative nature of deep learning within enterprises while discussing the challenges and benefits of implementing deep learning methods within enterprise systems, among other vital aspects.

- The rise of deep learning

- What is deep learning, and how does it work?

- Deep learning models

- Deep learning vs. machine learning

- Enterprise applications of deep learning

- Benefits of deep learning for enterprises

- Key challenges and factors to consider when adopting deep learning

- Industries that have benefited significantly from the adoption of deep learning-powered solutions

- How to create and train deep learning models?

- Future trends and developments in deep learning

The rise of deep learning

Several key milestones mark the rise of deep learning. It all began in 1943 when Walter Pitts and Warren McCulloch created computer models inspired by the human brain’s neural networks. These early models used algorithms to mimic the way the brain processes information.

Despite challenges and setbacks, deep learning continued to evolve. The 1970s saw the first AI downturn, often called the first “AI winter”. This was a period of reduced funding and interest that affected both AI and deep learning research. Despite this setback, Kunihiko Fukushima designed the Neocognitron, widely regarded as the first convolutional neural network, which allowed computers to learn and recognize visual patterns. The concepts it introduced remain influential.

In the 1980s and 1990s, Yann LeCun demonstrated a practical application of backpropagation by training convolutional neural networks to recognize handwritten digits. However, this period also saw the second AI downturn, which slowed neural network and deep learning research. Nevertheless, the support vector machine (a non-neural machine learning method) and long short-term memory (LSTM) for recurrent neural networks were developed in 1995 and 1997, respectively.

In 2001, a META Group report highlighted the challenges and opportunities presented by data’s growing volume and diversity. The advent of Big Data was anticipated, setting the stage for deep learning’s future. The year 2009 marked a significant milestone when Fei-Fei Li launched ImageNet, a large database of labeled images that fueled the training of neural networks. This underscored the importance of data in driving machine learning advancements.

By 2011, faster GPUs made it possible to train convolutional neural networks efficiently without layer-by-layer pre-training. In 2012, AlexNet, a convolutional neural network, won the ImageNet Large Scale Visual Recognition Challenge by a wide margin.

In 2012, Google Brain’s “The Cat Experiment” showed how neural networks can discover patterns in unlabeled data through unsupervised learning. This highlighted the ongoing pursuit of unsupervised learning in deep learning research.

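In 2014, Ian Goodfellow introduced the Generative Adversarial Network (GAN), which pits two neural networks against each other. A generator produces synthetic samples, and a discriminator tries to tell them apart from real data. As training progresses, the generator learns to produce increasingly realistic imitations, such as photos, stimulating advances in image generation and manipulation.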

The rise of deep learning has been fueled by these pivotal moments, encompassing advancements in backpropagation, convolutional neural networks, unsupervised learning, and GANs. With the increasing computational power, deep learning architectures became more efficient and capable of handling complex tasks. Today, deep learning continues to advance rapidly, driven by ongoing research and the exploration of innovative techniques.

What is deep learning, and how does it work?

Deep Learning (DL), a subfield of Machine Learning (ML), specializes in training Artificial Neural Networks (ANNs) to learn from data and make predictions or decisions without explicit, task-specific programming instructions. It is inspired by the structure and function of the human brain’s neural networks. Traditional programming requires hand-written rules for every task. Deep learning algorithms instead learn from large amounts of data and adjust their internal parameters to make accurate predictions or classifications.

Deep learning relies on interconnected layers of artificial neurons, also known as nodes or units. These nodes are organized into input, hidden, and output layers. Each node receives input signals, performs a mathematical computation on them, and then passes the transformed output to the next layer of nodes.
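To make this concrete, here is a minimal sketch of two layers of nodes in Python with numpy. The article does not prescribe a library, so numpy is an assumption. Each node computes a weighted sum of its inputs plus a bias and applies an activation function, and the hidden layer's output is then passed to the output node. The weights, the biases, and the choice of ReLU and sigmoid activations are illustrative values, not taken from any trained model.

```python
import numpy as np

def relu(z):
    # Rectified linear unit: keeps positive values, replaces negatives with 0
    return np.maximum(0.0, z)

def sigmoid(z):
    # Squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def dense_forward(x, W, b, activation):
    # Every node computes a weighted sum of its inputs plus a bias,
    # then applies a non-linear activation function.
    return activation(W @ x + b)

# Illustrative (untrained) parameters
x = np.array([0.5, -1.0, 2.0])             # input layer: 3 features
W1 = np.array([[0.2, -0.4, 0.1],           # hidden layer: 2 nodes, one row per node
               [0.7, 0.3, -0.5]])
b1 = np.array([0.1, -0.2])
W2 = np.array([[1.5, -0.5]])               # output layer: 1 node
b2 = np.array([-0.3])

hidden = dense_forward(x, W1, b1, relu)          # transformed output passed on
output = dense_forward(hidden, W2, b2, sigmoid)  # final prediction in (0, 1)
print("hidden:", hidden)
print("output:", output)
```

The second hidden node's weighted sum is negative, so ReLU outputs 0 for it. That zero is how the non-linearity lets a node "switch off" for some inputs.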

During training, deep learning models iteratively adjust the weights and biases of each node to minimize a loss function, which measures the difference between the expected output and the model’s predicted output. Optimization algorithms such as stochastic gradient descent use the gradient of this loss to decide how to update each parameter, gradually improving the model’s predictions.
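The sketch below shows stochastic gradient descent on the simplest possible model: a single linear neuron with one weight and one bias, trained with a squared-error loss. The toy data (generated from y = 2x + 1), the learning rate, and the number of epochs are assumptions chosen for illustration. Real deep learning frameworks compute the equivalent gradients automatically for every layer.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (assumption): targets follow y = 2x + 1 exactly
X = rng.uniform(-1.0, 1.0, size=100)
Y = 2.0 * X + 1.0

w, b = 0.0, 0.0   # parameters start at zero
lr = 0.1          # learning rate (step size)

def mse(w, b):
    # Mean squared difference between predictions and expected outputs
    return np.mean((w * X + b - Y) ** 2)

initial_loss = mse(w, b)
for epoch in range(20):
    for i in rng.permutation(len(X)):   # visit samples in random order
        error = (w * X[i] + b) - Y[i]   # prediction minus target
        # Gradient of 0.5 * error**2 is error * x for w and error for b
        w -= lr * error * X[i]
        b -= lr * error
final_loss = mse(w, b)
print(f"loss {initial_loss:.4f} -> {final_loss:.2e}, w={w:.3f}, b={b:.3f}")
```

Each update moves the parameters a small step against the gradient of the error on a single sample, which is what makes the method "stochastic".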

Deep learning models excel at automatically learning hierarchical representations of data. Each layer in the network extracts increasingly complex and abstract features from the input data. The initial layers learn low-level features, such as edges or corners in an image, while the subsequent layers learn higher-level features, such as shapes or objects. This hierarchical representation enables deep learning models to capture intricate relationships and dependencies within the data.

Deep learning models typically need large datasets for training, and supervised models in particular need large labeled datasets. Once trained, however, these models can generalize to unseen data and perform tasks with high accuracy. Deep learning has produced exceptional results in diverse areas, such as speech recognition, natural language processing (NLP), computer vision, and recommendation systems.

The power of deep learning stems from its ability to automatically learn and extract relevant features from raw data, removing the need for manual feature engineering. This makes it particularly well-suited for handling unstructured data, such as images, audio, and text. Additionally, advancements in computational power, the availability of large datasets, and the development of specialized hardware, such as GPUs, have contributed to the rapid progress and widespread adoption of deep learning in recent years.

Deep learning models

Deep learning models encompass a range of architectures and algorithms designed to tackle specific tasks and domains. Some of the commonly used deep learning models include:

Classic Neural Networks or Multilayer Perceptrons (MLPs)

Classic Neural Networks, also known as Multilayer Perceptrons (MLPs), form the foundation of deep learning and are widely used for various tasks. MLPs consist of multiple layers of interconnected nodes or neurons, with each node performing a weighted sum of inputs followed by an activation function. The layers include an input layer, one or more hidden layers, and an output layer. MLPs are primarily used for supervised learning, where they can be trained to map input data to desired outputs. Training involves adjusting the weights and biases of the neurons through backpropagation, which computes the error gradient with respect to the network parameters. The activation function introduces non-linearity, enabling MLPs to model complex relationships in the data.
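Below is a minimal numpy sketch of an MLP trained with backpropagation. It assumes one hidden layer of 8 tanh units, a sigmoid output, a binary cross-entropy loss, and full-batch gradient descent. The task is XOR, a classic example that a single-layer (linear) model cannot solve. The layer size, learning rate, and step count are illustrative choices, not recommendations.

```python
import numpy as np

rng = np.random.default_rng(42)

# XOR: not linearly separable, so a non-linear hidden layer is required
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Input (2) -> hidden (8 tanh units) -> output (1 sigmoid unit)
W1 = rng.normal(0.0, 1.0, size=(2, 8))
b1 = np.zeros(8)
W2 = rng.normal(0.0, 1.0, size=(8, 1))
b2 = np.zeros(1)
lr = 0.5

def forward(X):
    h = np.tanh(X @ W1 + b1)   # hidden activations (non-linear)
    p = sigmoid(h @ W2 + b2)   # predicted probability
    return h, p

def bce(p):
    # Binary cross-entropy averaged over samples
    eps = 1e-12
    return -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))

initial_loss = bce(forward(X)[1])

for step in range(5000):
    h, p = forward(X)
    # Backpropagation: apply the chain rule from the output layer backward
    dz2 = (p - y) / len(X)      # dLoss/dz at the output (sigmoid + cross-entropy)
    dW2 = h.T @ dz2
    db2 = dz2.sum(axis=0)
    dh = dz2 @ W2.T             # error gradient sent back to the hidden layer
    dz1 = dh * (1 - h ** 2)     # derivative of tanh
    dW1 = X.T @ dz1
    db1 = dz1.sum(axis=0)
    # Gradient descent update of weights and biases
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

h, p = forward(X)
final_loss = bce(p)
print(f"loss {initial_loss:.3f} -> {final_loss:.3f}")
print("predictions:", (p > 0.5).astype(int).ravel())
```

In practice, frameworks such as Keras perform the backward pass automatically. Writing it out by hand shows how the chain rule moves the error gradient from the output layer back to the hidden layer.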

Although MLPs lack the spatial and temporal modeling capabilities of specialized architectures like CNNs and RNNs, they are versatile and can be applied to various tasks, including classification, regression, and pattern recognition. MLPs have been successfully used in various domains, such as finance, healthcare, and natural language processing, and continue to serve as a fundamental building block in the field of deep learning.

Get customized ML solutions for your business!

With proficiency in deep learning and other ML concepts, LeewayHertz builds powerful ML solutions that are perfectly aligned with your business’s unique needs.

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are a type of deep learning model specifically designed for processing and analyzing visual data, such as images and videos. CNNs have redefined computer vision tasks by effectively capturing and recognizing complex patterns and features within images. The most important part of CNNs is the convolutional layer, which applies filters or kernels to input data, allowing the network to learn spatial hierarchies of features automatically. The subsequent pooling layers reduce the spatial dimensions while preserving the most salient information.
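The two core operations can be sketched in a few lines of numpy. The convolution below is a "valid" sliding-window operation followed by 2x2 max pooling. Technically it is cross-correlation, which is what deep learning libraries implement under the name convolution. The 6x6 image and the hand-made vertical-edge kernel are assumptions for illustration; in a real CNN, the kernel values are learned during training.

```python
import numpy as np

def conv2d(image, kernel):
    # Slide the kernel over the image ("valid" positions only) and take a
    # weighted sum of the covered pixels at each position.
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool(fmap, size=2):
    # Non-overlapping max pooling: keep the largest value in each size x size window
    h, w = fmap.shape[0] // size, fmap.shape[1] // size
    trimmed = fmap[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

# 6x6 toy image: dark everywhere except the two rightmost columns (a vertical edge)
image = np.zeros((6, 6))
image[:, 4:] = 1.0

# Hand-made vertical-edge filter; a CNN would learn such values itself
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

fmap = conv2d(image, kernel)   # 4x4 feature map
pooled = max_pool(fmap)        # 2x2 after pooling
print(fmap)
print(pooled)
```

The feature map responds only where the dark-to-bright edge falls inside the kernel window. Pooling then halves each spatial dimension while keeping those strongest responses.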

CNNs also include fully connected layers that perform classification or regression tasks based on the extracted features. Due to their hierarchical and local connectivity, CNNs excel at tasks such as image classification, object detection, semantic segmentation, and image generation. Their ability to automatically learn and extract relevant visual features from raw data has propelled advancements in areas such as medical imaging, autonomous driving, and visual recognition systems.

Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are a class of neural networks that excel at processing sequential data, such as time series, text, and speech. Unlike feedforward networks, RNNs have recurrent connections, allowing information to persist and be propagated through time. RNNs can capture temporal dependencies and learn from past information to make predictions or generate sequences.
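A minimal numpy sketch of a vanilla RNN cell follows. It assumes a tanh activation and randomly initialized, untrained weights. At each time step, the new hidden state combines the current input with the previous hidden state through the recurrent weight matrix W_hh, which is how information persists across the sequence.

```python
import numpy as np

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4

# Illustrative, untrained parameters
W_xh = rng.normal(0.0, 0.5, size=(hidden_size, input_size))   # input -> hidden
W_hh = rng.normal(0.0, 0.5, size=(hidden_size, hidden_size))  # hidden -> hidden (recurrent)
b_h = np.zeros(hidden_size)

def rnn_forward(sequence):
    # Process the sequence one time step at a time, carrying the hidden state forward
    h = np.zeros(hidden_size)
    states = []
    for x_t in sequence:
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)   # shape: (time_steps, hidden_size)

sequence = rng.normal(size=(5, input_size))   # 5 time steps, 3 features each
states = rnn_forward(sequence)

# Perturb only the first time step: the final hidden state changes as well,
# showing that early inputs influence later states through the recurrence.
perturbed = sequence.copy()
perturbed[0] += 1.0
final_shift = np.abs(rnn_forward(perturbed)[-1] - states[-1]).max()
print(states.shape, final_shift)
```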

RNNs have a memory-like component that allows them to retain and update information at each time step. However, traditional RNNs suffer from the vanishing gradient problem, limiting their ability to capture long-term dependencies. Variations like Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) were introduced to address this. These models have specialized memory cells and gating mechanisms that enhance the network’s ability to remember and forget information selectively. RNNs have proven successful in various applications, including machine translation, speech recognition, sentiment analysis, and handwriting generation. Their ability to model sequential data and capture context has made them invaluable in understanding and generating complex patterns in time-based and sequential datasets.
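The vanishing gradient problem can be illustrated with a scalar RNN, h_t = tanh(w * h_{t-1} + x). By the chain rule, the gradient of the final state with respect to the initial state is a product with one local factor, w * (1 - h_t^2), per time step. The sketch below assumes w = 0.9 and a constant input x = 0.5, both arbitrary. Because each factor is well below 1 here, the product shrinks exponentially as the number of steps grows.

```python
import numpy as np

def gradient_through_time(w, steps, x=0.5):
    # Scalar RNN: h_t = tanh(w * h_{t-1} + x), starting from h_0 = 0.
    # Returns d h_T / d h_0 as the product of the per-step derivatives.
    h, grad = 0.0, 1.0
    for _ in range(steps):
        h = np.tanh(w * h + x)
        grad *= w * (1.0 - h ** 2)   # local derivative d h_t / d h_{t-1}
    return grad

for steps in (1, 10, 50):
    print(f"{steps:>2} steps: gradient = {gradient_through_time(0.9, steps):.3e}")
```

A gradient this small carries almost no learning signal from distant time steps. The gates in LSTM and GRU cells are designed to keep this product from collapsing.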

Deep learning vs. machine learning

Deep learning is a subset of machine learning, and both fall within the broader domain of artificial intelligence. However, deep learning differs from traditional machine learning in several respects. Here is a comparison between the two:

| Aspect | Deep learning | Traditional machine learning |
|---|---|---|
| Representation of data | Learns hierarchical representations from raw data | Typically requires manual feature engineering |
| Model complexity | Deep neural networks with many layers | Simpler models (e.g., linear models, decision trees) with few or no layers |
| Training data size | Requires large amounts of training data | Can perform well with smaller datasets |
| Performance | State-of-the-art in complex tasks such as vision, speech, and language | Effective for traditional learning tasks, particularly on structured data |
| Interpretability | Less interpretable due to complex architectures | More interpretable; often provides insights into feature importance |
| Computational requirements | Computationally intensive; may require specialized hardware such as GPUs | Can usually be trained on standard CPUs |


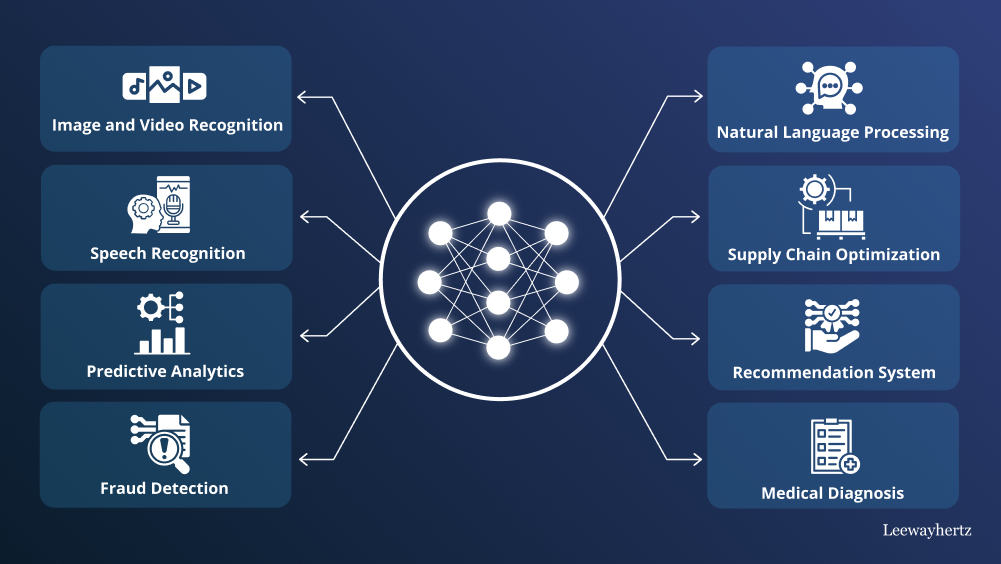

Enterprise applications of deep learning

The adoption of deep learning unlocks a wealth of possibilities for enterprises across various domains. Some of its key applications are:

- Image and video recognition: Deep learning models like CNNs excel at image and video analysis tasks. They are used for object detection, image classification, facial recognition, and scene understanding. This technology has numerous applications, including autonomous vehicles, surveillance systems, manufacturing quality control, and social media content filtering.

- Natural language processing and text analytics: Deep learning models are also widely used for natural language processing and text analytics. They enable a wide range of applications, such as sentiment analysis, language translation, chatbots, text summarization, and question-answering systems. NLP-powered applications are widely used in customer support, content moderation, market research, and content generation.

- Speech recognition and voice assistants: Deep learning has significantly advanced speech recognition technology, making voice assistants like Siri, Alexa, and Google Assistant possible. These systems can accurately transcribe speech, perform voice commands, and generate human-like responses. In various industries, voice assistants find applications in smart home automation, customer service, voice-controlled devices, and hands-free operation.

- Fraud detection and cybersecurity: Deep learning plays an important role in detecting fraud and strengthening cybersecurity. Deep learning models can analyze large volumes of data in real time, identifying patterns, anomalies, and potential threats. They are used for credit card fraud detection, network intrusion detection, malware detection, and spam filtering, among other tasks. When they are regularly retrained on new data, these models can adapt to emerging threats.

- Predictive analytics and recommendation systems: Deep learning enables enterprises to build predictive models to forecast future trends, behaviors, and outcomes. Deep learning models can analyze vast amounts of data, identifying patterns and making accurate predictions. Recommendation systems are widely used in e-commerce, media streaming, and personalized marketing. They use deep learning algorithms to suggest relevant products, movies, music, and content to users based on their preferences and behavior.

- Medical diagnosis: Deep learning has made significant strides in medical imaging analysis, enabling more accurate and faster diagnoses. It aids in detecting diseases like cancer, identifying abnormalities in radiology scans, and interpreting medical images, ultimately improving patient care and outcomes.

- Supply chain optimization: Deep learning can optimize supply chain operations by forecasting demand, optimizing inventory levels, and improving logistics. It can analyze historical data, market trends, and external factors to enhance decision-making and streamline operations.

Benefits of deep learning for enterprises

Deep learning offers a range of benefits for enterprises across various industries. Here are some key advantages of deep learning and how they can benefit businesses:

Improved decision-making: Deep learning algorithms allow enterprises to make more informed, data-driven decisions. By analyzing extensive datasets, deep learning models can uncover patterns and trends that human analysts might miss. Real-time analytics capabilities give enterprises access to up-to-date information. Forecasting and predictive analytics help them anticipate changes and adjust strategies accordingly. Deep learning also supports risk assessment and mitigation, process optimization, and resource allocation. By leveraging deep learning, enterprises gain valuable insights for strategic planning, market analysis, and overall business growth.

Enhanced customer experience and personalization: Deep learning technologies provide enterprises with powerful tools to enhance customer experience and deliver personalized interactions. Deep learning models can gain insights into individual preferences, behaviors, and needs by analyzing vast amounts of customer data. This enables enterprises to offer tailored recommendations, personalized marketing campaigns, and customized product offerings. Deep learning-based chatbots and virtual assistants can understand natural language and provide real-time support, improving response times and customer satisfaction. By leveraging deep learning for customer experience, enterprises can foster customer loyalty, increase retention rates, and gain a competitive edge in the market.

Automation of repetitive tasks: Deep learning enables enterprises to automate a wide range of tasks, increasing efficiency and productivity. DL algorithms can be trained to perform tasks such as data entry, data analysis, image recognition, and natural language processing. Automating these tasks enables enterprises to liberate valuable human resources, allowing them to concentrate on more intricate and strategic activities. This reduces the risk of errors and inconsistencies and accelerates the overall workflow. Deep learning-based automation can be applied across various sectors, including customer service, manufacturing, finance, and logistics, enabling enterprises to streamline operations, reduce costs, and optimize resource utilization.

Increased operational efficiency: Deep learning can significantly enhance operational efficiency within enterprises by optimizing processes and streamlining workflows. Deep learning algorithms can analyze complex datasets, identify patterns, and make accurate predictions, allowing enterprises to make data-driven decisions and allocate resources more effectively. For example, in supply chain management, deep learning can optimize inventory levels, improve demand forecasting, and streamline logistics. In manufacturing, it can enhance quality control and predictive maintenance. By leveraging deep learning for operational efficiency, enterprises can reduce costs, minimize waste, improve productivity, and ultimately gain a competitive advantage in their industries.

Key challenges and factors to consider when adopting deep learning

While deep learning possesses significant potential, certain challenges and considerations must be taken into account for its successful adoption. Here are a few examples:

Requirement for quality data: Deep learning models typically require large amounts of labeled data for effective training. They perform best when plenty of high-quality data is available, and their performance generally improves as the amount of data grows. Conversely, a deep learning system can fail badly when it is trained on poor-quality data. In some domains, such as certain industrial applications, sufficient data may simply not exist, which limits the adoption of deep learning there.

Bias problem of AI: The quality of an artificial intelligence system depends heavily on the data it is trained on, in terms of both volume and quality. In practice, however, much of the data organizations collect lacks quality and relevance. It is often biased, reflecting the characteristics and interests of only a narrow demographic (for example, along lines of gender or religion). As a result, models trained on it can produce skewed or unfair results.

Computational resources: Deep learning models are computationally demanding and require significant computational resources, especially for training large-scale models. Organizations need to invest in powerful hardware, such as GPUs or TPUs, or consider utilizing cloud-based infrastructure to support deep learning workloads.

Model interpretability: Deep learning models, especially with complex architectures, can lack interpretability, making it challenging to understand why certain predictions are made. Interpretable deep learning methods are an active area of research, but striking a balance between model complexity and interpretability is still a challenge.

Overfitting and generalization: Deep learning models are prone to overfitting, where they memorize training data instead of learning generalizable patterns. Techniques like regularization, data augmentation, and early stopping can help mitigate overfitting. Ensuring models generalize well to unseen data is a crucial consideration.
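Early stopping, one of the techniques mentioned above, can be sketched as a simple rule over per-epoch validation losses. Training stops once the loss has not improved for a set number of epochs (the patience), and the weights from the best epoch are kept. The function below and the loss values are hypothetical illustrations. Keras provides a comparable built-in callback, keras.callbacks.EarlyStopping, with monitor, patience, and restore_best_weights arguments.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training halts once the validation loss has failed to improve by more
    than min_delta for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = 0
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, wait = loss, epoch, 0   # new best: reset counter
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1   # never triggered: ran all epochs

# Hypothetical validation losses: they improve, then rise as the model overfits
val_losses = [0.70, 0.52, 0.45, 0.41, 0.42, 0.44, 0.47, 0.51]
best, stopped = early_stopping_epoch(val_losses, patience=3)
print(f"best epoch: {best}, stopped at epoch: {stopped}")
```

The training loss usually keeps falling after the best epoch. The rising validation loss is the signal that the model has started memorizing the training data.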

Ethical and legal implications: Deep learning applications may raise ethical and legal concerns, such as privacy issues, algorithmic bias, and fairness. It is important to address these considerations and ensure models are fair, transparent, and comply with relevant regulations, especially in sensitive domains like healthcare or finance.

Continuous learning and model updates: Deep learning models may need to be updated periodically to adapt to changing data patterns or improve performance. Implementing mechanisms for continuous learning, model monitoring, and updates is crucial to ensure the longevity and effectiveness of deep learning models.

Explainability and trust: Deep learning models can be seen as “black boxes” due to their complexity, which can undermine trust in their predictions. Ensuring explainability and transparency and providing insights into model decision-making are important considerations, particularly in critical applications like healthcare or finance.

Integration with existing systems: Integrating deep learning models into existing systems or workflows can be a challenge. Compatibility with existing software infrastructure, data pipelines, or deployment environments must be carefully addressed to ensure seamless integration and scalability.

Industries that have benefited significantly from the adoption of deep learning-powered solutions

Deep learning has transformed enterprises across diverse industries. Through advanced algorithms and neural networks, it has enabled organizations to achieve remarkable outcomes and unlock new opportunities. Deep learning-based solutions have proven highly beneficial in the following industries:

Healthcare: Deep learning has been used for medical imaging analysis, disease diagnosis, and prognosis prediction. It has shown promise in radiology, pathology, and drug discovery. Deep learning models can analyze medical images like X-rays, CT scans, and MRIs to detect abnormalities and assist in diagnosing diseases.

Finance: Deep learning has been employed in fraud detection, credit scoring, algorithmic trading, and risk assessment. It can identify patterns and anomalies in financial data, enabling early detection of fraudulent activities and improving the accuracy of credit assessments.

Retail: Deep learning is used in retail for applications such as demand forecasting, inventory management, customer segmentation, and personalized marketing. Deep learning models can provide personalized product recommendations and optimize supply chain operations by analyzing customer behavior and purchase history.

Manufacturing: In the manufacturing industry, deep learning has found applications in predictive maintenance, supply chain optimization and quality control. It can analyze sensor data, detect product defects, predict equipment failures, and optimize production processes.

Transportation: Deep learning is utilized in autonomous vehicles for tasks like object detection, lane recognition, decision-making and the like. It enables self-driving cars to perceive their surroundings and make real-time decisions based on visual and sensor data.

Energy: Deep learning is employed in the energy industry for load forecasting, optimization, and predictive maintenance. It can analyze historical energy consumption data to predict future demand, optimize energy distribution, and identify potential equipment failures.

Agriculture: Deep learning is used in precision agriculture for crop monitoring, yield prediction, and disease detection. By analyzing satellite imagery and sensor data, deep learning models can provide valuable insights to farmers, helping them optimize irrigation, fertilizer usage, and pest control.

These are just a few examples; deep learning can be applied in various other industries, including telecommunications, insurance, and logistics.

How to create and train deep learning models?

Here, we will develop a deep learning model to predict the probability that an employee will leave the company (employee attrition). We will use a dataset from Kaggle containing various indicators of employee satisfaction within a company. To construct the model, we will use the Keras Sequential model, which lets us stack and configure the model’s layers.

Prerequisites

You will need the following to proceed:

- An Anaconda development environment on your machine.

- A Jupyter Notebook installation. Anaconda will install Jupyter Notebook for you during its installation.

- Familiarity with machine learning.

Step 1 – Gather and preprocess the data

In this step, you will load your dataset using pandas, a data manipulation Python library. Prior to initiating the data pre-processing phase, it is essential to activate your working environment and verify that all the required packages are properly installed on your machine. Using conda simplifies the installation of Keras and TensorFlow, ensuring compatibility and handling dependencies.

Activate the environment you created on your machine:

```bash
$ conda activate my_env
```

To install Keras and TensorFlow, run the following command:

```bash
(my_env) $ conda install tensorflow keras
```

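To begin, open Jupyter Notebook by entering the following command in your terminal:

```bash
(my_env) $ jupyter notebook
```

If Jupyter is running on a remote server, connect to it from your local machine with SSH port forwarding. You can then open the notebook in your local browser at localhost:8888:

```bash
$ ssh -L 8888:localhost:8888 your_username@your_server_ip
```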

Once you have accessed Jupyter Notebook, open the anaconda3 folder. Then, at the top of the screen, select “New” and choose “Python 3” to create a new notebook. Next, import the modules your project needs and load the dataset in a notebook cell. Use pandas for data manipulation and numpy for working with numerical arrays. You will also need to convert any string (categorical) columns to numerical values, because the neural network can only process numbers.

Insert the below-mentioned code into your notebook cell and run it:

```python
import pandas as pd
import numpy as np

df = pd.read_csv("https://raw.githubusercontent.com/mwitiderrick/kerasDO/master/HR_comma_sep.csv")
```

This cell imports numpy and pandas to support your data analysis tasks. It then uses pandas to load the dataset into a DataFrame named df.

To gain insights into the dataset, you can utilize the head() function. This function allows you to view the first few records of your data frame. By adding the following code to a notebook cell and executing it, you will be able to observe a snapshot of your dataset.

```python
df.head()
```

Next, you will convert the categorical columns to numerical values using dummy variables. This involves representing the categories as ones and zeros, indicating their presence or absence. To prevent the “dummy variable trap,” you will drop the first dummy variable.

Note: The dummy variable trap occurs when dummy variables are perfectly correlated (multicollinear). Every row belongs to exactly one category, so the full set of N dummy columns always sums to 1, and any one column can be predicted exactly from the others. This redundancy can cause problems for some models, particularly linear ones. To avoid it, drop one dummy variable so that a column with N categories is represented by N-1 dummy variables. It does not matter which dummy variable is dropped, as long as you keep N-1. For example, if an on/off switch is represented by two dummy variables, you can drop one column, because the absence of the “on” state implies the “off” state.

Insert this below-mentioned code in the next notebook cell and execute it:

```python
feats = ['department', 'salary']
df_final = pd.get_dummies(df, columns=feats, drop_first=True)
```

The line feats = ['department', 'salary'] defines the two columns for which you want to create dummy variables. The call pd.get_dummies(df, columns=feats, drop_first=True) converts these categorical columns into the numerical dummy variables your employee retention model needs. It drops the first category of each column to avoid the dummy variable trap. The dataset is now loaded, and the department and salary columns are in a format suitable for a Keras deep learning model. Next, the dataset will be split into training and testing sets.
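To see what drop_first=True does, here is a sketch on a small hypothetical DataFrame that mimics the department and salary columns. The values are made up, not taken from the Kaggle dataset. pandas sorts each column's categories and drops the first one, which becomes the baseline. A row with 0 in every remaining department_ column therefore belongs to the dropped department.

```python
import pandas as pd

# Hypothetical toy frame with the same categorical columns as the HR dataset
toy = pd.DataFrame({
    "satisfaction_level": [0.38, 0.80, 0.11, 0.72],
    "department": ["sales", "technical", "support", "sales"],
    "salary": ["low", "medium", "high", "low"],
})

# Categories are sorted, so 'sales' and 'high' are the first ones and get dropped
encoded = pd.get_dummies(toy, columns=["department", "salary"], drop_first=True)
print(encoded.columns.tolist())
print(encoded)
```

Each categorical column with N categories becomes N-1 dummy columns. The numeric satisfaction_level column passes through unchanged.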


Step 2 – Separating the training and testing datasets

To split your dataset into training and testing sets, you will use the train_test_split function from the scikit-learn package. The model is trained on one portion of the employee data and evaluated on the remaining data, which is a common practice when building deep learning models. The split ensures that the model never sees the testing data during training, so the evaluation reflects how well it generalizes to new data.

Import the train_test_split function from scikit-learn by adding the following code to the next notebook cell and running it:

```python
from sklearn.model_selection import train_test_split
```

The left column in your dataset is the target variable. It indicates whether an employee has left the company, which is what the model will predict. The model must therefore not receive this column as an input feature. Run the following code in a notebook cell to separate the features (X) from the target (y):

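```python
# Features: every column except the target 'left'
X = df_final.drop(['left'], axis=1).values
# Target: whether the employee left the company (1) or stayed (0)
y = df_final['left'].values
```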

Your deep learning model expects its inputs as arrays. The pandas .values attribute converts the DataFrame and Series into numpy arrays.

Now, split the dataset into training and testing sets, using 70% of the data for training and 30% for testing. The larger portion goes to training so that the model has enough data to learn from.

To split the data in these proportions, add the following code to the next notebook cell and run it:

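```python
# Hold out 30% of the rows for testing; random_state makes the split reproducible
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)
```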

The data has been successfully converted to the format accepted by Keras, namely numpy arrays, and has been split into separate training and testing sets. Before proceeding, data transformation is performed, which will be covered in the next step.

Step 3 – Data transformation

Scaling the features is common practice when building deep learning models, because features on very different scales can slow down or destabilize gradient-based training. In this step, the data is scaled with scikit-learn's StandardScaler, which transforms each feature to have a mean of 0 and a standard deviation of 1. Note that this standardization only shifts and rescales each feature; it does not make the data normally distributed.

To scale both the training set and the test set, add the provided code to a notebook cell and run it:

from sklearn.preprocessing import StandardScaler
sc = StandardScaler()
X_train = sc.fit_transform(X_train)
X_test = sc.transform(X_test)

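First, StandardScaler is imported and an instance of it is created. The fit_transform method computes the mean and standard deviation of each feature on the training set and scales the training set with them. The transform method then scales the test set using those same training statistics, so no information from the test set leaks into training.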
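The key point of this step is that the scaler's statistics come from the training set only. The sketch below, on hypothetical synthetic features with very different scales, checks that StandardScaler's output matches the hand-written formula z = (x - mean_train) / std_train, where std is the population standard deviation (NumPy's default ddof=0).

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
# Hypothetical features on very different scales (e.g. monthly hours vs. a 0-1 score)
X_train = np.column_stack([rng.normal(200, 50, 1000), rng.uniform(0, 1, 1000)])
X_test = np.column_stack([rng.normal(200, 50, 300), rng.uniform(0, 1, 300)])

sc = StandardScaler()
X_train_s = sc.fit_transform(X_train)  # learns mean_ and scale_ from training data only
X_test_s = sc.transform(X_test)        # reuses the training statistics

# The same scaling computed by hand with the training mean and std
manual = (X_test - X_train.mean(axis=0)) / X_train.std(axis=0)

print(np.allclose(X_train_s.mean(axis=0), 0))  # True
print(np.allclose(X_train_s.std(axis=0), 1))   # True
print(np.allclose(X_test_s, manual))           # True
```

The scaled test set will usually not have a mean of exactly 0, which is expected: it is scaled with statistics it did not contribute to.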

All dataset features are now on a comparable scale. The next step is to build the artificial neural network.

Step 4 – Building the artificial neural network

Keras is used to construct the deep learning model; by default, it uses TensorFlow as its backend. From Keras, the Sequential class is imported to initialize the artificial neural network, and the Dense class is imported to add fully connected layers to the model.

When constructing a deep learning model, it is essential to define three types of layers:

- The input layer serves as the entry point for passing the dataset features. It performs no computations but transfers the features to the hidden layers.

- The hidden layers sit between the input and output layers, and a network can have several of them. These layers perform computations and pass the results on to the output layer.

- The output layer represents the final layer of the neural network, which produces the desired results once the model is trained. It is responsible for generating the output variables.

Run the following code in your notebook cell to import the necessary Keras, Sequential, and Dense modules:

import keras
from keras.models import Sequential
from keras.layers import Dense

The Sequential class initializes a linear stack of layers. The model is stored in a variable named classifier because the task is classification: predicting which of a fixed set of categories each example belongs to (here, whether an employee leaves or stays), learned from labeled examples.

To create the classifier variable, insert the provided code snippet into your notebook.

classifier = Sequential()

By employing Sequential, you have successfully initialized the classifier for your network. At this point, you can proceed to add layers to your network.

Run the provided code snippet in the next cell:

classifier.add(Dense(9, kernel_initializer = "uniform",activation = "relu", input_dim=18))

The layers are added using the .add() function on the classifier, with specific parameters:

- The first parameter is the number of nodes (units) in this layer, here 9. It sets how many neurons the hidden layer has and therefore how many connections it forms with the previous layer.

- The second parameter, kernel_initializer, sets how the layer's weights are initialized before training. The "uniform" initializer draws small random values from a uniform distribution, so the weights start close to zero but not exactly zero.

- The third parameter is the activation function, which lets the model learn complex, non-linear patterns. Activation functions can be linear or non-linear; relu (rectified linear unit) is a common default for hidden layers and is chosen here.

- The last parameter, input_dim, specifies the number of features in the dataset.

Moving forward, the output layer, which provides predictions, will be added:

classifier.add(Dense(1, kernel_initializer = "uniform",activation = "sigmoid"))

The output layer is configured with the following parameters:

- The number of output nodes is set to one, as you are expecting a single output indicating whether an employee will leave the company.

- The activation function is set to sigmoid, which maps the output to a value between 0 and 1 that can be read as the probability of an employee leaving. The kernel_initializer is again "uniform". If there were more than two categories, the softmax activation function, which generalizes sigmoid to multiple classes, would be used.
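To make the two Dense layers concrete, the NumPy sketch below computes what an 18-9-1 network of this shape does on a forward pass: relu on the hidden layer, sigmoid on the output. It is an illustration, not Keras's implementation; the random weights assume the Keras 2 default range of [-0.05, 0.05] for the "uniform" initializer, biases start at zero (the Dense default), and the five input rows are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical weights for an 18 -> 9 -> 1 network
W1 = rng.uniform(-0.05, 0.05, size=(18, 9))
b1 = np.zeros(9)
W2 = rng.uniform(-0.05, 0.05, size=(9, 1))
b2 = np.zeros(1)

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(X):
    hidden = relu(X @ W1 + b1)        # like Dense(9, activation="relu", input_dim=18)
    return sigmoid(hidden @ W2 + b2)  # like Dense(1, activation="sigmoid")

X = rng.normal(size=(5, 18))  # five hypothetical, already-scaled employees
probs = forward(X)
print(probs.shape)  # (5, 1)

# Trainable parameters: weights plus biases of both layers (171 + 10)
n_params = W1.size + b1.size + W2.size + b2.size
print(n_params)  # 181

# With two classes, softmax over the logits [z, 0] equals sigmoid(z)
z = 0.8
softmax = np.exp([z, 0.0]) / np.exp([z, 0.0]).sum()
print(np.isclose(softmax[0], sigmoid(z)))  # True
```

The last check shows why sigmoid suffices for a yes/no target: it is the two-class case of softmax.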

Next, gradient descent is applied to the neural network. This optimization strategy minimizes the error during training: starting from the initially assigned weights, it repeatedly computes the gradient (slope) of the cost function, a measure of the network's error, with respect to each weight, and then moves the weights a small step in the opposite direction. Repeating these steps drives the cost toward a minimum, typically a local one. Among the various optimization strategies, this tutorial uses the popular adam optimizer, an adaptive variant of gradient descent.
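The update rule described above can be sketched in a few lines of NumPy. This is plain batch gradient descent on a single sigmoid unit with binary cross-entropy loss (the same loss compiled below), run on hypothetical toy data; Adam adds per-weight adaptive step sizes and momentum on top of this basic idea, which the sketch does not implement.

```python
import numpy as np

def binary_crossentropy(y, p, eps=1e-12):
    # Average log loss; clipping avoids log(0)
    p = np.clip(p, eps, 1 - eps)
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))

# Hypothetical toy data: 200 samples, 3 features, labels from a known rule
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = (X @ np.array([1.5, -2.0, 0.5]) > 0).astype(float)

w = np.zeros(3)  # initial weights
b = 0.0          # initial bias
lr = 0.5         # learning rate (step size)
losses = []

for step in range(100):
    p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # sigmoid output
    losses.append(binary_crossentropy(y, p))
    # Gradient of the mean log loss with respect to w and b
    grad_w = X.T @ (p - y) / len(y)
    grad_b = np.mean(p - y)
    # Step downhill: move against the gradient
    w -= lr * grad_w
    b -= lr * grad_b

print(f"loss at start: {losses[0]:.4f}")  # 0.6931 (ln 2, since every p starts at 0.5)
print(f"loss after 100 steps: {losses[-1]:.4f}")
```

Because this loss is convex and the step size is small enough, each step lowers the loss; in a deep network the cost surface is not convex, which is why gradient descent generally finds a local rather than a global minimum.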

Insert the provided code snippet into your notebook cell and run it:

classifier.compile(optimizer= "adam",loss = "binary_crossentropy",metrics = ["accuracy"])

To apply gradient descent, you utilize the compile function with the following parameters:

- The optimizer specifies the gradient descent algorithm.

- The loss function is chosen as binary_crossentropy, suitable for binary classification problems.

- The metrics parameter sets the evaluation metric reported during training, in this case accuracy.

With the classifier configured, you can proceed to fit it to your dataset. This is accomplished using the .fit() method provided by Keras. Insert the provided code snippet into a notebook cell and execute it to fit the model to your dataset.

classifier.fit(X_train, y_train, batch_size = 10, epochs = 1)

The .fit() method is used with the following parameters:

- The first parameter is the training set containing the features.

- The second parameter is the target column for making predictions.

Additionally, batch_size sets the number of samples processed before each weight update, while epochs sets the number of complete passes through the training set.
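To make batch_size and epochs concrete: the number of weight updates per epoch is the training-set size divided by the batch size, rounded up, because the last batch may be smaller. The sketch assumes a training set of 10,499 rows, the sum of the 5,249- and 5,250-row folds in the grid-search log later in this tutorial; the helper name steps_per_epoch is hypothetical.

```python
import math

def steps_per_epoch(n_samples: int, batch_size: int) -> int:
    # One update per batch; a final partial batch still counts as a step
    return math.ceil(n_samples / batch_size)

n_train = 10499  # assumed training-set size (5249 + 5250)
epochs = 1

updates = steps_per_epoch(n_train, 10) * epochs
print(updates)  # 1050 weight updates with batch_size=10 and epochs=1
```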

Having created, compiled, and fitted the deep learning model to the dataset, you can now proceed to make predictions using the unseen portion of the data. In the next step, predictions will be generated using the trained deep learning model.

Step 5 – Generating predictions on the test set

To start the prediction process, the trained model is applied to the test set. Keras provides the .predict() method for generating predictions.

Insert the provided code in the subsequent notebook cell to start the prediction procedure:

y_pred = classifier.predict(X_test)

Because the classifier has already been trained on the training set, this code uses what it learned to generate predictions for the test set. The output is the predicted probability of each employee leaving; a probability above 50% is treated as a prediction that the employee will leave the company.

Insert the following line of code in your notebook cell to set the threshold:

y_pred = (y_pred > 0.5)

Having generated predictions using the predict method and established the threshold for identifying potential employee attrition, the next step is to assess the model’s performance using a confusion matrix.

Step 6 – Evaluating the confusion matrix

To evaluate the accuracy of the predictions, a confusion matrix is employed to analyze the number of correct and incorrect predictions. The confusion matrix, also referred to as an error matrix, presents the counts of true positives (tp), false positives (fp), true negatives (tn), and false negatives (fn) for a classifier.

- True positives: positive cases correctly predicted as positive. Sensitivity (recall) is the share of actual positives that the model finds, tp / (tp + fn).

- True negatives: negative cases correctly predicted as negative.

- False positives: negative cases incorrectly predicted as positive.

- False negatives: positive cases incorrectly predicted as negative.

To utilize the confusion matrix functionality provided by scikit-learn, include the following code in your subsequent notebook cell:

from sklearn.metrics import confusion_matrix
cm = confusion_matrix(y_test, y_pred)
cm

The confusion matrix shows that the model made 3305 + 375 = 3680 correct predictions and 106 + 714 = 820 incorrect predictions. With 4500 observations in the test set, the accuracy is (3305 + 375) / 4500, or about 81.8%. This is a reasonable result for a first model, but accuracy alone can be misleading here: of the 1089 employees who actually left (714 + 375), the model identified only 375.

Output

array([[3305,  106],
       [ 714,  375]])
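The headline numbers can be recomputed from the matrix above. For binary labels, scikit-learn's confusion_matrix puts actual classes in rows and predicted classes in columns, so the layout is [[tn, fp], [fn, tp]] and ravel() returns tn, fp, fn, tp. The sketch derives accuracy, precision, recall, and specificity from the tutorial's own counts.

```python
import numpy as np

# Counts reported by the tutorial's confusion matrix
cm = np.array([[3305, 106],
               [714, 375]])
tn, fp, fn, tp = cm.ravel()

accuracy = (tp + tn) / cm.sum()  # share of all predictions that are correct
precision = tp / (tp + fp)       # of predicted leavers, how many actually left
recall = tp / (tp + fn)          # of actual leavers, how many were caught
specificity = tn / (tn + fp)     # of actual stayers, how many were caught

print(f"accuracy={accuracy:.4f}")        # accuracy=0.8178
print(f"precision={precision:.4f}")      # precision=0.7796
print(f"recall={recall:.4f}")            # recall=0.3444
print(f"specificity={specificity:.4f}")  # specificity=0.9689
```

The low recall is the main weakness: the model is good at confirming who stays but misses most of the employees who leave.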

After evaluating your model with the confusion matrix, the next step is to make a single prediction using the developed model.

Step 7 – Generating a single prediction

To make a single prediction, you provide the details of one employee and predict the probability that they will leave the company. The employee's features must be in the same format as the training data: a two-dimensional NumPy array with one row, scaled with the same StandardScaler (sc) that was fitted on the training set. You can then pass this array to the predict method.

Use the following code in a cell to pass the employee’s features and make the prediction:

import numpy as np
new_pred = classifier.predict(sc.transform(np.array([[0.26, 0.7, 3., 238., 6., 0., 0., 0., 0., 0., 0., 0., 0., 0., 1., 0., 0., 1.]])))

The provided features correspond to a single employee and include attributes such as satisfaction level, last evaluation, number of projects, and more.

To establish a threshold of 50%, use the following code:

new_pred = (new_pred > 0.5)
new_pred

This threshold signifies that an employee is predicted to leave the company if the probability is above 50%.

The output shows that the model predicts this employee will not leave the company:

Output array([[False]])

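You can also adjust the threshold, raising or lowering it. Because new_pred now holds True/False values rather than probabilities, recompute the probability before applying a different threshold, for example 60%:

new_pred = (classifier.predict(sc.transform(np.array([[0.26, 0.7, 3., 238., 6., 0., 0., 0., 0., 0., 0., 0., 0., 0., 1., 0., 0., 1.]]))) > 0.6)
new_pred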

The prediction still indicates that the employee will not leave the company:

Output array([[False]])
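Thresholds apply to probabilities, not to the True/False values that a previous threshold produced. A small NumPy sketch with hypothetical probabilities for three employees makes the difference visible:

```python
import numpy as np

# Hypothetical predicted probabilities for three employees
probs = np.array([[0.20], [0.55], [0.70]])

at_50 = probs > 0.5  # [[False], [ True], [ True]]
at_60 = probs > 0.6  # [[False], [False], [ True]]

# Pitfall: thresholding the boolean result again does not apply the new
# cut-off, because True compares as 1 and False as 0
rethresholded = at_50 > 0.6  # still [[False], [ True], [ True]]

print(at_60.ravel().tolist())          # [False, False, True]
print(rethresholded.ravel().tolist())  # [False, True, True]
```

The employee with a 0.55 probability is flagged at 50% but not at 60%; only re-thresholding the probabilities shows that change.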

In this step, you made an individual prediction based on the features of a single employee. In the next step, you will obtain a more reliable estimate of your model's accuracy.

Step 8 – Enhancing the model accuracy

A single train/test split can give an accuracy estimate that varies considerably depending on which rows happen to land in the test set. To reduce this variance, you can use K-fold cross-validation, with K commonly set to 10. The training data is divided into 10 folds; the model is trained on 9 folds and evaluated on the remaining one, and this is repeated until each fold has served once as the evaluation fold. Each iteration yields its own accuracy, and the average of these accuracies is the overall estimate of the model's accuracy.
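The fold mechanics can be sketched with scikit-learn's KFold and cross_val_score. To keep the sketch fast and independent of Keras, it uses LogisticRegression on synthetic data from make_classification as a stand-in estimator; both are assumptions for illustration, not the tutorial's model or data.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score

# Hypothetical data with the same number of features as the tutorial (18)
X, y = make_classification(n_samples=500, n_features=18, random_state=0)

kf = KFold(n_splits=10, shuffle=True, random_state=0)

# Every row lands in exactly one evaluation fold
test_counts = np.zeros(len(X), dtype=int)
for train_idx, test_idx in kf.split(X):
    test_counts[test_idx] += 1
print(test_counts.min(), test_counts.max())  # 1 1

# Stand-in estimator trained on 9 folds and scored on the 10th, ten times
scores = cross_val_score(LogisticRegression(max_iter=1000), X, y, cv=kf)
print(len(scores))                  # 10
print(scores.mean(), scores.var())  # average accuracy and its spread across folds
```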

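The KerasClassifier wrapper lets you implement K-fold cross-validation with Keras by making a Keras model usable with scikit-learn's cross-validation functions. To begin, import the cross_val_score function and KerasClassifier. Note that keras.wrappers.scikit_learn has been removed from recent Keras versions; on newer installations, the separate scikeras package provides an equivalent KerasClassifier.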

Insert and execute the following code in your notebook cell:

from keras.wrappers.scikit_learn import KerasClassifier
from sklearn.model_selection import cross_val_score

To generate the function that you will pass to the KerasClassifier, add this code to the next cell:

def make_classifier():
    classifier = Sequential()
    classifier.add(Dense(9, kernel_initializer="uniform", activation="relu", input_dim=18))
    classifier.add(Dense(1, kernel_initializer="uniform", activation="sigmoid"))
    classifier.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return classifier

This function, which will be passed to KerasClassifier as one of its arguments, wraps the same neural network design used earlier. It takes no parameters: it initializes the classifier with Sequential(), uses Dense to add the hidden layer (which also defines the 18-feature input) and the output layer, and then compiles and returns the classifier.

To pass the function that is built to the KerasClassifier, add this line of code to your notebook:

classifier = KerasClassifier(build_fn=make_classifier, batch_size=10, epochs=1)

The KerasClassifier is utilized with three arguments:

- build_fn: the function containing the neural network design

- batch_size: the number of samples processed in each iteration

- epochs: the total number of epochs for training

Then, cross-validation is applied using scikit-learn's cross_val_score function. Add the following code to your notebook cell and run it:

accuracies = cross_val_score(estimator = classifier,X = X_train,y = y_train,cv = 10,n_jobs = -1)

With 10 folds, this function returns ten accuracies, which are stored in the variable accuracies and used later to calculate the mean accuracy. The function takes the following arguments:

- estimator: the classifier that you defined earlier

- X: the features of the training set

- y: the target values in the training set

- cv: the number of folds

- n_jobs: the number of CPUs to utilize (-1 indicates using all available CPUs)

Now that you have performed cross-validation, you can compute the mean and variance of the accuracies. To accomplish this, insert the following code into a notebook cell:

mean = accuracies.mean()
mean

In the output, you will observe that the mean accuracy is 83%.

Output 0.8343617910685696

To calculate the variance of the accuracies, insert the following code into the next cell of your notebook:

variance = accuracies.var()
variance

The variance of about 0.0011 is very low, which indicates that the model's accuracy is consistent across the ten folds rather than depending heavily on one particular split.

Output 0.0010935021002275425

By implementing K-fold cross-validation, you now have a more reliable estimate of your model's accuracy. In the next step, you will address the issue of overfitting.

Step 9 – Applying dropout regularization to address over-fitting issues

To address overfitting, you can apply dropout regularization to your model. Dropout helps prevent the model from memorizing the training set by randomly deactivating a fraction of the neurons during each training iteration. Here a rate of 0.1 is specified, meaning that 10% of the neurons in the preceding layer are deactivated at random during training. The overall network design otherwise remains unchanged.

To incorporate the Dropout layer into your model, add the following code in the next cell:

from keras.layers import Dropout
classifier = Sequential()
classifier.add(Dense(9, kernel_initializer="uniform", activation="relu", input_dim=18))
classifier.add(Dropout(rate=0.1))
classifier.add(Dense(1, kernel_initializer="uniform", activation="sigmoid"))
classifier.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

A Dropout layer with a rate of 0.1 helps mitigate overfitting by deactivating 10% of the hidden layer's neurons at random during each training iteration. After the Dropout and output layers are added, the classifier is compiled as before.
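Keras's Dropout layer uses inverted dropout: during training, the inputs that survive are scaled up by 1 / (1 - rate) so that the expected sum is unchanged, and at inference time the layer passes its inputs through untouched. The NumPy function below is a hypothetical re-implementation of that idea for illustration, not Keras's own code.

```python
import numpy as np

def dropout(x, rate, training, rng):
    """Inverted dropout: zero a fraction `rate` of units and rescale the rest."""
    if not training or rate == 0.0:
        return x  # at inference time the layer changes nothing
    keep = rng.random(x.shape) >= rate             # True for units that survive
    return np.where(keep, x / (1.0 - rate), 0.0)   # rescale survivors

rng = np.random.default_rng(0)
activations = np.ones(100_000)  # hypothetical hidden-layer outputs

out = dropout(activations, rate=0.1, training=True, rng=rng)
print((out == 0).mean())  # roughly 0.1 of the units are dropped
print(out.mean())         # roughly 1.0, the same as the input mean

unchanged = dropout(activations, rate=0.1, training=False, rng=rng)
print(np.array_equal(unchanged, activations))  # True
```

Because a different random subset is dropped at each step, no single neuron can rely on specific others being present, which discourages memorizing the training set.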

In this step, a Dropout layer was added to help combat overfitting. Next, the focus shifts to further improving the model by fine-tuning the parameters used during its creation.

Step 10 – Hyperparameter tuning

Grid search lets you experiment with different combinations of model parameters and select the one that yields the highest accuracy. To support this, the make_classifier function will be modified so that different optimizer functions can be tested. scikit-learn's GridSearchCV class provides the search itself.

To modify the make_classifier function and explore various optimizer functions, add the following code to your notebook:

from sklearn.model_selection import GridSearchCV

def make_classifier(optimizer):
    classifier = Sequential()
    classifier.add(Dense(9, kernel_initializer="uniform", activation="relu", input_dim=18))
    classifier.add(Dense(1, kernel_initializer="uniform", activation="sigmoid"))
    classifier.compile(optimizer=optimizer, loss="binary_crossentropy", metrics=["accuracy"])
    return classifier

With GridSearchCV imported, the make_classifier function now takes an optimizer argument so that different optimizers can be tested. As before, it initializes the classifier, adds the hidden and output layers, compiles the classifier (this time with the given optimizer), and returns it.

As in step 8, add the following line of code to define the classifier:

classifier = KerasClassifier(build_fn = make_classifier)

The classifier is defined with KerasClassifier, which requires the model-building function to be passed via the build_fn parameter; here, that is the make_classifier function created above.

Next, you will set a few parameters that you intend to experiment with. Insert the following code into a cell and execute it:

params = {
    'batch_size': [20, 35],
    'epochs': [2, 3],
    'optimizer': ['adam', 'rmsprop']
}

Different batch sizes, numbers of epochs, and various types of optimizer functions have been added.

This tutorial tries batch sizes of 20 and 35, modest values that suit a dataset of this size; larger batch sizes are worth testing on larger datasets. A low number of epochs gives faster results, but larger numbers can be tested if time is not a constraint. Keras's adam and rmsprop optimizers are both reasonable choices for this neural network.

Now, the defined parameters will be used to search for the best combination using the GridSearchCV function. Execute the following code in the next cell:

grid_search = GridSearchCV(estimator=classifier,
                           param_grid=params,
                           scoring="accuracy",
                           cv=2)

GridSearchCV takes the following parameters:

- estimator: the classifier to use.

- param_grid: the set of parameters to test.

- scoring: the metric used to compare parameter combinations.

- cv: the number of cross-validation folds.
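It helps to count how much training this search involves before running it. scikit-learn's ParameterGrid expands the params dictionary above into every combination; multiplied by the number of folds, that gives the number of training runs.

```python
from sklearn.model_selection import ParameterGrid

params = {
    'batch_size': [20, 35],
    'epochs': [2, 3],
    'optimizer': ['adam', 'rmsprop'],
}

grid = list(ParameterGrid(params))
n_folds = 2

print(len(grid))            # 8 combinations (2 x 2 x 2)
print(len(grid) * n_folds)  # 16 training runs during the search
# With GridSearchCV's default refit=True, one more run retrains the best
# combination on the whole training set, for 17 fits in total.
```

Each extra value added to a parameter list multiplies the total, so grids grow quickly.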

Next, fit grid_search to the training dataset:

grid_search = grid_search.fit(X_train,y_train)

The output will resemble the following:

Output

Epoch 1/2
5249/5249 [==============================] - 1s 228us/step - loss: 0.5958 - acc: 0.7645
Epoch 2/2
5249/5249 [==============================] - 0s 82us/step - loss: 0.3962 - acc: 0.8510
Epoch 1/2
5250/5250 [==============================] - 1s 222us/step - loss: 0.5935 - acc: 0.7596
Epoch 2/2
5250/5250 [==============================] - 0s 85us/step - loss: 0.4080 - acc: 0.8029
Epoch 1/2
5249/5249 [==============================] - 1s 214us/step - loss: 0.5929 - acc: 0.7676
Epoch 2/2
5249/5249 [==============================] - 0s 82us/step - loss: 0.4261 - acc: 0.7864

Add the code below to a notebook cell to retrieve the best parameters and the best score from this search, using the best_params_ and best_score_ attributes:

best_param = grid_search.best_params_
best_accuracy = grid_search.best_score_

To check the best parameters for your model, use the following code:

best_param

The output reveals that the best batch size is 20, the best number of epochs is 2, and the optimal optimizer for your model is adam.

Output {'batch_size': 20, 'epochs': 2, 'optimizer': 'adam'}

You can check the best accuracy using the best_accuracy variable. It holds best_score_, the mean cross-validated accuracy achieved by the best parameter combination during the grid search.

best_accuracy

The output generated will be similar to the following:

Output 0.8533193637489285
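The sketch below confirms what best_score_ means: it equals the mean validation score of the best candidate, averaged over the cross-validation folds, rather than a score on a separate test set. To run quickly without Keras, it uses LogisticRegression with a hypothetical grid over its C parameter on synthetic data; these choices are stand-ins, not the tutorial's model.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV

X, y = make_classification(n_samples=300, n_features=18, random_state=0)

# Stand-in estimator and grid, small enough to run instantly
search = GridSearchCV(
    LogisticRegression(max_iter=1000),
    param_grid={'C': [0.01, 0.1, 1.0]},
    scoring='accuracy',
    cv=2,
)
search.fit(X, y)

# best_score_ is the mean cross-validated score of the best candidate
idx = search.best_index_
print(np.isclose(search.best_score_, search.cv_results_['mean_test_score'][idx]))  # True
print(search.best_params_)
```

For an unbiased final number, the best model can still be evaluated once on the held-out test set.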

GridSearchCV was used to find the optimal parameters for the classifier: a batch size of 20, the adam optimizer, and 2 epochs. The best mean cross-validated accuracy was about 85%. You have now built a model for predicting employee retention that reaches roughly 85% accuracy in cross-validation.

Future trends and developments in deep learning

Deep learning is a rapidly evolving field, and several trends and developments are shaping its future. Here are some key areas of focus and potential advancements in deep learning:

Explainability and interpretability: As deep learning models become more complex, there is a growing need to understand and interpret their decisions. Research is focused on developing techniques and methods to explain the predictions made by deep learning models, enabling users to trust and understand the underlying reasoning.

Transfer learning and few-shot learning: Transfer learning allows pre-trained models to be fine-tuned for new tasks with limited data, improving efficiency and performance. Few-shot learning aims to develop models that learn new concepts or tasks from minimal training examples, mimicking human-like learning abilities.

Reinforcement learning: Deep reinforcement learning, which combines deep neural networks with reinforcement learning's principles from decision-making and control theory, has shown promise in areas such as robotics and game playing. Future advancements may lead to more sophisticated and efficient learning algorithms and applications.

Integration with other fields: Deep learning will continue to intersect with other areas such as robotics, Augmented Reality (AR), Virtual Reality (VR), and the Internet of Things (IoT). Integration with these fields will enable more advanced applications and enhance the capabilities of deep learning models.

Generative models: Generative models, such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), have gained attention for generating realistic and creative outputs, such as images, music, and text. Further research is expected to improve generative models' stability, diversity, and control.

Multi-modal learning: Models are increasingly able to combine multiple modalities, such as text, images, and speech, within a single network. Developers are working to integrate these modalities more tightly so that deep learning models can handle tasks spanning several input types more effectively and efficiently.

Using deep learning in neuroscience: Artificial neural networks were loosely inspired by the networks of neurons in the human brain, and deep learning is now, in turn, being applied as a research tool in neuroscience. This work has contributed to new insights into brain function and may support the discovery of new neurological treatments.

Increased use of Edge Intelligence (EI): Edge intelligence is reshaping data acquisition and analysis by moving processing from centralized cloud servers to devices at the edge of the network. By reducing dependence on the cloud, edge intelligence makes edge devices more autonomous and efficient, enabling more localized and responsive data processing.

Final words

Deep learning has emerged as a transformative technology for enterprises, offering unprecedented opportunities for growth and innovation. The applications of deep learning in businesses are vast and diverse. From improving decision-making to enhancing customer experiences, deep learning models have proven their value in driving positive outcomes for enterprises. With deep learning, enterprises can leverage their data to gain valuable insights, enhance customer satisfaction, and achieve a competitive edge in their respective industries. While adopting deep learning comes with challenges and considerations, successful use cases across various sectors demonstrate its potential. By following a systematic approach to creating and training deep learning models, enterprises can overcome these challenges and unlock the transformative power of this technology. Deep learning continues to evolve rapidly, with advancements in NLP, computer vision, and reinforcement learning. These advancements promise exciting possibilities for enterprise applications and offer new ways for businesses to innovate and differentiate themselves in the market. By embracing deep learning, enterprises can drive growth, improve operational efficiency, and deliver exceptional customer experiences.

Looking to leverage deep learning-based solutions for your business? Contact LeewayHertz’s AI experts for expert guidance and robust AI development.

