How to build a private LLM?

Large language models (LLMs) are machine-learning models capable of processing vast amounts of text data and generating fluent, often highly accurate results. They are built on deep-learning architectures, such as transformers, that learn the patterns in text at the word and subword level. This enables LLMs to better understand the nuances of natural language and the context in which it is used. With their ability to process and generate text at an unprecedented scale, LLMs have become increasingly popular for a wide range of applications, including language translation, chatbots and text classification.

Some of the most powerful large language models currently available include GPT-3, BERT, T5 and RoBERTa. Developed by leading tech companies such as OpenAI, Google and Facebook AI, these models have achieved state-of-the-art results on several natural language understanding tasks, including language translation, question answering and sentiment analysis. For example, GPT-3 has 175 billion parameters and generates highly realistic text, including news articles, creative writing, and even computer code. On the other hand, BERT has been trained on a large corpus of text and has achieved state-of-the-art results on benchmarks like question answering and named entity recognition.

With the growing use of large language models in various fields, there is a rising concern about the privacy and security of data used to train these models. Many pre-trained LLMs available today are trained on public datasets containing sensitive information, such as personal or proprietary data, that could be misused if accessed by unauthorized entities. This has led to a growing inclination towards Private Large Language Models (PLLMs) trained on private datasets specific to a particular organization or industry.

This article delves deeper into large language models, exploring how they work, the different types of models available and their applications in various fields. By the end of this article, you will know how to build a private LLM.

- What are Large Language Models?

- Different types of Large Language Models

- How do Large Language Models work? Understanding the building blocks

- Understanding private LLM

- Why do you need private LLMs?

- How to build a private LLM?

- Industries benefiting from private LLMs

- How can LeewayHertz AI development services help you build a private LLM?

What are Large Language Models (LLMs)?

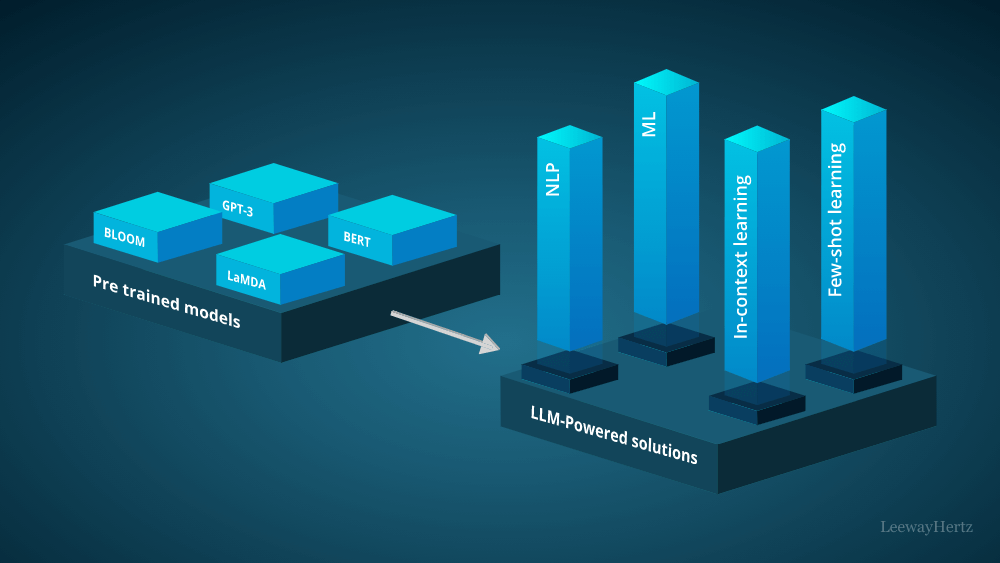

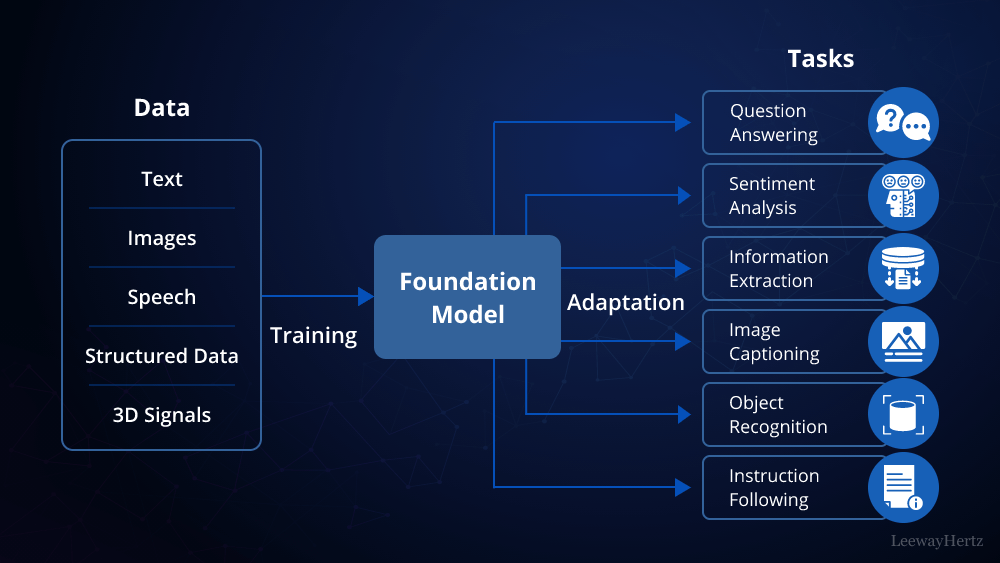

Large Language Models (LLMs) are foundation models that utilize deep learning in natural language processing (NLP) and natural language generation (NLG) tasks. They are designed to learn the complexity and linkages of language by being pre-trained on vast amounts of data. After pre-training, techniques such as fine-tuning, in-context learning, and zero/one/few-shot learning allow these models to be adapted for specific tasks.

At its core, an LLM is a neural network based on the transformer architecture, which was introduced in 2017 by Google researchers in a paper titled “Attention Is All You Need”. The goal of the model is to predict the text that is likely to come next. The size of a model is commonly described by its number of parameters, the learned weights it uses when generating output; more parameters generally mean more capacity, though not necessarily better performance.

The transformer architecture is a key component of LLMs and relies on a mechanism called self-attention, which allows the model to weigh the importance of different words or phrases in a given context. This mechanism enables the model to attend to different parts of an input sequence to compute a representation for each position and has proven to be particularly effective in capturing long-range dependencies and understanding the nuances of natural language.

These are some examples of large language models:

- Turing NLG by Microsoft

- Gopher and Chinchilla by DeepMind

- Switch Transformer, GLaM, PaLM, LaMDA, T5, mT5 by Google

- OPT and Fairseq Dense by Meta

- GPT-3 by OpenAI, and open-source GPT-style models such as GPT-Neo, GPT-J, and GPT-NeoX by EleutherAI

- Ernie 3.0 by Baidu

- Jurassic by AI21 Labs

- Exaone by LG

- Pangu Alpha by Huawei

- RoBERTa, XLM-RoBERTa, and DeBERTa

- DistilBERT

- XLNet

These models have varying levels of complexity and performance and have been used in a variety of natural language processing and natural language generation tasks.

Large language models offer businesses a multitude of advantages, including the automation of tasks, such as sentiment analysis, customer service, content creation, fraud detection, and prediction and classification, resulting in cost savings and enhanced productivity.

Additionally, language models enhance availability, personalization and customer satisfaction by providing 24/7 service through chatbots and virtual assistants and by processing large amounts of data to understand customer behavior and preferences. These models also save time by automating tasks such as data entry, customer service, document creation and analyzing large datasets. Finally, large language models increase accuracy in tasks such as sentiment analysis by analyzing vast amounts of data and learning patterns and relationships, resulting in better predictions and groupings.


Different types of Large Language Models

Large language models are categorized into three main types based on their transformer architecture:

Autoregressive language models

Autoregressive (AR) language modeling is a type of language modeling in which the model predicts the next word in a sequence based on the previous words. These models are trained to estimate the probability of each word given the words that precede it. Because the context is restricted to a single direction, usually left to right, an AR model cannot use the words on both sides of a position at the same time. This makes AR models well suited to generative tasks, where text is produced in the forward direction, but it can limit their performance on tasks that depend on understanding the full context of a sentence.
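To make next-word prediction concrete, here is a minimal sketch that uses a toy bigram counter in place of a neural network. The corpus and the function names (`train_bigram`, `next_token_probs`, `generate`) are made up for illustration; a real LLM conditions on the whole preceding sequence, not just the last word.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_probs(counts, prev):
    """Turn the follow-up counts for `prev` into a probability distribution."""
    total = sum(counts[prev].values())
    return {tok: c / total for tok, c in counts[prev].items()}


def generate(counts, start, max_new_tokens):
    """Greedy autoregressive decoding: each new token becomes the next context."""
    out = [start]
    for _ in range(max_new_tokens):
        probs = next_token_probs(counts, out[-1])
        if not probs:  # no known continuation for this token
            break
        out.append(max(probs, key=probs.get))
    return out


corpus = "the cat sat on the mat and the cat slept".split()
model = train_bigram(corpus)
print(next_token_probs(model, "the"))
print(generate(model, "the", 4))
```

The loop in `generate` is the autoregressive part: the model only ever looks backward, and every prediction is appended to the context used for the next one.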

The most popular example of an autoregressive language model is the Generative Pre-trained Transformer (GPT) series developed by OpenAI, with GPT-4 being the most powerful version at the time of writing.

Autoregressive models are generally used for generating long-form text, such as articles or stories, as they have a strong sense of coherence and can maintain a consistent writing style. However, they can sometimes generate text that is repetitive or lacks diversity.

Autoregressive language models have also been used for language translation tasks. For example, Google’s Neural Machine Translation system uses an autoregressive approach to translate text from one language to another. The system is trained on large amounts of bilingual text data and then uses this training data to predict the most likely translation for a given input sentence.

Autoencoding language models

Autoencoder language modeling is a type of neural network architecture used in natural language processing (NLP) to generate a fixed-size vector representation, or embedding, of input text by reconstructing the original input from a masked or corrupted version of it. This type of modeling is based on the idea that a good representation of the input text can be learned by predicting missing or masked words in the input text using the surrounding context.
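The masking step can be sketched as follows. This is a simplified illustration, not BERT's exact procedure (which also sometimes substitutes random tokens or leaves the chosen token unchanged); the `[MASK]` string, the 15% default and the function name are assumptions made for the example.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Hide a random subset of tokens and remember what was hidden."""
    rng = random.Random(seed)
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(tok)   # the model must reconstruct this token
        else:
            masked.append(tok)
            labels.append(None)  # this position is not scored
    return masked, labels


tokens = "the quick brown fox jumps over the lazy dog".split()
masked, labels = mask_tokens(tokens, mask_prob=0.3, seed=1)
print(masked)
print(labels)
```

During training, the model sees `masked` and is scored only on the positions where `labels` is not `None`, so it has to use context on both sides of each gap.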

Autoencoding models have been proven to be effective in various NLP tasks, such as sentiment analysis, named entity recognition and question answering. One of the most popular autoencoding language models is BERT or Bidirectional Encoder Representations from Transformers, developed by Google. BERT is a pre-trained model that can be fine-tuned for various NLP tasks, making it highly versatile and efficient.

Autoencoding models are commonly used for shorter text inputs, such as search queries or product descriptions. They can accurately generate vector representations of input text, allowing NLP models to better understand the context and meaning of the text. This is particularly useful for tasks that require an understanding of context, such as sentiment analysis, where the sentiment of a sentence can depend heavily on the surrounding words. In summary, autoencoder language modeling is a powerful tool in NLP for generating accurate vector representations of input text and improving the performance of various NLP tasks.

Hybrid models

Hybrid language models combine the strengths of autoregressive and autoencoding models in natural language processing. Autoregressive models generate text based on the input context by predicting the next word in a sequence given the previous words, while autoencoding models learn to generate a fixed-size vector representation of input text by reconstructing the original input from a masked or corrupted version of it.

Hybrid models, like T5 developed by Google, combine the advantages of both approaches: an encoder reads the input using context from both directions, and a decoder generates the output autoregressively. They can also be fine-tuned for specific NLP tasks such as text classification, summarization and translation, which makes them highly adaptable, as a single model can perform multiple tasks with high accuracy.

One of the key benefits of hybrid models is their ability to balance coherence and diversity in the generated text. They can generate coherent and diverse text, making them useful for various applications such as chatbots, virtual assistants, and content generation. Researchers and practitioners also appreciate hybrid models for their flexibility, as they can be fine-tuned for specific tasks, making them a popular choice in the field of NLP.

How do Large Language Models work? Understanding the building blocks

Large language models consist of multiple crucial building blocks that enable them to process and comprehend natural language data. Here are some essential components:

Tokenization

Tokenization is a fundamental process in natural language processing that involves dividing a text sequence into smaller meaningful units known as tokens. These tokens can be words, subwords, or even characters, depending on the requirements of the specific NLP task. Tokenization helps to reduce the complexity of text data, making it easier for machine learning models to process and understand.

The two most commonly used tokenization algorithms in LLMs are byte-pair encoding (BPE) and WordPiece. BPE originated as a data compression algorithm; it iteratively merges the most frequent pair of adjacent bytes or characters in a text corpus, resulting in a set of subword units representing the language’s vocabulary. WordPiece is similar to BPE, but instead of merging the most frequent pair, it chooses the merge that most increases the likelihood of the training data, which can capture the language’s morphology more accurately.
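The BPE merge loop can be sketched in a few lines. This is a toy character-level version with a made-up word-frequency table; production tokenizers add details such as byte-level fallback and end-of-word markers that are omitted here.

```python
from collections import Counter


def get_pair_counts(words):
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(words, pair):
    """Replace every occurrence of `pair` with one merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(word_freqs, num_merges):
    """Repeatedly merge the most frequent adjacent pair."""
    words = {tuple(w): f for w, f in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(words)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties go to the first pair seen
        merges.append(best)
        words = merge_pair(words, best)
    return merges, words


merges, vocab = learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, 3)
print(merges)
print(vocab)
```

On this toy corpus the frequent suffix "est" is assembled within the first two merges, which is how BPE ends up with reusable subword units.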

Tokenization is a crucial step in LLMs as it helps to limit the vocabulary size while still capturing the nuances of the language. By breaking the text sequence into smaller units, LLMs can represent a larger number of unique words and improve the model’s generalization ability. Tokenization also helps improve the model’s efficiency by reducing the computational and memory requirements needed to process the text data.

Embedding

Embedding is a crucial component of LLMs, enabling them to map words or tokens to dense, low-dimensional vectors. These vectors encode the semantic meaning of the words in the text sequence and are learned during the training process. The process of learning embeddings involves adjusting the weights of the neural network based on the input text sequence so that the resulting vector representations capture the relationships between the words.
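At its simplest, an embedding layer is a lookup table from token to vector. The sketch below uses randomly initialised vectors and a made-up four-word vocabulary purely for illustration; in a real model the table is a weight matrix whose values are adjusted during training so that related tokens end up close together.

```python
import math
import random


def build_embedding_table(vocab, dim, seed=0):
    """Create one random dense vector per token (the untrained starting point)."""
    rng = random.Random(seed)
    return {tok: [rng.gauss(0.0, 1.0) for _ in range(dim)] for tok in vocab}


def embed(tokens, table):
    """Map a token sequence to its sequence of vectors."""
    return [table[tok] for tok in tokens]


def cosine_similarity(u, v):
    """Similarity measure commonly used to compare embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


table = build_embedding_table(["king", "queen", "apple", "pear"], dim=8)
vectors = embed(["king", "apple"], table)
print(len(vectors), len(vectors[0]))
print(cosine_similarity(table["king"], table["queen"]))
```

Before training, the similarity printed above is arbitrary; it only becomes meaningful once the vectors have been learned.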

Embeddings can be trained using various techniques, including neural language models, which use unsupervised learning to predict the next word in a sequence based on the previous words. This process helps the model learn to generate embeddings that capture the semantic relationships between the words in the sequence. Once the embeddings are learned, they can be used as input to a wide range of downstream NLP tasks, such as sentiment analysis, named entity recognition and machine translation.

One key benefit of using embeddings, combined with subword tokenization, is that they enable LLMs to handle words not seen during training. An unseen word is split into known subword tokens, and the model composes their vectors into a meaningful representation, reducing the need for an exhaustive vocabulary. Additionally, embeddings can capture more complex relationships between words than traditional one-hot encoding methods, enabling LLMs to generate more nuanced and contextually appropriate outputs.

Attention

Attention mechanisms in LLMs allow the model to focus selectively on specific parts of the input, depending on the context of the task at hand. Self-attention mechanisms, used in transformer-based models, work by computing the dot product between the query vector of each token and the key vectors of all tokens in the sequence. The scaled scores are passed through a softmax, resulting in a matrix of weights representing each token’s importance relative to every other token in the sequence.

These weights are then used to compute a weighted sum of the token embeddings, which forms the input to the next layer in the model. By doing this, the model can effectively “attend” to the most relevant information in the input sequence while ignoring irrelevant or redundant information. This is particularly useful for tasks that involve understanding long-range dependencies between tokens, such as natural language understanding or text generation.
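The computation described above can be written out as scaled dot-product attention. The sketch below is deliberately simplified: it uses plain Python lists, a single attention head, and feeds the token vectors directly as queries, keys and values, whereas a real transformer first applies learned projection matrices to obtain them.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Return the attended outputs and the attention weight matrix."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Dot product of this token's query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
outputs, weights = self_attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and says how much the corresponding token draws from every token in the sequence, including itself.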

Moreover, attention mechanisms have become a fundamental component in many state-of-the-art NLP models. Researchers continue exploring new ways of using them to improve performance on a wide range of tasks. For example, some recent work has focused on incorporating different types of attention, such as multi-head attention, or using attention to model interactions between different modalities, such as text and images.

Pre-training

Pre-training is a critical process in the development of large language models. It is a form of self-supervised learning in which the model learns the structure and patterns of natural language by processing vast amounts of text data. During pre-training, the model is trained on a large corpus of unlabeled text, without any task-specific labels, and learns to predict the next word in a sentence or to fill in the missing words in a sequence of text.
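The next-word objective needs no human labels because the text supplies its own targets. A minimal sketch, assuming tokens have already been mapped to integer IDs and using a hypothetical `uniform_model` as a stand-in for an untrained network:

```python
import math


def next_token_pairs(token_ids):
    """Each prefix is an input; the token that follows it is the label."""
    return [(token_ids[:i], token_ids[i]) for i in range(1, len(token_ids))]


def cross_entropy(predicted_probs, target_id):
    """Negative log-probability the model assigned to the correct next token."""
    return -math.log(predicted_probs[target_id])


def average_loss(model, token_ids):
    """Mean next-token loss over one sequence."""
    pairs = next_token_pairs(token_ids)
    return sum(cross_entropy(model(ctx), tgt) for ctx, tgt in pairs) / len(pairs)


def uniform_model(context, vocab_size=4):
    """Stand-in model that ignores context and guesses every token equally."""
    return [1.0 / vocab_size] * vocab_size


sequence = [2, 0, 3, 1]
print(next_token_pairs(sequence))
print(average_loss(uniform_model, sequence))
```

A model that guesses uniformly over a vocabulary of 4 scores a loss of ln 4; pre-training adjusts the weights to push this number down across the whole corpus.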

The pre-training process allows the model to capture the fundamental properties of language, such as syntax, semantics, and context, and to develop an understanding of the relationships between words and their meanings. As a result, pre-training produces a language model that can be fine-tuned for various downstream NLP tasks, such as text classification, sentiment analysis, and machine translation.

Pre-training can be done using various architectures, including autoencoders, recurrent neural networks (RNNs) and transformers. Among these, transformers have become the most popular architecture for pre-training large language models due to their ability to handle long-range dependencies and capture contextual relationships between words. The best-known pre-trained models based on transformers are BERT and GPT.

Transfer learning

Transfer learning is a machine learning technique that involves utilizing the knowledge gained during pre-training and applying it to a new, related task. In the context of large language models, transfer learning entails fine-tuning a pre-trained model on a smaller, task-specific dataset to achieve high performance on that particular task.

The advantage of transfer learning is that it allows the model to leverage the vast amount of general language knowledge learned during pre-training. This means the model can learn more quickly and accurately from smaller, labeled datasets, reducing the need for large labeled datasets and extensive training for each new task. Transfer learning can significantly reduce the time and resources required to train a model for a new task, making it a highly efficient approach.

In addition, transfer learning can also help to improve the accuracy and robustness of the model. By fine-tuning a pre-trained model on a smaller dataset, the model can learn to generalize better and adapt to different domains and contexts. This makes the model more versatile and better suited to handling a wide range of tasks, including those not covered by the original pre-training data.
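One common way to apply transfer learning is to keep the pre-trained part fixed and train only a small task-specific head on top of it. The sketch below is a toy stand-in for that idea: `frozen_features` plays the role of a pre-trained encoder, and the dataset, learning rate and epoch count are arbitrary values chosen for illustration.

```python
import math


def frozen_features(x):
    """Stand-in for a pre-trained encoder; nothing in here is ever updated."""
    return [x, x * x]


def predict(head, feats):
    """Logistic-regression head on top of the frozen features."""
    z = sum(w * f for w, f in zip(head["w"], feats)) + head["b"]
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(head, data):
    """Average binary cross-entropy over the labeled examples."""
    total = 0.0
    for x, y in data:
        p = predict(head, frozen_features(x))
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def fine_tune_head(data, lr=0.1, epochs=300):
    """Train only the head's weights with per-example gradient descent."""
    head = {"w": [0.0, 0.0], "b": 0.0}
    for _ in range(epochs):
        for x, y in data:
            feats = frozen_features(x)
            err = predict(head, feats) - y  # gradient of the loss w.r.t. z
            head["w"] = [w - lr * err * f for w, f in zip(head["w"], feats)]
            head["b"] -= lr * err
    return head


# Small labeled dataset: label 1 when |x| is large, 0 otherwise.
data = [(-2.0, 1), (-1.0, 0), (0.0, 0), (1.0, 0), (2.0, 1)]
untrained = {"w": [0.0, 0.0], "b": 0.0}
head = fine_tune_head(data)
print(log_loss(untrained, data), log_loss(head, data))
```

Only five labeled examples are needed here because the useful feature (`x * x`) already exists in the frozen part, which mirrors why fine-tuning needs far less data than pre-training.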

Understanding private LLM

Private LLMs are designed with a primary focus on user privacy and data protection. These models incorporate several techniques to minimize the exposure of user data during both the training and inference stages.

One key privacy-enhancing technology employed by private LLMs is federated learning. This approach allows models to be trained on decentralized data sources without directly accessing individual user data. By doing so, it preserves the privacy of users since their data remains localized.
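A common way to realise this is federated averaging: each client trains on its own data and sends back only model weights, which the server combines in proportion to each client's dataset size. The sketch below assumes a tiny linear model and made-up client data; real systems add secure aggregation, client sampling and many more rounds.

```python
def local_train(weights, data, lr=0.05, steps=10):
    """Gradient descent on one client's private data (least-squares linear model)."""
    w = list(weights)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for i, xi in enumerate(x):
                grad[i] += 2 * err * xi / len(data)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


def federated_average(client_weights, client_sizes):
    """Combine client models, weighting each by its number of examples."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Two clients whose raw (x, y) records never leave this list of "devices".
clients = [
    [([1.0], 2.0), ([2.0], 4.0)],
    [([3.0], 6.0)],
]
global_w = [0.0]
for _ in range(5):  # five communication rounds
    updates = [local_train(global_w, data) for data in clients]
    global_w = federated_average(updates, [len(d) for d in clients])
print(global_w)
```

Only the contents of `updates` cross the network; the server never sees the individual records, yet the shared model still converges toward the underlying relationship y = 2x.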

Differential privacy is another important component. It involves adding calibrated random noise during the training process, for example to the gradients computed from each user’s data, making it more challenging to identify specific information about individual users. This ensures that even if someone gains access to the model, it becomes difficult to discern sensitive details about any particular user.
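One widely used way to add this noise is at the gradient level, in the style of DP-SGD: each example's gradient is clipped to a maximum norm so that no single record can dominate, and Gaussian noise scaled to that norm is added before averaging. The sketch below assumes this approach; the function names and the parameter values are illustrative, and it does not compute the resulting privacy budget.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a gradient down so its L2 norm is at most `max_norm`."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, seed=0):
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    rng = random.Random(seed)
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    sigma = noise_multiplier * max_norm  # noise scale tied to the clipping bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(clipped) for v in noisy]


grads = [[3.0, 4.0], [0.0, 0.5]]  # made-up per-example gradients
print(private_mean_gradient(grads, max_norm=1.0, noise_multiplier=1.1))
```

A larger `noise_multiplier` gives stronger privacy at the cost of noisier updates, so in practice it is tuned against model quality.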

In addition, private LLMs often implement encryption and secure computation protocols. These measures are in place to protect user data during both training and inference. Encryption ensures that the data is secure and cannot be easily accessed by unauthorized parties. Secure computation protocols further enhance privacy by enabling computations to be performed on encrypted data without exposing the raw information.

Why do you need private LLMs?

Building a large language model can be a significant undertaking for any company, but there are compelling reasons for doing so. Here are a few reasons:

Customization

Customization is one of the key benefits of building your own large language model. You can tailor the model to your needs and requirements by building your private LLM. This customization ensures the model performs better for your specific use cases than general-purpose models. When building a custom LLM, you have control over the training data used to train the model. This control allows you to curate the data to include specific types of content, including platform-specific capabilities, terminology, and context that might not be well-covered in general-purpose models like GPT-4.

Building your private LLM also lets you fine-tune the model by training it on a smaller, domain-specific dataset relevant to your use case, which further improves its fit to your domain.

Reduced dependency

Another significant benefit of building your own large language model is reduced dependency. By building your private LLM, you can reduce your dependence on a few major AI providers, which can be beneficial in several ways.

Firstly, by building your private LLM, you have control over the technology stack that the model uses. This control lets you choose the technologies and infrastructure that best suit your use case, which can help reduce dependence on specific vendors, tools, or services.

Secondly, building your private LLM can help reduce reliance on general-purpose models not tailored to your specific use case. General-purpose models like GPT-4, and even code-specific models, are designed for a wide range of users with different needs and requirements, so they may perform suboptimally on your task. A private LLM can be optimized for your specific use case, which can improve its performance.

Finally, building your private LLM can help to reduce your dependence on proprietary technologies and services, which can be particularly important for companies prioritizing open-source technologies and solutions. By building your private LLM and open-sourcing it, you can also contribute to the broader developer community.

Cost efficiency

Cost efficiency is another important benefit of building your own large language model. By building your private LLM, you can reduce the cost of using AI technologies, which can be particularly important for small and medium-sized enterprises (SMEs) and developers with limited budgets.

Using open-source technologies and tools is one way to achieve cost efficiency when building an LLM. Many tools and frameworks used for building LLMs, such as TensorFlow, PyTorch and Hugging Face, are open-source and freely available, which can help to reduce the cost of building an LLM.

Another way to achieve cost efficiency is to use smaller, more efficient models. While larger models like GPT-4 can offer superior performance, they are also more expensive to train and host. By building smaller, more efficient models, you can reduce the cost of hosting and deploying the model without sacrificing too much performance.

Finally, building your private LLM helps you avoid vendor lock-in. When you use third-party AI services, you may be locked into a specific vendor or service provider, resulting in high costs over time. With a private LLM, you have greater control over the technology stack and infrastructure used by the model, which can help to reduce costs over the long term.

Data privacy and security

Data privacy and security are crucial concerns for any organization dealing with sensitive data. Building your own large language model can help achieve greater data privacy and security.

When you use third-party AI services, you may have to share your data with the service provider, which can raise privacy and security concerns. By building your private LLM, you can keep your data on your own servers, which helps reduce the risk of data breaches and protect your sensitive information.

Building your private LLM also allows you to customize the model’s training data, which can help to ensure that the data used to train the model is appropriate and safe. For instance, you can use data from within your organization or curated data sets, which can help to reduce the risk of malicious data being used to train the model.

In addition, you control the access and permissions to the model, which can help to ensure that only authorized personnel can access the model and the data it processes. This control can help to reduce the risk of unauthorized access or misuse of the model and data.

Finally, you can choose the security measures best suited to your specific use case. For example, you can implement encryption, access controls and other security measures that are appropriate for your data and your organization’s security policies.

Maintaining regulatory compliance

Building private LLMs plays a vital role in ensuring regulatory compliance, especially when handling sensitive data governed by diverse regulations. Private LLMs contribute significantly by offering precise data control and ownership, allowing organizations to train models with their specific datasets that adhere to regulatory standards. This control prevents inadvertent use of non-compliant information. Moreover, private LLMs can be fine-tuned using proprietary data, enabling content generation that aligns with industry standards and regulatory guidelines. These LLMs can be deployed in controlled environments, bolstering data security and adhering to strict data protection measures.

Furthermore, organizations can generate content while maintaining confidentiality, as private LLMs generate information without sharing sensitive data externally. They also help address fairness and non-discrimination provisions through bias mitigation. The transparent nature of building private LLMs from scratch aligns with accountability and explainability regulations. Compliance with consent-based regulations such as GDPR and CCPA is facilitated as private LLMs can be trained with data that has proper consent. The models also offer auditing mechanisms for accountability, adhere to cross-border data transfer restrictions, and adapt swiftly to changing regulations through fine-tuning. By constructing and deploying private LLMs, organizations not only fulfill legal requirements but also foster trust among stakeholders by demonstrating a commitment to responsible and compliant AI practices.

Pushing the boundaries of AI development

Building your own large language model can help push the boundaries of AI development by allowing you to experiment with new approaches, architectures, and techniques that may not be possible with off-the-shelf models.

When building your private LLM, you have greater control over the architecture, training data and training process. This control allows you to experiment with new techniques and approaches unavailable in off-the-shelf models. For example, you can try new training strategies, such as transfer learning or reinforcement learning, to improve the model’s performance. In addition, building your private LLM allows you to develop models tailored to specific use cases, domains and languages. For instance, you can develop models better suited to specific applications, such as chatbots, voice assistants or code generation. This customization can lead to improved performance and accuracy and better user experiences.

Building your private LLM can also help you stay updated with the latest developments in AI research and development. As new techniques and approaches are developed, you can incorporate them into your models, allowing you to stay ahead of the curve and push the boundaries of AI development. Finally, building your private LLM can help you contribute to the broader AI community by sharing your models, data and techniques with others. By open-sourcing your models, you can encourage collaboration and innovation in AI development.

Adaptable to evolving needs

Private LLMs can be fine-tuned and customized as an organization’s needs evolve, enabling long-term flexibility and adaptability. This means that organizations can modify their proprietary large language models (LLMs) over time to address changing requirements and respond to new challenges. Private LLMs are tailored to the organization’s unique use cases, allowing specialization in generating relevant content. As the organization’s objectives, audience, and demands change, these LLMs can be adjusted to stay aligned with evolving needs, ensuring that the content produced remains pertinent. This adaptability offers advantages such as staying current with industry trends, addressing emerging challenges, optimizing performance, maintaining brand consistency, and saving resources. Ultimately, organizations can maintain their competitive edge, provide valuable content, and navigate their evolving business landscape effectively by fine-tuning and customizing their private LLMs.

Open-source models

Building your own large language model can enable you to build and share open-source models with the broader developer community.

By building your private LLM you have complete control over the model’s architecture, training data and training process. This level of control allows you to fine-tune the model to meet specific needs and requirements and experiment with different approaches and techniques. Once you have built a custom LLM that meets your needs, you can open-source the model, making it available to other developers.

By open-sourcing your models, you can contribute to the broader developer community. Developers can use open-source models to build new applications, products and services or as a starting point for their own custom models. This collaboration can lead to faster innovation and a wider range of AI applications.

In addition to sharing your models, building your private LLM can enable you to contribute to the broader AI community by sharing your data and training techniques. By sharing your data, you can help other developers train their own models and improve the accuracy and performance of AI applications. By sharing your training techniques, you can help other developers learn new approaches and techniques they can use in their AI development projects.


How to build a private LLM?

Building a large language model is a complex task requiring significant computational resources and expertise. There is no single “correct” way to build an LLM, as the specific architecture, training data and training process can vary depending on the task and goals of the model.

In the context of LLM development, an example of a successful model is Databricks’ Dolly. Dolly is a large language model specifically designed to follow instructions and was trained on the Databricks machine-learning platform. The model is licensed for commercial use, making it an excellent choice for businesses looking to develop LLMs for their operations. Dolly is based on pythia-12b and was trained on approximately 15,000 instruction/response fine-tuning records, known as databricks-dolly-15k. These records were generated by Databricks employees, who worked in various capability domains outlined in the InstructGPT paper. These domains include brainstorming, classification, closed QA, generation, information extraction, open QA and summarization.

Dolly exhibits surprisingly high-quality instruction-following behavior that is not characteristic of the foundation model on which it is based. This makes Dolly an excellent choice for businesses that want to build their LLMs on a proven model specifically designed for instruction following.

Datasets overview

The dataset used for the Databricks Dolly model is called “databricks-dolly-15k,” which consists of more than 15,000 prompt/response pairs generated by Databricks employees. These pairs were created in eight different instruction categories, including the seven outlined in the InstructGPT paper and an open-ended free-form category. Contributors were instructed to avoid using information from any source on the web except for Wikipedia in some cases and were also asked to avoid using generative AI.

During the data generation process, contributors were allowed to answer questions posed by other contributors. Contributors were asked to provide reference texts copied from Wikipedia for some categories. The dataset is intended for fine-tuning large language models to exhibit instruction-following behavior. Additionally, it presents an opportunity for synthetic data generation and data augmentation using paraphrasing models to restate prompts and responses.
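Before fine-tuning, each record has to be rendered into a single training string. The sketch below shows one way to do that, assuming each record is a dictionary with `instruction`, `context` and `response` fields. The example record and the `###` section markers are invented for illustration and are not necessarily the template used to train Dolly.

```python
def format_record(record):
    """Render one instruction record as a single prompt/response training string."""
    parts = ["### Instruction:", record["instruction"]]
    if record.get("context"):  # reference text is only present for some categories
        parts += ["", "### Context:", record["context"]]
    parts += ["", "### Response:", record["response"]]
    return "\n".join(parts)


# Made-up record in the assumed shape, not taken from the real dataset.
example = {
    "instruction": "Summarize the passage in one sentence.",
    "context": "Tokenization splits text into smaller units called tokens.",
    "response": "Tokenization breaks text into tokens.",
    "category": "summarization",
}
print(format_record(example))
```

Keeping the template in one function makes it easy to apply the same formatting at inference time, so the model sees prompts in the shape it was trained on.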

Let’s discuss how to build an LLM like Dolly.

Step 1: Data processing

Data collection

Databricks employees were invited to create prompt/response pairs in each of eight different instruction categories: the seven outlined in the InstructGPT paper (brainstorming, classification, closed QA, generation, information extraction, open QA and summarization) and an open-ended free-form category. The contributors were instructed to avoid using information from any source on the web with the exception of Wikipedia (for particular subsets of instruction categories), and explicitly instructed to avoid using generative AI in formulating instructions or responses. Examples of each behavior were provided to motivate the types of questions and instructions appropriate to each category. Halfway through the data generation process, contributors were allowed to answer questions posed by other contributors.

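Databricks Dolly is an instruction-following large language model built by fine-tuning an open-source pre-trained model from EleutherAI rather than by training from scratch. The original Dolly started from GPT-J 6B; Dolly 2.0, which is fine-tuned on databricks-dolly-15k, starts from a model in the Pythia family. Both base models are decoder-only transformers in the GPT (Generative Pre-trained Transformer) style, pre-trained on a large corpus of text with a self-supervised next-token objective.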

We will offer a brief overview of the functionality of the trainer.py script responsible for orchestrating the training process for the Dolly model. This involves setting up the training environment, loading the training data, configuring the training parameters and executing the training loop.

Pre-requisites

Load libraries

To execute the code, you first need to load the following libraries:

import logging
from functools import partial
from pathlib import Path
from typing import Any, Dict, List, Tuple, Union

import click
import numpy as np
from datasets import Dataset, load_dataset
from transformers import (
    AutoModelForCausalLM,
    AutoTokenizer,
    DataCollatorForLanguageModeling,
    PreTrainedTokenizer,
    Trainer,
    TrainingArguments,
    set_seed,
)

Here is a breakdown of the imports and their purposes:

- logging: This module is used for logging messages during the training process.

- functools.partial: This is used to create a new function with some arguments already set, which can be useful for configuring other functions.

- pathlib.Path: This is used to work with file paths in a platform-independent way.

- typing: This module is used to define types for function arguments and return values.

- click: This is a library for creating command-line interfaces.

- numpy: This is a library for working with arrays and matrices.

- datasets: This is a library for working with datasets.

- AutoModelForCausalLM: This is a class from the Transformers library for loading a pre-trained language model.

- AutoTokenizer: This is a class from the Transformers library for loading a pre-trained tokenizer.

- DataCollatorForLanguageModeling: This is a class from the Transformers library for combining sequences of input data into batches for language modeling.

- PreTrainedTokenizer: This is a base class for all tokenizers in the Transformers library.

- Trainer: This is a class from the Transformers library for training a language model.

- TrainingArguments: This is a class from the Transformers library for defining the arguments used during training.

- set_seed: This is a function from the Transformers library for setting the random seed used during training.

Data loading

def load_training_dataset(path_or_dataset: str = "databricks/databricks-dolly-15k") -> Dataset:
    logger.info(f"Loading dataset from {path_or_dataset}")
    dataset = load_dataset(path_or_dataset)["train"]
    logger.info("Found %d rows", dataset.num_rows)

    def _add_text(rec):
        instruction = rec["instruction"]
        response = rec["response"]
        context = rec.get("context")

        if not instruction:
            raise ValueError(f"Expected an instruction in: {rec}")

        if not response:
            raise ValueError(f"Expected a response in: {rec}")

        # For some instructions there is an input that goes along with the instruction, providing context for the
        # instruction. For example, the input might be a passage from Wikipedia and the instruction says to extract
        # some piece of information from it. The response is that information to extract. In other cases there is
        # no input. For example, the instruction might be open QA such as asking what year some historic figure was
        # born.
        if context:
            rec["text"] = PROMPT_WITH_INPUT_FORMAT.format(instruction=instruction, response=response, input=context)
        else:
            rec["text"] = PROMPT_NO_INPUT_FORMAT.format(instruction=instruction, response=response)
        return rec

    dataset = dataset.map(_add_text)

    return dataset

load_training_dataset loads the training data as a Hugging Face Dataset. The function takes a path_or_dataset parameter, which specifies the location of the dataset to load. The default value for this parameter is “databricks/databricks-dolly-15k,” the identifier of the dataset described above.

The function first logs a message indicating that it is loading the dataset and then loads the dataset using the load_dataset function from the datasets library. It selects the “train” split of the dataset and logs the number of rows in the dataset. The function then defines a _add_text function that takes a record from the dataset as input and adds a “text” field to the record based on the “instruction,” “response,” and “context” fields in the record. If the “context” field is present, the function formats the “instruction,” “response” and “context” fields into a prompt with input format, otherwise it formats them into a prompt with no input format. Finally, the function returns the modified record.

The load_training_dataset function applies the _add_text function to each record in the dataset using the map method of the dataset and returns the modified dataset.
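To make the prompt-building step concrete, here is a minimal, self-contained sketch of the same branching logic applied to plain dictionaries. The two template strings are illustrative stand-ins: the listing above only references PROMPT_WITH_INPUT_FORMAT and PROMPT_NO_INPUT_FORMAT by name, so the exact wording used here is an assumption, as is the helper name add_text.

```python
from typing import Dict, Optional

# Illustrative stand-ins for the templates referenced by the training script.
# The exact wording of the real templates is an assumption here.
PROMPT_NO_INPUT_FORMAT = (
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n{response}\n\n"
    "### End"
)
PROMPT_WITH_INPUT_FORMAT = (
    "### Instruction:\n{instruction}\n\n"
    "Input:\n{input}\n\n"
    "### Response:\n{response}\n\n"
    "### End"
)


def add_text(rec: Dict[str, Optional[str]]) -> Dict[str, Optional[str]]:
    """Return a copy of the record with a formatted "text" field added."""
    instruction = rec["instruction"]
    response = rec["response"]
    context = rec.get("context")

    if not instruction:
        raise ValueError(f"Expected an instruction in: {rec}")
    if not response:
        raise ValueError(f"Expected a response in: {rec}")

    if context:
        # The instruction comes with reference text, so use the template with an input slot.
        text = PROMPT_WITH_INPUT_FORMAT.format(
            instruction=instruction, response=response, input=context
        )
    else:
        text = PROMPT_NO_INPUT_FORMAT.format(instruction=instruction, response=response)
    return {**rec, "text": text}


if __name__ == "__main__":
    sample = {
        "instruction": "When was the tower completed?",
        "context": "The tower was completed in 1889.",
        "response": "1889",
    }
    print(add_text(sample)["text"])
```

Ending every prompt with a fixed end marker is what lets the later preprocessing step treat a truncated record as unusable: if the marker is cut off, the model never sees where a response should stop.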

Data preprocessing and tokenization

def preprocess_dataset(tokenizer: AutoTokenizer, max_length: int, seed=DEFAULT_SEED) -> Dataset:
    """Loads the training dataset and tokenizes it so it is ready for training.

    Args:
        tokenizer (AutoTokenizer): Tokenizer tied to the model.
        max_length (int): Maximum number of tokens to emit from tokenizer.

    Returns:
        Dataset: HuggingFace dataset
    """

    dataset = load_training_dataset()

    logger.info("Preprocessing dataset")
    _preprocessing_function = partial(preprocess_batch, max_length=max_length, tokenizer=tokenizer)
    dataset = dataset.map(
        _preprocessing_function,
        batched=True,
        remove_columns=["instruction", "context", "response", "text", "category"],
    )

    # Make sure we don't have any truncated records, as this would mean the end keyword is missing.
    logger.info("Processed dataset has %d rows", dataset.num_rows)
    dataset = dataset.filter(lambda rec: len(rec["input_ids"]) < max_length)
    logger.info("Processed dataset has %d rows after filtering for truncated records", dataset.num_rows)

    logger.info("Shuffling dataset")
    dataset = dataset.shuffle(seed=seed)

    logger.info("Done preprocessing")

    return dataset

This function is responsible for preprocessing the training dataset so that it can be used for training the language model. It takes in an AutoTokenizer, which is used for the tokenization of the dataset and a maximum length, which is the maximum number of tokens that can be emitted from the tokenizer.

First, it loads the training dataset using the load_training_dataset() function and then it applies a _preprocessing_function to the dataset using the map() function. The _preprocessing_function is created with functools.partial: it wraps the preprocess_batch() function, which is defined elsewhere, with the max_length and tokenizer arguments already bound, so that map() only has to pass in each batch of records. The remove_columns parameter then drops the original text columns, which are no longer needed once the records have been tokenized.

After tokenization, it filters out any truncated records in the dataset, ensuring that the end keyword is present in all of them. It then shuffles the dataset using a seed value to ensure that the order of the data does not affect the training of the model.

Finally, it returns the preprocessed dataset that can be used to train the language model.
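The tokenize, filter and shuffle sequence described above can be sketched without any external libraries. The example below is only an approximation under stated assumptions: toy_tokenize is a hypothetical whitespace tokenizer standing in for the real one, records are plain dictionaries instead of a Hugging Face Dataset, and the function name preprocess is made up for illustration.

```python
import random
from functools import partial
from typing import Dict, List


def toy_tokenize(text: str, max_length: int) -> List[int]:
    """Hypothetical whitespace tokenizer that truncates to max_length tokens."""
    tokens = text.split()[:max_length]
    # Fake token ids; only the number of tokens matters for this sketch.
    return [len(tok) for tok in tokens]


def preprocess(records: List[Dict[str, str]], max_length: int, seed: int) -> List[Dict[str, List[int]]]:
    # Bind max_length once, the same way the script binds arguments with partial.
    tokenize = partial(toy_tokenize, max_length=max_length)
    processed = [{"input_ids": tokenize(rec["text"])} for rec in records]

    # A record that reaches max_length may have been truncated (and lost its
    # end marker), so only records strictly shorter than max_length are kept.
    kept = [rec for rec in processed if len(rec["input_ids"]) < max_length]

    # Seeded shuffle: the order is random but reproducible across runs.
    random.Random(seed).shuffle(kept)
    return kept


if __name__ == "__main__":
    sample = [
        {"text": "short prompt here"},
        {"text": " ".join(["word"] * 50)},  # too long, will be truncated and dropped
    ]
    print(preprocess(sample, max_length=8, seed=42))
```

Note that the strict comparison also drops records that happen to be exactly max_length tokens long, even if nothing was cut off. That is a deliberately conservative trade-off: losing a few borderline records is cheaper than training on prompts with a missing end marker.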

Step 2: Model training

Dolly is not trained from scratch. Training starts from a pre-trained, open-source causal language model whose own training corpus already covers a diverse range of texts, such as web pages, books and scientific articles, tokenized with subword encoding. That pre-training is self-supervised: the model learns to predict the next token in a sequence, given a context window of preceding tokens. Dolly’s own training stage is supervised fine-tuning, in which the same next-token objective is applied to the prompt/response records prepared in Step 1 so that the model learns to follow instructions. Masked language modeling and sequence classification are not part of this procedure; the training code below loads the model for causal language modeling and passes mlm=False to the data collator.

The training script is built to run on multi-GPU clusters: it accepts a DeepSpeed configuration for distributed training and exposes gradient checkpointing and bf16 mixed-precision training as options to reduce memory requirements and increase training speed.
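The next-token objective mentioned above can be illustrated with a small sketch that turns one token sequence into (context, target) training pairs. The function name next_token_pairs and the integer token ids are made up for illustration; real training computes all of these predictions in a single forward pass rather than materializing the pairs.

```python
from typing import List, Tuple


def next_token_pairs(token_ids: List[int], context_window: int) -> List[Tuple[List[int], int]]:
    """Build (preceding tokens, next token) pairs for a causal language model."""
    pairs = []
    for i in range(1, len(token_ids)):
        # The context is at most context_window tokens immediately before position i.
        start = max(0, i - context_window)
        pairs.append((token_ids[start:i], token_ids[i]))
    return pairs


if __name__ == "__main__":
    for context, target in next_token_pairs([10, 11, 12, 13], context_window=2):
        print(f"context={context} -> target={target}")
```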

The complete code of model training for Dolly is available here.

def train(
    *,
    input_model: str,
    local_output_dir: str,
    dbfs_output_dir: str,
    epochs: int,
    per_device_train_batch_size: int,
    per_device_eval_batch_size: int,
    lr: float,
    seed: int,
    deepspeed: str,
    gradient_checkpointing: bool,
    local_rank: str,
    bf16: bool,
    logging_steps: int,
    save_steps: int,
    eval_steps: int,
    test_size: Union[float, int],
    save_total_limit: int,
    warmup_steps: int,
):
    set_seed(seed)

    model, tokenizer = get_model_tokenizer(
        pretrained_model_name_or_path=input_model, gradient_checkpointing=gradient_checkpointing
    )

    # Use the same max length that the model supports. Fall back to 1024 if the setting can't be found.
    # The configuration for the length can be stored under different names depending on the model. Here we attempt
    # a few possible names we've encountered.
    conf = model.config
    max_length = None
    for length_setting in ["n_positions", "max_position_embeddings", "seq_length"]:
        max_length = getattr(conf, length_setting, None)
        if max_length:
            logger.info(f"Found max length: {max_length}")
            break
    if not max_length:
        max_length = 1024
        logger.info(f"Using default max length: {max_length}")

    processed_dataset = preprocess_dataset(tokenizer=tokenizer, max_length=max_length, seed=seed)

    split_dataset = processed_dataset.train_test_split(test_size=test_size, seed=seed)

    logger.info("Train data size: %d", split_dataset["train"].num_rows)
    logger.info("Test data size: %d", split_dataset["test"].num_rows)

    data_collator = DataCollatorForCompletionOnlyLM(
        tokenizer=tokenizer, mlm=False, return_tensors="pt", pad_to_multiple_of=8
    )

    if not dbfs_output_dir:
        logger.warning("Will NOT save to DBFS")

    training_args = TrainingArguments(
        output_dir=local_output_dir,
        per_device_train_batch_size=per_device_train_batch_size,
        per_device_eval_batch_size=per_device_eval_batch_size,
        fp16=False,
        bf16=bf16,
        learning_rate=lr,
        num_train_epochs=epochs,
        deepspeed=deepspeed,
        gradient_checkpointing=gradient_checkpointing,
        logging_dir=f"{local_output_dir}/runs",
        logging_strategy="steps",
        logging_steps=logging_steps,
        evaluation_strategy="steps",
        eval_steps=eval_steps,
        save_strategy="steps",
        save_steps=save_steps,
        save_total_limit=save_total_limit,
        load_best_model_at_end=False,
        report_to="tensorboard",
        disable_tqdm=True,
        remove_unused_columns=False,
        local_rank=local_rank,
        warmup_steps=warmup_steps,
    )

    logger.info("Instantiating Trainer")

    trainer = Trainer(
        model=model,
        tokenizer=tokenizer,
        args=training_args,
        train_dataset=split_dataset["train"],
        eval_dataset=split_dataset["test"],
        data_collator=data_collator,
    )

    logger.info("Training")
    trainer.train()

    logger.info(f"Saving Model to {local_output_dir}")
    trainer.save_model(output_dir=local_output_dir)

    if dbfs_output_dir:
        logger.info(f"Saving Model to {dbfs_output_dir}")
        trainer.save_model(output_dir=dbfs_output_dir)

    logger.info("Done.")

This code fine-tunes a language model, starting from a pre-existing model and its tokenizer. It preprocesses the data, splits it into train and test sets, and collates the preprocessed data into batches. The model is trained using the specified settings and the output is saved to the specified directories. The training process is completed once the model is saved. The first version of Dolly was built by fine-tuning GPT-J 6B, an open-source model from EleutherAI with 6 billion parameters; Dolly 2.0 applies the same recipe to a Pythia base model.
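One detail of the train function that is easy to miss is how the maximum sequence length is chosen: different model configurations store it under different attribute names, so the code tries several and falls back to 1024. The standalone sketch below isolates that lookup. The helper name resolve_max_length is made up for illustration, and SimpleNamespace objects stand in for real model configurations.

```python
from types import SimpleNamespace
from typing import Any

# Attribute names tried in order, mirroring the list used in the train function.
LENGTH_SETTINGS = ("n_positions", "max_position_embeddings", "seq_length")
DEFAULT_MAX_LENGTH = 1024


def resolve_max_length(config: Any, default: int = DEFAULT_MAX_LENGTH) -> int:
    """Return the first usable length setting found on config, else the default."""
    for name in LENGTH_SETTINGS:
        value = getattr(config, name, None)
        if value:
            return value
    return default


if __name__ == "__main__":
    # Stand-ins for model.config objects from different model families.
    print(resolve_max_length(SimpleNamespace(n_positions=2048)))
    print(resolve_max_length(SimpleNamespace(max_position_embeddings=512)))
    print(resolve_max_length(SimpleNamespace()))
```

Keeping the lookup in its own function makes the fallback easy to unit test, and it makes it obvious where to add another attribute name if a new base model stores its context length differently.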

Step 3: Model evaluation

You can evaluate LLMs like Dolly using several techniques, including perplexity and human evaluation. Perplexity is a metric used to evaluate the quality of language models by measuring how well they can predict the next word in a sequence of words. A lower perplexity score indicates better performance. The Dolly model achieved a perplexity score of around 20 on the C4 dataset, which is a large corpus of text used to train language models.
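To make the metric concrete: perplexity is the exponential of the average negative log-probability that the model assigns to each actual next token. The sketch below computes it from a list of per-token probabilities; the function name perplexity and the example probabilities are illustrative, and in practice the probabilities would come from running the model over a held-out text.

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """Perplexity from the probabilities a model assigned to each true next token."""
    if not token_probs:
        raise ValueError("Need at least one token probability")
    # Average negative log-likelihood per token, then exponentiate.
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


if __name__ == "__main__":
    confident = [0.9, 0.8, 0.95, 0.85]  # model usually expects the right token
    unsure = [0.2, 0.1, 0.25, 0.15]     # model is frequently surprised
    print(f"confident model: {perplexity(confident):.2f}")
    print(f"unsure model:    {perplexity(unsure):.2f}")
```

A useful intuition is that a perplexity of N means the model is, on average, as uncertain as if it were choosing uniformly among N equally likely tokens at each step.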

In addition to perplexity, the Dolly model was evaluated through human evaluation. Specifically, human evaluators were asked to assess the coherence and fluency of the text generated by the model. The evaluators were also asked to compare the output of the Dolly model with that of other state-of-the-art language models, such as GPT-3. The human evaluation results showed that the Dolly model’s performance was comparable to other state-of-the-art language models in terms of coherence and fluency.

Step 4: Feedback and iteration

When building an LLM, gathering feedback and iterating on it is crucial to improving the model’s performance. At the core of the process should be the ability to rapidly train and deploy models and then gather feedback through various means, such as user surveys, usage metrics, and error analysis.

One of the ways we gather feedback is through user surveys, where we ask users about their experience with the model and whether it met their expectations. Another way is monitoring usage metrics, such as the number of code suggestions generated by the model, the acceptance rate of those suggestions, and the time it takes to respond to a user request.
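The usage metrics described above can be sketched as a small aggregation over logged events. Everything in this example is hypothetical: the SuggestionEvent record, its field names and the summarize_usage helper are assumptions about what such a log might contain, not part of any particular product.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SuggestionEvent:
    """One logged model suggestion (hypothetical log schema)."""
    accepted: bool      # did the user accept the suggestion?
    latency_ms: float   # time taken to respond to the request


def summarize_usage(events: List[SuggestionEvent]) -> Dict[str, float]:
    """Compute suggestion count, acceptance rate and mean response time."""
    total = len(events)
    if total == 0:
        return {"suggestions": 0, "acceptance_rate": 0.0, "mean_latency_ms": 0.0}
    accepted = sum(1 for event in events if event.accepted)
    return {
        "suggestions": total,
        "acceptance_rate": accepted / total,
        "mean_latency_ms": sum(event.latency_ms for event in events) / total,
    }


if __name__ == "__main__":
    log = [
        SuggestionEvent(accepted=True, latency_ms=100.0),
        SuggestionEvent(accepted=False, latency_ms=300.0),
        SuggestionEvent(accepted=True, latency_ms=200.0),
    ]
    print(summarize_usage(log))
```

Tracking these numbers per model version makes it possible to tell whether a retrained model actually improved the user experience or only its offline scores.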

We also perform error analysis to understand the types of errors the model makes and identify areas for improvement. For example, we may analyze the cases where the model generated incorrect code or failed to generate code altogether. We then use this feedback to retrain the model and improve its performance.

We regularly evaluate and update our data sources, model training objectives, and server architecture to ensure our process remains robust to changes. This allows us to stay current with the latest advancements in the field and continuously improve the model’s performance.

Sample code for model iteration may involve retraining the model with new data or fine-tuning the existing model. For instance, to fine-tune a pre-trained language model for code completion, one may use the following code snippet:

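from transformers import AutoTokenizer, AutoModelForCausalLM, Trainer, TrainingArguments

# load pre-trained language model and tokenizer
model_name = "microsoft/CodeGPT-small-java"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)

# padding requires a pad token; reuse the end-of-sequence token if none is defined
if tokenizer.pad_token is None:
    tokenizer.pad_token = tokenizer.eos_token

# prepare data for fine-tuning: each record is expected to hold a source snippet in a "code" field
train_dataset = ...
eval_dataset = ...  # needed because evaluation_strategy is set to "epoch"


def collate_code(examples):
    # tokenize a list of records into one padded batch of PyTorch tensors
    batch = tokenizer(
        [example["code"] for example in examples],
        padding=True,
        truncation=True,
        max_length=512,
        return_tensors="pt",
    )
    # for causal language modeling the labels are the input ids;
    # padded positions are set to -100 so the loss ignores them
    labels = batch["input_ids"].clone()
    labels[batch["attention_mask"] == 0] = -100
    batch["labels"] = labels
    return batch


# fine-tune the model
training_args = TrainingArguments(
    output_dir='./results',
    evaluation_strategy="epoch",
    learning_rate=2e-5,
    per_device_train_batch_size=16,
    per_device_eval_batch_size=64,
    num_train_epochs=1,
    weight_decay=0.01,
    push_to_hub=False,
    remove_unused_columns=False,  # keep the raw "code" column so the collator can read it
)

trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=eval_dataset,
    data_collator=collate_code,
)

trainer.train()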

Industries benefiting from private LLMs

Legal and compliance

In the legal and compliance sector, private LLMs provide a transformative edge. These models can expedite legal research, analyze contracts, and assess regulatory changes by quickly extracting relevant information from vast volumes of documents. This efficiency not only saves time but also enhances accuracy in decision-making. Legal professionals can benefit from LLM-generated insights on case law, statutes, and legal precedents, leading to well-informed strategies. By fine-tuning the LLMs with legal terminology and nuances, organizations can streamline due diligence processes and ensure compliance with ever-evolving regulations.

Finance and banking

Private LLMs offer significant advantages to the finance and banking industries. They can analyze market trends, customer interactions, financial reports, and risk assessment data. These models assist in generating insights into investment strategies, predicting market shifts, and managing customer inquiries. The LLMs’ ability to process and summarize large volumes of financial information expedites decision-making for investment professionals and financial advisors. By training the LLMs with financial jargon and industry-specific language, institutions can enhance their analytical capabilities and provide personalized services to clients.

Cybersecurity and digital forensics

The cybersecurity and digital forensics industry is heavily reliant on maintaining the utmost data security and privacy. Private LLMs play a pivotal role in analyzing security logs, identifying potential threats, and devising response strategies. These models help security teams sift through immense amounts of data to detect anomalies, suspicious patterns, and potential breaches. By aiding in the identification of vulnerabilities and generating insights for threat mitigation, private LLMs contribute to enhancing an organization’s overall cybersecurity posture. Their contribution in this context is vital, as data breaches can lead to compromised systems, financial losses, reputational damage, and legal implications.

Defense and intelligence

Defense and intelligence agencies handle highly classified information related to national security, intelligence gathering, and strategic planning. Within this context, private Large Language Models (LLMs) offer invaluable support. By analyzing intricate security threats, deciphering encrypted communications, and generating actionable insights, these LLMs empower agencies to swiftly and comprehensively assess potential risks. The role of private LLMs in enhancing threat detection, intelligence decoding, and strategic decision-making is paramount. However, the protection of these LLMs from data breaches is of utmost significance, as breaches can inflict substantial and lasting harm on national security efforts, potentially compromising classified data and jeopardizing critical operations.

How can LeewayHertz AI development services help you build a private LLM?

LeewayHertz excels in developing private Large Language Models (LLMs) from the ground up for your specific business domain. Our approach involves understanding your specific requirements, data, and objectives to construct an LLM aimed at enhancing your business operations, decision-making, and customer interactions, giving you an edge in leveraging AI for specialized industry applications.

LeewayHertz offers several specialized services as part of private Large Language Models (LLMs) development:

Consulting and strategy development

Our consulting service evaluates your business workflows to identify opportunities for optimization with LLMs. We craft a tailored strategy focusing on data security, compliance, and scalability. Our specialized LLMs aim to streamline your processes, increase productivity, and improve customer experiences.

Data engineering

Our data engineering service involves meticulous collection, cleaning, and annotation of raw data to make it insightful and usable. We specialize in organizing and standardizing large, unstructured datasets from varied sources, ensuring they are primed for effective LLM training. Our focus on data quality and consistency ensures that your large language models yield reliable, actionable outcomes, driving transformative results in your AI projects.

Domain-specific LLM development

Our service focuses on developing domain-specific LLMs tailored to your industry, whether it’s healthcare, finance, or retail. To create domain-specific LLMs, we fine-tune existing models with relevant data enabling them to understand and respond accurately within your domain’s context. Our comprehensive process includes data preparation, model fine-tuning, and continuous optimization, providing you with a powerful tool for content generation and automation tailored to your specific business needs.

LLM-powered solution development

Our approach involves collaborating with clients to comprehend their specific challenges and goals. Utilizing LLMs, we provide custom solutions adept at handling a range of tasks, from natural language understanding and content generation to data analysis and automation. These LLM-powered solutions are designed to transform your business operations, streamline processes, and secure a competitive advantage in the market.

Seamless integration

We integrate the LLM-powered solutions we build into your existing business systems and workflows, enhancing decision-making, automating tasks, and fostering innovation. This seamless integration with platforms like content management systems boosts productivity and efficiency within your familiar operational framework.

Continuous support and maintenance

We offer continuous model monitoring, ensuring alignment with evolving data and use cases, while also managing troubleshooting, bug fixes, and updates. Our service also includes proactive performance optimization to ensure your solutions maintain peak efficiency and value.

Endnote

Language models are the backbone of natural language processing technology and have changed how we interact with language and technology. Large language models (LLMs) are one of the most significant developments in this field, with remarkable performance in generating human-like text and processing natural language tasks.

LLMs, like GPT-3, have become increasingly popular for their ability to generate high-quality, coherent text, making them invaluable for various applications, including content creation, chatbots and voice assistants. These models are trained on vast amounts of data, allowing them to learn the nuances of language and predict contextually relevant outputs.

However, building an LLM requires NLP, data science and software engineering expertise. It involves training the model on a large dataset, fine-tuning it for specific use cases and deploying it to production environments. Therefore, it’s essential to have a team of experts who can handle the complexity of building and deploying an LLM.

An expert company specializing in LLMs can help organizations leverage the power of these models and customize them to their specific needs. They can also provide ongoing support, including maintenance, troubleshooting and upgrades, ensuring that the LLM continues to perform optimally.

Unlock new insights and opportunities with custom-built LLMs tailored to your business use case. Contact our AI experts for consultancy and development needs and take your business to the next level.

FAQs

What are Large Language Models (LLMs)?

Large Language Models (LLMs) are advanced artificial intelligence models proficient in comprehending and producing human-like language. These models undergo extensive training on vast datasets, enabling them to exhibit remarkable accuracy in tasks such as language translation, text summarization, and sentiment analysis. Their capacity to process and generate text at a significant scale marks a significant advancement in the field of Natural Language Processing (NLP).

What are the essential building blocks of Large Language Models?

LLMs are built upon several essential building blocks. At their core is a deep neural network architecture, often based on transformer models, which excel at capturing complex patterns and dependencies in sequential data. These models require vast amounts of diverse and high-quality training data to learn language representations effectively. Pre-training is a crucial step, where the model learns from massive datasets, followed by fine-tuning on specific tasks or domains to enhance performance. LLMs leverage attention mechanisms for contextual understanding, enabling them to capture long-range dependencies in text. Additionally, large-scale computational resources, including powerful GPUs or TPUs, are essential for training these massive models efficiently. Regularization techniques and optimization strategies are also applied to manage the model’s complexity and improve training stability. The combination of these elements results in powerful and versatile LLMs capable of understanding and generating human-like text across various applications.

What is private LLM development?

Private LLM development involves crafting a personalized and specialized language model to suit the distinct needs of a particular organization. This approach grants comprehensive authority over the model’s training, architecture, and deployment, ensuring it is tailored for specific and optimized performance in a targeted context or industry.

Why is there a growing interest in private LLMs?

There is a rising concern about the privacy and security of data used to train LLMs. Many pre-trained models use public datasets containing sensitive information. Private large language models, trained on specific, private datasets, address these concerns by minimizing the risk of unauthorized access and misuse of sensitive information.

Why should you choose a private LLM over a general-purpose model?

Choosing a private LLM over a general-purpose model involves considerations related to data privacy, customization, and specific business requirements. Here are some reasons why you might opt for a private LLM:

- Data privacy and security: Private LLMs allow organizations to keep their sensitive data within their own infrastructure, mitigating the risks associated with sharing proprietary information with external models. This is crucial for industries with strict data privacy regulations.

- Customization for specific use cases: Private LLMs can be fine-tuned on proprietary data, ensuring the model is specifically tailored to address the unique requirements of a particular industry or organization. This customization can result in more accurate and relevant outputs for specific tasks.

- Industry-specific nuances: Certain industries have specific terminologies, jargon, or nuances that may not be well-represented in general-purpose models. A private LLM, trained on industry-specific data, can better capture and understand these nuances, leading to more accurate and contextually relevant results.

- Reduced dependency on external services: Using a private LLM reduces dependence on external cloud services or third-party platforms. This provides greater control over the model, reduces the risk of service disruptions, and ensures continued access to language processing capabilities even in offline or restricted network environments.

- Compliance with regulations: In regulated industries, using private LLMs can facilitate compliance with data protection and privacy regulations. Keeping data and models in-house allows organizations to have more control over how data is handled and ensures alignment with specific legal requirements.

- Preservation of intellectual property: Private LLMs protect an organization’s intellectual property by keeping proprietary data and insights within the company. This helps maintain a competitive edge and prevents the risk of inadvertently sharing sensitive information with external parties.

- Enhanced trust and reputation: Choosing a private LLM demonstrates a commitment to data privacy and security, which can enhance trust with customers, partners, and stakeholders. This can be particularly important for organizations that prioritize building and maintaining a strong reputation.

How is a private LLM tailored to a business’s needs?

A private Large Language Model (LLM) is tailored to a business’s needs through meticulous customization. This involves training the model using datasets specific to the industry, aligning it with the organization’s applications, terminology, and contextual requirements. This customization ensures better performance and relevance for specific use cases.

How does LeewayHertz approach private Large Language Model (LLM) development?

Our AI experts, possessing extensive proficiency in data engineering, Machine Learning (ML) and Natural Language Processing (NLP) technologies along with associated toolkits, specialize in constructing private large language models. The models are trained using clients’ proprietary data, ensuring a nuanced and precise understanding of the unique aspects of their industry. This customized approach ensures that our private LLMs can provide contextually relevant and highly insightful responses to queries, offering a solution intricately aligned with your business requirements.
