Getting started with generative AI: A beginner’s guide

Generative AI has significantly changed how we approach content creation and related tasks such as language translation and question answering, using machine learning techniques to generate human-like output. From OpenAI’s ChatGPT and DALL-E to Google’s Bard, applications built on large generative models are changing the content industry by pushing the boundaries of what is possible and creating content in novel ways.

Trained on vast amounts of data with the use of unsupervised and semi-supervised learning algorithms, generative AI allows for creating new content from scratch. One of the most exciting developments in this field is large language models that enable computer programs to comprehend text and generate new content.

At the heart of generative AI is a neural network that is trained to recognize patterns in data and then use these patterns to generate new content. During training, the network adjusts its parameters so that it captures the salient features of the data without being told explicitly what they are. This allows it to predict which patterns are most relevant to a given input and generate corresponding content.

Although generative AI is capable of producing incredible results, it still requires human intervention at the beginning and end of the training process to achieve optimal outcomes. Human input is necessary to provide the initial data set, identify the relevant features to be learned by the model and evaluate the output for quality and relevance. Thus, generative AI combines human and machine intelligence to create new opportunities for content generation.

In this guide, we will walk you through the basics of generative AI, how it works, and what exciting applications it has in store for the future.

- What is generative AI?

- A brief history of generative AI

- The generative AI tech stack

- Different types of generative AI models

- How does generative AI work?

- Role of natural language processing in generative AI

- Applications of generative AI

- Generative AI use cases

- The future of generative AI

What is generative AI?

Generative AI, also known as creative AI, is a subset of machine learning that generates new and unique content using artificial intelligence. It differs from traditional AI models designed to recognize patterns and make predictions based on existing data.

Traditional AI models are trained on large datasets to recognize patterns and relationships between variables, which are then used to make predictions and decisions on new data inputs. Their outputs are predictable and consistent for a given input. Generative AI, by contrast, focuses on creating new data that does not already exist in the training dataset, using algorithms and models specifically designed for generating content such as images, music, and text.

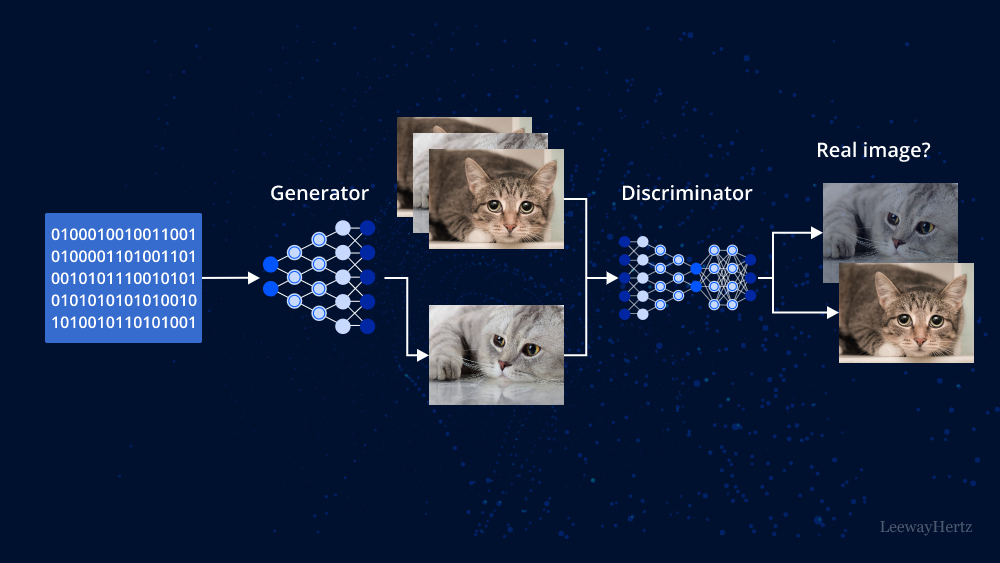

One of the most popular techniques used in generative AI is Generative Adversarial Networks (GANs). GANs consist of two neural networks: a generator and a discriminator. The generator creates new data based on the training data, while the discriminator evaluates the data and determines whether it is real or fake. Through this process, the generator improves its output until it can create content that is indistinguishable from real data.

Another technique used in generative AI is Variational Autoencoders (VAEs). VAEs use a probabilistic approach to learn a compressed representation of the input data, which is then used to generate new data. Rather than reproducing exact replicas of the training data, VAEs produce new variations by sampling from this learned representation.

The applications of generative AI are vast and varied. In art and design, generative AI can be used to create unique and original designs for fashion, furniture, and architecture. In music, it can be used to generate new and interesting compositions. In writing, it can produce text-based content, such as news articles and stories. These applications represent a significant departure from the traditional use cases of AI, which have typically been focused on classification, regression, and clustering tasks.

Despite the exciting possibilities of generative AI, there are also potential risks and ethical concerns that need to be addressed. For example, there is a risk that generative AI could be used to create fake news or generate fake images for propaganda purposes. Additionally, bias in the data used to train the models is also possible, resulting in biased output. These issues need to be carefully considered by developers and stakeholders to ensure that generative AI is used responsibly and ethically.

A brief history of generative AI

The roots of generative AI date back to the early days of artificial intelligence research in the 1950s and 1960s, when computer programs were developed to generate simple pieces of text or music. However, the real progress began in the 2010s with the advent of deep learning, which brought significant strides in accuracy and realism.

A key milestone in the history of generative AI was the introduction of Experiments in Musical Intelligence (EMI) by David Cope in 1997. EMI combined rule-based and statistical techniques to generate new pieces of music in the style of famous composers.

Another significant milestone came in 2004, when Google launched its “autocomplete” feature (originally called Google Suggest), which predicts what a user is typing and offers suggestions to complete the query. This was made possible by statistical modeling of vast amounts of text data.

In 2009, the Deep Boltzmann Machine (DBM) was introduced by researchers at the University of Toronto. This generative neural network learned to represent complex data distributions and paved the way for developing other generative models.

However, the real breakthrough came in 2014 with the introduction of Generative Adversarial Networks (GANs) by Ian Goodfellow and his colleagues. GANs generated new data by pitting two networks against each other in a game-like setting, creating realistic images and videos that could fool human observers.

In late 2014, Amazon introduced Alexa, a voice assistant that responded to natural language queries and helped popularize the use of voice assistants in daily life.

In 2019, OpenAI released its GPT-2 language model, capable of producing human-like text in various styles and genres, generating long and coherent pieces of text that could easily pass as being written by a human.

In 2022, OpenAI released GPT-3.5, an improved version of GPT-3 that is more powerful and efficient. With its advanced language capabilities and the ability to generate coherent and meaningful text, GPT-3.5 gained popularity among developers and businesses worldwide.

In March 2023, OpenAI released GPT-4, a multimodal model that accepts both text and image inputs and is more capable than its predecessor.

These milestones demonstrate the continuous innovation and breakthroughs in machine learning research, leading to increasingly sophisticated and realistic generative AI models. With the development of new technologies, we can expect to see even more impressive and creative applications of generative AI in the future.

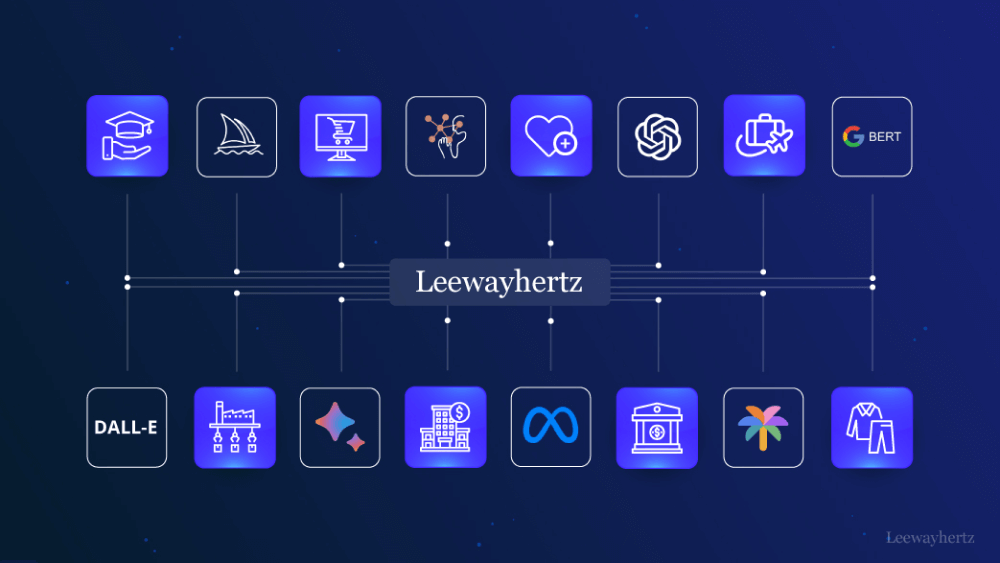

The generative AI tech stack

With the evolution of generative AI, we also have a comprehensive tech stack that facilitates rapid innovation and a more streamlined development process. The tech stack includes:

Application frameworks

Application frameworks speed up the adoption of new technologies by making programming more intuitive, simplifying the development process, and helping developers refine their software more efficiently. Several frameworks have become prominent over time, each with its own characteristics and features. LangChain has been embraced by the developer community as an open-source hub for the development of LLM-based applications. Fixie is building an enterprise-level platform dedicated to the creation, deployment, and management of AI agents. Cloud service providers are also contributing to the framework scene, with offerings like Microsoft’s Semantic Kernel and Google Cloud’s Vertex AI platform.

These frameworks are being harnessed by developers to design applications capable of content generation, building semantic systems for natural language-driven content search, autonomous task-performing agents and the like. These applications are already instigating significant shifts in our creative processes, information synthesis, and work paradigms.

The tools ecosystem empowers application developers to realize their innovative ideas by leveraging domain expertise and understanding of their customers, bypassing the need for a deep technical understanding of the underlying infrastructure. The current ecosystem is structured around four key components: models, data, evaluation platform, and deployment.

Models

Let’s delve into the cornerstone of this ecosystem, Foundation Models (FMs). FMs are designed to emulate human-like reasoning, effectively serving as the ‘brain’ of the operation. Interestingly, developers have a plethora of FM options to choose from, each with variations in output quality, modalities, context window size, and trade-offs between cost and latency. More often than not, the optimal design necessitates a mix of several FMs in a single application.

Developers therefore have the freedom to choose from models built by various providers. If they decide to use an open-source model (like Stable Diffusion, GPT-J, FLAN-T5, or Llama), numerous hosting services are available to them. Advancements spearheaded by companies like OctoML now allow developers to host models not only on servers but also on edge devices and even directly in browsers. This improves privacy and security while reducing latency and cost. Developers can also train their own language models using a range of emerging platforms, many of which offer open-source models that are ready to use out of the box.

Data

Large Language Models (LLMs) indeed bring a great deal of power to the table. However, they come with an inherent limitation – their reasoning is confined to the information they were trained on. This can be restricting for developers who need to base decisions on their own unique data sets. Fortunately, there are strategies and tools available to connect and operationalize this crucial data:

- Data loaders: A variety of data, from a multitude of sources, can be integrated into the system by developers. Data loaders facilitate this process, allowing for the inclusion of both structured data from databases and unstructured data. Enterprises are utilizing these tools to construct personalized content generation and semantic search applications that incorporate unstructured data from PDFs, documents, presentations, and more, all through advanced ETL pipelines, like those offered by Unstructured.io.

- Vector databases: As LLM applications grow, particularly in the realm of semantic search systems and conversational interfaces, developers increasingly seek to vectorize an array of unstructured data using LLM embeddings. These vectors are then stored for efficient querying – a task performed by vector databases. Vector databases are available in both closed and open-source formats and differ in terms of supported modalities, performance, and the overall developer experience. Several standalone offerings exist alongside those integrated into existing database systems.

- Context window: To personalize model outputs, developers often resort to the technique known as retrieval-augmented generation. This technique allows developers to incorporate data directly into the prompt, achieving a higher level of personalization without needing to modify the model’s weights via fine-tuning. LangChain and LlamaIndex are notable projects offering data structures that facilitate the integration of data into the model’s context window.
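The vector-database and retrieval-augmented generation ideas above can be sketched in a few lines. This is a toy illustration only: the `embed` function is a bag-of-words stand-in for real LLM embeddings, and `ToyVectorStore` and `build_prompt` are hypothetical names, not the API of LangChain, LlamaIndex, or any vector database.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: word counts (a stand-in for real LLM embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Stores (vector, text) pairs and returns the texts closest to a query."""

    def __init__(self):
        self.items = []

    def add(self, text):
        self.items.append((embed(text), text))

    def search(self, query, k=1):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def build_prompt(question, store, k=1):
    """Retrieval-augmented prompt: retrieved text is placed in the context window."""
    context = "\n".join(store.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


store = ToyVectorStore()
store.add("Refunds are processed within 14 days of a return.")
store.add("Our office is closed on public holidays.")
store.add("Premium plans include priority support.")

prompt = build_prompt("How long do refunds take?", store)
print(prompt)
```

The model's weights are never touched here; personalization comes entirely from what is retrieved and placed in the prompt.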

Evaluation platform

With large language models (LLMs), developers often face a balancing act between model performance, the cost of inference, and latency. They can improve on these fronts by iterating on prompts, fine-tuning the model, or switching between model providers. Measuring performance, however, is complicated by the probabilistic nature of LLMs and the non-determinism of the tasks themselves.

Nevertheless, there is a variety of evaluation tools available to developers that assist in determining optimal prompts, tracking both offline and online experimentation, and overseeing model performance once in production:

- Prompt engineering: An assortment of No Code / Low Code tools are available to aid developers in iterating prompts and examining a range of model outputs. These platforms provide a valuable resource for prompt engineers to zero in on the most effective prompts for their application’s experience.

- Experimentation: Machine Learning (ML) engineers who seek to experiment with prompts, hyperparameters, fine-tuning, or the models themselves have access to numerous tools for tracking their trials. Experimental models can be appraised offline in a staging environment using benchmark datasets, human labelers, or even LLMs themselves. However, offline methods have their limits. For production-level performance evaluation, developers can employ tools like Statsig. Data-driven experimentation and swift iteration cycles are pivotal in building a defensible product.

- Observability: After an application is deployed, it becomes essential to monitor the model’s performance, cost, latency, and behavior over time. Observability platforms can inform future iterations of prompts and model experimentation. For example, WhyLabs has recently introduced LangKit, offering developers insight into the quality of model outputs, safeguards against harmful usage patterns, and checks for responsible AI usage.
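As a rough illustration of offline prompt experimentation against a benchmark dataset, the sketch below scores two prompt templates by exact match. Everything in it is hypothetical: `fake_model` is a hard-coded stand-in for a real LLM call, and the two-item benchmark is invented purely to make the example runnable.

```python
def fake_model(prompt):
    """Hypothetical stand-in for an LLM call; swap in a real client in practice."""
    question = prompt.rsplit("Q: ", 1)[-1]
    answers = {"2+2": "4", "capital of France": "Paris"}
    answer = answers.get(question, "unknown")
    # Pretend the model is only terse when the prompt asks for it.
    if "one word" in prompt:
        return answer
    return f"The answer is {answer}."


def exact_match_score(template, benchmark, model):
    """Fraction of benchmark items whose output equals the expected answer."""
    hits = sum(
        model(template.format(question=q)).strip() == expected
        for q, expected in benchmark
    )
    return hits / len(benchmark)


benchmark = [("2+2", "4"), ("capital of France", "Paris")]
templates = {
    "plain": "Q: {question}",
    "terse": "Answer in one word. Q: {question}",
}

scores = {name: exact_match_score(t, benchmark, fake_model) for name, t in templates.items()}
best = max(scores, key=scores.get)
print(scores, best)
```

Exact match is only one possible metric; human labelers or LLM-based grading, as mentioned above, would replace the comparison inside `exact_match_score`.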

Deployment

The final stage is deployment, where developers aspire to transfer their applications into production. They can opt to self-host these applications or resort to third-party services for deployment. Tools like Fixie offer a seamless route for developers to construct, share, and launch AI applications.

In essence, the generative AI stack provides a comprehensive platform supporting the creation, testing, and deployment of AI applications, consequently reshaping how we create and function.

Different types of generative AI models

There are several types of generative AI, including:

Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are a type of deep learning model used for generative tasks, such as creating new images, music, or other types of content.

A GAN consists of two neural networks: a generator and a discriminator. The generator is responsible for generating new data samples intended to be similar to the original training data, whereas the discriminator tries to distinguish between the generated samples and real ones.

During training, the generator takes random noise as input and tries to turn it into data samples similar to the training data. The discriminator receives both real and generated samples and tries to distinguish between them. The generator uses the feedback from the discriminator to improve its samples, and the discriminator adjusts its weights to better distinguish between real and generated data. This process continues iteratively until the generator can produce samples that are indistinguishable from real data.
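The alternating updates described above can be sketched with a deliberately tiny example. The setup is an assumption chosen for brevity: the "training data" are numbers drawn from a normal distribution with mean 4, the generator is a linear function of the noise, the discriminator is a single logistic unit, and the gradients are written out by hand. Real GANs use deep networks and an autodiff library, and even this toy version is not guaranteed to converge.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: G(z) = a * z + b
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        x_real = rng.gauss(4.0, 1.0)  # a sample of "real" data
        z = rng.gauss(0.0, 1.0)       # random noise fed to the generator
        x_fake = a * z + b

        # Discriminator step: descend -[log D(x_real) + log(1 - D(x_fake))].
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        w -= lr * ((d_real - 1.0) * x_real + d_fake * x_fake)
        c -= lr * ((d_real - 1.0) + d_fake)

        # Generator step: descend -log D(G(z)), using the discriminator's feedback.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (d_fake - 1.0) * w  # gradient of -log D(x) with respect to x
        a -= lr * grad_x * z
        b -= lr * grad_x
    return (a, b), (w, c)


generator, discriminator = train_toy_gan()
print("generator (a, b):", generator)
print("discriminator (w, c):", discriminator)
```

The generator never sees the real data directly; its only training signal is the gradient that flows back through the discriminator.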

One of the key advantages of GANs is their ability to generate highly realistic samples that are difficult to distinguish from real data. GANs have been used to create realistic images of faces, landscapes, and even art. They can also be used in other applications, such as generating music or speech.

However, training GANs can be challenging, as it requires balancing the generator and discriminator networks and preventing the model from getting stuck in a local minimum. There are also issues with mode collapse, where the generator produces only a limited subset of the possible outputs.

Despite these challenges, GANs are useful for generative tasks and can be applied in various fields.

Variational Autoencoders (VAEs)

Variational Autoencoders (VAEs) are a type of generative model that can learn a latent representation of input data by training an encoder and decoder network in an unsupervised manner. They are often used for image generation, data compression and feature extraction tasks.

The basic idea behind VAEs is to learn a probabilistic distribution of the data being fed to the model. The encoder network maps the input data to a probability distribution in the latent space, which can be thought of as a compressed representation of the input data. The decoder network then maps the latent representation back to the original input data. The goal is to minimize the reconstruction error between the input and output data while also regularizing the latent distribution to ensure that it follows a pre-defined probability distribution, such as a Gaussian.

The key difference between VAEs and traditional autoencoders is that VAEs learn a probabilistic distribution in the latent space, whereas traditional autoencoders learn a deterministic mapping. This means that VAEs can generate new data samples by sampling from the learned latent distribution, which can be useful for tasks such as image generation.

To train a VAE, we first define a loss function that consists of two terms: a reconstruction loss that measures the difference between the input and output data and a regularization loss that measures the difference between the learned latent distribution and the pre-defined probability distribution. The overall objective is to minimize this loss function using stochastic gradient descent.
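A minimal sketch of the two loss terms follows, under assumptions not stated in the text: the pre-defined distribution is a standard Gaussian, the encoder outputs a mean and log-variance per latent dimension, and reconstruction error is measured as squared error. The encoder and decoder networks themselves are omitted, and the function names are illustrative.

```python
import math
import random


def reconstruction_loss(x, x_hat):
    """Squared error between the input and its reconstruction."""
    return sum((xi - yi) ** 2 for xi, yi in zip(x, x_hat))


def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, diag(exp(log_var))) to N(0, I)."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))


def sample_latent(mu, log_var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1) (the reparameterization trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def vae_loss(x, x_hat, mu, log_var):
    """Total loss: reconstruction term plus regularization term."""
    return reconstruction_loss(x, x_hat) + kl_to_standard_normal(mu, log_var)


rng = random.Random(0)
z = sample_latent([0.5, -0.5], [0.0, 0.0], rng)
print(z, vae_loss([1.0, 2.0], [0.9, 2.1], [0.5, -0.5], [0.0, 0.0]))
```

The regularization term is zero exactly when the encoder's output matches the standard Gaussian, and grows as the learned latent distribution drifts away from it.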

Autoregressive models

Autoregressive models are a class of statistical models that use a regression approach to model the dependencies between consecutive observations in a time series. These models are widely used in various applications, such as natural language processing, speech recognition, and time-series analysis.

In autoregressive models, the next value in a time series is predicted based on the previous values. The model assumes that the current observation is dependent on the previous observations, which are captured by a set of parameters learned during training. Specifically, the model takes the previous values of the time series as inputs and predicts the next value. This process is repeated for each time step in the series.

Autoregressive models come in different forms, depending on how many variables they track. If the model predicts a single variable from its own past values, it is a univariate autoregressive model. If it models several variables jointly, predicting each from the past values of all of them, it is a multivariate (vector) autoregressive model.

Autoregressive models can be further categorized based on the order of autoregression. For example, a first-order autoregressive model (AR(1)) only considers the previous observation in the time series to predict the next value. A second-order autoregressive model (AR(2)) considers the two previous observations and so on.

Autoregressive models are often trained using maximum likelihood estimation, which means finding the parameters that maximize the likelihood of the observed data. In practice, this is done by minimizing the negative log-likelihood of the model, typically with gradient descent.
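As a small worked example, the sketch below fits a first-order model, AR(1), of the form x_t = phi * x_{t-1} + noise. It assumes Gaussian noise and no intercept, in which case minimizing the negative log-likelihood (conditioned on the first observation) reduces to a least-squares problem with a closed-form answer, so no gradient descent is needed. The function names are illustrative.

```python
import random


def simulate_ar1(phi, n, noise_std=1.0, seed=0):
    """Generate n values where each depends on the previous one plus noise."""
    rng = random.Random(seed)
    series = [0.0]
    for _ in range(n - 1):
        series.append(phi * series[-1] + rng.gauss(0.0, noise_std))
    return series


def fit_ar1(series):
    """Least-squares estimate of phi in x_t = phi * x_{t-1} + noise."""
    num = sum(prev * cur for prev, cur in zip(series, series[1:]))
    den = sum(prev * prev for prev in series[:-1])
    return num / den


def predict_next(series, phi):
    """One-step-ahead prediction from the most recent observation."""
    return phi * series[-1]


series = simulate_ar1(phi=0.7, n=5000)
phi_hat = fit_ar1(series)
print("estimated phi:", phi_hat)
print("next value prediction:", predict_next(series, phi_hat))
```

An AR(2) model would add a second coefficient for x_{t-2}; language models apply the same idea to tokens, predicting each token from the ones before it.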

Flow-based models

Flow-based models are a type of generative AI model that learns a mapping from a simple distribution, such as a Gaussian distribution, to the more complex distribution of the input data. This is achieved by applying a series of invertible transformations to the base distribution. The result of these transformations is a bijective mapping between the input and output distributions, which allows the model to generate new samples from the input distribution. Since the transformations are invertible, both the forward and inverse mappings can be computed efficiently.

Flow-based models are trained using maximum likelihood estimation, which involves minimizing the negative log-likelihood of the data under the model. In other words, the model is trained to minimize the difference between the output distribution of the model and the true distribution of the input data.

To do this, the log-likelihood of the data under the model is computed, which measures how well the model is able to generate new samples that match the input data. This log-likelihood is then used to calculate the gradients, which are used to update the parameters of the model through backpropagation.

One of the key advantages of flow-based models is that they can generate new data samples by sampling from the base distribution and then applying the learned transformations, which lets the model produce samples that closely resemble the training data. Because the transformations are invertible, the model can also map any data point back to the base distribution and compute its exact likelihood. This makes flow-based models useful for tasks such as anomaly detection, where outliers are flagged by their low likelihood under the learned distribution, and for likelihood-based data compression. Note that invertibility means the latent space has the same dimensionality as the data, not a lower one.
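The simplest possible flow makes these ideas concrete. The sketch below assumes one-dimensional data and a single invertible affine transformation of a standard Gaussian; `AffineFlow1D` is an illustrative name, and real flow-based models stack many learned transformations. The log-likelihood uses the change-of-variables formula: the base log-density of the inverted point minus the log of the absolute scale.

```python
import math
import random


class AffineFlow1D:
    """Invertible map x = scale * z + shift applied to z ~ N(0, 1)."""

    def __init__(self, scale, shift):
        self.scale = scale
        self.shift = shift

    def forward(self, z):
        """Base distribution -> data space (used for sampling)."""
        return self.scale * z + self.shift

    def inverse(self, x):
        """Data space -> base distribution (used for likelihood)."""
        return (x - self.shift) / self.scale

    def log_prob(self, x):
        """Exact log-likelihood via the change-of-variables formula."""
        z = self.inverse(x)
        log_base = -0.5 * (z * z + math.log(2.0 * math.pi))
        return log_base - math.log(abs(self.scale))

    def sample(self, rng):
        return self.forward(rng.gauss(0.0, 1.0))


def negative_log_likelihood(flow, data):
    """Average negative log-likelihood, the quantity minimized in training."""
    return -sum(flow.log_prob(x) for x in data) / len(data)


data = [2.0, 3.0, 4.0]
good_fit = AffineFlow1D(scale=1.0, shift=3.0)
poor_fit = AffineFlow1D(scale=1.0, shift=0.0)
print(negative_log_likelihood(good_fit, data), negative_log_likelihood(poor_fit, data))
print(good_fit.sample(random.Random(0)))
```

A point with an unusually low `log_prob` under a fitted flow is a candidate outlier, which is how the anomaly-detection use case works.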

Rule-based models

Rule-based models are a class of models that use logical rules to represent knowledge and make predictions about a given domain. These models are widely used in various applications, such as expert systems, decision support systems and natural language processing.

In rule-based models, a set of rules are defined based on the knowledge and expertise of domain experts. These rules are usually expressed as if-then statements, where the antecedent specifies the conditions under which the rule is applicable, and the consequent specifies the action to be taken when the conditions are met. For example, a rule in a medical expert system might state, “If a patient has a fever and a cough, then they may have pneumonia.”
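The if-then structure can be sketched as data plus a small matching function. The first rule mirrors the pneumonia example above; the second rule, and the names `RULES` and `diagnose`, are made up for illustration and carry no medical authority.

```python
# Each rule pairs an antecedent (required conditions) with a consequent.
RULES = [
    (frozenset({"fever", "cough"}), "possible pneumonia"),
    (frozenset({"sneezing", "itchy eyes"}), "possible allergy"),  # hypothetical rule
]


def diagnose(symptoms, rules):
    """Return the consequent of every rule whose conditions are all present."""
    findings = []
    for conditions, conclusion in rules:
        if conditions <= symptoms:  # antecedent is a subset of observed symptoms
            findings.append(conclusion)
    return findings


print(diagnose({"fever", "cough", "headache"}, RULES))
print(diagnose({"fever"}, RULES))
```

Because every conclusion traces back to a named rule, the output can be explained to a domain expert, and adding knowledge means adding a rule rather than retraining.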

The rules in a rule-based model can be represented using different types of logic, such as propositional logic or first-order logic. In propositional logic, the rules are expressed in simple logical propositions such as “if A is true, then B is true.” In first-order logic, the rules are expressed in terms of objects, variables and quantifiers.

Rule-based models can be used for various tasks such as classification, prediction, and diagnosis. The rules assign a class label to an input based on its features in classification tasks. In prediction tasks, the rules are used to predict the system’s future state based on its current state. In diagnosis tasks, the rules identify the causes of a particular symptom or condition.

One of the main advantages of rule-based models is their ability to represent complex knowledge and expertise transparently and interpretably. This makes them particularly useful in domains such as medicine and law, where the decisions made by the model need to be explainable to human experts. Additionally, rule-based models can handle noisy and incomplete data and can be easily updated as new knowledge becomes available.

How does generative AI work?

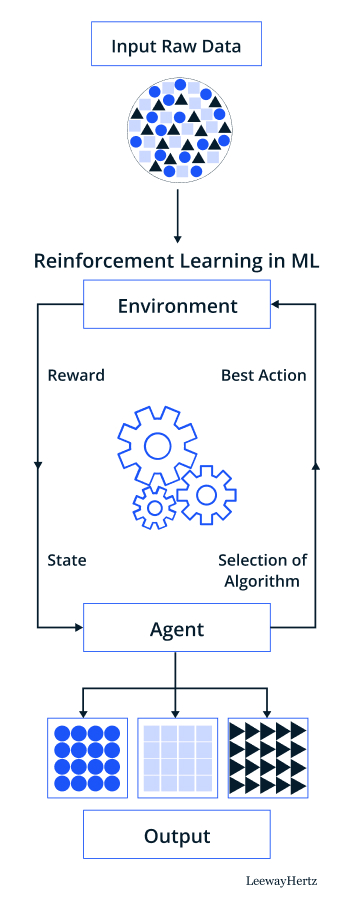

Generative AI creates new digital content, such as images, videos, audio, text, or code, using unsupervised learning methods. The inner workings of generative AI can vary from solution to solution, but certain characteristics are common among them.

Generative AI differs from discriminative AI, which classifies inputs. Discriminative AI aims to judge incoming inputs based on what it learned during training. Generative AI models, on the other hand, aim to create synthetic data.

During the training phase, generative AI models are given a limited number of parameters relative to the amount of training data. This constraint forces the model to form its own judgments about the most significant characteristics of the data. In other words, the model learns to identify the underlying patterns and features of the training data, which it can then use to generate new content similar to the original data.

Generative AI models can use different techniques to generate new content, such as generative adversarial networks, transformer-based models, or variational auto-encoders.

Generative AI is often semi-supervised, meaning it uses both labeled and unlabeled training data to learn the underlying patterns and features of the dataset. This allows the model to understand the dataset better and generate new content beyond what is explicitly provided.

Generative AI technology comes in various forms, but three primary types are commonly used:

- Generative Adversarial Networks or GANs: This technology can generate visual or multimedia outputs from image and language inputs. GANs consist of two neural networks that work together: a generator and a discriminator. The generator generates synthetic data, while the discriminator evaluates the authenticity of the data. The two networks are trained together, with the generator attempting to create realistic outputs that fool the discriminator into thinking they are real.

- Transformer-based models: This type of technology, like Generative Pre-trained Transformer (GPT) language models, uses data gathered from the internet to generate textual material, such as website articles, press releases, and whitepapers. Transformer models use a self-attention mechanism that allows them to capture the relationships between different words in a sentence. This approach has led to impressive results in natural language processing tasks such as language translation, question-answering, and text generation.

- Variational auto-encoders: This type of technology consists of an encoder and a decoder. The encoder codes the input data into a compressed code, while the decoder decompresses this code and reproduces the original information. Variational auto-encoders can be trained on a wide range of data types, including images, audio, and text. They have shown promising results in generating high-quality synthetic data, as well as in data compression and representation learning.
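The self-attention mechanism mentioned for transformer-based models can be sketched as scaled dot-product attention. This is a simplified illustration: in a real transformer the queries, keys, and values are learned linear projections of the token embeddings, whereas here the same toy vectors are passed in directly for all three.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence of vectors."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this token's query to every token's key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Output is a weighted average of the value vectors.
        outputs.append(
            [sum(wj * v[i] for wj, v in zip(w, values)) for i in range(len(values[0]))]
        )
    return outputs, weights


# Three toy "word" vectors standing in for token embeddings.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(x, 3) for x in row])
```

Each row of `weights` shows how strongly one token attends to every other token, which is how the model captures relationships between words in a sentence.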

Role of natural language processing in generative AI

Generative AI is a rapidly developing area that is changing how we engage with technology. At the heart of this development is natural language processing (NLP), a subfield of artificial intelligence that enables computers to understand and interpret human language. NLP is a critical component of generative AI, allowing for the creation of new text and speech data such as product descriptions, news articles, and synthetic voices for text-to-speech applications.

NLP technologies, such as tokenization, part-of-speech tagging, named entity recognition, sentiment analysis, machine translation, and text summarization, form the foundation of many generative AI applications. Recent advancements in deep learning have led to the development of powerful neural NLP models like RNNs, BERT, GPT-3, XLNet, RoBERTa, T5, and ELECTRA, which have significantly improved the accuracy and efficiency of generative AI applications that rely on NLP technologies.

RNNs are neural networks designed to process sequential data like text or speech, making them well-suited for language modeling, speech recognition, machine translation, and chatbots. Transformer models like BERT and GPT-3 have taken NLP to the next level, leveraging large-scale pre-training and fine-tuning to achieve state-of-the-art performance on a wide range of NLP tasks.

With the help of these neural NLP models, generative AI can generate new text data with higher accuracy and relevance, create more advanced chatbots and virtual assistants that can understand and respond to natural language queries with greater accuracy and efficiency and even generate synthetic voices that sound human-like.

Applications of generative AI

Generative AI has significantly changed and impacted how digital content is created and consumed. Its numerous applications span various fields, from creative industries to medical applications, financial services and chatbots.

Creative industries

Generative AI is bringing significant changes to the creative industries of art, music, and fashion. With the help of machine learning algorithms, artists and designers can create fresh and innovative content that would be challenging or impossible to create manually. For instance, generative AI has opened up new avenues for digital art, such as deep dream images, which rely on convolutional neural networks to generate abstract and surreal images. In music, generative AI can be used to generate entirely new musical styles or create original compositions. In the realm of fashion, generative AI can design new clothing styles or generate digital models that can be used for virtual try-on applications.

Industrial applications of generative AI

The impact of generative AI extends beyond the tech industry. The technology is improving industrial settings, particularly manufacturing and product design, by introducing new approaches to how things are designed and produced. By harnessing generative AI, engineers can create highly efficient and cost-effective designs that reduce development time and resources while improving the overall quality of the product.

One of the most notable applications of generative AI is in aircraft component design. Engineers can use this technology to optimize the design of aircraft components, creating lighter and more fuel-efficient designs that meet the industry’s stringent safety standards.

In the automotive manufacturing sector, generative AI has also proven to be a valuable asset. By leveraging generative AI, engineers can design components such as engine parts or suspension systems, resulting in more efficient and reliable vehicles built to last.

Medical applications of generative AI

In medicine, generative AI is transforming how we approach drug discovery and medical imaging, leading to better patient outcomes.

By leveraging generative AI, medical professionals can gain new insights into complex diseases and develop innovative treatments that were previously out of reach. For instance, generative AI can analyze the chemical properties of existing drugs and generate new chemical structures that have the potential to be more effective. This capability allows researchers to design more precise drugs with improved efficacy and fewer side effects.

Generative AI can also be a game-changer in medical imaging. By analyzing X-rays or MRI scans, generative AI can assist doctors in identifying potential health issues more quickly and accurately, enabling timely interventions that can ultimately save lives. This technology can also help medical professionals predict disease progression, monitor treatment effectiveness and develop personalized treatment plans.

Financial services

Generative AI is also changing the financial services industry. It gives banks, hedge funds, and other financial institutions new ways to manage risk and detect fraud more effectively.

By harnessing the power of generative AI, financial institutions can analyze large financial data sets, gain valuable insights into customer behavior, and identify potential risks and fraudulent activities. This capability helps financial institutions protect themselves from potential threats and enhances customer trust by ensuring the safety and security of their financial transactions.
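One simple way to picture how a model of "normal" behavior can surface suspicious activity is to fit a probability distribution to past transactions and flag new ones that the distribution considers very unlikely. The sketch below is a minimal illustration under that assumption: a single Gaussian over log-amounts stands in for a far richer generative model, and the function names, threshold, and sample data are all hypothetical.

```python
import math
import statistics


def fit_gaussian(values: list[float]) -> tuple[float, float]:
    """Estimate mean and standard deviation from observed values."""
    return statistics.mean(values), statistics.stdev(values)


def log_likelihood(x: float, mean: float, std: float) -> float:
    """Log density of x under a normal distribution."""
    return -0.5 * math.log(2 * math.pi * std ** 2) - (x - mean) ** 2 / (2 * std ** 2)


def flag_unusual(history: list[float], new_amounts: list[float],
                 threshold_sigmas: float = 3.0) -> list[float]:
    """Return new amounts that are less likely than a point
    `threshold_sigmas` standard deviations away from the historical mean."""
    mean, std = fit_gaussian([math.log(a) for a in history])
    cutoff = log_likelihood(mean + threshold_sigmas * std, mean, std)
    return [a for a in new_amounts
            if log_likelihood(math.log(a), mean, std) < cutoff]


# Made-up transaction amounts for one customer.
history = [20.0, 25.0, 30.0, 22.0, 27.0, 35.0, 24.0, 29.0, 31.0, 26.0]
print(flag_unusual(history, [28.0, 5000.0]))
```

Production fraud systems model many more signals than the amount alone, but the principle is the same: learn what typical data looks like, then treat low-probability events as candidates for review.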

Hedge funds, in particular, can benefit from generative AI. The technology lets them quickly analyze vast amounts of financial data, extract key insights, and make data-driven decisions in real time. They can benchmark against comparables and generate reports in a matter of minutes, reducing reliance on manual data processing by financial analysts.

With the help of intelligent applications, generative AI solutions enable faster and more accurate decision-making, reducing the need for human intervention and enabling financial institutions to stay ahead of the curve.

Chatbots and voice bots

The widespread use of chatbots and voice bots is changing how businesses interact with customers, making interactions more efficient and personalized. Generative AI has made these bots considerably more capable, since they can now produce responses on the fly rather than relying only on scripted replies.

Chatbots have a wide range of applications, from e-commerce to customer service, and are particularly useful in providing customers with relevant information about products, helping them make purchasing decisions, and providing post-purchase support. With the help of generative AI, chatbots can be programmed to respond to customer queries naturally and personally, enhancing customer satisfaction and building brand loyalty.

Similarly, voice bots offer a hands-free and intuitive way for customers to interact with businesses. Voice bots can be used in various environments, such as cars, homes, and workplaces, providing users instant access to information, entertainment, and assistance without manual interaction. With the help of generative AI, voice bots can be programmed to understand natural language, respond to user queries, and even anticipate user needs, making interactions seamless and effortless.

Generative AI use cases

Generative AI has a wide range of applications in various industries, including marketing, healthcare, music, and engineering. Let’s dive into the details of each use case:

- Image generation for illustrations: With generative AI, anyone can create graphics based on a specified context, topic, or place by converting words into visuals. This application can be beneficial for marketing campaigns, helping designers produce realistic graphics that are visually appealing.

- Image-to-photo conversion: This use case finds applicability in the healthcare industry, as it enables the creation of realistic depictions based on rudimentary pictures or sketches. It is also used in map design and visualizing X-ray results.

- Image-to-image generation: This application involves altering an image’s external characteristics, such as color, material, or shape, while preserving its fundamental properties. One example is transforming daytime photographs into night-time ones, which can benefit the retail and video/image surveillance industries.

- Music experience optimization: Generative AI can produce fresh audio material for ads and creative purposes, improving the listening experience on social media or music platforms like Spotify. This application can also generate short clips or audio snippets for various purposes.

- Text generation: This use case involves generating dialogues, headings, and ads, and it has enormous applications in the marketing, gaming, and communications sectors. Generative AI can produce real-time chats with customers or create product details, blogs, and social media materials.

- Equipment design: Generative AI can generate machine components and subassemblies, optimizing designs with material efficiency, clarity, and manufacturing efficiency in mind. In some cases, the design can be fed into a 3D printing machine, allowing for the automatic production of parts.

- Coding: Generative AI can generate code from natural-language descriptions, making it a useful tool for both professional developers and non-technical individuals. This application represents the next step in the evolution of no-code application development, making software development more accessible and efficient.
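The text generation use case in the list above can be illustrated with a toy example. The sketch below builds a bigram (Markov chain) model, which is a drastically simplified relative of the large language models used in practice: it learns which word tends to follow which, then generates new text one word at a time. The function names and the tiny corpus are assumptions made for illustration only.

```python
import random
from collections import defaultdict


def build_bigram_model(text: str) -> dict[str, list[str]]:
    """Map each word to the list of words that followed it in the text."""
    words = text.split()
    model: dict[str, list[str]] = defaultdict(list)
    for current, following in zip(words, words[1:]):
        model[current].append(following)
    return model


def generate(model: dict[str, list[str]], start: str,
             max_words: int, seed: int = 0) -> str:
    """Generate text by repeatedly sampling a follower of the last word."""
    rng = random.Random(seed)
    output = [start]
    while len(output) < max_words:
        followers = model.get(output[-1])
        if not followers:  # dead end: the last word was never followed by anything
            break
        output.append(rng.choice(followers))
    return " ".join(output)


corpus = "the cat sat on the mat and the cat ran to the door"
model = build_bigram_model(corpus)
print(generate(model, start="the", max_words=8))
```

Modern language models replace the lookup table with a neural network that conditions on a much longer context, but the generation loop is recognizably the same: predict the next token, append it, and repeat.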

The future of generative AI

Generative AI is a game-changer in the field of AI because it enables computers to be creative and generate something new, which sets it apart from most AI systems that are primarily used as classifiers. Its impact is being felt not only in the art and marketing industries but also in fields such as pharmaceuticals, medtech and biotech, where it has the potential to drive new discoveries and solutions that can benefit humankind.

In the pharmaceutical industry, for example, generative AI can be used to discover new drugs and treatments by analyzing vast amounts of data and generating new hypotheses based on that data. It can help speed up the process of drug discovery by reducing the time and resources needed to identify promising compounds, which could ultimately lead to faster development and approval of new medicines.

Similarly, in the field of medtech, generative AI can be used to create personalized treatment plans for patients based on their medical history, symptoms, and other factors. It can also be used to design medical devices and implants tailored to patients’ needs, supporting more effective treatments and improved outcomes.

Another area where generative AI is making an impact is in the field of architecture and design. Architects and designers can use generative AI to create new and innovative designs that are optimized for specific functions, such as energy efficiency or structural stability, leading to the development of buildings and structures that are more sustainable and environmentally friendly.

Generative AI is also used in the gaming industry to create more realistic and immersive environments. It can generate new game content, characters, and storylines that are unique and engaging, providing players with a more personalized gaming experience.

As generative AI continues to evolve and improve, its applications are expected to expand further, with new use cases emerging across industries. With the advent of advanced machine learning models like OpenAI’s GPT-4, we can expect to see more generative AI advancements that could transform how we use the internet and interact with digital content.

Endnote

Generative AI has brought about significant improvements in business operations, leading to a major shift in the way companies function. By automating simple tasks, creating high-quality content, and even helping to address complex medical problems, generative AI has already begun to change industries across the board.

To make the most of generative AI, it’s crucial to consider the different types of models available, such as GANs, VAEs, and autoregressive models, and understand how each is suited to different applications. By doing so, businesses can unlock new possibilities and drive innovation in their respective fields.

AI has numerous benefits beyond these obvious ones, and failing to adopt this technology could pose significant risks for businesses. Missed opportunities and inefficiencies are two such risks that could set companies behind their competitors. By being open-minded about the potential of generative AI and recognizing humans’ vital role in the process, businesses can stay ahead of the curve and unlock new possibilities for growth and innovation.
