Generative adversarial networks (GANs): a deep dive into the architecture and training process

Recent AI development has produced several groundbreaking inventions, from ChatGPT to the Action Transformer model. Among them, Generative Adversarial Networks (GANs) are a particularly significant development in machine learning that has captured the attention of researchers and industry professionals alike. GANs use deep learning methods, such as convolutional neural networks, to learn the underlying patterns and regularities of a given dataset and use that knowledge to create new synthetic examples that can be hard to tell apart from real ones. A GAN pairs two models: the generator takes random input and generates a new example that resembles the original dataset, whereas the discriminator evaluates whether an example is real or fake. The two models are trained in a zero-sum game, where the generator tries to produce more realistic samples to fool the discriminator while the discriminator tries to differentiate between real and fake samples. Ideally, the process continues until the discriminator can no longer reliably tell generated samples from real ones.

One of the most impressive features of Generative Adversarial Networks is their ability to generate photorealistic images and videos. They have been used successfully in various image-to-image translation tasks, such as converting day-to-night or winter-to-summer scenes. They are also used in generating photorealistic images of objects, scenes, and people that are difficult to distinguish from real images. This has significant implications for fields such as advertising, entertainment, and gaming, where creating high-quality content is crucial.

However, Generative Adversarial Networks have also raised concerns about their potential misuse, particularly in creating fake media content. In principle, they can learn to approximate a wide range of data distributions, which includes producing highly convincing fake images and videos that can be used to deceive people. This has serious implications for society, as such content can be used to spread misinformation, damage reputations, and manipulate public opinion.

In this article, we will take a deep dive into the architecture and training process of Generative Adversarial Networks to understand their capabilities and limitations.

- What is a Generative Adversarial Network (GAN)?

- An overview of the Generative Adversarial Network architecture

- Types of Generative Adversarial Networks

- How to train Generative Adversarial Networks?

- Future of Generative Adversarial Networks

What is a Generative Adversarial Network (GAN)?

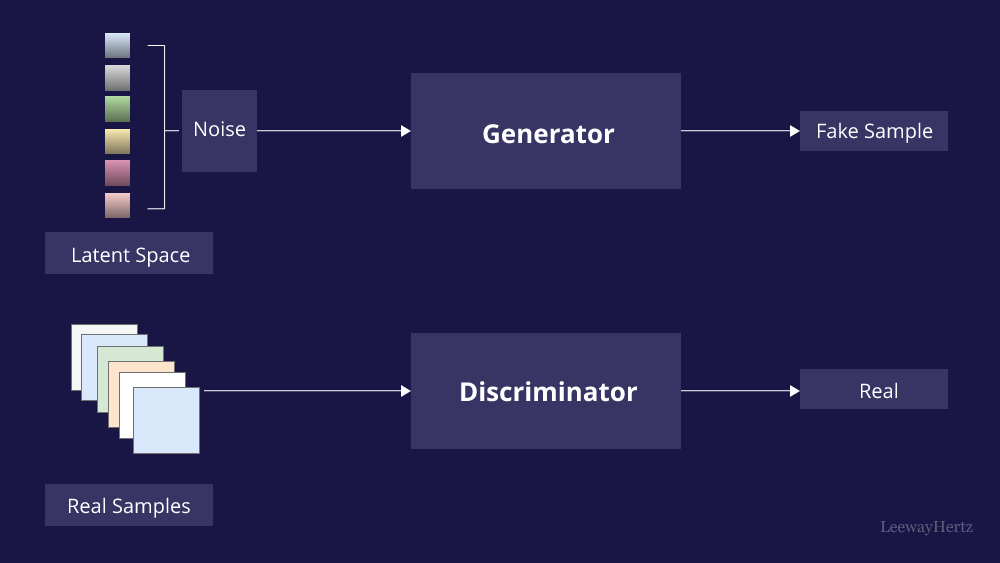

GANs, short for Generative Adversarial Networks, are a type of generative model based on deep learning. They were first introduced in the 2014 paper “Generative Adversarial Networks” by Ian Goodfellow and his colleagues. GANs are typically trained in an unsupervised fashion, meaning they learn to produce new data from unlabeled examples rather than from explicit labels describing what to generate. To understand GANs, some knowledge of Convolutional Neural Networks (CNNs) is helpful. A CNN is commonly used to classify images, that is, to map an image to a label. A GAN, in contrast, is divided into two parts: the Generator and the Discriminator. The Discriminator is similar to a CNN classifier: it is trained on both real and generated data and learns to recognize what real data looks like. Its output is a single value between 0 and 1, the estimated probability that the input is real, with 1 standing for real and 0 for fake. The Generator, on the other hand, works like a CNN in reverse. It takes a random noise vector as input and upsamples it into new data. The Generator’s goal is to create realistic data that can fool the Discriminator into thinking it’s real. Ideally, the Generator keeps improving its output until the Discriminator can no longer distinguish between real and generated data.

Convolutional Neural Networks (CNNs) are the preferred models for both the generator and discriminator in Generative Adversarial Networks (GANs), typically used with image data. This is because the original concept of GANs was introduced in computer vision, where CNNs had already shown remarkable progress in tasks such as face recognition and object detection. By modeling image data, the generator’s input space, also known as the latent space, provides a compressed representation of the image or photograph set used to train the GAN model. This makes it easy for developers or users of the model to assess the quality of the output, as it is in a visually assessable form. This attribute, among others, has likely contributed to the focus on CNNs for computer vision applications and the incredible advancements made by GANs compared to other generative models, whether they are based on deep learning or not.

The training process for GANs can be considered a competition between the Generator and the Discriminator. The Generator tries to create better and more realistic data, while the Discriminator tries to differentiate between real and fake data. Through this competition, the Generator keeps improving until it can produce data indistinguishable from real data.

Overall, GANs are a generative model that can create new data without being explicitly told what to generate. They consist of a Generator that produces data and a Discriminator that evaluates its realism. Through this adversarial training process, the Generator learns to create more realistic data, resulting in a generative model capable of producing high-quality output.

An overview of the Generative Adversarial Network architecture

The architecture of a Generative Adversarial Network (GAN) consists of two main components, the Generator and the Discriminator, which are trained in an adversarial manner. The Generator is responsible for generating new samples intended to be similar to the training data, while the Discriminator is responsible for distinguishing between the generated samples and the real training data. Let’s look at each component in turn.

Generator architecture

The Generator takes random noise as input and generates synthetic samples that are intended to be similar to the real training data. The Generator typically consists of one or more deep neural networks, often using convolutional layers to generate images or recurrent layers to generate sequential data. The output of the Generator is fed to the Discriminator, which is then trained to distinguish between the generated samples and the real training data.

The generator is a crucial building block in the GAN architecture, and understanding its role and structure is essential to comprehend the GAN training process. The generator architecture comprises three components: the latent space, the generator, and the image generation section. The generator samples from the latent space and creates a relationship between the latent space and the output. We then create a neural network that maps from the input (latent space) to the output (image for most examples).

During the adversarial training process, we connect the generator and discriminator together in a model and train the generator to produce images that are hard to distinguish from real ones. Ultimately, the generator produces the output images we see after the entire training process. In practice, training a GAN mostly means training the generator; in many setups, the discriminator is first trained on its own for a few epochs before the adversarial training process begins.

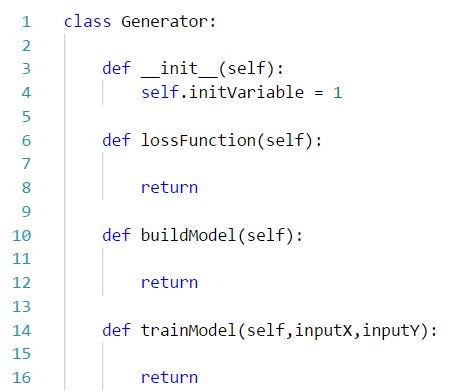

In a typical implementation, each component of the GAN architecture is defined as a class, and the generator class has three primary parts: the class template, the loss function, and the buildModel function.

The loss function defines a custom loss for training the model if needed, and the buildModel function constructs the actual neural network model. Specific training sequences for a model will go inside this class, although the internal training methods are likely to be used only for the discriminator. Understanding this code structure is essential to developing these components.

Discriminator architecture

The discriminator in the GAN architecture is a deep neural network that distinguishes between real and fake images by producing a scalar value between 0 and 1, indicating the probability that the input is real. It is trained to be an accurate binary classifier, minimizing the binary cross-entropy loss between its predictions and the true labels. The discriminator’s architecture usually includes a Convolutional Neural Network (CNN), and it is trained on both real and generated data so that its training stays balanced with the generator’s.

The discriminator is a crucial component of GAN architecture as it acts as an adaptive loss function, learning and adapting to the underlying distribution of data rather than relying on heuristic techniques. It evaluates the authenticity of both real and generated images and gradually learns to distinguish between them, allowing the generator to generate new, previously unseen data from the latent space. The generator is trained to minimize the log loss of the discriminator’s output for generated samples, aiming to generate realistic images while minimizing the difference between the generated and real training data. The training process for GANs involves iteratively training the generator and discriminator in an adversarial manner until convergence, allowing the generation of new data similar to the training data.
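The two loss terms described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function names and the sample probabilities are hypothetical, and the generator loss shown is the commonly used non-saturating variant, in which generated samples are scored against the label "real".

```python
import math

def bce(prob, label):
    """Binary cross-entropy for one prediction; prob is clamped to avoid log(0)."""
    eps = 1e-12
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))

def discriminator_loss(d_real, d_fake):
    """Mean BCE with real samples labelled 1 and generated samples labelled 0."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: BCE of D(G(z)) against the label 'real'."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs (probability that each input is real).
d_real = [0.9, 0.8, 0.95]
d_fake = [0.1, 0.3, 0.2]
print("discriminator loss:", discriminator_loss(d_real, d_fake))
print("generator loss:", generator_loss(d_fake))
```

The generator loss falls as the discriminator assigns higher probabilities to generated samples, which is the sense in which the discriminator acts as an adaptive loss function.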

Types of Generative Adversarial Networks

The different types of GANs are typically determined based on the specific modifications or extensions made to the original GAN architecture. These modifications can be made to the generator, the discriminator, or both, aiming to improve the stability, quality, or efficiency of the GANs. Some common types of GANs include:

Conditional GANs

Conditional GANs (cGANs) are a type of Generative Adversarial Network (GAN) that allows the generator network to be conditioned on an additional input vector. This conditioning can generate images corresponding to a specific class, category, or attribute. The architecture of a cGAN is similar to that of a traditional GAN, with a condition vector concatenated with the input noise vector fed into the generator. The discriminator network is also modified to take in both the generated image and the condition vector as inputs.

During training, the generator is given a random noise vector and a condition vector corresponding to a particular class or attribute. The generator then generates an image that is intended to match the condition vector. The discriminator is trained to distinguish between real images and fake images generated by the generator, considering both the image and the condition vector. The loss function for a cGAN is the standard GAN loss evaluated on image-condition pairs, which encourages the generator to produce realistic images that can fool the discriminator. Because the discriminator sees the condition, a realistic image that does not match its condition can still be rejected. Some variants add an explicit conditioning loss, such as an auxiliary classification term, which encourages the generated images to match the desired condition vector. This term can take different forms depending on the problem being solved and the nature of the condition vector.
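As a rough illustration of the conditioning step, the sketch below builds a generator input by concatenating a noise vector with a one-hot condition vector. The function names, the noise dimension of 8, and the ten classes are assumptions chosen for the example.

```python
import random

def one_hot(label, num_classes):
    """Encode a class index as a one-hot condition vector."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]

def generator_input(noise_dim, label, num_classes, rng):
    """Concatenate Gaussian noise with the condition vector."""
    noise = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return noise + one_hot(label, num_classes)

rng = random.Random(0)
vec = generator_input(noise_dim=8, label=3, num_classes=10, rng=rng)
print(len(vec))  # 18 = 8 noise values + 10 condition values
```

The discriminator would receive the same condition vector alongside each image, so that it judges image-condition pairs rather than images alone.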

One of the main advantages of cGANs is that they allow for greater control over the generation process, as the generator can be conditioned on specific attributes or classes. This can be particularly useful in applications such as image editing or synthesis, where the goal is to generate images with specific properties or characteristics.

Some examples of applications of cGANs include image-to-image translation, where the goal is to generate images of one domain that match the style or content of another domain, and text-to-image synthesis, where the goal is to generate images based on textual descriptions.

Deep Convolutional GANs

Deep Convolutional GANs (DCGANs) are a type of Generative Adversarial Network (GAN) that use deep convolutional neural networks (CNNs) in both the generator and discriminator networks, making DCGANs particularly effective at generating high-quality images with fine details and textures.

The architecture of a DCGAN typically consists of a stack of convolutional layers in both networks: transposed (fractionally strided) convolutions in the generator to upsample, and strided convolutions in the discriminator to downsample. The original DCGAN guidelines also recommend batch normalization and avoiding fully connected hidden layers. The generator takes as input a noise vector and produces an image, while the discriminator takes as input an image and outputs a probability that represents whether the image is real or fake. During training, the generator is optimized to produce images that can fool the discriminator, while the discriminator is optimized to correctly distinguish between real images and fake images generated by the generator. The loss function for a DCGAN is the standard GAN loss, which encourages the generator to produce realistic images; the training process is stabilized mainly through architectural choices such as batch normalization rather than through extra loss terms.
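To make the upsampling path concrete, the sketch below applies the standard output-size formula for a transposed convolution (dilation 1, no output padding) to a hypothetical five-layer generator. The kernel, stride, and padding values are assumptions in the style of common DCGAN implementations, not settings taken from this article.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Output side length of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel

# (kernel, stride, padding) per layer; values are illustrative assumptions.
layers = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

size = 1  # the noise vector is treated as a 1x1 feature map
sizes = [size]
for kernel, stride, padding in layers:
    size = conv_transpose_out(size, kernel, stride, padding)
    sizes.append(size)

print(sizes)  # [1, 4, 8, 16, 32, 64]
```

Each stride-2 layer doubles the spatial resolution, so five layers are enough to go from a 1x1 input to a 64x64 image.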

One of the main advantages of DCGANs is that they are able to generate high-quality images with fine details and textures, which can be difficult to achieve with GANs built only from fully connected layers. This is partly due to the use of convolutional layers, which allow the network to capture spatial features and patterns in the input images.

DCGANs have been used in various applications, including image synthesis, image editing, and image-to-image translation. They have also been combined with other deep learning techniques, such as variational autoencoders, to create more powerful generative models.

However, training DCGANs comes with some challenges related to stability and mode collapse. Mode collapse occurs when the generator produces a limited set of images rather than exploring the full range of possible images. Various techniques can be used to address these challenges, including modifying the loss function, adjusting the architecture of the networks, and using different regularization techniques.

Wasserstein GANs

Wasserstein GANs (WGANs) are a type of Generative Adversarial Network (GAN) that use the Wasserstein distance (also known as the Earth Mover’s distance) as a measurement between the generated and real data distributions, providing several advantages over traditional GANs, which include improved stability and more reliable gradient information.

The architecture of a WGAN is largely the same as that of a traditional GAN, with a generator network that produces fake images and a second network that scores real and fake images. However, instead of outputting a probability, this second network, usually called a critic, outputs an unbounded real-valued score. The critic is trained to maximize the gap between its average score on real data and its average score on generated data and, as long as it is kept approximately Lipschitz-continuous (for example through weight clipping or a gradient penalty), this gap estimates the Wasserstein distance between the two distributions. The generator is optimized to minimize that estimated distance. It is worth mentioning that the Wasserstein distance provides a smoother training signal than the binary cross-entropy used in traditional GANs, which contributes to a more stable training process.
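For intuition, the Wasserstein distance has a simple closed form in one dimension: for two samples of equal size it is the mean absolute gap between their sorted values. The sketch below uses that special case, together with the critic loss in its usual "fake minus real" form. The function names and data are hypothetical, and a real WGAN estimates the distance with a trained critic rather than by sorting.

```python
def wasserstein_1d(xs, ys):
    """W1 distance between two equal-sized 1-D samples: mean gap between sorted values."""
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes equal sample sizes")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)

def critic_loss(scores_real, scores_fake):
    """WGAN critic loss; minimizing it widens the real-minus-fake score gap."""
    mean_real = sum(scores_real) / len(scores_real)
    mean_fake = sum(scores_fake) / len(scores_fake)
    return mean_fake - mean_real

real = [0.0, 1.0, 2.0, 3.0]
near = [0.5, 1.5, 2.5, 3.5]
far = [10.0, 11.0, 12.0, 13.0]
print(wasserstein_1d(real, near))  # 0.5
print(wasserstein_1d(real, far))   # 10.0
```

Unlike a saturated classifier output, the distance keeps growing as the generated sample moves further from the real one, which is why it tends to give the generator a usable gradient even when the two distributions barely overlap.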

One of the main advantages of WGANs is that they provide more reliable gradient information during training, helping to avoid problems such as vanishing gradients and mode collapse. In addition, the use of the Wasserstein distance provides a clearer measure of the quality of the generated images, as it directly measures the distance between the generated and real data distributions.

WGANs have been used in various applications, including image synthesis, image-to-image translation, and style transfer. They are often combined with additional techniques such as gradient penalty, which improves stability and performance.

However, some challenges are associated with using WGANs, particularly related to the computation of the Wasserstein distance and the need for careful tuning of hyperparameters. There are also some limitations to the Wasserstein distance as a measure of distance between distributions, which can impact the model’s performance in certain situations.

CycleGANs

CycleGANs are a type of Generative Adversarial Network (GAN) used for image-to-image translation tasks, such as converting an image from one domain to another. Unlike earlier image-to-image translation approaches, CycleGANs do not require paired training data, making them more flexible and easier to apply in real-world settings.

The architecture of a CycleGAN consists of two generators and two discriminators. One generator takes as input an image from one domain and produces an image in the other domain, whereas the other generator takes as input the generated image and maps it back to the original domain. The two discriminators are used to distinguish between real and fake images in each domain. During training, the generators are optimized both to fool the discriminators and to minimize the difference between the original image and its reconstruction after a round trip through both generators (the cycle-consistency loss), while the discriminators are optimized to distinguish between real and fake images correctly. This process is repeated in both directions, creating a cycle between the two domains.
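The round-trip idea can be sketched with toy "generators" that simply shift pixel values. The functions below are hypothetical stand-ins for trained networks, and the L1 distance is one common choice for the cycle-consistency term.

```python
def l1(a, b):
    """Mean absolute difference between two flattened images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def cycle_loss(image_a, image_b, g_ab, g_ba):
    """Cycle-consistency loss: A -> B -> A and B -> A -> B should return the input."""
    forward = l1(g_ba(g_ab(image_a)), image_a)
    backward = l1(g_ab(g_ba(image_b)), image_b)
    return forward + backward

# Toy stand-ins for trained generators (assumptions for illustration only).
def brighten(image):          # domain A -> domain B
    return [p + 0.2 for p in image]

def darken(image):            # domain B -> domain A, exact inverse of brighten
    return [p - 0.2 for p in image]

def darken_too_little(image): # an imperfect inverse
    return [p - 0.1 for p in image]

image_a = [0.1, 0.4, 0.7]
image_b = [0.3, 0.6, 0.9]
print(cycle_loss(image_a, image_b, brighten, darken))             # close to 0
print(cycle_loss(image_a, image_b, brighten, darken_too_little))  # close to 0.2
```

The loss is near zero only when the two mappings undo each other, which is what allows training without paired examples.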

Because no paired examples are needed, CycleGANs can be used, for example, to translate images from one style to another or to generate synthetic images similar to real images in a particular domain.

CycleGANs have been used in various applications, including image style transfer, object recognition, and video processing. They are also used to generate high-quality images from low-quality inputs, such as converting a low-resolution image to a high-resolution image.

However, CycleGANs come with certain challenges, such as the complexity of the training process and the need for careful tuning of hyperparameters. In addition, there is a risk of mode collapse, where the generator produces a limited set of images that do not fully capture the diversity of the target domain.

StyleGAN

StyleGAN is yet another GAN architecture widely recognized for its ability to generate high-quality and highly realistic images. It was introduced by Tero Karras et al. from NVIDIA in 2019 and has since undergone several advancements, including the release of StyleGAN2. StyleGAN focuses on generating images with diverse and controllable styles. Unlike traditional GANs, StyleGAN operates at multiple scales, enabling it to generate images with fine details and coherent structures.

At the heart of StyleGAN are “style modulation layers,” which allow precise control over the visual style of the generated images. When generating an image, StyleGAN starts from a learned constant input and, at each layer, combines a “style” and random noise to shape the final result. This ensures that each image produced has its own unique characteristics.
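In the original StyleGAN, style modulation was implemented with adaptive instance normalization (AdaIN): each feature channel is normalized to zero mean and unit variance, then rescaled and shifted by values derived from the style. The sketch below shows that operation on a single flattened channel; the function name and the scale and bias values are assumptions for illustration.

```python
import math

def adain(channel, style_scale, style_bias, eps=1e-8):
    """Normalize one feature channel, then apply a style-specific scale and bias."""
    mean = sum(channel) / len(channel)
    var = sum((v - mean) ** 2 for v in channel) / len(channel)
    std = math.sqrt(var + eps)
    return [style_scale * (v - mean) / std + style_bias for v in channel]

features = [1.0, 2.0, 3.0, 4.0]
styled = adain(features, style_scale=2.0, style_bias=5.0)
print(styled)
```

After the operation, the channel's mean and spread are set by the style rather than by the incoming features, which is how a style can control the statistics of each layer.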

What sets StyleGAN apart is its introduction of a “mapping network.” This network converts random numbers into a special representation called an “intermediate latent space.” This new representation helps the network better understand and manipulate the different attributes needed to create diverse and natural-looking images.

By combining the style modulation layers with the mapping network, StyleGAN produces high-quality images that are often indistinguishable from real photographs. This breakthrough has opened up exciting possibilities for AI-generated art, design, and various creative applications.

The StyleGAN architecture has undergone several improvements and refinements in subsequent versions (such as StyleGAN2 and StyleGAN3) to address issues like artifacts and improve the quality and disentanglement of the generated images. Researchers and developers have drawn inspiration from StyleGAN and have explored extensions and applications based on its unique architecture, leading to further advancements and novel uses in the field of generative AI.

How to train Generative Adversarial Networks?

The two components of a GAN, the Generator and the Discriminator, are trained simultaneously in a minimax game in which the generator tries to create realistic samples to fool the discriminator while the discriminator tries to classify real and generated samples correctly. The main steps in training a GAN are as follows:

Data preprocessing

Data preprocessing is crucial in machine learning, as it involves converting raw data into a format that can be effectively used and analyzed by machine learning algorithms. In the context of GANs, proper data preprocessing ensures that the generator and discriminator models receive correctly formatted and cleaned input data for training.

Data preprocessing involves several important steps, including cleaning, normalization, transformation, and augmentation. Data cleaning involves the removal of irrelevant or noisy data from the dataset, while data normalization ensures that the input features have similar ranges of values so that no particular feature dominates. Data transformation involves converting the input data into a format that machine learning algorithms can easily process. Data augmentation helps increase the training set’s diversity and improve the model’s performance by creating new training examples through transformations such as cropping, flipping, and rotating images.

In the case of image datasets, data cleaning may involve the removal of blurry or corrupted images, while data transformation may include resizing or converting images to grayscale or a specific file format. The importance of data preprocessing cannot be overstated, as it can significantly impact the performance and accuracy of machine learning models.
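Two of these steps, normalization and augmentation, can be sketched for a tiny grayscale image stored as nested lists. Scaling 8-bit pixels to the range -1 to 1 is a common convention when the generator ends in a tanh layer; the helper names and the sample image are assumptions made for this example.

```python
def normalize(image):
    """Scale 8-bit pixel values from [0, 255] to [-1, 1]."""
    return [[p / 127.5 - 1.0 for p in row] for row in image]

def horizontal_flip(image):
    """Augmentation: mirror each row of the image."""
    return [row[::-1] for row in image]

image = [
    [0, 128, 255],
    [255, 128, 0],
]
normalized = normalize(image)
flipped = horizontal_flip(image)
print(normalized[0][0], normalized[0][2])  # -1.0 1.0
print(flipped[0])                          # [255, 128, 0]
```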

Defining Generator architecture

The generator of a Generative Adversarial Network (GAN) takes a random noise vector as input and transforms it into new samples that approximate the real data distribution. Developing a successful GAN model therefore depends on defining the generator’s architecture carefully, which involves several steps:

- Input layer: The generator takes a random noise vector as input, typically drawn from a Gaussian or uniform distribution.

- Hidden layers: The generator uses a series of hidden layers to transform the noise vector into an output that resembles the real data. Different neural network architectures, including fully connected layers, convolutional layers, or recurrent layers are used to implement these hidden layers.

- Output layer: The final layer of the generator produces the output, and the number of neurons in this layer should match the dimensions of the real data.

- Activation functions: The activation function used in the output layer depends on the generated data type. For instance, a sigmoid activation function is commonly used to produce output values between 0 and 1 for image data, while tanh is common when pixel values are scaled to the range -1 to 1.

- Loss function: The binary cross-entropy loss is typically applied to the discriminator’s predictions on generated samples, so the generator is rewarded when its output is classified as real.

- Optimization: The generator is trained using an optimizer such as stochastic gradient descent (SGD) or Adam.

- Hyperparameters: Several hyperparameters need to be defined to train the generator, such as the learning rate, batch size, number of epochs, and the size of the hidden layers.
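The input, hidden, and output layers listed above can be sketched as a small fully connected generator in plain Python. The weights here are random and untrained, and the layer sizes (a 4-dimensional latent vector, 8 hidden units, 6 output values) are arbitrary assumptions; the point is only to show how noise flows through to an output of the right shape and range.

```python
import math
import random

def init_layer(n_in, n_out, rng):
    """Random weights and zero biases for a fully connected layer."""
    weights = [[rng.gauss(0.0, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum per output neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def generate(noise, hidden_layer, output_layer):
    """Noise -> hidden layer (ReLU) -> output layer (tanh, values in [-1, 1])."""
    hidden = [max(0.0, v) for v in dense(noise, *hidden_layer)]
    return [math.tanh(v) for v in dense(hidden, *output_layer)]

rng = random.Random(0)
latent_dim, hidden_dim, data_dim = 4, 8, 6
hidden_layer = init_layer(latent_dim, hidden_dim, rng)
output_layer = init_layer(hidden_dim, data_dim, rng)

noise = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]  # Gaussian input
sample = generate(noise, hidden_layer, output_layer)
print(len(sample))  # 6, matching the assumed data dimension
```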

Defining Discriminator architecture

In a GAN, the discriminator is a neural network responsible for classifying its input, whether a real sample or one produced by the generator, as real or fake.

To define the architecture of the discriminator in GAN, we first need to determine the input and output dimensions of the discriminator. The input dimension is the size of the image or data that the discriminator is going to classify, while the output dimension is typically a binary output indicating whether the input is real or fake. After determining the input and output dimensions, we can start designing the neural network architecture of the discriminator. The discriminator typically uses convolutional neural network (CNN) layers to extract features from the input data. These features are then fed into a fully connected layer that outputs the binary classification of real or fake. The number of CNN layers and the size of the filters used in these layers are hyperparameters that can be tuned based on the size and complexity of the input data. In general, the number of CNN layers can range from a few to dozens, depending on the complexity of the dataset.

Additionally, batch normalization layers can be added between the CNN layers to improve the stability and performance of the discriminator. Dropout layers can also be used to prevent the overfitting of the discriminator. The loss function of the discriminator in a GAN is typically binary cross-entropy loss, which measures the difference between the predicted and actual output labels.
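How many convolutional layers are needed depends largely on the input size. The sketch below applies the standard convolution output-size formula to a hypothetical discriminator for 64x64 images; the kernel, stride, and padding values are assumptions chosen so that each layer halves the resolution.

```python
def conv_out(size, kernel, stride, padding):
    """Output side length of a standard convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1

# (kernel, stride, padding) per layer; the last layer collapses 4x4 to 1x1.
layers = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]

size = 64
sizes = [size]
for kernel, stride, padding in layers:
    size = conv_out(size, kernel, stride, padding)
    sizes.append(size)

print(sizes)  # [64, 32, 16, 8, 4, 1]
```

The final 1x1 output would then pass through a sigmoid to give the probability that the input is real.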

Training the generator and discriminator

In a Generative Adversarial Network (GAN), training the discriminator involves using both real and fake data to teach the binary classifier to distinguish between the two. The steps include drawing a batch of real training data, generating fake data with the current generator, combining the real and fake data with their labels, and updating the discriminator weights using backpropagation.

Conversely, training the generator requires optimizing its weights to produce synthetic data that can deceive the discriminator. The steps include generating fake data, feeding it to the discriminator, calculating the generator loss, backpropagating the loss through the discriminator to update only the generator weights, and repeating the process.

Finally, training both components together alternates between the two: generate random noise vectors, create mixed batches of real and fake data, train the discriminator, generate new batches of fake data, set the labels for the fake data to “real,” and update the generator weights using the generator loss. This adversarial training process tends to produce increasingly realistic synthetic data over time.

The process of training the generator and discriminator together in a GAN is often referred to as adversarial training since the two components work in opposition. This process can be challenging, as the generator and discriminator must learn to adapt to each other’s behavior to produce high-quality results. However, when done correctly, GANs can produce very realistic images that are difficult to distinguish from real ones.
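The alternating updates can be sketched on a deliberately tiny problem: one-dimensional data, a linear generator G(z) = a*z + b, and a logistic discriminator D(x) = sigmoid(w*x + c), with gradients written out by hand. Everything here (the models, the learning rate, the target distribution centered at 4.0) is an assumption made to keep the example self-contained; real GANs use deep networks and an automatic differentiation library.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def mean(values):
    return sum(values) / len(values)

def discriminator_step(w, c, real, fake, lr):
    """One gradient step on -[log D(x) + log(1 - D(G(z)))] for D(x) = sigmoid(w*x + c)."""
    d_real = [sigmoid(w * x + c) for x in real]
    d_fake = [sigmoid(w * x + c) for x in fake]
    grad_w = (mean([(d - 1.0) * x for d, x in zip(d_real, real)])
              + mean([d * x for d, x in zip(d_fake, fake)]))
    grad_c = mean([d - 1.0 for d in d_real]) + mean(d_fake)
    return w - lr * grad_w, c - lr * grad_c

def generator_step(a, b, w, c, noise, lr):
    """One gradient step on -log D(G(z)) for G(z) = a*z + b (fakes labelled 'real')."""
    fake = [a * z + b for z in noise]
    d_fake = [sigmoid(w * x + c) for x in fake]
    grad_a = mean([(d - 1.0) * w * z for d, z in zip(d_fake, noise)])
    grad_b = mean([(d - 1.0) * w for d in d_fake])
    return a - lr * grad_a, b - lr * grad_b

rng = random.Random(0)
a, b, w, c = 1.0, 0.0, 0.0, 0.0
for step in range(200):
    real = [rng.gauss(4.0, 1.0) for _ in range(32)]      # batch of real data
    noise = [rng.gauss(0.0, 1.0) for _ in range(32)]
    fake = [a * z + b for z in noise]                    # batch of generated data
    w, c = discriminator_step(w, c, real, fake, lr=0.05)
    noise = [rng.gauss(0.0, 1.0) for _ in range(32)]     # fresh noise for the generator
    a, b = generator_step(a, b, w, c, noise, lr=0.05)

print("generator parameters:", a, b)
```

Note that the generator step reads the discriminator's parameters but only updates its own, which mirrors the "update only the generator weights" step in the description above.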

Evaluating the GAN

Evaluating the performance of a GAN during training can be challenging because the ultimate goal is to generate realistic samples that closely resemble the real data distribution. Therefore, evaluating the quality of the generated samples is subjective and depends on the specific data domain.

One common method of evaluating the GAN is to use a combination of qualitative and quantitative measures. Qualitative measures involve visually inspecting and comparing the generated samples to real ones. For example, if the GAN generates images, we can compare the generated images to a sample of real images from the training set to see how realistic they look. If the GAN generates text, we can compare it to a sample of real text from the training set to see how coherent and grammatically correct it is.

Quantitative measures involve using metrics to evaluate the generated samples’ quality numerically. Some commonly used quantitative measures for GANs include:

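- Inception score: This measures the diversity and quality of generated samples using a pre-trained image classifier. It computes the KL divergence between the classifier’s predicted class distribution for each generated sample and the marginal class distribution over all generated samples, then exponentiates the average. The score is high when individual predictions are confident (quality) and the predictions as a whole cover many classes (diversity). It does not use real samples directly.

- Fréchet Inception Distance (FID): This measures the similarity between the distributions of real and generated samples in feature space. It takes the activations of an intermediate layer of a pre-trained Inception classifier, summarizes each set by its mean and covariance, and computes the Fréchet distance between the two resulting Gaussians. Lower values indicate closer distributions.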

- Precision and recall: These are metrics from the field of information retrieval and can be used to measure the quality of generated text or images. Precision measures how many generated samples are relevant (i.e., similar to real samples), while recall measures how many relevant samples are generated.

- Wasserstein distance: This measures the distance between the real and generated distributions in terms of their underlying probability densities. It is commonly used in Wasserstein GANs (WGANs) to train the GAN.
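As an illustration of the first metric, the sketch below computes an Inception score from a list of class-probability vectors. In practice these probabilities come from a pre-trained Inception classifier run on generated images; here they are hypothetical values supplied by hand, and the function name is an assumption.

```python
import math

def inception_score(probs):
    """exp of the mean KL divergence between each p(y|x) and the marginal p(y)."""
    n = len(probs)
    k = len(probs[0])
    marginal = [sum(row[j] for row in probs) / n for j in range(k)]
    kl_sum = 0.0
    for row in probs:
        # Terms with p == 0 contribute nothing to the KL divergence.
        kl_sum += sum(p * math.log(p / m) for p, m in zip(row, marginal) if p > 0)
    return math.exp(kl_sum / n)

# Confident predictions spread evenly over 3 classes: score close to 3.
confident_and_diverse = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Uninformative predictions: score of 1, the minimum.
uncertain = [[1 / 3, 1 / 3, 1 / 3]] * 3

print(inception_score(confident_and_diverse))
print(inception_score(uncertain))
```

The score ranges from 1 up to the number of classes, so it rewards both confident per-sample predictions and coverage of many classes.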

It is important to note that evaluating the GAN during training is an iterative process. As the GAN is trained, the quality of generated samples may improve, but the GAN may also suffer from mode collapse or other issues. Therefore, monitoring the GAN during training is important to ensure that it learns effectively and produces high-quality outputs. One way to monitor a GAN is by examining the loss functions of the generator and discriminator networks, which can provide insights into the training progress and identify potential problems. For example, if the discriminator loss falls close to zero while the generator loss keeps rising, it may indicate that the generator is not producing realistic samples. Because the two losses depend on each other, they are best read as a rough signal alongside sample inspection rather than as a direct measure of quality.

Other methods to monitor a GAN include visual inspection of the generated samples, measuring the diversity of the generated samples, and testing the GAN on a validation dataset. It is also important to tune the hyperparameters of the GAN, such as the learning rate and batch size, to optimize its performance.

Tuning the GAN

Tuning the GAN during training is important for achieving good results. It involves optimizing various hyperparameters that can affect the training process and the quality of the generated data.

Here are some important hyperparameters that are tuned during the GAN training process:

- Learning rate: The learning rate determines how fast the model adjusts its parameters during training. It is a crucial hyperparameter that can impact the stability of GAN training. If the learning rate is too high, the GAN may become unstable and fail to converge. On the other hand, if the learning rate is too low, the training process may become slow and take longer to generate good-quality samples.

- Batch size: Batch size is the number of training examples used in one training iteration. It determines the number of samples generated by the generator and the number of samples used by the discriminator to learn. A larger batch size can speed up the training process, but it may also result in overfitting and reduce the diversity of generated samples. A smaller batch size can help the GAN converge better, but it may also increase the training time.

- Loss function: The choice of the loss function is essential in the GAN training process. The generator and the discriminator have different loss functions that should be carefully designed to optimize the training process. For example, the generator’s loss function may use an adversarial loss, which measures how well the generated samples fool the discriminator. In contrast, the discriminator’s loss function can use a binary cross-entropy loss, which measures how well it distinguishes between real and fake samples.

- Architecture: The GAN architecture includes the number of layers, neurons, and type of activation functions used in both the generator and the discriminator networks. So, choosing an architecture that balances the GAN’s complexity and stability is crucial. Complex architectures can generate more diverse and high-quality samples, but they may also lead to overfitting which can affect the stability of the GAN.
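One way to keep these hyperparameters organized is a small configuration object from which variations are derived for a search. The sketch below is illustrative only: the class name is hypothetical, the epoch count is an arbitrary placeholder, and the default values follow the settings commonly cited from the DCGAN paper (learning rate 0.0002, Adam beta1 0.5, batch size 128, 100-dimensional noise), which should be treated as starting points rather than recommendations for any particular dataset.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class GANConfig:
    """Hypothetical container for the hyperparameters discussed above."""
    latent_dim: int = 100         # size of the noise vector
    batch_size: int = 128
    learning_rate: float = 2e-4
    adam_beta1: float = 0.5
    epochs: int = 50              # arbitrary placeholder

baseline = GANConfig()

# A small grid of variations around the baseline.
candidates = [
    replace(baseline, learning_rate=lr, batch_size=bs)
    for lr in (1e-4, 2e-4)
    for bs in (64, 128)
]

for cfg in candidates:
    print(cfg)
```

Making the configuration immutable means each candidate is a distinct, comparable record, which helps when results from several runs are logged side by side.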

Future of Generative Adversarial Networks

Generative Adversarial Networks (GANs) have already showcased their potential for various applications, including image and video synthesis, natural language processing, and drug discovery. With continuous advancements and new techniques, GANs will likely find even more exciting applications. Some potential future applications of GANs are:

- Virtual reality and augmented reality: GANs can generate realistic 3D models of objects and environments for use in virtual and augmented reality applications, resulting in more immersive and lifelike experiences, from gaming to architectural design.

- Fashion and design: GANs can create new designs and patterns for clothing, accessories, and home decor, which could facilitate more creative and efficient design processes as well as personalized products based on individual preferences.

- Healthcare: GANs can help improve disease diagnosis and treatment by analyzing and synthesizing medical images and assisting drug discovery and development by generating new molecules with desired properties.

- Robotics: GANs can generate synthetic data for training robots, which could enhance their performance in real-world environments. They can also create new robot designs and behaviors.

- Marketing and advertising: GANs can produce realistic images and videos of products and services for use in marketing and advertising campaigns, resulting in more engaging and effective content and personalized content based on individual preferences.

- Art and music: GANs can generate new forms of art and music by synthesizing existing styles and creating entirely new ones, resulting in more innovative and experimental creative processes and personalized content based on individual tastes.

Current research trends in GANs are as follows:

- Improving stability: GAN training can be unstable, which can lead to mode collapse and other issues. To address this, researchers are exploring techniques such as spectral normalization, weight normalization, and self-attention mechanisms.

- Addressing bias: GANs can be biased towards certain features or patterns in the data, limiting their generality and accuracy. Researchers are exploring new techniques to address this, such as fairness constraints and adversarial debiasing.

- Conditional generation: Researchers are exploring new techniques for generating outputs based on additional inputs, such as class labels or attributes. Examples include auxiliary classifiers and feeding label information directly to the generator and discriminator.

- High-fidelity generation: Researchers are exploring new techniques, such as progressive growing and attention mechanisms, to improve the realism and fidelity of GAN-generated images and videos.

- Applications to new domains: Researchers are exploring new applications of GANs to domains such as music generation, text-to-image synthesis, and speech synthesis.

- Interpreting and understanding GANs: Researchers are exploring new techniques, such as visualization methods and disentanglement methods, to interpret and understand the intricate patterns generated by GANs.

Endnote

Although the architecture and training process of GANs are complex, it is essential to understand how to optimize their performance for specific applications. By delving into the architecture and training process of GANs, we have gained valuable insights into how these models work and how to train them effectively. We have also seen how the generator and discriminator components work together to produce realistic data similar to the training data.

As GANs continue to improve, their applications will undoubtedly expand, providing exciting new opportunities for data generation and other fields.

Hence, understanding the architecture and training process of GANs is essential for anyone who wants to unlock their full potential and harness their power to create new and innovative solutions for real-world problems. So, let’s continue to explore and innovate with GANs and see what else this exciting field has in store for us.
