Exploring diffusion models: A comprehensive guide to key concepts and applications

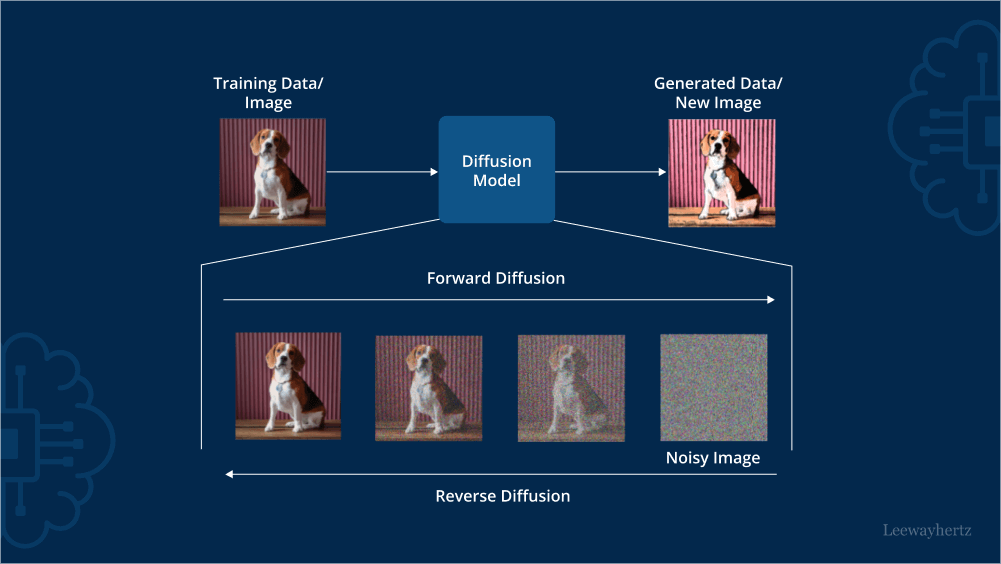

Diffusion models were first introduced to deep learning by Sohl-Dickstein et al. in the seminal 2015 paper “Deep Unsupervised Learning using Nonequilibrium Thermodynamics.” In 2019, Song et al. published “Generative Modeling by Estimating Gradients of the Data Distribution,” which uses the same principle but takes a different approach. Development and training of diffusion models gained momentum in 2020 with Ho et al.’s paper “Denoising Diffusion Probabilistic Models,” which has since become widely popular. Despite their relatively recent inception, diffusion models have quickly gained prominence and are now recognized as a vital component of machine learning.

Diffusion models are a class of deep generative models that broke the long-time dominance of Generative Adversarial Networks (GANs) in the challenging task of image synthesis. They have since been applied in a variety of domains, including computer vision, natural language processing, temporal data modeling, and multi-modal modeling, as well as in areas such as computational chemistry and medical image reconstruction.

Diffusion models work on the core principle of creating data comparable to the inputs they were trained on. They function by corrupting training data through the successive addition of Gaussian noise and then learning to recover the data by reversing this noising process. In this article, we look at the technical underpinnings of diffusion models: their key concepts, image and video generation techniques, how they compare with GANs, and their training and applications.

- What are diffusion models?

- How do diffusion models work? A detailed overview of the iterative process at work

- Diffusion models Vs GANs

- How are diffusion models used in image generation?

- How are diffusion models used in video generation?

- Examples of diffusion models for image generation

- Google Space-Time diffusion model: Unveiling Lumiere for realistic video synthesis

- How to train diffusion models?

- Applications of diffusion models

- Practical applications of diffusion models across diverse industries

- Navigating the future of diffusion models

What are diffusion models?

Diffusion models are a type of probabilistic generative model that transforms noise into a representative data sample. They function by adding noise to the training data and then learning to retrieve the data by reversing the noising process. Training involves iteratively denoising noised inputs and updating the model’s parameters so that the model learns the underlying probability distribution and the quality of generated samples improves.

Diffusion models take inspiration from the movement of gas molecules from high- to low-density areas, as observed in thermodynamics. This concept of increasing entropy in physics also applies to the loss of information due to noise in information theory. By building a model that can understand the systematic decay of information, it becomes possible to reverse the process and recover the data from the noise. Similar to VAEs, diffusion modeling optimizes an objective function by projecting data onto a latent space and then recovering it back to the initial state. However, instead of learning a single encoding of the data, diffusion models use a Markov chain to model a series of noise distributions and decode data by undoing the noise one level at a time.
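In the notation commonly used for DDPMs, one step of the noising chain and one step of the learned reverse chain can be written as follows. The symbols are assumptions introduced here, not taken from the article: $x_t$ is the sample after $t$ noising steps, $\beta_t$ is the noise variance added at step $t$, and $\mu_\theta$, $\Sigma_\theta$ are the mean and covariance predicted by the network.

```latex
q(x_t \mid x_{t-1}) = \mathcal{N}\!\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t I\right),
\qquad
p_\theta(x_{t-1} \mid x_t) = \mathcal{N}\!\left(x_{t-1};\ \mu_\theta(x_t, t),\ \Sigma_\theta(x_t, t)\right)
```

The first expression is fixed in advance; only the second contains learned parameters.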

Variants of diffusion models

Diffusion models can be categorized into three main variants: Denoising Diffusion Probabilistic Models (DDPMs), Score-based Generative Models (SGMs), and Stochastic Differential Equations (Score SDEs). Each formulation represents a distinct approach to modeling and generating data using diffusion processes.

DDPMs: A DDPM makes use of two Markov chains: a forward chain that perturbs data to noise, and a reverse chain that converts noise back to data. The former is typically hand-designed with the goal of transforming any data distribution into a simple prior distribution (e.g., a standard Gaussian), while the latter reverses the former by learning transition kernels parameterized by deep neural networks. New data points are generated by first sampling a random vector from the prior distribution and then performing ancestral sampling through the reverse Markov chain. DDPMs are commonly used to remove noise from visual data and have shown outstanding results in a variety of image denoising applications. They are also used to improve image and video processing techniques and the quality of visual production.
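The forward chain has a convenient property: a sample at any step can be drawn directly from the clean data without simulating every intermediate step. Below is a minimal sketch of that idea in plain Python. The linear schedule, its endpoints, and all function names are assumptions chosen for illustration, not something the article specifies.

```python
import math
import random

def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_1..beta_T (an assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t: running product of (1 - beta_s) for all s up to t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def noise_sample(x0, t, alpha_bars, rng):
    """Draw x_t given clean values x0 in one shot (t is a 0-based step index)."""
    signal = math.sqrt(alpha_bars[t])        # how much of the data survives
    noise = math.sqrt(1.0 - alpha_bars[t])   # how much Gaussian noise is mixed in
    return [signal * v + noise * rng.gauss(0.0, 1.0) for v in x0]

betas = linear_betas(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
x0 = [1.0, -0.5, 0.25]                        # toy "data point"
x_mid = noise_sample(x0, 499, alpha_bars, rng)
x_end = noise_sample(x0, 999, alpha_bars, rng)
```

With this schedule the surviving signal fraction shrinks steadily, so `x_end` is almost indistinguishable from a draw from the standard Gaussian prior.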

SGMs: The key idea of an SGM is to perturb data with a sequence of intensifying Gaussian noise and jointly estimate the score functions for all noisy data distributions by training a deep neural network conditioned on noise levels (called a Noise-Conditional Score Network, or NCSN). Samples are generated by chaining the score functions at decreasing noise levels with score-based sampling approaches, including stochastic differential equations, ordinary differential equations, and their various combinations. Training and sampling are completely decoupled in the formulation of score-based generative models, so a multitude of sampling techniques can be used once the score functions have been estimated. SGMs create new samples by learning the score function, that is, the gradient of the log density of the target distribution. Once estimated, the score function can be used to draw new data points from that distribution. SGMs have shown capabilities similar to GANs in producing high-quality images and videos.
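To make the sampling idea concrete, here is a small sketch of annealed Langevin dynamics on a one-dimensional toy problem. It assumes the data follow a Gaussian with known mean and spread, so the score at every noise level has a closed form and no network is needed; in a real SGM the `score` function would be the trained noise-conditional network. The noise levels, step size, and names are illustrative assumptions.

```python
import math
import random
import statistics

MU, S = 3.0, 0.5  # assumed toy data distribution N(MU, S^2)

def score(x, sigma):
    """Exact gradient of the log density of the data blurred with noise level sigma."""
    return -(x - MU) / (S * S + sigma * sigma)

def annealed_langevin(sigmas, steps_per_level, eps, rng):
    """Follow the score at decreasing noise levels, adding fresh noise at each step."""
    x = rng.uniform(-5.0, 5.0)                    # arbitrary starting point
    for sigma in sigmas:
        step = eps * (sigma / sigmas[-1]) ** 2    # larger steps at larger noise levels
        for _ in range(steps_per_level):
            x += 0.5 * step * score(x, sigma) + math.sqrt(step) * rng.gauss(0.0, 1.0)
    return x

# Ten noise levels decreasing geometrically from 5.0 to 0.1 (assumed values).
SIGMAS = [5.0 * (0.1 / 5.0) ** (i / 9) for i in range(10)]

rng = random.Random(0)
samples = [annealed_langevin(SIGMAS, 100, 0.002, rng) for _ in range(500)]
sample_mean = statistics.mean(samples)
sample_std = statistics.pstdev(samples)
```

Because the score is only used inside the sampler, the same estimated score could be plugged into a different sampler without retraining, which is the decoupling the paragraph above describes.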

SDEs: DDPMs and SGMs can be further generalized to the case of infinitely many steps or noise levels, where the perturbation and denoising processes are solutions to Stochastic Differential Equations (SDEs). This formulation is called Score SDE, as it leverages SDEs for noise perturbation and sample generation, and the denoising process requires estimating score functions of noisy data distributions. Outside machine learning, SDEs are used to model fluctuations in quantum physics, and financial professionals use them to price financial derivatives.
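In the usual continuous-time notation, the noising process and its reversal take the following form. The symbols are assumptions introduced here: $f$ is the drift, $g$ the diffusion coefficient, $w$ a Wiener process, $\bar{w}$ a Wiener process running backward in time, and $p_t$ the density of the noised data at time $t$.

```latex
\mathrm{d}x = f(x, t)\,\mathrm{d}t + g(t)\,\mathrm{d}w,
\qquad
\mathrm{d}x = \left[f(x, t) - g(t)^2\,\nabla_x \log p_t(x)\right]\mathrm{d}t + g(t)\,\mathrm{d}\bar{w}
```

The only unknown in the reverse equation is the score $\nabla_x \log p_t(x)$, which is why estimating score functions is enough to generate samples.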

How do diffusion models work? A detailed overview of the iterative process at work

The iterative process of diffusion models in AI is a fundamental aspect of their functioning, involving multiple iterations or steps to generate high-quality output. To understand this process, let’s delve deeper into how diffusion models work.

Diffusion models are generative models that aim to capture the underlying distribution of a given dataset. They learn to generate new samples that resemble the training data by iteratively refining their output. The process starts with an initial input or “noise” sample, which is passed through the model. The model then applies probabilistic transformations to iteratively update the sample, making it more closely resemble the desired output.

During each iteration, the diffusion model generates latent variables, which serve as intermediate representations of the data. These latent variables capture essential features and patterns present in the training data. The model then feeds these latent variables back into the diffusion model, allowing it to refine and enhance the generated output further. This feedback loop between the model and the latent variables enables the diffusion model to progressively improve the quality of the generated samples.

The iterative process typically involves applying learned denoising transformations to the latent variables, each of which approximately undoes one step of the noising process and so keeps the sample consistent with the statistical properties of the data distribution. By applying these transformations, the model can update the latent variables while preserving the key characteristics of the data. As a result, the generated samples become more coherent, realistic, and representative of the training data distribution.

The significance of the iterative process in diffusion models

The significance of the iterative process in diffusion models lies in its ability to generate high-quality output that closely resembles the training data. Through multiple iterations, the model learns to capture complex patterns, dependencies, and statistical properties of the data distribution. By iteratively refining the generated samples, diffusion models can overcome initial noise and improve the fidelity and accuracy of the output.

The iterative process allows diffusion models to capture fine-grained details, subtle correlations, and higher-order dependencies that exist in the training data. By repeatedly updating the latent variables and refining the generated samples, the model gradually aligns its output distribution with the target data distribution. This iterative refinement ensures that the generated samples become increasingly realistic and indistinguishable from the real data.

Moreover, the iterative process enables diffusion models to handle a wide range of data modalities, such as images, text, audio, and more. The model can learn the specific characteristics of each modality by iteratively adapting its generative process. This flexibility makes diffusion models suitable for diverse applications in various domains, including image synthesis, text generation, and data augmentation.

The iterative feedback loop is a vital part of both training and functioning of a diffusion model

During training, the model optimizes its parameters to minimize a loss function. In the DDPM formulation, this loss measures how well the model predicts the noise that was added to a training sample at a randomly chosen step, which indirectly quantifies the discrepancy between generated samples and the training data. The iterative steps in the training process allow the model to gradually refine its generative capabilities, improving the quality and coherence of the output.
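For reference, the simplified training objective from the DDPM paper cited in the introduction can be written as below. The notation is an assumption introduced here: $\epsilon$ is the Gaussian noise added to a clean sample $x_0$, $\bar{\alpha}_t$ is the fraction of signal remaining after $t$ steps, and $\epsilon_\theta$ is the network's noise prediction.

```latex
L_{\text{simple}}(\theta) =
\mathbb{E}_{t,\,x_0,\,\epsilon}\!\left[\,
\left\lVert \epsilon - \epsilon_\theta\!\left(\sqrt{\bar{\alpha}_t}\,x_0 + \sqrt{1-\bar{\alpha}_t}\,\epsilon,\ t\right) \right\rVert^2
\right]
```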

Once the diffusion model is trained, the iterative process continues to be a crucial aspect of its functioning during the generation phase. When generating new samples, the model starts with an initial noise sample and iteratively refines it by updating the latent variables. The model’s ability to generate high-quality samples relies on the iterative feedback loop, where the latent variables guide the refinement process.
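The generation loop described above can be sketched on a toy problem. The example assumes one-dimensional Gaussian data, for which the best possible noise prediction has a closed form; `predict_noise` therefore stands in for a trained network. The schedule, step count, and names are illustrative assumptions, and the update rule follows the DDPM ancestral sampling step.

```python
import math
import random
import statistics

MU, S = 2.0, 0.5   # assumed toy data distribution N(MU, S^2)
T = 200            # number of denoising steps (assumption)

betas = [1e-4 + (0.1 - 1e-4) * i / (T - 1) for i in range(T)]
alpha_bars, running = [], 1.0
for b in betas:
    running *= 1.0 - b
    alpha_bars.append(running)

def predict_noise(x_t, t):
    """Exact expected noise given x_t for Gaussian data; replaces a trained model."""
    ab = alpha_bars[t]
    var_t = ab * S * S + (1.0 - ab)          # variance of x_t under the forward process
    return math.sqrt(1.0 - ab) * (x_t - math.sqrt(ab) * MU) / var_t

def sample(rng):
    x = rng.gauss(0.0, 1.0)                  # start from pure noise
    for t in reversed(range(T)):
        eps_hat = predict_noise(x, t)
        # Remove the predicted noise and rescale to get the mean of x_{t-1}.
        mean = (x - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * eps_hat) / math.sqrt(1.0 - betas[t])
        # Re-inject a smaller amount of noise, except on the final step.
        fresh = rng.gauss(0.0, 1.0) if t > 0 else 0.0
        x = mean + math.sqrt(betas[t]) * fresh
    return x

rng = random.Random(0)
samples = [sample(rng) for _ in range(2000)]
sample_mean = statistics.mean(samples)
sample_std = statistics.pstdev(samples)
```

Starting from standard Gaussian noise, the samples end up clustered around the assumed data mean with roughly the assumed spread, which is the refinement behaviour the text describes.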

Overall, the iterative process in diffusion models plays a vital role in their ability to generate realistic and high-quality output. By iteratively refining the generated samples based on the feedback loop between the model and the latent variables, diffusion models can capture complex data distributions and produce output that closely resembles the training data.

Diffusion models Vs GANs

Diffusion models have gained popularity in recent years as they offer several advantages over GANs. One of the most significant advantages is the stability in the training and generation process due to the iterative nature of the diffusion processes. Unlike GANs, where the generator model has to go from pure noise to image in a single step, diffusion models operate in a much more controlled and steady manner. Instead of producing an image in a single step, diffusion models use iterative refinement to gradually improve the generated image quality.

Compared to GANs, which train a generator and a discriminator against each other, diffusion models require only a single model for training and generation, making them less complex to train. Additionally, diffusion models can handle a wide range of data types, including images, audio, and text. This flexibility has enabled researchers to explore various applications of diffusion models, including text-to-image generation and image inpainting.

How are diffusion models used in image generation?

Diffusion models are designed to learn the underlying patterns and structures in an image dataset and then use this knowledge to generate new, synthetic data samples. In the case of image generation, the goal is to learn the visual patterns and styles that characterize a set of images and then use this knowledge to create new images that are similar in style and content.

Unconditional image generation is a type of generative modeling where the model is tasked with generating images from random noise vectors. The idea behind this approach is that by providing the model with random noise, it is forced to learn the patterns and structures that are common across all images in the dataset. This means that the model can generate completely new and unique images that do not necessarily correspond to any specific image in the dataset.

Conditional image generation, on the other hand, involves providing the model with additional information or conditioning variables that guide the image generation process. For example, we could provide the model with a textual description of the image we want to generate, such as “a red apple on a white plate,” or with a class label that specifies the category of the object we want to generate, such as “car” or “dog.” By conditioning the image generation process on this extra information, the model can generate images that are tailored to specific requirements or preferences. This approach is useful in many applications, such as image synthesis, style transfer, and image editing.

Both unconditional and conditional image generation are important techniques in the field of generative modeling. Unconditional generation lets the model create completely new and unique images, while conditional generation steers the output toward a given description or label.
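The difference between the two modes can be illustrated with a toy sampler in which each "image" is a single number and each class label has its own cluster. Everything here is an assumption made for illustration: the labels, the cluster centres, the schedule, and the closed-form `predict_noise`, which stands in for a trained network that would receive the label as an extra input.

```python
import math
import random
import statistics

CLASS_MEANS = {"car": -2.0, "dog": 2.0}   # hypothetical labels with toy 1-D "images"
S, T = 0.3, 200                           # cluster spread and step count (assumptions)

betas = [1e-4 + (0.1 - 1e-4) * i / (T - 1) for i in range(T)]
alpha_bars, running = [], 1.0
for b in betas:
    running *= 1.0 - b
    alpha_bars.append(running)

def predict_noise(x_t, t, label=None):
    """Expected noise given x_t; with no label, average over classes by plausibility."""
    ab = alpha_bars[t]
    var_t = ab * S * S + (1.0 - ab)
    labels = [label] if label is not None else list(CLASS_MEANS)
    offsets = [x_t - math.sqrt(ab) * CLASS_MEANS[c] for c in labels]
    logits = [-(d * d) / (2.0 * var_t) for d in offsets]
    top = max(logits)
    weights = [math.exp(l - top) for l in logits]   # unnormalised class weights
    total = sum(weights)
    return sum(w / total * math.sqrt(1.0 - ab) * d / var_t for w, d in zip(weights, offsets))

def sample(rng, label=None):
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(T)):
        eps_hat = predict_noise(x, t, label)
        mean = (x - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * eps_hat) / math.sqrt(1.0 - betas[t])
        x = mean + (math.sqrt(betas[t]) * rng.gauss(0.0, 1.0) if t > 0 else 0.0)
    return x

rng = random.Random(0)
car_samples = [sample(rng, "car") for _ in range(400)]   # conditional on a label
free_samples = [sample(rng) for _ in range(400)]         # unconditional
car_mean = statistics.mean(car_samples)
dog_like = sum(1 for v in free_samples if v > 0)
```

Conditioning on "car" pulls every sample toward that cluster, while the unconditional sampler lands in either cluster depending on the starting noise.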

How are diffusion models used in video generation?

Diffusion models play a crucial role in video generation, allowing us to create realistic and dynamic video sequences. Here’s how they are used:

- Frame-by-frame generation:
  - Video generation involves creating individual frames (images) and then stitching them together to form a video.
  - Diffusion models generate each frame while taking the context of neighboring frames into account, which lets them capture temporal dependencies and ensure smooth transitions between frames.

- Temporal consistency:
  - Diffusion models maintain temporal consistency by modeling how pixel values evolve over time.
  - They learn to generate frames that smoothly transition from one to the next, avoiding sudden jumps or flickering.

- Noise injection:
  - Diffusion models introduce noise into the image during the generation process.
  - At each step, the noise level decreases, allowing the model to refine the image details gradually.
  - This noise injection helps create realistic textures and variations in video frames.

- Conditional video generation:
  - Diffusion models can be conditioned on additional information, such as text descriptions or partial video frames.
  - For example, given a textual description like “a cat chasing a ball,” the model generates frames consistent with that description.

- Video inpainting:
  - When part of a video frame is missing (due to occlusion or corruption), diffusion models can inpaint the missing regions.
  - By leveraging the surrounding context, they fill in the gaps seamlessly.

- Super-resolution:
  - Diffusion models can enhance video resolution by generating high-quality frames.
  - They learn to upscale low-resolution frames while maintaining visual coherence.

- Style transfer and artistic effects:
  - By conditioning diffusion models on style information (e.g., artistic styles or reference images), we can create videos with specific visual aesthetics.
  - This allows for artistic video synthesis and creative effects.

- Video prediction and forecasting:
  - Diffusion models can predict future video frames based on observed frames.
  - Applications include weather forecasting, traffic prediction, and motion planning.

- Video denoising:
  - Diffusion models remove noise from video frames, improving overall quality.
  - They learn to distinguish between signal and noise, resulting in cleaner videos.

- Generating novel video content:
  - Diffusion models can produce entirely new video sequences.
  - Given a starting frame or seed, they generate coherent and diverse video content.

Diffusion models contribute significantly to video generation by ensuring smooth transitions, maintaining temporal consistency, and handling various video-related tasks.

Examples of diffusion models used for image generation

Diffusion models have gained popularity for image generation tasks due to their ability to generate high-quality, diverse, and realistic images. Examples include DALL-E 2 by OpenAI, Imagen by Google, Stable Diffusion by Stability AI, and Midjourney.

DALL-E 2

DALL-E 2 was launched by OpenAI in April 2022. It builds on OpenAI’s earlier work on GLIDE, CLIP, and DALL-E to create original, realistic images and art from text descriptions. Compared with the original DALL-E, DALL-E 2 generates more realistic and accurate images with 4x greater resolution.

Imagen

Imagen, Google’s diffusion-based image generation system, uses large transformer language models to understand text and relies on diffusion models to generate high-fidelity images. Imagen consists of three image generation diffusion models:

- A diffusion model to generate a 64×64 resolution image.

- Followed by a super-resolution diffusion model to upsample the image to 256×256 resolution.

- And one final super-resolution model, which upsamples the image to 1024×1024 resolution.
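The cascade above can be traced with a short sketch that only tracks resolutions. Each super-resolution stage is replaced by a nearest-neighbour upsampler, which is purely a placeholder assumption: in Imagen those stages are themselves diffusion models, and the function names here are hypothetical.

```python
def upsample_nearest(image, factor):
    """Repeat every pixel `factor` times along both axes (placeholder upsampler)."""
    return [
        [px for px in row for _ in range(factor)]
        for row in image
        for _ in range(factor)
    ]

def cascade(base_image, factors=(4, 4)):
    """Run the base image through successive upsampling stages, keeping each result."""
    stages = [base_image]
    for factor in factors:
        stages.append(upsample_nearest(stages[-1], factor))
    return stages

# A 64x64 grid of toy grey levels stands in for the base model's output.
base = [[(r + c) % 256 for c in range(64)] for r in range(64)]
stages = cascade(base)
sizes = [(len(img), len(img[0])) for img in stages]
```

Two 4x stages take the 64×64 base output to 256×256 and then 1024×1024, matching the three resolutions listed above.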

Stable Diffusion

Created by Stability AI, Stable Diffusion is built upon the paper “High-Resolution Image Synthesis with Latent Diffusion Models” by Rombach et al. It is the only diffusion-based image generation model in this list that is entirely open-source.

The complete architecture of Stable Diffusion consists of three models:

- A text encoder: accepts the text prompt and converts it into computer-readable vectors.

- A U-Net: the diffusion model responsible for generating images.

- A variational autoencoder, consisting of an encoder and a decoder: the encoder reduces the image dimensions, and the U-Net diffusion model works in this smaller space. The decoder then reconstructs the image generated by the diffusion model back to its original size.

Midjourney

Midjourney is one of the many AI image generators that have emerged recently. Unlike DALL-E 2 or some of its other competitors, Midjourney offers more dream-like, art-style visuals. It can appeal to those working in science-fiction literature or on artwork that requires a more gothic feel. Where other AI generators lean more towards photos, Midjourney is more of a painting tool. It aims to offer higher image quality, more diverse outputs, a wider stylistic range, support for seamless textures, wider aspect ratios, better image prompting, and greater dynamic range.

Google Space-Time diffusion model: Unveiling Lumiere for realistic video synthesis

A space-time diffusion model is a generative model designed for producing videos that exhibit realistic, diverse, and coherent motion. It specifically tackles the challenge of synthesizing videos with globally coherent motion.

Google Research has introduced a text-to-video diffusion model called Lumiere, which uses a Space-Time U-Net architecture to generate the entire temporal duration of the video at once, through a single pass in the model. This is in contrast to existing video models, which synthesize distant keyframes followed by temporal super-resolution, an approach that inherently makes global temporal consistency difficult to achieve. By deploying both spatial and (importantly) temporal down- and up-sampling and leveraging a pre-trained text-to-image diffusion model, Lumiere learns to directly generate a full-frame-rate, low-resolution video by processing it in multiple space-time scales.

Applications of the Google space-time diffusion model

Lumiere supports several video synthesis and editing tasks:

- Text-to-video generation: Lumiere excels in generating videos from textual prompts. Unlike existing video models that synthesize distant keyframes followed by temporal super-resolution, Lumiere generates the entire temporal duration of the video at once, achieving global temporal consistency.

- Video stylization: With Lumiere, off-the-shelf text-based image editing methods can be used for consistent video editing. It allows for style transfer, where you can apply different artistic styles to videos, similar to how you would stylize images.

- Cinemagraphs: The Google space-time diffusion model can animate specific regions within an image, creating captivating cinemagraphs. By providing a mask, you can selectively animate portions of the video while keeping other areas static.

- Video inpainting: Given a masked video (where certain regions are missing), Lumiere can fill in the gaps and complete the video. This is useful for video restoration or removing unwanted elements from a scene.

The Google space-time diffusion model opens up exciting possibilities for realistic video generation and creative content manipulation.

What is the Space-Time U-Net architecture and how does it work?

The Space-Time U-Net architecture is a convolutional neural network used to generate the entire temporal duration of a video simultaneously. It achieves this by utilizing both spatial and temporal down- and up-sampling to produce videos with globally coherent motion. The model learns to directly generate a full-frame-rate, low-resolution video by processing it across multiple space-time scales. This contrasts with existing video models that synthesize distant keyframes followed by temporal super-resolution—an approach that inherently makes achieving global temporal consistency challenging.

The architecture comprises a contracting path and an expansive path. The contracting path includes encoder layers that capture contextual information and reduce the resolution of the input. Conversely, the expansive path incorporates decoder layers that decode the encoded data, using information from the contracting path via skip connections. In the original U-Net, the output was a segmentation map; in a diffusion model, the output is instead a denoising prediction with the same shape as the input. The encoder layers perform convolutional operations, decreasing the resolution of the feature maps while increasing their depth, thereby capturing increasingly abstract representations of the input. The decoder layers in the expansive path upsample the feature maps while also conducting convolutional operations. The skip connections help preserve spatial information lost along the contracting path, enhancing the decoder layers’ ability to locate features accurately.
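The role of the skip connections can be seen in a deliberately tiny sketch that works on a one-dimensional signal and has no learned weights. Averaging stands in for strided convolutions and value repetition for learned upsampling; these substitutions, the function names, and the requirement that the signal length be divisible by `2 ** depth` are all assumptions made for illustration. A Space-Time U-Net would additionally downsample along the time axis.

```python
def downsample(signal):
    """Halve the resolution by averaging neighbouring pairs."""
    return [(signal[i] + signal[i + 1]) / 2.0 for i in range(0, len(signal) - 1, 2)]

def upsample(signal):
    """Double the resolution by repeating each value."""
    return [v for v in signal for _ in range(2)]

def tiny_unet(signal, depth=2):
    """Contract, then expand, merging in the saved features at each resolution."""
    skips = []
    x = list(signal)
    for _ in range(depth):                 # contracting path
        skips.append(x)                    # remember features at this resolution
        x = downsample(x)
    for _ in range(depth):                 # expansive path
        x = upsample(x)
        skip = skips.pop()
        # Skip connection: blend coarse context with the fine detail saved earlier.
        x = [(a + b) / 2.0 for a, b in zip(x, skip)]
    return x

detailed = [0, 1, 0, 1, 0, 1, 0, 1]
output = tiny_unet(detailed)
bottleneck_only = upsample(upsample(downsample(downsample(detailed))))
```

Without the skips, `bottleneck_only` is a flat signal because the alternating pattern was averaged away; with them, `output` keeps the fine alternation that the bottleneck lost.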

How to train diffusion models?

Training is critical in diffusion models as it is the process through which the model learns to generate new samples that closely resemble the training data. By optimizing the model parameters to maximize the likelihood of the observed data, the model can learn the underlying patterns and structure in the data, and generate new samples that capture the same characteristics. The training process enables the model to generalize to new data and perform well on tasks such as image, audio, or text generation. The quality and efficiency of the training process can significantly impact the performance of the model, making it essential to carefully tune hyperparameters and apply regularization techniques to prevent overfitting.

Data gathering: Data gathering is a critical stage in training a diffusion model. The training data must accurately represent the distribution the model is expected to reproduce, because the model can only generate samples that resemble what it has seen.

Data pre-processing: After collecting the data, it must be cleaned and pre-processed to guarantee that it can be used to train a diffusion model. This might include deleting missing or repetitive data, dealing with outliers, or converting the data into a training-ready format.

Data transformation: The next step in preparing the data for a diffusion model is data transformation. The data may be normalized or scaled so that all variables have similar ranges. The type of data transformation used depends on the specific needs of the diffusion model being trained as well as the nature of the data.
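As one example of such a transformation, image pixels are often rescaled from integers to a symmetric floating-point range so that they are comparable in scale to the Gaussian noise added later. The sketch below assumes 8-bit pixel values and a target range of [-1, 1]; both choices and the function names are assumptions, not requirements stated in the article.

```python
def scale_to_unit_range(pixels, max_value=255):
    """Map integer pixel values in [0, max_value] to floats in [-1, 1]."""
    return [2.0 * p / max_value - 1.0 for p in pixels]

def unscale(values, max_value=255):
    """Inverse mapping, clamped and rounded back to integer pixel values."""
    return [
        min(max_value, max(0, round((v + 1.0) / 2.0 * max_value)))
        for v in values
    ]

row = [0, 64, 128, 255]
scaled = scale_to_unit_range(row)
restored = unscale(scaled)
```

The inverse function is what turns a generated sample back into displayable pixel values.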

Division into training and test sets: The training set is used to train the model, while the test set is used to evaluate the model’s performance. It is critical to ensure that the training and test sets accurately represent the data as a whole and are not biased towards specific conditions.
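A shuffled split is one simple way to keep either set from being biased toward a particular slice of the data. The sketch below is a minimal version in plain Python; the 80/20 ratio, the fixed seed, and the function name are assumptions chosen for illustration.

```python
import random

def train_test_split(items, test_fraction=0.2, seed=0):
    """Shuffle a copy of the data, then cut it into training and test parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)       # fixed seed keeps the split reproducible
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

dataset = list(range(100))                      # stand-in for 100 training images
train_set, test_set = train_test_split(dataset)
```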

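Comparison of the diffusion models: For generative modeling, the main candidates are the variants described earlier: DDPMs, score-based generative models, and Score SDEs. Threshold models, susceptible-infected (SI) models, and independent cascade models are also called diffusion models, but they describe how information or infection spreads through a network and are not generative models. The diffusion model chosen is determined by the application’s specific needs, which might range from the size of the model to the complexity of the network architecture or the sort of diffusion being modeled.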

Selection criteria: When choosing a diffusion model for training, consider the model’s accuracy, computational efficiency and interoperability. It may also be necessary to evaluate the availability of data and the simplicity with which the model may be integrated into an existing system.

Model hyperparameters: The hyperparameters that govern the behavior of a diffusion model are determined by the application’s unique requirements and the type of data being used. To ensure that the model performs at its best, the hyperparameters must be properly tuned.

Establishing the model parameters: This stage comprises establishing the hyperparameters outlined in the preceding section, as well as any additional model parameters necessary for the kind of diffusion model being utilized. It is critical to properly tune the model parameters so that the model can understand the underlying structure of the data and avoid overfitting.

After the data has been divided and the model parameters have been determined, the next step is to train the model. The training procedure usually entails repeatedly iterating over the training set and adjusting the model parameters based on the model’s performance on the training set.
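A complete training loop can be sketched on a toy problem small enough to run in plain Python. It assumes one-dimensional Gaussian data, ten noising steps, and a separate linear noise predictor per step trained with stochastic gradient descent on the squared noise-prediction error. All of these choices, along with the schedule and learning rate, are illustrative assumptions; a real model would use a neural network shared across steps.

```python
import math
import random

T = 10                                              # number of noising steps (assumption)
betas = [0.05 + 0.45 * i / (T - 1) for i in range(T)]
alpha_bars, running = [], 1.0
for b in betas:
    running *= 1.0 - b
    alpha_bars.append(running)

def make_example(rng):
    """One training example: random step, noised value, and the noise that was added."""
    x0 = rng.gauss(2.0, 0.5)                        # assumed toy training data
    t = rng.randrange(T)
    eps = rng.gauss(0.0, 1.0)
    x_t = math.sqrt(alpha_bars[t]) * x0 + math.sqrt(1.0 - alpha_bars[t]) * eps
    return t, x_t, eps

def average_loss(weights, biases, examples):
    """Mean squared error between the true and the predicted noise."""
    return sum(
        (eps - (weights[t] * x_t + biases[t])) ** 2 for t, x_t, eps in examples
    ) / len(examples)

def train(num_steps=20000, lr=0.02, seed=0):
    rng = random.Random(seed)
    weights, biases = [0.0] * T, [0.0] * T          # one linear predictor per step
    for _ in range(num_steps):
        t, x_t, eps = make_example(rng)
        error = (weights[t] * x_t + biases[t]) - eps
        weights[t] -= lr * 2.0 * error * x_t        # gradient of the squared error
        biases[t] -= lr * 2.0 * error
    return weights, biases

eval_rng = random.Random(1)
eval_set = [make_example(eval_rng) for _ in range(2000)]
loss_before = average_loss([0.0] * T, [0.0] * T, eval_set)
weights, biases = train()
loss_after = average_loss(weights, biases, eval_set)
```

The held-out loss drops well below its starting value as the predictors learn how much of each noised value is noise, which is the kind of signal one would monitor during real training.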

Applications of diffusion models

Diffusion models have multidimensional applications catering to diverse industries like gaming, architecture, interior design, healthcare etc. They can be used to generate videos, 3D models, human motion, modify existing images, and restore images.

Text to videos: One of the significant applications of diffusion models is generating videos directly with text prompts. By extending the concept of text-to-image to videos, one can use diffusion models to generate videos from stories, songs, poems, etc. The model can learn the underlying patterns and structures of the video content and generate videos that match the given text prompt.

Text to 3D: In the paper “DreamFusion,” the authors used NeRFs (Neural Radiance Fields) along with a trained 2D text-to-image diffusion model to perform text-to-3D synthesis. This technique is useful for generating 3D models from textual descriptions, which can be used in industries such as architecture, interior design, and gaming.

Text to motion: Text to motion is another exciting application of diffusion models, where the models are used to generate simple human motion. For instance, the “Human Motion Diffusion Model” can learn human motion and generate a variety of motions like walking, running, and jumping from textual descriptions.

Image to image: Image-to-image (Img2Img) is a technique used to modify existing images. The technique can transform an existing image to a target domain using a text prompt. For example, we can generate a new image with the same content as an existing image but with some transformations. The text prompt provides a textual description of the transformation we want.

Image inpainting: Image inpainting is a technique used to restore images by removing unwanted objects or replacing them with other objects/textures/designs. To perform image inpainting, the user first draws a mask around the object or pixels that need to be altered. After creating the mask, the user can tell the model how it should alter the masked pixels. Diffusion models can be used to generate high-quality images in this context.
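The role of the mask can be shown with a short compositing sketch: pixels outside the mask are kept from the original image, and pixels inside it are taken from newly generated content. The `generated` grid below is a hand-written placeholder, since producing it would require a trained diffusion model, and the function name is hypothetical.

```python
def composite(original, generated, mask):
    """Keep original pixels where the mask is 0 and generated pixels where it is 1."""
    return [
        [g if m else o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]

original = [[10, 10, 10], [10, 99, 10], [10, 10, 10]]    # 99 marks an unwanted object
generated = [[11, 12, 13], [14, 15, 16], [17, 18, 19]]   # placeholder model output
mask = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]                 # user-drawn mask over the object
result = composite(original, generated, mask)
```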

Image outpainting: Image outpainting, also known as infinity painting, is a process in which the diffusion model adds details outside/beyond the original image. This technique can be used to extend the original image by utilizing parts of the original image and adding newly generated pixels or reference pixels to bring new textures and concepts using the text prompt.

Research processes: A related family of models, the drift-diffusion models used in cognitive science, finds application in the study of brain processes, cognitive functions, and the pathways involved in human decision-making. These are distinct from the generative models discussed above, but by simulating cognitive processes with them, neuroscience researchers can gain insight into the underlying mechanisms at work. Such discoveries have the potential to advance the diagnosis and treatment of neurological disorders, ultimately leading to improved patient care and well-being.

Practical applications of diffusion models across diverse industries

Digital art creation & graphic design

Diffusion models, renowned for their precision and reliability, emerge as indispensable tools in generating realistic synthetic data, surpassing adversarial networks in various tasks. They find extensive use in digital art creation and graphic design, producing top-tier images applicable to medical imaging, artistic design, and beyond. Designers benefit from streamlined image editing, color correction, and noise reduction tasks, enhancing efficiency and creativity in the design process.

Film, animation, and entertainment

In the realms of film, animation, and entertainment, diffusion models redefine production processes, offering cost-effective solutions for creating realistic backgrounds, characters, and special effects. These models expedite content creation, enabling filmmakers to explore unconventional ideas and unleash creativity without being hindered by high production costs. Augmented and virtual reality experiences also stand to benefit, with diffusion models facilitating rapid content creation and immersive user interactions.

Music, sound design, and neuroscience

The integration of diffusion models extends to music, sound design, and neuroscience, where they facilitate the generation of unique soundscapes, musical compositions, and simulations of cognitive processes. In neuroscience, diffusion models serve as powerful tools for understanding brain mechanisms, predicting neural patterns, and refining AI algorithms. This symbiotic relationship between diffusion models and neuroscience yields insights that advance medical diagnostics, neurological disorder management, and brain-machine interface development.

Healthcare, biology, and market research

In healthcare and biology, diffusion models streamline early diagnosis, enhance image synthesis, and aid in protein sequence design, offering invaluable contributions to medical imaging and biological data analysis. Market researchers leverage diffusion models to analyze consumer behavior, predict demand, and optimize marketing strategies, enabling businesses to make informed decisions and foster growth.

3D modeling and retail visualization

The evolution of 3D modeling has significantly impacted product design, video game development, and CGI art creation. Designers use textual prompts to generate intricate 3D models, accelerating visualization and iteration. Additionally, retailers use diffusion models to create high-quality product visuals, refine pricing strategies, and craft immersive marketing content, elevating brand recognition and customer engagement.

In essence, diffusion models permeate diverse industries, offering unparalleled capabilities in data generation and creative expression. As technology continues to evolve, the integration of diffusion models promises to redefine the boundaries of innovation and creativity across various fields, paving the way for transformative advancements and novel applications.

Navigating the future of diffusion models

The field of diffusion models is rapidly evolving, and several exciting trends are shaping its future. Here are some key areas to watch:

Efficient sampling techniques: Researchers are exploring novel methods to improve the efficiency of sampling in diffusion models. Techniques like annealed Langevin dynamics and Hamiltonian Monte Carlo are gaining attention. Adaptive sampling approaches that dynamically adjust the sampling process based on model performance are also emerging.
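To make the idea of annealed Langevin dynamics more concrete, here is a minimal sketch in plain Python. It is not taken from any particular library: the one-dimensional toy data distribution, the names `toy_score`, `geometric_sigmas`, and `annealed_langevin`, and all numeric settings are assumptions chosen for illustration. In a real diffusion model the score function would be a trained neural network; here it is known in closed form, so the sampler can be run as is.

```python
import math
import random

# Hypothetical toy data distribution: a 1-D Gaussian N(2.0, 0.5^2).
DATA_MEAN = 2.0
DATA_STD = 0.5

def toy_score(x, sigma):
    """Exact score (gradient of the log density) of the toy data after it
    has been blurred with Gaussian noise of scale sigma."""
    variance = DATA_STD ** 2 + sigma ** 2
    return -(x - DATA_MEAN) / variance

def geometric_sigmas(sigma_max=5.0, sigma_min=0.05, levels=10):
    """Noise levels decreasing geometrically from sigma_max to sigma_min."""
    ratio = (sigma_min / sigma_max) ** (1.0 / (levels - 1))
    return [sigma_max * ratio ** i for i in range(levels)]

def annealed_langevin(score_fn, sigmas, steps_per_level=200, eps=2e-4, rng=None):
    """Run Langevin updates at each noise level, from largest to smallest."""
    rng = rng or random.Random()
    x = rng.gauss(0.0, sigmas[0])  # start from broad noise
    sigma_min = sigmas[-1]
    for sigma in sigmas:
        # The step size shrinks together with the noise level.
        alpha = eps * (sigma / sigma_min) ** 2
        for _ in range(steps_per_level):
            z = rng.gauss(0.0, 1.0)
            x = x + 0.5 * alpha * score_fn(x, sigma) + math.sqrt(alpha) * z
    return x

if __name__ == "__main__":
    rng = random.Random(0)
    sigmas = geometric_sigmas()
    samples = [annealed_langevin(toy_score, sigmas, rng=rng) for _ in range(300)]
    print("sample mean:", sum(samples) / len(samples))
```

Starting at a large noise level lets the sampler move quickly toward regions where data is likely, and the smaller levels then refine the sample. The number of steps per level is the main cost, which is why more efficient sampling is an active research topic.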

Hybrid models and combinations: Combining diffusion models with other generative models (such as Variational Autoencoders (VAEs) or GANs) is an active area of research. These hybrid models aim to leverage the strengths of both approaches. Researchers are investigating ways to fuse diffusion-based and autoregressive models for better performance.

Handling structured data: Diffusion models have primarily been applied to unstructured data like images and text. However, there’s growing interest in extending them to handle structured data (e.g., graphs, time series, molecular structures). Techniques for incorporating domain-specific knowledge and handling data with special structures are being explored.

Applications beyond image and video generation: While diffusion models excel in image and video generation, their potential extends to other domains. Expect to see more applications in natural language processing, audio synthesis, and scientific simulations.

Interdisciplinary research: Researchers are collaborating across disciplines to apply diffusion models to diverse scientific problems. Fields like computational chemistry, climate modeling, and healthcare are benefiting from diffusion-based approaches.

Scalability and parallelization: Handling large-scale data efficiently remains a challenge. Researchers are devising strategies for parallelized training and scalable architectures.

Conclusion

The potential of diffusion models is truly remarkable, and we are only scratching the surface of what they can do. These models are expanding rapidly and opening up new opportunities for art, business, and society at large. However, embracing this technology and its capabilities is essential for unlocking its full potential. Businesses need to take action and start implementing diffusion models to keep up with the rapidly changing landscape of technology. By doing so, they can unlock previously untapped levels of productivity and creativity, giving them an edge in their respective industries.

One notable example is Lumiere, Google's space-time diffusion model: a text-to-video generation framework built on a pre-trained text-to-image diffusion model. Lumiere addresses the difficulty existing models have in producing globally coherent motion by proposing a Space-Time U-Net architecture. Off-the-shelf text-based image editing methods can be used with Lumiere for consistent video editing, and it supports a wide range of content creation tasks, including image-to-video, video inpainting, and stylized generation.

The possibilities for innovation and advancement within the realm of diffusion models are endless, and the time to start exploring them is now. Diffusion models have the potential to redefine the way we live, work, and interact with technology, and we can’t wait to see what the future holds. As we continue to push the boundaries of what is possible, we hope that this guide serves as a valuable resource for those looking to explore the capabilities of diffusion models and the world of AI more broadly.

Stay ahead of the curve and explore the possibilities of diffusion models to position your business at the forefront of innovation. Contact LeewayHertz’s AI experts to build your next diffusion model-powered solution tailored to your needs.


FAQs

What are diffusion models?

Diffusion models are generative models used to create data similar to the training data. They work by adding Gaussian noise to the data during training and then learning to reverse this process during generation.
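The noising half of this answer can be sketched in a few lines of plain Python. The sketch below assumes the linear noise schedule commonly used with denoising diffusion probabilistic models (1,000 steps, with per-step noise rising from 0.0001 to 0.02); these values and the function names are illustrative assumptions, not something specified in this article. It relies on the fact that a noisy sample at any step can be drawn directly from the clean data, without simulating every step in between.

```python
import math
import random

def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly (assumed values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar[t] is the product of (1 - beta[s]) for all s <= t: the
    fraction of the original signal variance that survives up to step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Sample the noisy version of x0 at step t in one shot.

    Returns the noisy data and the noise that was mixed in. During
    training, a network is typically asked to predict that noise."""
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [signal_scale * v + noise_scale * n for v, n in zip(x0, noise)]
    return xt, noise

if __name__ == "__main__":
    rng = random.Random(0)
    alpha_bars = cumulative_alphas(linear_beta_schedule())
    x0 = [1.0, -0.5, 0.25]  # stand-in for a tiny "image"
    for t in (0, 250, 999):
        xt, _ = add_noise(x0, t, alpha_bars, rng)
        print(t, [round(v, 3) for v in xt])
```

At early steps the output stays close to the input, while at the last step almost no signal remains. Generation runs the other way: a trained network removes the predicted noise step by step, starting from pure noise.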

How do diffusion models work?

What are the applications of diffusion models?

Diffusion models excel in image synthesis, video generation, and molecule design. They are also used in computer vision, natural language generation, and more.

What challenges do diffusion models face?

What types of diffusion models exist?

There are several types of diffusion models:

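- Continuous diffusion models: Treat noising and denoising as processes that evolve smoothly over continuous time rather than in a fixed number of discrete steps, and have been applied to varied data such as text and music.

- Denoising Diffusion Probabilistic Models (DDPMs): Gradually add Gaussian noise to training data over many steps and train a network to reverse each step, so that new samples can be generated by starting from pure noise and denoising it step by step.

- Noise-conditioned Score-Based Generative Models (SGMs): Train a network, conditioned on the noise level, to estimate the gradient of the log data density (the score) and generate samples by following those gradients from noisy to clean data. They are useful for tasks like text-to-image generation.

- Stochastic Differential Equations (SDEs): Describe noising and denoising as a continuous-time process driven by random fluctuations, a view that generalizes both DDPMs and SGMs. SDEs also find applications across scientific and engineering domains.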
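As a rough illustration of the SDE view in the list above, the sketch below simulates a forward noising SDE with the Euler-Maruyama method in plain Python. The specific equation (a variance-preserving form, dx = -0.5 β(t) x dt + sqrt(β(t)) dW), the linear β(t) running from 0.1 to 20, and the function names are assumptions made for this example rather than details given in this article.

```python
import math
import random

def beta(t, beta_min=0.1, beta_max=20.0):
    """Noise rate at time t in [0, 1], rising linearly (assumed values)."""
    return beta_min + t * (beta_max - beta_min)

def forward_vp_sde(x0, num_steps=500, rng=None):
    """Euler-Maruyama simulation of
    dx = -0.5 * beta(t) * x dt + sqrt(beta(t)) dW, from t = 0 to t = 1."""
    rng = rng or random.Random()
    dt = 1.0 / num_steps
    x = x0
    for i in range(num_steps):
        b = beta(i * dt)
        drift = -0.5 * b * x        # pulls the value toward zero
        diffusion = math.sqrt(b)    # scales the random kick
        x = x + drift * dt + diffusion * math.sqrt(dt) * rng.gauss(0.0, 1.0)
    return x

if __name__ == "__main__":
    rng = random.Random(1)
    ends = [forward_vp_sde(3.0, rng=rng) for _ in range(400)]
    mean = sum(ends) / len(ends)
    var = sum((e - mean) ** 2 for e in ends) / len(ends)
    print("mean:", round(mean, 3), "variance:", round(var, 3))
```

Whatever the starting value, the end points land close to a standard normal distribution, which is what makes pure noise a valid starting point for generation. Sampling then amounts to simulating the corresponding reverse-time process, which requires a learned score function and is not shown here.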

How can diffusion models enhance image quality?

What are the practical uses of diffusion models?

Diffusion models have many practical applications. Here are some of them:

- Image generation: Creating images from scratch.

- Inpainting: Filling in missing parts of images.

- Video generation: Producing high-quality videos.

- Drug discovery: Simulating molecular interactions.

- Content creation: Inspiring art and design.

- Retail optimization: Improving recommendations.

- Social media analysis: Identifying trends.

- AR/VR enhancement: Creating realistic environments.

How does LeewayHertz assist with diffusion models?

LeewayHertz provides comprehensive support for diffusion models. Our expertise spans model development, training, and deployment. We guide clients through the intricacies of diffusion models, ensuring effective implementation and optimal performance. Whether the application is image generation, natural language processing, or something else, we offer tailored solutions and consulting services.

What measures does LeewayHertz take to ensure data security in diffusion modeling?

How does LeewayHertz ensure robust training of diffusion models?
