AI model security: Concerns, best practices and techniques

Security is not just an important component of a computer system, it’s the lifeblood that guards its functionality, credibility, and trustworthiness. Traditional IT security practices, which involve protecting systems, countering attacks, and strengthening defenses against adversarial red teams, are well-established. However, the rapidly growing field of Artificial Intelligence (AI) introduces fresh challenges that require attention and specialized approaches. These challenges require us to intensify our efforts to protect the ever-expanding digital ecosystem.

As AI becomes increasingly integral to our daily lives, its applications continue to evolve, giving rise to a new array of attack vectors and threats that are yet to be fully explored. MLOps pipelines, inference servers, data lakes – all are susceptible to breaches if not properly secured, patched for common vulnerabilities and exposures (CVE), and hardened in accordance with applicable regulations such as FIPS, DISA-STIG, PCI-DSS, and NCSC. Consequently, Machine Learning (ML) models, in particular, have become attractive targets for adversaries. These models, driven by data, are being targeted by a plethora of adversarial attacks, leading to significant financial losses and undermining user safety and privacy. Security, especially data security and Intellectual Property (IP) protection, often takes a backseat as companies rush to leverage AI’s hype. But this overlooks the fact that data is the fuel of AI, and its security is paramount.

Moreover, AI’s rapid evolution has caught many off guard, including data science teams who might lack understanding of how ML applications could be exploited. This lack of knowledge emphasizes the need for urgent implementation of robust security measures, particularly for models handling sensitive or Personally Identifiable Information (PII). The stakes are incredibly high, considering some of these models analyze crucial data – from credit card transactions and mortgage information to legal documents and health data.

This article highlights the importance of securing AI models, exploring the techniques and best practices involved, and advocating for a holistic approach to AI security. Because the reality is, while we stand on the brink of technological breakthroughs, we also face an escalating battle to keep our AI-driven future secure.

- Understanding AI models: Their types and lifecycle

- Significance of intellectual property and data security

- Different risks and threats to AI models

- Effects of adversarial attacks and preventions

- Security scenarios and principles in AI

- How to secure AI models – techniques

- How to secure generative AI models?

- Ethical considerations in AI security

- AI model security in different industries

- Best practices in AI model security

- The future of AI model security

Understanding AI models: Their types and lifecycle

Machine learning models, a subset of artificial intelligence, use algorithms to identify patterns and predict outcomes from data. These models automate complex tasks in fields such as healthcare, finance, and marketing. From predicting customer behavior to detecting fraud and image recognition, machine learning models drive intelligent, adaptive systems that aid businesses in enhancing decision-making, customer experiences, and efficiency.

Understanding the types of machine learning models and their life cycle is fundamental for security assessment. Different ML models possess unique characteristics and vulnerabilities, necessitating specific security measures. Likewise, each stage of the model’s lifecycle can pose distinct security risks, hence security measures must be integrated throughout the life cycle. Let’s get an overview.

Types of machine learning models

Machine learning models can be primarily categorized into supervised learning, unsupervised learning, and reinforcement learning. These distinct categories represent the different learning mechanisms, each offering unique capabilities from predicting outcomes, identifying hidden patterns, to making data-driven decisions based on reward systems.

- Supervised learning: This type uses labeled data to make predictions, classify data, and identify trends. Examples include Support Vector Machines (SVMs), decision trees, naive bayes, and logistic regression.

Common supervised learning models- Linear regression: Predicts a continuous variable using one or more predictors. Assumes a linear relationship between variables.

- Logistic regression: Predicts a categorical variable using one or more predictors. Assumes a logistic relationship between variables.

- Decision trees: Predicts a categorical or continuous variable using one or more predictors. Assumes a hierarchical relationship between variables.

- Support vector machines: Predicts a categorical or continuous variable using one or more predictors. Assumes a separable relationship between variables.

- Unsupervised learning: This type doesn’t use labeled data, but instead, finds patterns within data by identifying clusters, anomalies, and other features. Examples include k-means clustering, hierarchical clustering, and neural networks.

Common unsupervised learning models- Clustering: Groups data points together based on their similarity. Useful for finding patterns and hidden relationships in data.

- Principal Component Analysis (PCA): Reduces the dimensionality of data by finding the linear combinations of variables that explain the greatest variance.

- K-Means: Partitions data points into a fixed number of clusters. Useful for identifying groups of similar data points and outliers.

- Reinforcement learning: This type uses rewards or punishments to teach machines to make decisions that maximize rewards or minimize punishments. Examples include Q-learning, SARSA, and Deep Q-Networks.

Common reinforcement learning models- Q-learning: A model-free reinforcement learning algorithm used to find an optimal action-selection policy for a given environment.

- Deep Q-learning: A variant of Q-learning that uses a deep neural network as the function approximator for the Q-value function.

- Dueling neural networks: A deep neural network architecture composed of two separate streams, an advantage stream, and a value stream. Used in areas including robotics, game-playing, and control systems.

Build secure AI solutions with LeewayHertz!

With a strong commitment to security, our team designs, develops and deploys robust AI solutions tailored to your unique business needs.

The life-cycle of machine learning models

The life cycle of a machine learning model is a multi-phase process that guides the development and deployment of effective ML models. From understanding the business problem and data collection, through data preparation, model training, evaluation, and finally deployment, each stage plays a crucial role in ensuring the model’s accuracy and efficiency. This cyclical process is continuously repeated, refining the model as new data and insights become available.

- Business understanding: This phase involves defining the problem statement and setting clear business objectives. Determining business objectives lays the foundation for further steps and provides the project with a specific direction.

- Data understanding: Once the problem statement is clear, the process moves to data collection. This phase includes collecting raw data, understanding its nature, exploring it, and validating its quality. It’s crucial to ensure that the data collected correlates with the problem statement.

- Data preparation: In this phase, the collected data undergoes cleansing and transformation. This includes cleaning the selected data, constructing new features, identifying relationships between features, and formatting the data as required.

- Modeling: Once the data is ready, it is used to train the model. This phase involves selecting the appropriate modeling technique, generating a test design, and training the model. While preliminary evaluation can take place at this stage, the main evaluation will take place using validation data.

- Evaluation: Evaluating the model is a vital step where the trained model’s performance is assessed to decide if it’s ready for deployment. Various evaluation techniques are used to determine the model’s readiness.

- Deployment: Finally, the model is deployed into the production environment, and maintenance begins. This phase involves planning for maintenance, where the model is evaluated periodically. Reporting, presenting model performance, and project reviewing also happen at this stage.

This lifecycle doesn’t end at deployment. Often, steps 3-6 are iterated over time to continuously improve the model and ensure it remains effective as new data and insights become available.

Significance of intellectual property and data security

In the data science landscape, the significance of Intellectual Property (IP) and data security cannot be overstated. While the primary focus often lies in ensuring optimal accuracy and user satisfaction of AI applications, these vital aspects should not be neglected.

Intellectual Property is a fundamental consideration in data science as it delineates ownership rights. Interestingly, not all AI components can be shielded by IP laws. For instance, algorithms cannot be protected by patents and copyrights, but a specific algorithmic implementation can. Thus, in the AI domain, the true proprietary element often lies in the data utilized for training models – the quality and uniqueness of this data directly impacts the model’s accuracy.

However, the risk surfaces when your model becomes publicly accessible. Adversarial attacks can exploit prediction APIs to extract information about the data’s distribution, potentially revealing sensitive information about the training data. To mitigate such risks, it is crucial to control who has access to your model, either through authentication systems or by obscuring the precise results of the model behind an array of applications.

Data privacy, again, underscores the significance of data as the primary ingredient in AI. It is vital to ensure that the utilized data is either owned by you or used with permission. Moreover, it’s crucial to safeguard this data during the development and deployment phases. It is also important to remember that once your model is online, the data used to train it could be indirectly exposed through the model’s predictions, hence a thorough understanding of potential data exposure is needed.

A mindful approach towards data ownership, security, and privacy is paramount in ensuring the ethical and secure application of data science and AI.

Different risks and threats to AI models

The CIA triangle, in the context of AI model security, helps understand the diverse threats that can compromise a machine learning model.

- Confidentiality: Confidentiality refers to the privacy of the data. In the context of AI, attacks targeting confidentiality aim to access sensitive training data through methods like model inversion. Attackers can also seek to steal the intellectual property that underlies the model, such as the structure of the neural network and its hyperparameters.

- Integrity: Integrity relates to the trustworthiness and reliability of the data. For AI models, this involves the accuracy of the predictions made by the model. If an attacker can manipulate the data or the model to decrease its predictive accuracy, this constitutes an integrity attack. For instance, an attacker might aim to increase the rate of false negatives in a credit card fraud detection system.

- Availability: Availability refers to the accessibility and usability of the data. In terms of AI, availability attacks are designed to make a model useless by either blocking access to it or by increasing its error rate to the point where it is rendered useless. These attacks could involve overloading a system with requests or feeding it misleading data to impair its functionality.

Here are some of the potential security threats to AI models:

Data poisoning attacks

Data poisoning is a serious threat to AI model security. It involves manipulating the training data to control the prediction behavior of AI models, a tactic that can be effective with models that use reinforcement learning or those that are re-trained daily with new inputs. This technique can be deployed in both white-box and black-box scenarios. A real-world application of this method could alter the operations of recommender systems across a range of platforms, including social media, e-commerce sites, video hosting services, and even dating apps. The process of data poisoning can be automated using advanced tools for commanding and controlling the attack, or it could involve manual efforts such as employing troll farms to inundate your competitor’s product with negative opinions. Hence, vigilance and robust security measures are essential to prevent such data poisoning threats.

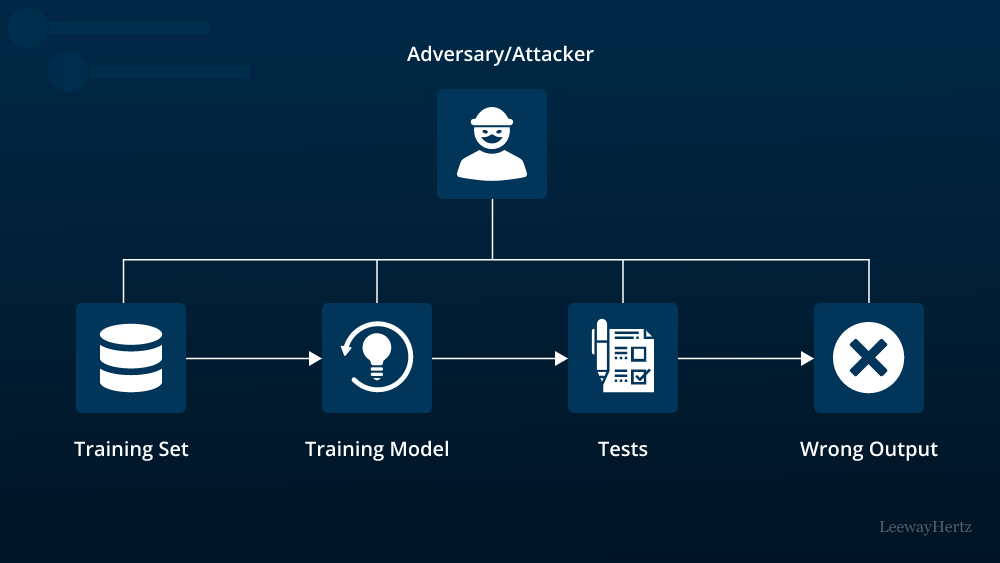

Adversarial attacks

Adversarial attacks represent a major challenge to the security of AI models. These attacks primarily occur when an externally accessible AI model, such as one deployed via machine learning as a service (MLaaS) or used in open systems like traffic cameras, is intentionally manipulated. The objective of the adversary could be to avoid detection or modify the model’s outcomes. These manipulations can vary in complexity: they might be as straightforward as donning an “adversarial t-shirt” to trick recognition systems, or as complex as altering social media content to sway election results. The attacks are further classified based on the attacker’s access to the model’s parameters: ‘white-box’ attacks entail complete access, whereas ‘black-box’ attacks occur without such access. This categorization highlights the necessity of robust defensive strategies to ensure AI model security.

Evasion attacks

Evasion attacks are targeted towards the operational phase of AI models. In this type of attack, the data is altered subtly yet effectively, leading to inaccurate output predictions, despite the input appearing authentic. To put it simply, the attacker tweaks the data processed by the model to mislead it, rather than manipulating the training data.

One practical example could be a loan application scenario where an attacker conceals their real country of origin using a Virtual Private Network (VPN). This could deceive a model that considers geographic location as a risk factor in loan approval.

Evasion attacks are particularly prominent in areas like image recognition. By carefully designing an image, attackers can confuse AI models to produce incorrect identification or classification. A well-known instance was presented by Google researchers, where they demonstrated how incorporating carefully crafted noise into an image can completely alter the model’s recognition. In this case, the noise layer was barely discernible to the human eye, yet it made the model misidentify a panda as a gibbon, proving the potential effectiveness of evasion attacks.

Model inversion attacks

Model inversion attacks pose a significant threat to the security and confidentiality of AI models. In these attacks, an adversary attempts to reveal sensitive information from the model’s outputs, even when they do not have direct access to the underlying data. Specifically, an attacker uses the model’s outputs and the access they have to some part of the input data to make educated guesses about other sensitive features of the input data that were used in training the model.

For instance, if the model is a classifier that has been trained on sensitive medical data, an attacker could use the model’s predictions along with demographic information to infer sensitive details about a patient’s health record. This threat is particularly significant in privacy-sensitive domains such as healthcare, finance, and personal services. Therefore, implementing privacy-preserving mechanisms and robust security measures are essential to protect AI models from model inversion attacks.

Model stealing

Model stealing poses a major security threat in the realm of AI. It involves unauthorized duplication of trained models, thereby bypassing the substantial investments made in data gathering, preprocessing, and model training.

Consider this scenario: A hedge fund invests heavily in developing a stock trading model, using proprietary data and devoting significant time and resources for its development. Similarly, a leading pharmaceutical company creates a complex model for targeted therapies, relying on confidential, proprietary data. The moment these AI models are exposed, they are susceptible to theft. Competitors can swiftly copy these models without shouldering any upfront costs, essentially reaping the benefits of the original company’s million-dollar investments within minutes. This not only erodes the original company’s competitive advantage but can also have financial repercussions.

Therefore, securing AI models against theft is crucial. Organizations need to implement robust measures to prevent model stealing, ensuring the safety of their investments, maintaining their competitive edge, and upholding the confidentiality of proprietary data.

Model extraction attacks

Model extraction attacks are a type of security threat where attackers aim to create a duplicate or approximation of a machine learning model by using its public API. In such attacks, the attackers do not get direct access to the model’s parameters or the training data. Instead, they manipulate the model’s input-output pairs to learn about its structure and functionality.

For example, an attacker might feed different inputs into a public-facing AI model and observe its outputs. By analyzing these input-output pairs, they could reverse-engineer or approximate the model’s internal workings. This allows them to create a “clone” of the model that behaves similarly to the original. This could enable them to use the model for their own purposes, bypass any usage restrictions or fees, or even uncover sensitive information about the original training data.

Model extraction attacks can be damaging because they allow unauthorized parties to benefit from the time, resources, and data that were invested into creating the original model. They could also lead to potential misuse of the extracted model or the exposure of sensitive data. Therefore, it’s crucial to implement protective measures, such as differential privacy, access rate limiting, and robustness against adversarial inputs, to guard against these kinds of attacks.

Prompt injection

Prompt injection attacks represent a significant security concern in the domain of language learning models, such as AI-driven chatbots and virtual assistants. These attacks involve manipulative tactics where attackers deceive users into revealing confidential information. This form of social engineering is escalating as cybercriminals discover innovative methods to exploit weak spots in AI technologies. A notable instance is the security flaw found in Bing Chat. This highlights the growing need for vigilance and proactive countermeasures in AI development.

Responsibly developing AI entails anticipating potential security threats from the beginning, including the risk of prompt injection attacks. While designing chatbots or other natural language processing systems, developers should integrate suitable security safeguards. These precautions can ensure the protection of users’ sensitive data, bolstering trust in AI solutions and promoting safer digital ecosystems.

Build secure AI solutions with LeewayHertz!

With a strong commitment to security, our team designs, develops and deploys robust AI solutions tailored to your unique business needs.

Effects of adversarial attacks and preventions

Adversarial attacks pose significant threats to the accuracy and financial stability of organizations utilizing machine learning models. By manipulating the input data, these attacks can substantially degrade the accuracy of ML models. For instance, a joint research by the University of Oxford and Microsoft Research uncovered that adversarial attacks could plummet the model’s accuracy by a staggering 90%, endangering user safety, security, and potentially leading to substantial financial losses. The financial toll of these adversarial attacks can be hefty.

In the face of these security threats, cryptographic techniques such as Secure Multi-Party Computation (SMPC), Homomorphic Encryption (HE), and Differential Privacy (DP) are being employed as defensive measures.

- SMPC is a method that enables the secure exchange of data amongst ML models, without exposing the content to the other participating parties, thereby safeguarding the model from adversaries aiming to manipulate data or pilfer confidential information.

- Homomorphic encryption, on the other hand, is an encryption form that allows for processing data while still encrypted. This method maintains data privacy and ensures accurate computation results, which is crucial in protecting ML models from adversaries manipulating the training data.

- Lastly, differential privacy is a cryptographic technique committed to preserving the privacy of training data. This technique guarantees the secure use of data, protecting it from compromise and ensuring its utilization aligns with its intended purpose. These methods are invaluable in preserving the integrity of ML models and shielding them from adversarial attacks.

Security scenarios and principles in AI

Understanding various security scenarios and implementing key principles is crucial to enhancing the safety of AI applications. This understanding helps data science teams adopt effective strategies to safeguard their systems.

Enhancing security during model building and execution

During the stages of model building and execution, strict security measures are paramount. This is especially critical when using open-source tools like TensorFlow or PyTorch. The use of these tools can potentially expose your system to exploits. While open-source communities are usually prompt at bug-fixing, the time taken to implement these fixes could still provide a window for hackers. To reinforce security, consider deploying authentication and encryption frameworks. For instance, Python’s hashlib can be used for encrypting models, resources, and directories. A robust practice is to deploy the models along with their hash values and authenticate the models at runtime before proceeding to production.

Security considerations during model deployment and updates

The process of model deployment and updates often follows a CI/CD pipeline process. Integrating security into this process is vital. For instance, implementing authentication checks during the CI/CD process is an essential step. Also, ensure that the Continuous Deployment (CD) is performed by individuals with appropriate access permissions. Adhering to these standard software engineering norms can greatly enhance the security during this phase.

Real-time model security

For real-time models, a heightened level of security is required due to their immediate output nature. Here, data science teams can leverage Python’s Pycrypto for encryption to enhance the security of model inputs. Furthermore, integrating spam detection and rate limiting mechanisms, along with robust authentication and registration procedures, can bolster the security for these real-time ML applications. This way, the applications are protected from potential attacks and manipulations.

How to secure AI models – techniques

AI model security encompasses a wide range of techniques designed to safeguard the confidentiality, integrity, and availability of both data and models. Here’s a brief overview of these techniques:

Data protection techniques

- Data encryption: This involves encoding data in a way that only authorized parties can access it. It is a vital method for protecting sensitive data from unwanted intrusions during both storage and transmission.

- Differential privacy: Differential privacy introduces “noise” to the data in a way that provides data privacy and security. It prevents attackers from learning sensitive information about individuals in the dataset while still allowing for useful computations on the data as a whole.

Model protection techniques

- Model hardening: Model hardening is the process of strengthening the model to make it more resistant to attacks. This involves measures like implementing secure coding practices, applying security patches, and protecting access to the model.

- Regularization: Regularization techniques are used to prevent overfitting in machine learning models, which can make them less vulnerable to adversarial attacks. L1 and L2 are examples of regularization techniques.

- Adversarial training: This method involves training the model on both normal and adversarial samples to enhance its resilience to adversarial attacks. By including adversarial examples in the training set, the model becomes better equipped to handle such instances in the future.

Threat detection techniques

- Intrusion Detection Systems (IDS): IDS are tools that monitor networks or systems for malicious activities or policy violations. These systems are essential for detecting potential threats or intrusions in real time, allowing for immediate action to mitigate the threat.

- Anomaly Detection: Anomaly detection techniques identify instances that deviate significantly from the norm. By recognizing anomalous behavior, these methods can quickly flag potential threats or attacks, prompting further investigation.

By integrating these techniques into their security protocols, organizations can better protect their AI models from various types of attacks, thus ensuring the confidentiality, integrity, and availability of their data and models.

Securing AI models based on the above techniques is a multifaceted process, primarily involving two distinct but complementary approaches: Prevention and detection.

- Prevention technique: Prevention focuses on proactive measures to safeguard AI models from adversarial attacks, data breaches, and unauthorized access. This includes techniques such as secure model development and execution, encryption of sensitive data, and implementing robust authentication mechanisms. Employing secure coding practices and using verified libraries also falls under this category. Another aspect of prevention is ensuring data privacy during model training and deployment stages, often achieved through techniques such as differential privacy. Prevention strategies aim at blocking threats before they manifest, thereby securing the integrity of the AI model and the confidentiality of the data.

- Detection technique: Even with robust preventive measures in place, some security threats might still manage to infiltrate the system. This is where the detection techniques come in. Detection revolves around identifying abnormal behaviors, breaches, and attacks on the AI model after they have occurred. Techniques such as model watermarking can be used to trace any unauthorized copies of the AI model, asserting ownership and facilitating legal recourse. Monitoring traffic to the inference API and observing for anomalies can detect potential model extraction attacks. Additionally, using tools that provide logging, monitoring, and alerting capabilities can swiftly flag and report any suspicious activity, enabling timely response and mitigation.

Both prevention and detection techniques are crucial components of a comprehensive AI model security strategy. The former helps avoid potential security issues, while the latter ensures swift identification and response to any security breaches that occur, thereby minimizing their impact. Balancing these approaches provides a robust defense mechanism for AI models, ensuring their safe and efficient functioning.

Securing AI models: The prevention approach

Securing AI models requires strategic planning and well-defined techniques. The preventive approach seeks to mitigate security threats before they materialize. Here is how:

- Re-training the model: In certain circumstances, it’s possible to train a new model from scratch using different architecture or parameters, achieving accuracy that’s comparable to the original model. This approach, coupled with blue-green deployment mechanisms to route some adversarial traffic to the new model, can significantly reduce the effectiveness of a cloned model. In practice, it could lower the F1 score of the copied model by up to 42%.

- Employing differential privacy: Differential Privacy (DP) serves as a shield against attacks aiming to duplicate the decision boundary of a model. It ensures the outputs for all samples in the boundary-sensitive zone are almost indistinguishable by introducing perturbations to these outputs via a Boundary Differential Privacy Layer (BDPL).

- Input and output perturbations: Perturbing input and output probabilities to maximize the deviation from the original gradient can be a successful preventative technique. For instance, employing the reverse sigmoid activation function as a defense can produce the same probability for different logic values, leading to incorrect gradient values and complicating the extraction process.

- Model modification: Another prevention method focuses on altering the model’s architecture and/or parameters rather than disturbing the data. This approach is particularly applicable when the architecture is novel and offers certain benefits over existing ones. The primary goal is to safeguard the architecture itself, not a specific trained instance of the architecture or training hyperparameters. This can prevent an attacker from applying this architecture to a different domain, enhancing the overall security of the AI model.

Build secure AI solutions with LeewayHertz!

With a strong commitment to security, our team designs, develops and deploys robust AI solutions tailored to your unique business needs.

Securing AI models: Key detection techniques

- Detection through: Watermarking is a widely recognized technique used in various industries, like photography and currency production, to establish and assert ownership. In the context of AI models, watermarking involves embedding unique, known data such as specific parameters, weights, or detection capabilities for unique features. While this method doesn’t prevent theft of the model, it can serve as strong evidence to assert your ownership in legal disputes, should your model be stolen and used by others.

- Monitoring-based detection: A robust Logging, Monitoring, and Alerting (LMA) framework, such as an open-source Cloud Observability Service (COS), can significantly aid in the detection of adversarial attacks on your models. This can be achieved by scrutinizing the traffic towards your inference API and identifying anomalies or specific patterns often used in model extraction attacks. Many open-source observability tools come pre-configured with alerting rules to assist in this process, making it a potent tool to protect against unauthorized access or attacks on your models.

Mitigating the risk of prompt injection

To mitigate the risk of prompt injection attacks in Large Language Models (LLMs), several strategies can be employed. End-to-end encryption is one effective measure, ensuring all communication between users and chatbots remains private and impenetrable by malicious entities. Coupled with multi-factor authentication, requiring users to confirm their identity through multiple means, this can significantly enhance security when accessing sensitive data.

Developers should also prioritize routine security audits and vulnerability assessments for their AI-driven chatbots and virtual assistants. Such assessments can unveil potential security weaknesses and provide the opportunity to address them promptly, fortifying the system’s overall security.

Adversarial training

Adversarial training serves as a crucial strategy in enhancing the security of AI models. This technique involves teaching the model to detect and correctly classify adversarial examples – instances that are intentionally designed to deceive the model. Taking the image recognition model as an instance, the incorrectly labeled image of a panda would be treated as an adversarial example.

The challenge, however, lies in initially identifying these adversarial examples, as they are crafted to be deceptive. Consequently, research in this sphere focuses not only on defending against such instances but also on automating their discovery. Tools like IBM’s Adversarial Robustness Toolbox simplify this process by aiding in adversarial training, thereby fortifying AI models against these stealthy attacks.

Switching models

Another approach in bolstering AI model security involves employing an array of models within your system, a technique known as model switching. Instead of relying on a single model to make predictions, the system intermittently changes the model in use. This dynamism generates a moving target, complicating the attacker’s job as they can’t ascertain which model is operational at a given time.

For an attack to be fruitful, they might need to compromise every model in use – an exponentially more challenging task compared to exploiting a single model. Why is that? Crafting malicious data (poisoning) or finding adversarial examples that mislead an entire set of models is considerably more laborious and complex. This higher level of difficulty acts as an additional layer of protection, making your AI system more robust and resilient against potential attacks. Thus, model switching serves as an effective strategy in the arsenal of AI model security techniques.

Generalized models

Another strategy in enhancing AI model security involves the application of generalized models. Unlike the model switching approach, this tactic doesn’t alternate between multiple models but instead amalgamates them to forge a single, generalized model. Consequently, each contributing model has a say in the final prediction made by the generalized model.

This is an important defense mechanism because an adversarial example that can deceive one model might not be able to deceive the entire ensemble. To effectively fool a generalized model, an attacker would have to find an adversarial example that confounds every individual model contributing to it – a much more demanding task.

In essence, a generalized model is akin to a democratic system where every individual model votes to reach the final decision, mitigating the risk of a single compromised or fooled model swaying the outcome. As a result, generalized models are notably more robust and resistant to adversarial examples, providing an elevated level of security in AI applications.

How to secure generative AI models?

The rapid emergence of ChatGPT marks a significant development in the broader generative AI transformation, characterized by advancements in large language models and foundational AI technologies. This transformation is enhancing AI’s capabilities across various domains, including image, audio, video, and text, enabling machines to produce creative and valuable content on demand.

This technological leap presents vast opportunities across all industries, exciting both business leaders and employees. However, it’s crucial to be aware of associated business and security risks, such as data leakage, intellectual property theft, malicious content, misuse of AI technologies, widespread misinformation, copyright issues, and the reinforcement of biases. To address these risks effectively, businesses must develop and implement a robust security strategy from the outset, ensuring generative AI is utilized securely and responsibly across their operations.

Securing data in generative AI environments

Establishing a secure environment to reduce data loss risks is crucial, especially with the use of generative AI applications like ChatGPT. This risk, stemming from inadvertent data sharing by employees, is tangible and needs addressing. Proactive technical measures can significantly mitigate these risks. For instance, businesses can control data flow more effectively by replacing standard interfaces with custom-built front-ends that directly interact with the underlying AI APIs. Additionally, implementing sandboxes for data isolation helps maintain data integrity and reduce bias. It’s essential to keep sensitive data under strict organizational control, possibly in secure enclaves, while less critical data might be shared with external hosted services. Integrating “trust by design” is key to creating and maintaining these secure systems.

Begin employee training immediately

The rapid adoption of ChatGPT highlights a unique challenge in the business world. Employees are increasingly exploring this technology through social media and other online platforms, which can lead to misinformation and confusion about its proper use. Moreover, the easy accessibility of these apps on personal devices introduces risks of unauthorized “shadow IT” and cybersecurity vulnerabilities. To address these issues, it’s crucial to initiate comprehensive training programs for the workforce, focusing on the correct and secure use of such technologies.

Maintain data transparency in generative AI

Acknowledging and transparently addressing the risks linked to the training data is vital for businesses using or modifying external foundation models for generative AI. Poor quality data can negatively impact the AI’s output and the company’s reputation. It’s crucial for businesses to scrutinize the data sources for biases or inaccuracies, as the AI’s output reflects the quality of its training data. While eliminating these risks is challenging, transparency about data usage and the AI’s training process is key. Establishing clear guidelines about data handling, bias, privacy, and intellectual property rights is essential for informed and responsible usage of generative AI technologies.

Combine human insight and AI for enhanced security

It’s crucial to use AI responsibly to benefit everyone. Integrating human oversight into generative AI applications enhances their overall robustness. Implementing a ‘human in the loop’ system adds an extra layer of security and helps verify the AI’s responses. Using reinforcement learning with human feedback, where the AI model is refined based on human input, further strengthens this approach. Additionally, employing a secondary AI to monitor and evaluate the primary AI’s responses facilitates continuous improvement and safeguards the system against potential misuse.

Acknowledge and address new risks in AI models

AI models can be vulnerable to various cyber attacks, including the increasingly prevalent ‘prompt injection’ attack. In such attacks, attackers manipulate the AI model to produce inaccurate or harmful responses by embedding misleading instructions, such as using hidden text in prompts. These methods can circumvent the security measures embedded in the system. Consequently, it’s vital for business leaders to be aware of these emerging threats and to develop comprehensive security strategies to protect their AI models from such vulnerabilities.

Ethical considerations in AI security

The rapid advancement of artificial intelligence in recent years has brought about transformative changes across various sectors, reshaping the way we live, work, and interact with technology. As AI becomes increasingly integrated into our lives, it raises a plethora of ethical concerns that need to be addressed with caution. The development and implementation of AI technologies present profound challenges concerning their influence on society, individual rights, and the future of humanity. These challenges range from data privacy and bias to transparency and accountability. Here are some key ethical issues surrounding AI and the necessity for responsible development and deployment.

Central to AI research is the use of massive volumes of data to train algorithms and models. Ensuring the privacy and security of individuals’ sensitive information is critical to prevent it from falling into the wrong hands. Ethical AI developers must adhere to strict data protection practices and proactively defend against data breaches, unauthorized access, and misuse of personal information.

Algorithmic bias: AI systems are as accurate as the data on which they are trained. Biases in training data can lead to skewed outcomes, perpetuating societal prejudices. Developers must meticulously examine and eliminate biases in datasets to provide equitable outcomes for all users, irrespective of race, gender, age, or other protected characteristics, to create ethical AI.

Explainability and transparency: AI algorithms can be complex, rendering it challenging to comprehend how they arrive at certain decisions. The “black-box” nature of some AI models raises concerns about transparency and accountability. Ethical AI development requires the establishment of interpretable models that can elucidate their reasoning and offer transparent insights into their decision-making processes.

Human oversight and control: While AI can automate numerous tasks and processes, maintaining human oversight and control is imperative. Ethical AI should not fully replace human judgment but should supplement human decision-making, empowering individuals with the ability to intervene or override AI recommendations when necessary.

Potential job displacement: The swift adoption of AI in the workforce raises apprehensions about potential job displacement and economic inequality. Ethical considerations necessitate that organizations undertake measures to reskill and upskill workers affected by AI implementation, ensuring a just transition for the workforce.

Informed consent: AI applications often rely on user data to provide personalized services. Ethical developers must obtain informed consent from users before collecting and utilizing their data, respecting individual autonomy and privacy rights.

Safety and reliability: In sectors such as autonomous vehicles and medical diagnostics, the safety and reliability of AI systems are paramount. Ethical AI developers must prioritize safety measures, rigorous testing, and fail-safe mechanisms to prevent potential harm to users and society.

Dual use of AI technology: AI technology can be used for both beneficial and potentially harmful purposes. Ethical considerations call for developers to be aware of AI’s dual-use nature and consider the broader societal implications of the technologies they create.

Long-term societal impact: AI development should be guided by considerations of its long-term societal impact. Ethical developers must assess potential risks and unintended consequences, considering not only immediate benefits but also the wider implications for humanity, the environment, and future generations.

Regulatory compliance: Ethical AI development aligns with existing laws and regulations concerning privacy, discrimination, safety, and other relevant areas. Developers must ensure compliance with relevant laws and consider ethical guidelines to fill gaps where regulations may be lacking.

As AI continues to shape our world, ethical considerations become ever more critical. Responsible AI development necessitates a commitment to data privacy, fairness, transparency, and societal impact. It is the collective responsibility of developers, policymakers, and stakeholders to ensure that AI technologies are designed and deployed in ways that benefit humanity, uphold individual rights, and foster a more equitable and sustainable future for all. By embracing ethical AI principles, we can harness the immense potential of AI while safeguarding the values that define our humanity.

AI model security in different industries

AI model security requirements and challenges differ significantly across various industries due to their distinct operational environments and regulatory landscapes. Here is a detailed look at how these vary across key sectors:

- Healthcare: In healthcare, AI models are used for diagnostics, treatment recommendations, and patient data analysis. Security challenges include protecting sensitive patient data under HIPAA regulations, ensuring the accuracy of diagnostic tools, and guarding against malicious manipulations that could lead to misdiagnoses or inappropriate treatments.

- Finance: The finance sector utilizes AI for risk assessment, fraud detection, and algorithmic trading. Security priorities here involve safeguarding financial data, ensuring transactional integrity, and maintaining compliance with stringent financial regulations like GDPR and SOX. There is also the challenge of preventing AI models from being exploited in market manipulations.

- Automotive: In the automotive industry, especially in autonomous vehicles, AI model security ensures navigation systems’ safety and reliability. Security measures must protect against hacking or tampering that could lead to accidents or misuse of the vehicles.

- Retail: AI in retail is used for customer service (like chatbots), inventory management, and personalized marketing. Security challenges include safeguarding customer data, ensuring that recommendation systems are not manipulated, and maintaining customer privacy.

- Manufacturing: AI models in manufacturing are used for predictive maintenance, supply chain optimization, and quality control. Security concerns include protecting intellectual property, ensuring the integrity of automation systems, and defending against industrial espionage.

- Energy and Utilities: In this sector, AI is crucial for grid management and predictive maintenance of infrastructure. Security concerns focus on protecting critical infrastructure from cyberattacks that could disrupt power supply and ensuring the reliability of predictive models for energy distribution.

- Telecommunications: AI in telecom is used for network optimization and customer service. Security involves safeguarding customer data, ensuring the integrity of communication networks, and protecting against service disruptions.

- Education: AI applications in education include personalized learning and administrative automation. Security challenges involve protecting student data privacy and ensuring that educational content delivered by AI is accurate and unbiased.

- Agriculture: In agriculture, AI assists in crop monitoring and yield prediction. Security concerns include protecting farm data and ensuring the accuracy of AI predictions, which are vital for food security.

Each of these industries faces distinct AI model security challenges, necessitating industry-specific strategies to address them effectively.

Best practices in AI model security

Implementing robust data security protocols

Securing AI models begins with safeguarding the data they interact with. This involves the encryption of sensitive data using sophisticated algorithms like AES-256, secure storage of data, and utilizing secure transmission channels. Additionally, stringent access controls should be established to limit who can access sensitive data. For instance, if a company uses an AI model for customer service, the model may need access to sensitive customer data, such as contact information and purchase history. Securing this data with robust encryption will ensure it remains unreadable if intercepted, without the correct encryption key.

Secure and private AI design principles

In the context of AI model security, secure and private AI design principles involve data minimization, privacy by design and by default, secure data handling, user consent and transparency, anonymization and pseudonymization, and the incorporation of privacy-preserving technologies. These principles focus on minimizing data usage, integrating privacy safeguards from the start, handling data securely throughout its lifecycle. This ensures user understanding and consent for data usage, making it difficult to link data to individuals, and using technologies like differential privacy and homomorphic encryption to protect data while allowing analysis. This integrated approach emphasizes proactive security measures and prioritizes privacy as an inherent aspect of AI system design.

Regular monitoring and auditing

Routine auditing and monitoring of AI models can help identify potential security breaches and address them promptly. This includes observing model outputs, tracking user activity, and frequently updating security policies and procedures to adapt to changing threat landscapes.

Employ multi-factor authentication

The use of multi-factor authentication, which requires more than one method of authentication from independent categories of credentials, can provide an additional layer of security. It reduces the risk of unauthorized access to sensitive information, as it would require an attacker to compromise more than just a single piece of evidence. Multi-factor authentication methods can range from passwords and security tokens to biometrics like fingerprints or facial recognition.

Prioritize user education

One of the most potent defenses against security threats is an informed user base. It’s crucial to educate users about potential risks associated with AI models, such as phishing attacks or the misuse of deepfakes. Regular training sessions can help users identify potential security threats and understand the correct procedures for reporting these to relevant authorities.

By integrating these best practices into their security strategy, organizations can enhance the protection of their AI models and the sensitive data they handle, reducing the potential for breaches and unauthorized access.

Developing incident response plans

Creating an incident response plan is a critical aspect of best practices in AI model security. This proactive strategy involves identifying potential threats, preparing detection mechanisms, and defining steps to respond, investigate, and recover from any security incidents efficiently. It also entails conducting post-incident analyses to extract lessons and improve the plan continuously. This practice aims to limit damage, reduce recovery time and costs, and ensure the preservation of trust among stakeholders in the event of a security breach or cyberattack. Regular updates to the plan and training employees to respond effectively are essential components of an effective incident response strategy.

The future of AI model security

Artificial intelligence is rapidly transforming the technological landscape, enhancing efficiency and precision across numerous sectors. However, the rise of AI and machine learning systems has also introduced a new set of security threats, making the development of advanced security techniques for AI systems more critical than ever. Here are some emerging security techniques in the field:

Adversarial machine learning

This approach involves understanding the vulnerabilities of AI systems to craft inputs that deceive the system, known as adversarial attacks. By identifying these vulnerabilities, developers can reinforce their systems against potential exploits. Adversarial machine learning is a specialized field within machine learning that focuses on teaching neural networks to identify and counteract manipulative data inputs or malicious activities. The objective is not solely to classify ‘bad’ inputs, but to proactively uncover potential weak spots and design more adaptable learning algorithms.

Privacy-preserving machine learning

As machine learning becomes increasingly pervasive, privacy protection has emerged as a critical concern. Risks arise from data and model privacy issues, including data breaches, misuse of personal data, unauthorized third-party data sharing, and adversarial attempts to exploit machine learning models’ weaknesses. To mitigate these threats, several privacy-preserving machine learning techniques have been developed. These include differential privacy, which adds noise to data to protect individual privacy, homomorphic encryption, which allows for computations on encrypted data, and federated learning, a decentralized approach where multiple devices train a model without sharing raw data. Each technique has its advantages and challenges, and they all continue to be areas of active research in privacy-preserving machine learning.

Robustness testing

Robustness testing is a type of software testing that assesses a system’s ability to handle abnormal or unexpected conditions, ensuring its reliability and reducing the risk of failure. It uncovers hidden bugs, improves software quality, enhances performance across platforms, and ultimately, boosts user confidence by providing a consistently stable performance.

A variety of testing methods are employed to ensure robustness, such as functional testing to examine all software functionalities, regression testing to ensure software modifications don’t result in unexpected issues, load testing to check software performance under various loads, and fuzz testing to identify vulnerabilities. Other tests include mutation testing that checks for coding or input flaws, black-box testing that focuses on the software’s external behavior, security testing to verify resistance to attacks, use case testing to examine functionality from the user’s perspective, negative testing to test behavior under invalid inputs, and stress testing to evaluate workload capabilities under heavy load.

Robustness testing scenarios include handling large data, unexpected inputs and edge cases, system failures and recovery, sustained usage, and compatibility with various environments.

While automation of these tests can be beneficial in terms of time, effort, and resources, it’s crucial to choose the right robustness testing tools, such as Testsigma, JMeter, Selenium, Appium, or Loadmeter.

The benefits of robustness testing include ensuring software reliability, enhancing user experience, reducing maintenance costs, preventing data corruption, and increasing security.

Explainable AI (XAI) for transparency

Explainable AI (XAI) brings transparency to AI models by providing insights into the decision-making process. This is achieved through model visualization, feature importance analysis, natural language explanations, and counterfactual explanations. Visualization illustrates decision-making processes, feature analysis highlights influential variables, natural language explanations articulate decision pathways, and counterfactual explanations offer alternative scenarios. The transparency levels in AI systems range from ‘Black Box,’ offering no insight, through ‘Gray Box’ with limited visibility, to ‘White Box’ providing complete transparency. The needed transparency level varies based on the specific application of the AI system.

Secure multi-party computation

Secure Multi-party Computation (SMPC) is a cryptographic approach that ensures data privacy by enabling multiple parties to jointly compute a function using their private inputs. In SMPC, personal data is split into smaller, encrypted portions, each dispatched to an independent server. Thus, no single server holds the entire data. The aggregated, encoded data needs to be combined to reveal the ‘secret’ or personal data. Furthermore, computations can be performed on this data without disclosing it, by requiring each server to process its fragment of data individually.

Blockchain for AI Security

Blockchain technology can significantly bolster the security of AI models by leveraging its key features like decentralization, immutability, traceability, smart contracts, data privacy, and identity verification. The decentralization aspect eliminates a single point of attack, increasing the resilience of AI models against breaches. The immutability of blockchain ensures that the data used in training AI models and the models themselves cannot be illicitly altered, maintaining the integrity of the models. Through blockchain, every alteration or decision made by the AI model can be audibly traced, providing unparalleled transparency and accountability. The use of smart contracts automates the enforcement of data access and usage rules, preventing unauthorized or unethical use of AI models. Furthermore, blockchain allows secure multi-party computation, ensuring data privacy during AI model training by keeping the data decentralized. Lastly, blockchain’s secure identity verification enhances the safety of AI systems by preventing unauthorized access. Therefore, integrating AI with blockchain can establish a secure, transparent, and decentralized AI environment.

Quantum cryptography

Quantum cryptography provides a significant boost to AI model security through its unbreakable encryption methods based on quantum mechanics principles. Its ability to use quantum bits, which exist in multiple states simultaneously, adds a higher degree of complexity to encryption, increasing protection against potential hackers. Key to this is Quantum Key Distribution (QKD), which securely transmits quantum keys between parties, alerting them to any secret attempts due to the quantum uncertainty principle. Consequently, quantum cryptography can securely encrypt sensitive data used in AI models and protect the models themselves from unauthorized access or manipulation. Additionally, with the looming threat of quantum computers breaking current cryptographic methods, quantum cryptography’s robust encryption will be a vital tool in securing AI models in the future.

AI security techniques are still emerging, and this is a dynamic field of research as new threats and vulnerabilities continue to appear. As AI technology continues to evolve, it’s likely that we will see even more innovative security techniques developed to protect these advanced systems.

Endnote

At a time when AI and machine learning systems have become intrinsic to our everyday lives, securing these AI models is of paramount importance. From personalized marketing recommendations to high-stakes fields such as healthcare, finance, and defense, the need for robust AI model security is ubiquitous. The integrity, confidentiality, and availability of these systems are critical in ensuring their successful application, protecting sensitive information, and maintaining the trust of the public.

The potential for misuse or malicious attacks grows as we increasingly rely on AI systems to make vital decisions. Therefore, a strong security framework that safeguards against such threats while enabling the beneficial use of AI is necessary. Robustness testing, secure multi-party computation, and an emphasis on transparency through techniques like Explainable AI are all critical components of this framework.

Moreover, as AI technology continues to evolve, it’s also necessary to anticipate and prepare for future security challenges. Technological advancements like quantum computing will invariably change the landscape of AI security. Quantum cryptography, for instance, holds the promise of theoretically unbreakable encryption, bolstering the security of data transmission among parties.

As we move into the future, it’s critical for researchers, developers, and policymakers alike to prioritize security in the design and implementation of AI systems. Embracing innovations such as quantum cryptography, blockchain technology, and explainable AI will be essential in creating a safer, more reliable, and more trustworthy AI environment for all.

Ready to secure your AI systems? Trust our experts to safeguard your technologies. Contact us today for a comprehensive AI security consultation to protect your future.

Start a conversation by filling the form

All information will be kept confidential.

Insights

How to build a private LLM?

Language models are the backbone of natural language processing (NLP) and have changed how we interact with language and technology.

How to build an AI-powered chatbot?

A chatbot is an artificial intelligence (AI) program that simulates human conversation by interacting with people via text or speech.

Redefining customer experience: The role of AI in customer support

The synergy between AI and customer service has opened new avenues for efficient communication, personalized service delivery, and valuable insights into customer behavior.