How to build an enterprise AI solution?

In an ever-changing digital landscape, businesses need a competitive edge to stay ahead of the curve. They must automate their processes and operations to improve decision-making and increase efficiency, productivity, and profitability. Enterprise AI, a sub-domain of enterprise software, helps businesses achieve that.

Enterprise AI solutions are rapidly transforming how enterprises function. With the ability to process large volumes of data and automate routine tasks, AI-based enterprise-level solutions are helping businesses enhance operational efficiency, reduce costs, and improve decision-making. For instance, AI-powered chatbots and other customer service tools improve the customer experience, while predictive maintenance systems reduce downtime and maintenance costs. The insights generated by AI support better decision-making and can help enterprises gain a competitive advantage.

This article discusses what an enterprise AI solution is, the four major advancements that set the stage for enterprise AI solutions, and some potential benefits of building an enterprise AI solution. Finally, we will discuss eight detailed steps to build an enterprise AI solution.

- What is an enterprise AI solution?

- Major advancements that laid the road for enterprise AI applications

- Enterprise AI architecture: The five layer model

- The strategy to adopt while building an enterprise AI solution

- Core principles to follow while building an enterprise AI solution

- Why build an enterprise AI solution?

- How to build an enterprise AI solution?

- How to implement an enterprise AI solution?

What is an enterprise AI solution?

An enterprise AI solution is an AI-based technology that is designed and implemented to solve specific business challenges or streamline business processes within an enterprise or organization. It involves the application of machine learning, natural language processing, computer vision, and other AI techniques to develop intelligent systems that can automate tasks, analyze data, and provide insights.

Enterprise AI solutions can be customized to meet the unique needs of different organizations, and they can be used in various industries, including healthcare, finance, manufacturing, retail, and more. They can be used to improve customer experience, increase operational efficiency, reduce costs, and help organizations make data-driven decisions. Now, let’s also discuss what an enterprise AI application is.

An enterprise AI application is a software application that leverages artificial intelligence (AI) technologies to improve business processes and decision-making within an enterprise setting. Some common examples of enterprise AI applications include customer service chatbots that understand and respond to customer inquiries in real-time, fraud detection systems that analyze transaction data and identify potential fraudulent activity, predictive maintenance systems, and supply chain optimization tools that optimize inventory levels and reduce transportation costs.

Major advancements that laid the road for enterprise AI solutions

The significance of enterprise AI is hard to overstate. As stated earlier, businesses can use enterprise AI solutions to improve operational efficiency, automate routine tasks, and provide better customer experiences. Businesses that embrace it are therefore likely to be well positioned for future success. But what were the driving forces behind this breakthrough? A closer look at the key technological advancements that underpin enterprise AI development gives a well-rounded perspective on the technology’s capabilities and potential impact.

The first of these advancements is the emergence of machine learning (ML) as a subfield of AI. ML enables machines to learn from data to perform tasks without being explicitly programmed to do so. Instead of following a fixed list of rules, ML systems learn patterns from historical training data. As a result, they are highly flexible and can adapt to changing conditions and business requirements as their underlying training data evolves. ML systems have outperformed rules-based software in a variety of business use cases, such as medical diagnostics, operational reliability, customer churn detection, and demand forecasting.
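To make the contrast with rules-based software concrete, here is a minimal sketch that learns a churn cut-off from labeled history instead of hard-coding it. The single feature (days since last login), the data, and the function names are hypothetical. Real systems would use many features and a proper learning algorithm.

```python
# Hypothetical labeled history: (days_since_last_login, churned)
history = [(2, False), (5, False), (9, False), (14, True),
           (21, True), (30, True), (7, False), (18, True)]


def rule_based(days: int) -> bool:
    # A hand-written rule: someone guessed that 28 days signals churn.
    return days > 28


def learn_threshold(data) -> int:
    """Pick the cut-off that classifies the most training examples correctly."""
    best_t, best_correct = None, -1
    for t in sorted({d for d, _ in data}):
        correct = sum((d > t) == churned for d, churned in data)
        if correct > best_correct:
            best_t, best_correct = t, correct
    return best_t


threshold = learn_threshold(history)  # re-run this when new data arrives


def learned(days: int) -> bool:
    return days > threshold
```

On this toy data, the learned cut-off classifies every example correctly, while the guessed rule misses three churners. Re-running `learn_threshold` on fresh data is what lets the system adapt as conditions change.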

The second major advancement is the shift from paper-based records to vast amounts of digital data across enterprises. The success of enterprise AI will continue to depend on the quantity, quality, and scope of the data that firms can access. Many AI solutions rely on supervised learning, which requires accurately labeled data. When unsupervised learning is used for anomaly detection, more data generally leads to more accurate results. Because AI and ML systems learn from historical data, their performance typically improves substantially with larger and more diverse datasets. With the rapid growth in available data volumes and the expanding variety of data sources, AI and machine learning systems are well placed to succeed across enterprise-grade use cases.

The third advancement is the widespread adoption of IoT sensors across all major industries, from energy, infrastructure, manufacturing, and telecommunications to logistics, retail, and healthcare. With vast numbers of sensors deployed across value chains, organizations can now gain real-time visibility into operations, supply chains, and customer service. Monitoring and acting on this volume of real-time data manually or with rules-based software is difficult, but enterprise AI solutions make it feasible. This capability can unlock significant benefits in use cases such as predictive maintenance, quality control, operational safety, logistics management, and fraud monitoring.
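As an illustration of acting on real-time sensor data automatically, the sketch below flags readings that deviate sharply from a rolling baseline. A rolling z-score is used here as a simple stand-in for the ML-based anomaly detection the text describes. The class name, window size, and 3-sigma limit are assumptions, not a recommended configuration.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flags readings that sit more than `z_limit` standard deviations from a rolling mean."""

    def __init__(self, window: int = 20, z_limit: float = 3.0):
        self.history = deque(maxlen=window)  # most recent normal readings only
        self.z_limit = z_limit

    def update(self, value: float) -> bool:
        """Return True if `value` looks anomalous compared with recent readings."""
        if len(self.history) >= 2:
            mu, sigma = mean(self.history), stdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.z_limit:
                # Keep anomalies out of the baseline so one spike doesn't mask the next.
                return True
        self.history.append(value)
        return False
```

For example, feeding a stream of temperature readings around 20 °C with a single 35 °C spike flags only the spike. A production system would typically route that flag to a maintenance workflow.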

The fourth major advancement is the emergence of the elastic cloud. AI and ML systems learn and improve their decision-making through training. Training an ML model means finding the set of model weights and parameters that best represents the relationship between the inputs and outputs observed in the training data. Model performance often improves with larger training datasets and more training iterations, but the compute and storage resources needed for training can then become substantial. Since a single enterprise AI solution may include thousands of ML models, each requiring regular retraining, these resource needs can grow rapidly. Elastic, cloud-based, distributed computing and storage that scales up and down on demand at relatively low cost addresses this model training challenge and is a major enabler for enterprise AI solutions. Managed cloud services for running AI applications also remove the need for organizations to manage the underlying infrastructure, freeing up IT resources for other tasks. Some offerings, such as Elastic Cloud, integrate with the Elastic Stack, which provides data analysis and visualization tools that make it easier to gain insights from AI applications and make data-driven decisions.
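To show what "finding an optimal set of model weights" means in practice, here is a minimal sketch. It fits a one-feature linear model with batch gradient descent on mean squared error. The data is synthetic (generated from y = 2x + 1), and the learning rate and epoch count are illustrative choices.

```python
def train_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]   # synthetic data from y = 2x + 1
w, b = train_linear(xs, ys)    # converges close to w = 2, b = 1
```

Every epoch touches every training example, so cost grows with both dataset size and the number of iterations. That is why elastic compute matters once thousands of models are retrained regularly.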

Enterprise AI architecture: The five layer model

The enterprise AI architecture is organized into five layers, each serving a distinct function and developed independently. This approach allows each layer to connect with the organization’s existing technology stack while maintaining flexibility across different vendors and build-versus-buy preferences. The layers are as follows:

AI infrastructure management

The primary role of this layer is to optimize infrastructure across multiple service providers, ensuring ample processing power for model training. The models trained on it are typically developed by cross-functional teams and are relevant to various enterprise units. The infrastructure layer manages data storage, hosts applications (both on-premises and cloud-based), trains AI models, and executes inference. It also hides the complexity of working across public and private cloud systems. The key users are teams that configure and operate infrastructure from multiple AI vendors, and machine learning (ML) operations teams.

AI engineering lifecycle management (ML Operations)

This layer standardizes the AI lifecycle while supporting a variety of development tools and frameworks. It collects AI artifacts (versions and metadata) across models for future use. It uses open-source and third-party frameworks and is built on a microservices-based, cloud-native architecture. This layer helps data scientists use ML tools to train and deploy models at enterprise scale. It also helps testers validate AI artifacts and IT operations teams configure and deploy AI policies.
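The idea of collecting artifacts with their versions and metadata can be sketched as a tiny in-memory model registry. The class and field names are hypothetical. Production MLOps tools, such as MLflow's model registry, provide this with persistent storage, access control, and stage transitions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ModelVersion:
    name: str
    version: int
    framework: str
    metrics: dict
    registered_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class ModelRegistry:
    """Hypothetical in-memory registry keeping every version of every model with its metadata."""

    def __init__(self):
        self._models: dict[str, list[ModelVersion]] = {}

    def register(self, name: str, framework: str, metrics: dict) -> ModelVersion:
        versions = self._models.setdefault(name, [])
        entry = ModelVersion(name, len(versions) + 1, framework, metrics)
        versions.append(entry)
        return entry

    def latest(self, name: str) -> ModelVersion:
        return self._models[name][-1]

    def history(self, name: str) -> list[ModelVersion]:
        return list(self._models[name])  # copy, so callers can't mutate the registry
```

Because each version keeps its framework and metrics, testers can compare a new model against earlier ones before it is promoted.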

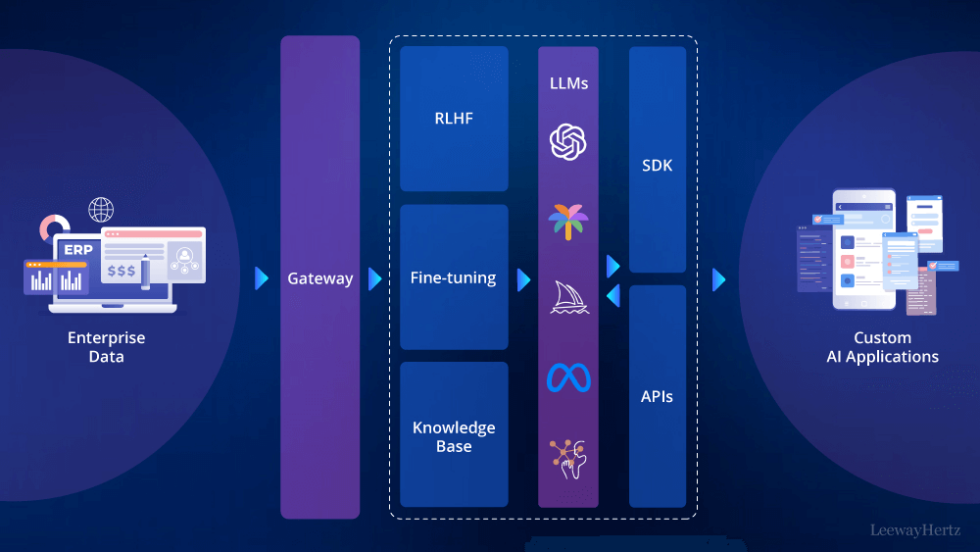

AI services (APIs)

This layer provides a standardized API and catalog through which users access AI services. With a microservices-based design, each API offers specific, well-defined functions. One AI service can be invoked from multiple applications, and the model behind a service can be switched without changing the applications that call it. This layer serves as a catalog of enterprise AI requirements for internal data scientists, third-party AI vendors, and hyperscalers. Designers, developers, and AI monitoring teams are its primary users.
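The benefit of a stable service API in front of a replaceable model can be sketched in a few lines. The sentiment task, both toy models, and the `SentimentService` class are hypothetical. In a real deployment the service would sit behind an HTTP endpoint rather than a Python method.

```python
from typing import Protocol


class SentimentModel(Protocol):
    def predict(self, text: str) -> str: ...


class KeywordModel:
    """Baseline model: a single hard-coded keyword."""
    def predict(self, text: str) -> str:
        return "negative" if "refund" in text.lower() else "positive"


class LexiconModel:
    """Hypothetical replacement model with a larger word list."""
    NEGATIVE = {"refund", "broken", "late", "angry"}

    def predict(self, text: str) -> str:
        words = set(text.lower().split())
        return "negative" if words & self.NEGATIVE else "positive"


class SentimentService:
    """The API callers depend on; the model behind it can change."""

    def __init__(self, model: SentimentModel):
        self._model = model

    def swap_model(self, model: SentimentModel) -> None:
        self._model = model

    def analyze(self, text: str) -> dict:
        return {"text": text, "sentiment": self._model.predict(text)}
```

Applications keep calling `analyze()` with the same input and output shape, while the team behind the service upgrades the model independently.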

AI control center

The AI control center is designed to ensure consistency and optimization of AI systems across business functions. It collects, reports, and measures AI metrics against business KPIs, allowing business teams to understand model usage and intervene if necessary. This layer is used by IT support teams, data scientists, and regulatory compliance teams.
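Measuring AI metrics against business KPIs can be as simple as comparing reported values with agreed targets and raising alerts for intervention. The KPI names and targets below are hypothetical, and the sketch only handles "higher is better" metrics.

```python
from dataclasses import dataclass


@dataclass
class KpiRule:
    metric: str
    minimum: float


def review_model(metrics: dict, rules: list[KpiRule]) -> list[str]:
    """Return human-readable alerts for every KPI the model currently misses."""
    alerts = []
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is None:
            alerts.append(f"{rule.metric}: not reported")
        elif value < rule.minimum:
            alerts.append(f"{rule.metric}: {value:.2f} below target {rule.minimum:.2f}")
    return alerts
```

Metrics where lower is better, such as latency, would need a maximum bound instead. Treating a missing metric as an alert is a deliberate choice, so silent models do not go unnoticed.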

AI store

The AI store offers a comprehensive view of all AI artifacts, including success stories, capabilities, and innovations. It promotes quality content through social feedback, quality metrics, and ratings monitoring. Telemetry is used to track user behavior, while integration with development and ML operations tooling pulls in updates automatically from various AI tools and services. Designed primarily to encourage AI adoption, this layer is used by all stakeholders: end users, testers, data scientists, operations teams, infrastructure teams, and IT managers.

The strategy to adopt while building an enterprise AI solution

Developing a successful strategy for an enterprise AI solution involves a number of important steps:

Aligning AI with your business strategy

AI, though a powerful tool, is not an end in itself. The application of AI should be strategically integrated with your overall business goals. As a starting point, organizations should:

- Evaluate their existing business strategy for relevance.

- Synchronize their business strategy with AI possibilities.

- Identify processes where AI can create value and where it’s more beneficial to employ alternative technologies.

- Ensure the processes identified for AI integration are primed for it.

- Avoid unnecessary expenses on AI projects initiated without a clear strategic direction. Achieving success in AI projects demands precise upfront cost estimation, high-quality data, and a robust strategic plan.

Develop a data strategy

Quality data is the lifeblood of AI systems. Therefore, a robust data strategy is a prerequisite for scaling AI in your organization. This involves:

- Managing all aspects of the data lifecycle, from collection and storage to integration and cleaning.

- Providing high-quality, accurately labeled data to your AI systems.

- Automating data pipelines for scalability, since manual data management is only feasible for smaller AI models.

Organizations without extensive data can still leverage AI by improving the quality of their existing datasets. Prioritizing quality over quantity can still produce high-performing AI models.
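A practical first step toward higher-quality data is to measure common problems before data reaches a model. This sketch counts missing required fields, invalid labels, and exact duplicate records. The field names and label set are hypothetical, and it assumes each record is a flat dict of hashable values.

```python
def data_quality_report(rows: list[dict], required: list[str],
                        label_key: str, allowed_labels: set) -> dict:
    """Count common data quality problems in a list of flat records."""
    missing = sum(1 for r in rows if any(r.get(k) in (None, "") for k in required))
    invalid_labels = sum(1 for r in rows if r.get(label_key) not in allowed_labels)

    seen, duplicates = set(), 0
    for r in rows:
        key = tuple(sorted(r.items()))  # order-independent fingerprint of the record
        if key in seen:
            duplicates += 1
        seen.add(key)

    return {"rows": len(rows), "missing_required": missing,
            "invalid_labels": invalid_labels, "duplicates": duplicates}
```

Running a report like this on every new batch makes quality trends visible and gives an objective gate before retraining.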

Build a robust technology infrastructure

Given that AI systems are resource-intensive, having the right technology infrastructure to develop and deploy AI models is crucial. The computational power to train prominent AI models has been on an exponential growth path, and organizations must be equipped to meet these demands.

Set up a cross-functional center of excellence

A successful enterprise AI strategy should involve a dedicated business unit that oversees and coordinates all AI initiatives in the organization. This unit, known as an AI Center of Excellence (AI CoE), identifies AI use cases and plans their execution. To bridge the gap between decision-making and AI implementation, an AI CoE should bring together a diverse team of AI professionals, IT experts, business executives, and domain specialists.

Adopt responsible AI development

As AI systems gain more influence, the ethical aspects of AI come to the forefront. Unfair and biased decisions by AI can impact public trust and expose your organization to legal risks. It’s imperative that business executives and AI practitioners familiarize themselves with the principles of responsible AI development, such as fairness, transparency, privacy, and security.
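Fairness can be checked quantitatively. One common metric is the demographic parity difference: the gap between the highest and lowest rates of positive decisions across groups. The sketch below computes it for hypothetical loan decisions. It is only one of several fairness metrics, and which one applies depends on the use case and applicable regulation.

```python
from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive decisions (e.g. loan approved) per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += approved  # True counts as 1
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions: list[tuple[str, bool]]) -> float:
    """0.0 means every group is approved at the same rate."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())
```

A large gap does not prove unfairness on its own, but it is a signal worth investigating before a model goes into production.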

Enhance employee engagement

A comprehensive AI strategy should focus not only on technology investment but also on human engagement. Implementing AI at scale requires employees to acquire new skills, which may cause apprehension among those who fear job displacement.

To address these challenges, organizations should:

- Offer re-skilling and upskilling opportunities.

- Restructure business processes, workflows, and policies.

- Enhance communication to ensure everyone understands the changes, reasons, and expectations.

Such measures can help businesses prepare for the changes that large-scale AI adoption will bring while fostering increased employee engagement.

Launch your project with LeewayHertz

Unleash the potential of AI for your enterprise with our expert enterprise AI development services

Core principles to follow while building an enterprise AI solution

Unify all data

A fundamental aspect of process re-engineering in a company involves integrating data from a variety of systems and sensors into a single, constantly updated data image. This necessitates the gathering and processing of massive datasets from countless disparate sources, including legacy IT systems, internet sources, and vast sensor networks.

For instance, a Fortune 500 manufacturer may need to consolidate 50 petabytes of data scattered across 5,000 systems. These systems could represent a wide array of areas, such as customer relations, dealer interactions, ordering systems, pricing, product design, engineering, manufacturing, human resources, logistics, and supplier systems. Complicating factors could include mergers and acquisitions, differing product lines, varying geographies, and diverse customer engagement channels like online platforms, physical stores, call centers, and field representatives.

The key to facilitating this broad data integration is a scalable enterprise message bus and data integration service. This service should offer adaptable, industry-specific data exchange models, for example, HL7 for healthcare, eTOM for telecommunications, CIM for power utilities, PRODML and WITSML for oil and gas, and SWIFT for banking. Mapping data from each source system to a common data exchange model significantly reduces the number of system interfaces that must be developed and maintained. Instead of a point-to-point interface between every pair of systems that exchange data, each system needs only a single mapping to the common model.

As a result, implementing a comprehensive enterprise AI platform that integrates with 15 to 20 source systems can be achieved in three to six months, rather than years.
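The interface savings from a common data model can be sketched in a few lines. Each source system gets one mapper into a shared record type, and consumers only ever see that type. The `Customer` fields and both source schemas are hypothetical and are not taken from HL7, eTOM, or any other industry standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    """Hypothetical canonical record shared by every consuming system."""
    customer_id: str
    name: str
    country: str


# One mapper per source system into the common model (source schemas are hypothetical).
def from_crm(rec: dict) -> Customer:
    return Customer(str(rec["CustID"]), rec["FullName"], rec["Country"].upper())


def from_erp(rec: dict) -> Customer:
    return Customer(rec["cust_no"].lstrip("0"), f'{rec["first"]} {rec["last"]}', rec["ctry"].upper())


MAPPERS = {"crm": from_crm, "erp": from_erp}


def to_canonical(source: str, rec: dict) -> Customer:
    return MAPPERS[source](rec)


def interfaces_needed(sources: int, consumers: int) -> dict:
    """Point-to-point grows as sources x consumers; a common model as sources + consumers."""
    return {"point_to_point": sources * consumers, "common_model": sources + consumers}
```

With 20 source systems and 10 consuming applications, for instance, this is 200 point-to-point interfaces versus 30 mappings.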

Multi-cloud deployment

The principle of enabling multi-cloud deployments is central to the architecture of an enterprise AI solution. It underscores the need for flexibility and scalability when handling large datasets across private, public, or hybrid cloud environments. By using container and orchestration technologies, such as Mesosphere, the AI platform gains the flexibility to take advantage of the most suitable services from different cloud providers, such as Amazon Kinesis or Azure Stream Analytics.

Multi-cloud operation is essential: the platform should be able to run across various cloud services, access data in private clouds, and use specialized services such as Google Translate. The platform should also comply with data sovereignty regulations by supporting deployment within specific geographic regions.
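Data sovereignty constraints can be enforced when choosing where a workload runs. This sketch filters candidate (provider, region) pairs by the jurisdiction a dataset must stay in. The region catalog and jurisdiction mapping are hypothetical, illustrative tables, not an authoritative list of any provider's regions.

```python
# Hypothetical catalog of regions each provider offers for this workload.
PROVIDER_REGIONS = {
    "aws": ["us-east-1", "eu-central-1", "ap-southeast-2"],
    "azure": ["eastus", "germanywestcentral"],
    "private": ["onprem-frankfurt"],
}

# Hypothetical mapping from region code to the jurisdiction it sits in.
REGION_JURISDICTION = {
    "us-east-1": "US", "eu-central-1": "EU", "ap-southeast-2": "AU",
    "eastus": "US", "germanywestcentral": "EU", "onprem-frankfurt": "EU",
}


def allowed_targets(dataset_jurisdiction: str) -> list[tuple[str, str]]:
    """List (provider, region) pairs where data from this jurisdiction may be processed."""
    return [
        (provider, region)
        for provider, regions in PROVIDER_REGIONS.items()
        for region in regions
        if REGION_JURISDICTION[region] == dataset_jurisdiction
    ]
```

An empty result means no compliant target exists, which a deployment pipeline should treat as a hard failure rather than silently falling back to another region.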

When considering installation, your AI platform should fit into a customer’s virtual private cloud account (like Azure or AWS) and even accommodate specialized clouds with industry or government-specific security certifications, such as AWS GovCloud or C2S.

As for data storage, a variety of data stores are necessary, including relational databases for transactional support, key-value stores for low-latency data, and distributed file systems for unstructured data. If a data lake is already in place, the platform should be capable of accessing data directly from that source. This principle emphasizes versatility and adaptability when it comes to cloud-based data handling and storage in enterprise AI solutions.

Provide edge deployment options

This principle calls for an AI platform that can function where low-latency computing is required or network bandwidth is limited. That means enabling local processing: running AI analytics, predictions, and inferences directly on remote gateways and edge devices. In essence, your AI solution should operate efficiently regardless of network conditions.
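The pattern behind edge deployment can be sketched as a gateway that makes decisions locally and queues results until a connection is available. The `EdgeGateway` class, the `online` flag, and the `upload` callback are hypothetical. Real gateways would persist the queue to disk and detect connectivity automatically.

```python
from collections import deque


class EdgeGateway:
    """Runs inference on the device and buffers results until the uplink is available."""

    def __init__(self, model, max_buffer: int = 1000):
        self.model = model                      # any callable: reading -> prediction
        self.outbox = deque(maxlen=max_buffer)  # oldest results are dropped if full
        self.online = False

    def handle(self, reading: float):
        prediction = self.model(reading)        # no network round trip needed
        self.outbox.append((reading, prediction))
        return prediction

    def sync(self, upload) -> int:
        """Send buffered results with `upload` if connected; return how many were sent."""
        if not self.online:
            return 0
        sent = 0
        while self.outbox:
            upload(self.outbox[0])              # if upload raises, the item stays queued
            self.outbox.popleft()
            sent += 1
        return sent
```

The local decision (for example, shutting down an overheating pump) happens immediately, while central analytics catch up whenever bandwidth allows.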

Multi-format data in-place

As part of building an effective enterprise AI solution, it’s essential to be able to access multi-format data in place. AI applications should have services that can process data in a variety of ways, from batches and micro-batches to real-time streams and in-memory processing across a server cluster. This is critical for data scientists who need to test analytics features and algorithms against large-scale datasets. Moreover, the AI platform should maintain high security standards by encrypting data both in transit and at rest.
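Batch, micro-batch, and record-at-a-time processing can share the same analytic code if data is fed through a batching layer. The sketch below groups any iterable into fixed-size batches. The averaging `process` function is a hypothetical placeholder analytic. On Python 3.12+, `itertools.batched` offers similar behavior, yielding tuples.

```python
from itertools import islice
from typing import Iterable, Iterator


def micro_batches(stream: Iterable, size: int) -> Iterator[list]:
    """Group a (possibly unbounded) stream into batches; the last batch may be smaller."""
    it = iter(stream)
    while batch := list(islice(it, size)):
        yield batch


def process(batch: list[float]) -> float:
    # Placeholder analytic: average of the batch.
    return sum(batch) / len(batch)
```

Setting `size` to the full dataset gives classic batch processing, a small `size` gives micro-batches, and `size=1` approximates per-event streaming, all without changing `process`.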

The AI platform architecture should also permit the integration of various services without changing the application code. It should support data virtualization, allowing developers to work with data without needing an in-depth understanding of the underlying data stores. A range of database technologies should be accommodated, including relational data stores, distributed file systems, key-value stores, and graph stores, along with legacy applications and systems like SAP, OSIsoft PI, and SCADA systems.

Implementing enterprise object model

In building an enterprise AI solution, one of the core principles is to establish a “Consistent Object Model Across the Enterprise”. This means that the AI platform should have an active object model that represents entities (like assets, products, and customers) and their relationships, irrespective of the underlying data formats and storage methods. Unlike the passive models in typical tools, this active model is interpreted by the AI platform during runtime, offering significant flexibility to dynamically handle changes. The platform should be capable of accommodating alterations to the object model which are versioned and become immediately active, all without the need to re-write the application code.
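An "active" object model can be sketched by storing entity definitions as versioned data that the platform reads at runtime, rather than as hard-coded classes. The `ObjectModel` class and the `Asset` fields are hypothetical. Relationships between entities, which a full platform would also model, are omitted for brevity.

```python
class ObjectModel:
    """Entity definitions stored as versioned data and interpreted at runtime."""

    def __init__(self):
        self._versions: dict[str, list[dict]] = {}

    def define(self, entity: str, fields: dict[str, type]) -> int:
        history = self._versions.setdefault(entity, [])
        history.append(dict(fields))
        return len(history)  # new version number; active immediately

    def validate(self, entity: str, record: dict) -> list[str]:
        fields = self._versions[entity][-1]  # always interpret the latest version
        errors = [f"missing {name}" for name in fields if name not in record]
        errors += [
            f"{name} should be {t.__name__}"
            for name, t in fields.items()
            if name in record and not isinstance(record[name], t)
        ]
        return errors
```

Adding a field with `define()` changes how records are validated on the very next call, with no application code rewritten. Older versions stay in the history for auditing.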

Facilitate AI microservices throughout the enterprise

As a fundamental aspect of constructing an enterprise AI solution, the platform should offer a well-structured catalog of AI-based microservices. These services, accessible throughout the enterprise and governed by security protocols, enable developers to build applications quickly and effectively from high-quality, reusable components.

Ensure data governance and security at the enterprise level

In building an enterprise AI solution, it’s vital to have robust data governance and security. Your platform should ensure strong encryption and multi-level user access controls, authorizing all interactions with data objects, services, and ML algorithms. Access rights should be dynamically adjustable and based on user-specific permissions. Moreover, your platform should be compatible with external security services, like centralized consent management services, especially in sectors like healthcare and finance.
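Multi-level, dynamically adjustable access control can be sketched as a deny-by-default policy that maps roles to allowed actions on resources. The roles, resources, and actions are hypothetical. Enterprise platforms typically delegate this to a dedicated identity and policy service.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    # role -> {resource -> set of allowed actions}
    grants: dict = field(default_factory=dict)

    def grant(self, role: str, resource: str, action: str) -> None:
        self.grants.setdefault(role, {}).setdefault(resource, set()).add(action)

    def revoke(self, role: str, resource: str, action: str) -> None:
        self.grants.get(role, {}).get(resource, set()).discard(action)

    def is_allowed(self, roles: set, resource: str, action: str) -> bool:
        """Deny by default; allow if any of the user's roles grants the action."""
        return any(action in self.grants.get(r, {}).get(resource, set()) for r in roles)
```

Because permissions are data rather than code, they can be granted or revoked at runtime, and every call to a data object, service, or model can be checked with `is_allowed`.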

Facilitate comprehensive AI model development cycle

To establish a successful AI solution, it is essential to use a platform that supports the full model development life cycle. This enables data scientists to rapidly design, test, and launch machine learning and deep learning algorithms. They should be free to use any programming language they are proficient in, such as Python, R, Scala, or Java. This accelerates the creation of these algorithms and ensures they’re built using the most recent datasets, improving their accuracy.

This platform should eliminate the need for any programming language conversion when deploying these algorithms, reducing time, effort, and potential errors. Furthermore, machine learning algorithms should offer APIs to automate predictions and retraining as required, ensuring that they operate optimally based on incoming data. Predictions generated by AI should be able to trigger events and notifications or contribute to other routines, including simulations involving constraint programming.
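The idea that predictions can trigger events, and that observed errors can trigger retraining, can be sketched with a small wrapper around a model. The `MonitoredModel` class, its thresholds, and the error window are hypothetical choices. The `predict` and `retrain` callables stand in for whatever the platform actually provides.

```python
from collections import deque
from typing import Callable


class MonitoredModel:
    """Wraps a model so predictions can fire events and rising error can trigger retraining."""

    def __init__(self, predict: Callable[[dict], float], retrain: Callable[[], None],
                 alert_above: float, max_error: float, window: int = 50):
        self.predict_fn, self.retrain_fn = predict, retrain
        self.alert_above, self.max_error = alert_above, max_error
        self.errors = deque(maxlen=window)
        self.listeners: list[Callable[[dict, float], None]] = []

    def predict(self, features: dict) -> float:
        score = self.predict_fn(features)
        if score > self.alert_above:
            for notify in self.listeners:  # e.g. open a ticket, send a notification
                notify(features, score)
        return score

    def record_outcome(self, predicted: float, actual: float) -> bool:
        """Log a real outcome; retrain when the rolling mean error exceeds the limit."""
        self.errors.append(abs(predicted - actual))
        full = len(self.errors) == self.errors.maxlen
        if full and sum(self.errors) / len(self.errors) > self.max_error:
            self.retrain_fn()
            self.errors.clear()
            return True
        return False
```

Waiting for a full window before deciding avoids retraining on a single unlucky prediction.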

Inclusive of external IDEs, tools, and frameworks

To promote productivity and foster innovation, an enterprise AI solution should be compatible with a variety of third-party technologies and tools. It should offer flexibility to accommodate different programming languages, machine learning libraries, and data visualization tools. The solution should also support standardized interfaces and provide seamless integration of new software, all while ensuring the organization’s existing functions are not disturbed. This open approach enhances collaboration and keeps pace with evolving technology trends.

Facilitate team-based AI application creation

In the realm of AI, data scientists often work solo, spending a lot of time on tasks like data cleaning and normalization. The machine learning algorithms they create may not meet IT standards and may need to be reprogrammed. These algorithms may also be suboptimal because they were not tested on enough data.

An ideal AI platform should solve these issues. It should let data scientists create and fine-tune algorithms using their preferred programming language, and let them test these algorithms on sufficient amounts of real production data. The platform should also provide tools and services to assist with data cleaning, normalization, object modeling, and representation, helping to speed up the development process. It should also allow the immediate deployment of machine learning algorithms in production through a standard RESTful API.
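Deploying a model behind a standard RESTful API can be sketched with Python's built-in WSGI interface. The `predict` function is a hypothetical stand-in for a trained model, and the `/predict` route and JSON shape are assumptions. A production service would add input validation, authentication, and a proper WSGI server.

```python
import json


def predict(features: dict) -> float:
    # Hypothetical stand-in for a trained model.
    return 0.3 * features.get("tenure_years", 0) + 0.1


def app(environ, start_response):
    """Minimal WSGI app exposing POST /predict with a JSON body."""
    if environ["PATH_INFO"] != "/predict" or environ["REQUEST_METHOD"] != "POST":
        start_response("404 Not Found", [("Content-Type", "application/json")])
        return [b'{"error": "not found"}']
    length = int(environ.get("CONTENT_LENGTH") or 0)
    features = json.loads(environ["wsgi.input"].read(length) or b"{}")
    body = json.dumps({"prediction": predict(features)}).encode()
    start_response("200 OK", [("Content-Type", "application/json"),
                              ("Content-Length", str(len(body)))])
    return [body]
```

For local testing, `wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever()` serves it. Any application can then `POST {"tenure_years": 2}` to `/predict`, regardless of the language the model was written in.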

The platform should provide easy access to data objects, methods, services, and machine-learning algorithms through standard programming languages and development tools. It should also provide visual tools for rapid application configuration, APIs for metadata synchronization with external repositories, and version control through synchronization with common source code repositories like git. This encourages collaborative development and helps to make the work of data scientists more effective and efficient.

Why build an enterprise AI solution?

As technology continues to evolve rapidly, it’s crucial for businesses to use advanced tools to remain competitive and foster growth. Developing an enterprise AI solution provides a strategic edge, helping companies to automate operations, boost efficiency, improve security, and heighten productivity. Integrating AI across all departments can help businesses extract actionable insights from their data, simplify workflows, and deliver exceptional service, paving the way for future success.

Enhanced automation

Companies can introduce specialized machine learning algorithms and other AI-based applications to increase efficiency and streamline operations across a range of departments, including sales, finance, marketing, HR, customer service, and production. In sales, machine learning can help businesses identify potential clients more effectively; in production, AI can automate large parts of the production chain; and in HR, it can support recruitment. AI tools, recognized for their precise calculations and fast data processing, can help businesses operate more quickly, facilitating significant growth.

Components may include:

- AI chatbots and applications

- Machine learning algorithms

- Neural networks and deep learning

- Visual data processing

- Cloud-based AI systems

Increased operational efficiency

Businesses can use a variety of AI tools to quickly draw valuable insights from their extensive datasets. By building predictive machine learning models, companies can forecast growth following an investment and understand customer behavior more effectively. AI technologies can swiftly analyze vast amounts of data, providing information that supports robust decision-making.

Key elements may include:

- Predictive machine learning algorithms

- Data generation and enhancement

- Sentiment analysis

- Data analysis management

- Raw data handling

Improved security

AI can strengthen security by detecting fraud and other anomalous activity, verifying identities through facial and voice recognition, and searching video footage for security-relevant events.

Essential features might include:

- Custom machine learning algorithms

- Voice recognition technology

- Video search capabilities

- Neural networks

- Facial recognition technology

Increased productivity

By implementing AI solutions, businesses can adopt a “human-augmented approach”, in which employees collaborate with AI software. For example, AI-based speech-to-text used during client meetings can help automate contract drafting. Businesses can create their own proactive AI assistants to support employees day to day. AI-enabled solutions can also help remove workflow bottlenecks and pinpoint tasks that can be improved and automated with AI tools.

Components may include:

- Language comprehension capabilities

- Enterprise-focused AI applications

- Voice processing technology

- AI-powered text-to-speech

- AI-enabled speech-to-text

How to build an enterprise AI solution?

Step 1: Defining the business problem

The first step in building an enterprise AI solution is to identify the business problem that the AI solution will solve.

- Identifying the business problem to be solved with AI: AI can help address challenges across customer experience, operations, costs, and revenue. The goal of this step is to identify the business problem that the AI solution will solve and align it with the organization’s goals and objectives.

- Aligning the AI solution with your business goals and objectives: This step requires a sound understanding of what the organization wants to achieve and how AI can support these goals. Aligning the AI solution with the organization’s goals and objectives can ensure the solution is relevant, impact-driven, and in sync with the overall business strategy.

- Defining the problem statement: This overlaps closely with the first step. A clear problem statement helps organizations understand the business challenges they face, categorize them, and determine how AI can help solve them.

Step 2: Assessing the data

Gathering and assessing the data is a critical step in building an effective enterprise AI solution. The quality, quantity, relevance, structure, and the process of cleaning and preprocessing the data are key considerations.

- Quality and quantity of data available: The data should be varied, relevant to the business problem being solved, and free of errors or discrepancies. If the data is not of the desired quality or quantity, the AI solution may not provide accurate results.

- Relevance and structure of the data: The data must be relevant to the business problem being solved and structured appropriately for the chosen AI algorithms. Unstructured data may need preprocessing to convert it into a form that can be used to train AI models.

- Cleaning and preprocessing the data: This stage involves removing inconsistencies, handling missing values, and transforming the data into a format that can be used to train the AI models. Scaling or normalizing the data, encoding categorical variables, and splitting the data into training and testing sets may be undertaken.
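The preprocessing steps listed above (handling missing values, scaling, encoding categorical variables, and splitting into training and testing sets) can be sketched in plain Python. The function names and the 25% test ratio are illustrative. Libraries such as scikit-learn provide hardened versions of each step.

```python
import random


def impute_mean(values):
    """Replace missing values (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = sum(observed) / len(observed)
    return [fill if v is None else v for v in values]


def min_max_scale(values):
    """Rescale numbers to the 0..1 range."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) if hi > lo else 0.0 for v in values]


def one_hot(values):
    """Encode a categorical column as 0/1 indicator columns."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values], categories


def train_test_split(rows, test_ratio=0.25, seed=42):
    """Shuffle reproducibly, then hold out the last `test_ratio` share for testing."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    cut = int(len(rows) * (1 - test_ratio))
    return rows[:cut], rows[cut:]
```

In practice, the imputation mean and scaling bounds should be computed on the training split only and then reused on the test split, so that no information from the test data leaks into training.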

Step 3: Choosing the right AI technologies

A wide range of AI algorithms and technologies is available, and selecting the appropriate ones for a particular business problem is essential.

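- Types of AI algorithms and technologies: Common types include supervised learning, unsupervised learning, reinforcement learning, and deep learning. Each suits different kinds of problems. Supervised learning predicts outcomes from labeled examples. Unsupervised learning finds structure, such as clusters or anomalies, in unlabeled data. Reinforcement learning learns sequences of decisions from feedback. Deep learning handles complex data such as images, audio, and text.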

- Selecting the appropriate technologies for the business problem: Selecting the most appropriate technology requires a thorough understanding of the problem and the data available to solve it. The selection process must consider factors like the size and complexity of the data, the type of problem being solved, and the desired outcome. A careful evaluation of the strengths and weaknesses of different AI algorithms and technologies is necessary to make an informed decision.

Step 4: Building the data pipeline

The data pipeline, the series of processes that moves data from its sources to the AI models, plays a crucial role in the success of the AI solution.

- Designing and implementing a data pipeline: The design and implementation of the data pipeline involve a series of decisions around data sources, storage options, and the processing steps required. Scalability, security, and efficiency are key when it comes to designing the pipeline. The pipeline must meet the requirements of the AI models and the business problem being solved.

- Ingesting, processing, and storing the data: The data ingestion process involves extracting data from databases or other data sources, while the data processing steps may involve cleaning, transforming, and normalizing the data. Finally, the data storage process ensures that the data is protected and easily accessible for training the AI models.
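The ingest, process, and store stages can be sketched as a small extract-transform-load pipeline using only the standard library. The CSV data, column names, and cleaning rules are hypothetical. An in-memory SQLite database stands in for whatever storage layer the pipeline actually targets.

```python
import csv
import io
import sqlite3

# Hypothetical raw export with messy values.
RAW = """order_id,amount,region
1001, 250.00 ,north
1002,,south
1003,99.5,NORTH
"""


def extract(text: str) -> list[dict]:
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Drop rows without an amount, trim whitespace, and normalize region names."""
    clean = []
    for r in rows:
        amount = r["amount"].strip()
        if not amount:
            continue
        clean.append((int(r["order_id"]), float(amount), r["region"].strip().lower()))
    return clean


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    conn.execute("CREATE TABLE IF NOT EXISTS orders "
                 "(order_id INTEGER PRIMARY KEY, amount REAL, region TEXT)")
    # INSERT OR REPLACE keeps re-runs idempotent: the same order is not stored twice.
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW)), conn)
```

Keeping each stage a separate function makes it easy to swap the source or the store, and to test the cleaning rules in isolation.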

Step 5: Training the AI models

The goal of this step is to create and train the AI models that can accurately solve business problems and provide valuable insights.

- Training the models using the data pipeline and selected algorithms: Data from the pipeline is used to train the models with the selected algorithms, which then generate predictions. Training is iterative and involves adjusting the models’ parameters to optimize their performance.

- Evaluating the performance of the models: The evaluation of the models includes comparing the predictions generated by the models to the actual outcomes and determining the accuracy and reliability of the models. This information is used for further development of the models.

- Making improvements and refinements as needed: Depending on how the models perform, improvements may be needed to raise their accuracy or reliability. This can mean adjusting the models' parameters, collecting more data, or selecting different algorithms.
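The train, evaluate, and refine loop described above can be illustrated with a deliberately small model. The sketch below assumes a one-feature linear model fitted by gradient descent on synthetic data; the data generator, the learning rates tried, and the 160/40 train/validation split are illustrative choices, not recommendations. Real enterprise models would typically use a framework such as scikit-learn or PyTorch, but the loop has the same shape.

```python
import random

def make_data(n, seed=0):
    """Hypothetical synthetic data: y = 2x + 1 plus Gaussian noise, x in [0, 1]."""
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n)]
    return [(x, 2 * x + 1 + rng.gauss(0, 0.5)) for x in xs]

def train(data, lr, epochs=500):
    """Train: fit y = w*x + b with batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mean_abs_error(model, data):
    """Evaluate: average absolute gap between predictions and actual outcomes."""
    w, b = model
    return sum(abs(w * x + b - y) for x, y in data) / len(data)

data = make_data(200)
train_set, val_set = data[:160], data[160:]  # hold out data the model never trains on

# Refine: retrain with different learning rates and keep the best validation error.
results = {}
for lr in (0.01, 0.1, 0.5):
    model = train(train_set, lr)
    results[lr] = (mean_abs_error(model, val_set), model)

best_lr = min(results, key=lambda k: results[k][0])
best_err, (w, b) = results[best_lr]
print(f"best learning rate={best_lr}, validation MAE={best_err:.3f}, w={w:.2f}, b={b:.2f}")
```

Evaluating on held-out data rather than on the training set is what makes the comparison between refinements meaningful: a model can fit its training data well and still generalize poorly.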

Step 6: Deploying the AI solution

Some consider this the final step in developing an enterprise AI solution. The goal is to integrate the AI solution into the organization's existing systems and processes so that it operates smoothly and delivers value to the business.

- Integrating the AI solution with existing enterprise systems and processes: This involves connecting the AI solution to databases, APIs, or other enterprise systems so they can exchange data and information. Integration is key because it lets the organization's existing systems and processes align with the AI solution.

- Ensuring scalability, security, and reliability: Scalability refers to the ability of the AI solution to handle large amounts of data and processing demands. Security refers to the protocols in place to protect sensitive data. Reliability refers to the ability of the AI solution to perform consistently and accurately and to provide valuable insights.
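One common way to integrate a model with existing systems is to expose it behind an HTTP API that other applications call. The sketch below uses only Python's standard library; the `/predict` route, the JSON request shape, the hard-coded `MODEL` coefficients, and the `MAX_BATCH` limit are assumptions for illustration. Input validation and a batch-size cap are small examples of the reliability and scalability concerns listed above; a real deployment would also need authentication, TLS, and a production-grade server.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical coefficients produced by the training step.
MODEL = {"w": 2.0, "b": 1.0}
MAX_BATCH = 1000  # assumed cap on inputs per request to protect the service

def predict(x: float) -> float:
    return MODEL["w"] * x + MODEL["b"]

def handle_request(body: str) -> tuple[int, str]:
    """Validate a JSON body like {"inputs": [1.0, 2.5]} and return (HTTP status, JSON response)."""
    try:
        inputs = json.loads(body)["inputs"]
        if not isinstance(inputs, list) or len(inputs) > MAX_BATCH:
            raise ValueError(f"'inputs' must be a list of at most {MAX_BATCH} numbers")
        values = [float(v) for v in inputs]
    except (KeyError, TypeError, ValueError) as exc:  # JSONDecodeError is a ValueError
        return 400, json.dumps({"error": str(exc)})
    return 200, json.dumps({"predictions": [predict(v) for v in values]})

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_request(self.rfile.read(length).decode("utf-8"))
        data = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

def serve(port: int = 8000) -> None:
    """Start a blocking local server; call this explicitly to run the service."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

For example, `handle_request('{"inputs": [0, 1.5]}')` returns status 200 with predictions `[1.0, 4.0]`, while a malformed body returns status 400 instead of crashing the service. Keeping the request logic in a plain function also makes it testable without starting a server.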

Step 7: Monitoring and evaluating

Monitoring and evaluation is an ongoing part of implementing an enterprise AI solution. It involves continuously monitoring the AI solution's performance, evaluating its impact on the business, and making improvements and refinements as needed.

- Performance monitoring of the AI solution: To confirm that the AI solution performs efficiently and effectively, track key metrics such as accuracy, speed, and reliability. Performance monitoring can reveal potential issues, such as data quality problems or algorithmic inefficiencies, so that they can be addressed.

- Evaluating the impact on the business: This is essentially the process of determining the value that the AI solution is providing to the organization. The process may involve measuring the impact on business outcomes, such as increased efficiency, reduced costs, or improved customer satisfaction.

- Making improvements and refinements as needed: Based on the results of performance monitoring and impact evaluation, the AI solution may need improvements and refinements to ensure that it continues to provide value to the business. These refinements may include changes to the data pipeline, updated algorithms, or better integration with existing enterprise systems and processes.
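Tracking key metrics such as accuracy and speed usually comes down to keeping rolling statistics and raising an alert when they cross a threshold. The sketch below is one minimal way to do that; the window size, the thresholds, and the use of a 95th-percentile latency are assumptions, and it assumes the actual outcome for each prediction eventually becomes known (in many systems ground truth arrives with a delay). Business impact metrics such as cost savings would be tracked separately.

```python
import math
from collections import deque
from dataclasses import dataclass

@dataclass
class Alert:
    metric: str
    value: float
    threshold: float

class ModelMonitor:
    """Keeps rolling accuracy and latency over the most recent `window` predictions."""

    def __init__(self, window: int = 100, min_accuracy: float = 0.9,
                 max_p95_latency_ms: float = 200.0):
        self.outcomes = deque(maxlen=window)   # True when the prediction matched the actual outcome
        self.latencies = deque(maxlen=window)  # response time in milliseconds
        self.min_accuracy = min_accuracy
        self.max_p95_latency_ms = max_p95_latency_ms

    def record(self, prediction, actual, latency_ms: float) -> None:
        self.outcomes.append(prediction == actual)
        self.latencies.append(latency_ms)

    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes)

    def p95_latency(self) -> float:
        # Nearest-rank 95th percentile of the recorded latencies.
        ordered = sorted(self.latencies)
        return ordered[max(0, math.ceil(0.95 * len(ordered)) - 1)]

    def check(self) -> list[Alert]:
        """Return an alert for every metric outside its threshold."""
        if not self.outcomes:
            return []
        alerts = []
        acc = self.accuracy()
        if acc < self.min_accuracy:
            alerts.append(Alert("accuracy", acc, self.min_accuracy))
        p95 = self.p95_latency()
        if p95 > self.max_p95_latency_ms:
            alerts.append(Alert("p95_latency_ms", p95, self.max_p95_latency_ms))
        return alerts
```

A bounded `deque` means old observations drop out automatically, so the metrics reflect recent behavior; a sustained drop in rolling accuracy is often the first visible sign of data drift.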

Step 8: Plan for continuous improvement

The goal of this plan is to ensure that the AI solution remains dynamic and continues to evolve over time to meet the changing needs of businesses.

- Staying current and relevant with new technologies and use cases: Organizations should stay abreast of new technologies and use cases emerging in the field of AI, for example by attending conferences and workshops, conducting research, or engaging with experts in the field.

- Making continuous improvements to the AI solution: This involves updating and refining the data pipeline, the algorithms, and the integration with existing enterprise systems and processes, so that the AI solution keeps improving over time and meets the changing needs of the business.
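A common way to make continuous improvements safely is a champion/challenger check: a retrained model (the challenger) replaces the current one (the champion) only if it performs measurably better on the same held-out data. The sketch below assumes mean absolute error as the metric and a 1% relative improvement threshold; both, and the `Candidate` wrapper, are illustrative choices rather than a standard API.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

@dataclass
class Candidate:
    name: str
    predict: Callable[[float], float]

def mean_abs_error(model: Candidate, holdout: Sequence[Tuple[float, float]]) -> float:
    return sum(abs(model.predict(x) - y) for x, y in holdout) / len(holdout)

def choose_model(champion: Candidate, challenger: Candidate,
                 holdout: Sequence[Tuple[float, float]], min_gain: float = 0.01) -> Candidate:
    """Promote the challenger only if its error is at least `min_gain` (relative) below the champion's."""
    champion_err = mean_abs_error(champion, holdout)
    challenger_err = mean_abs_error(challenger, holdout)
    if challenger_err < champion_err * (1 - min_gain):
        return challenger
    return champion

# Hypothetical example: v2 was retrained on newer data.
holdout = [(x, 2 * x + 1) for x in range(5)]
v1 = Candidate("v1", lambda x: 2 * x)
v2 = Candidate("v2", lambda x: 2 * x + 1)
print(choose_model(v1, v2, holdout).name)
```

Requiring a minimum gain keeps the deployed model from flipping back and forth between versions whose differences are within noise.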

How to implement an enterprise AI solution?

Implementing an enterprise AI solution effectively is vital to ensure that the resources invested do not go to waste. Here are six steps to help you integrate AI solutions into your organization:

Clearly define your objectives

The first step is to identify the problems within your business that can be solved using AI solutions. This will help you set the goals for your AI project and ensure they align with your overall business objectives and current market trends. To identify the most suitable AI solution for your business, consider the following questions:

- What are the primary challenges your current business model is facing?

- How can an AI solution address these problems to benefit your business?

- Is an AI solution capable of resolving the existing business challenges you are facing?

- What outcomes do you expect from your AI solution?

- What are the major obstacles in achieving these outcomes?

- How will you measure the success of your AI solution in your business?

These questions will guide you in tailoring your AI solution to meet your business needs effectively.

Develop use cases

Creating use cases helps ensure that your AI solution is achievable and meets your business needs. Developing a robust AI use case involves:

- Defining clear project goals and feasible AI application ideas.

- Identifying Key Performance Indicators (KPIs) to assess your project’s success.

- Assigning a case owner to manage development, testing, and validation.

- Identifying the critical data to achieve the objective.

- Evaluating the legal and ethical implications of an AI solution.

- Assessing current capabilities and technology to support your solution development.

- Planning for potential issues or roadblocks.
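The use-case checklist above can be captured as a small, reviewable record so that goals, owners, data needs, risks, and KPI targets are explicit before development starts. The sketch below is hypothetical: the invoice-processing example, the KPI names, and their targets are invented for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class KPI:
    name: str
    target: float
    higher_is_better: bool = True

    def met(self, observed: float) -> bool:
        return observed >= self.target if self.higher_is_better else observed <= self.target

@dataclass
class UseCase:
    goal: str
    owner: str
    required_data: list[str]
    kpis: list[KPI] = field(default_factory=list)
    risks: list[str] = field(default_factory=list)  # legal, ethical, and delivery roadblocks

    def evaluate(self, observed: dict[str, float]) -> dict[str, bool]:
        """Compare observed KPI values against targets; KPIs without a measurement are skipped."""
        return {k.name: k.met(observed[k.name]) for k in self.kpis if k.name in observed}

# Hypothetical example use case.
invoice_triage = UseCase(
    goal="Automatically extract fields from supplier invoices",
    owner="finance operations lead",
    required_data=["scanned invoices", "historical ledger entries"],
    kpis=[
        KPI("extraction_accuracy", 0.95),
        KPI("avg_handling_minutes", 5.0, higher_is_better=False),
    ],
    risks=["personal data in invoices", "vendor format changes"],
)

print(invoice_triage.evaluate({"extraction_accuracy": 0.93, "avg_handling_minutes": 4.2}))
```

Recording whether a lower or higher value is better alongside each target avoids ambiguity when KPIs such as handling time and accuracy move in opposite directions.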

According to IBM, as of 2022, about 54% of companies already using AI reported cost savings and improved efficiencies.

Assess your internal capabilities

Before setting out to implement an AI solution, evaluate your organization’s capabilities to achieve your goals. Consider whether your business has the necessary in-house expertise or the budget to outsource a team. For smaller businesses, integrating existing SaaS solutions may be more viable, while larger businesses may opt to hire or outsource a dedicated team to an enterprise AI application development company.

Gather data

Data is crucial for training your AI model, because AI models learn patterns from existing datasets. The steps to data collection for your AI application include:

- Identifying critical data for your enterprise AI solution.

- Determining data sources.

- Inspecting, analyzing, and summarizing data.

- Sourcing and integrating data.

- Cleaning the data.

- Preparing data for your AI model.
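Several of these steps (inspecting and summarizing, integrating, cleaning, and preparing) can be sketched on a toy dataset. The records, column names, median imputation, and 80/20 split below are hypothetical choices for illustration. The split happens before missing values are filled so that statistics from the test set do not leak into training.

```python
import random
import statistics

# Hypothetical records merged from two sources; note the duplicate and the gaps.
records = [
    {"id": 1, "age": 34, "plan": "pro", "churned": 0},
    {"id": 2, "age": None, "plan": "basic", "churned": 1},
    {"id": 3, "age": 51, "plan": "pro", "churned": 0},
    {"id": 3, "age": 51, "plan": "pro", "churned": 0},
    {"id": 4, "age": 27, "plan": None, "churned": 1},
    {"id": 5, "age": 45, "plan": "basic", "churned": 0},
]

def profile(rows):
    """Inspect and summarize each column: missing count, plus min/max/mean for numeric columns."""
    summary = {}
    for col in rows[0]:
        present = [r[col] for r in rows if r[col] is not None]
        info = {"missing": len(rows) - len(present)}
        if present and all(isinstance(v, (int, float)) for v in present):
            info.update(min=min(present), max=max(present), mean=statistics.mean(present))
        summary[col] = info
    return summary

def prepare(rows, test_fraction=0.2, seed=42):
    """Deduplicate, split into train/test, then fill gaps using training-set statistics only."""
    unique = list({r["id"]: r for r in rows}.values())
    rng = random.Random(seed)
    rng.shuffle(unique)
    n_test = max(1, int(len(unique) * test_fraction))
    test, train = unique[:n_test], unique[n_test:]
    # Computing the median on training data only avoids leaking test information.
    median_age = statistics.median(r["age"] for r in train if r["age"] is not None)

    def fill(r):
        return {**r,
                "age": r["age"] if r["age"] is not None else median_age,
                "plan": r["plan"] if r["plan"] is not None else "unknown"}

    return [fill(r) for r in train], [fill(r) for r in test]

for col, info in profile(records).items():
    print(col, info)
train_rows, test_rows = prepare(records)
print(len(train_rows), "training rows,", len(test_rows), "test rows")
```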

Determine the AI learning model

In this step, you'll decide whether human involvement is needed at the start or throughout your AI model's learning process. Two learning approaches are commonly used:

- Supervised Learning: The model is trained on labeled examples, each input paired with the correct output, so that it learns to predict the output for new, unseen inputs.

- Unsupervised Learning: The model learns from unlabeled data by identifying patterns or groupings on its own, without being told which outputs are correct.
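The difference between the two approaches shows up clearly on a toy dataset. The sketch below, using invented 2-D points, fits a supervised nearest-centroid classifier that needs the labels, and then runs unsupervised k-means that finds the same two groups without ever seeing a label. The farthest-point initialization for k-means is an assumption chosen to keep the example deterministic; libraries commonly use k-means++ instead.

```python
import math

# Hypothetical 2-D points forming two groups.
points = [(1.0, 1.2), (0.8, 0.9), (1.1, 0.7), (5.0, 5.2), (5.3, 4.8), (4.9, 5.1)]
labels = ["low", "low", "low", "high", "high", "high"]  # used only by the supervised approach

def centroid(pts):
    return tuple(sum(coord) / len(pts) for coord in zip(*pts))

# Supervised: learn one centroid per labeled class, then predict the nearest class.
def fit_nearest_centroid(X, y):
    return {label: centroid([x for x, l in zip(X, y) if l == label]) for label in set(y)}

def predict(model, x):
    return min(model, key=lambda label: math.dist(model[label], x))

# Unsupervised: k-means groups the same points without any labels.
def kmeans(X, k, iterations=10):
    centers = [X[0]]
    while len(centers) < k:  # farthest-point initialization
        centers.append(max(X, key=lambda p: min(math.dist(c, p) for c in centers)))
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for x in X:
            nearest = min(range(k), key=lambda i: math.dist(centers[i], x))
            clusters[nearest].append(x)
        centers = [centroid(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters

model = fit_nearest_centroid(points, labels)
print(predict(model, (0.5, 0.5)))
centers, clusters = kmeans(points, k=2)
print(clusters)
```

Note that k-means returns anonymous groups: deciding what each cluster means is still a human task, which is one reason the choice between these approaches affects how much human involvement the project needs.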

Plan for human intervention

Finally, determine when and where human assistance is needed for your AI model's success. You may need domain experts during development, for example to label training data or review the model's outputs. Identifying these points at the outset helps you anticipate staffing needs. Understanding the role of human judgment in your AI solution helps ensure that your model performs as expected and delivers the desired outcomes.
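A common pattern for planning human intervention is confidence-based routing: predictions above a threshold are accepted automatically, and the rest go to a human reviewer whose decisions can later be reused as labeled training data. The sketch below assumes the model reports a confidence score between 0 and 1; the 0.8 threshold and the `ReviewQueue` class are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class ReviewQueue:
    threshold: float = 0.8  # assumed cutoff; tune per use case and risk level
    pending: dict[str, tuple[str, float]] = field(default_factory=dict)
    corrections: list[tuple[str, str]] = field(default_factory=list)

    def route(self, item_id: str, label: str, confidence: float) -> str:
        """Auto-accept confident predictions; queue the rest for a human reviewer."""
        if confidence >= self.threshold:
            return label
        self.pending[item_id] = (label, confidence)
        return "needs_review"

    def resolve(self, item_id: str, human_label: str) -> None:
        """Record the reviewer's decision so it can be fed back as training data."""
        self.pending.pop(item_id)
        self.corrections.append((item_id, human_label))

queue = ReviewQueue()
print(queue.route("invoice-17", "approve", 0.95))
print(queue.route("invoice-18", "reject", 0.55))
queue.resolve("invoice-18", "approve")
print(queue.corrections)
```

The share of items landing in the review queue is also a useful staffing estimate: raising the threshold increases reviewer workload but lowers the risk of unreviewed errors.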

Endnote

Building an enterprise AI solution is a challenging process that requires careful planning and execution. The backbone of a robust enterprise AI solution includes high-quality data, sufficiently large datasets, a reliable data pipeline, and regular retraining so that the models keep performing well. By carefully defining the business problem to be solved with AI, gathering and assessing the data, choosing the right AI technologies, building a data pipeline, training the models, deploying the solution, monitoring and evaluating performance, and fostering a data-driven culture, organizations can harness the power of AI to improve their operations, drive business growth, and stay ahead of the curve.

LeewayHertz has extensive expertise in creating enterprise AI applications using AI technologies such as deep learning, machine learning, computer vision, and natural language processing. Contact LeewayHertz today to discuss your requirements and bring your vision to life!
