Ensuring data security in AI systems

As AI becomes deeply embedded in our everyday lives, the data fueling these intelligent systems becomes more valuable than ever, and with that value come heightened risks. AI systems now have access to vast amounts of sensitive data for tasks like business analytics and personalized recommendations, so safeguarding that data has become essential in today’s digital era. Data security is one of the major concerns of our time, and its implications extend far beyond the IT department.

Data security in AI systems is not just about safeguarding information; it’s about maintaining trust, preserving privacy, and ensuring the integrity of AI decision-making processes. The responsibility falls not just on database administrators or network engineers, but on everyone who interacts with data in any form. Whether creating, managing, or accessing data, every interaction with data is a potential chink in the armor of an organization’s security plan.

Whether you are a data scientist involved in the development of AI algorithms, a business executive making strategic decisions, or a customer interacting with AI applications, data security affects everyone. Hence, if you are dealing with data that holds any level of sensitivity — essentially, information you wouldn’t share with any arbitrary individual online — the onus of protecting that data falls upon you too.

In this article, we will delve into the intricacies of data security within AI systems, exploring the potential threats and identifying strategies to mitigate the risks involved.

- Why is data security in AI systems a critical need?

- Industry-specific data security challenges for AI systems

- Understanding the types of threats

- The role of regulations and compliance of data security in AI

- Principles for ensuring data security in AI systems

- Techniques and strategies for ensuring data security

- Best practices for AI in data security

- Future trends in AI data security

Why is data security in AI systems a critical need?

With advancements taking place at an unparalleled pace, the growth of artificial intelligence is impossible to ignore. As AI continues to disrupt numerous business sectors, data security in AI systems becomes increasingly important. Traditionally, data security was mainly a concern for large enterprises and their networks because of the substantial amount of sensitive information they handled. With the rise of AI programs, however, the landscape has changed. AI, and generative AI in particular, relies heavily on data for training and decision-making, which exposes it to security risks. Many AI initiatives have overlooked the significance of data integrity, assuming that pre-existing security measures are adequate. This approach fails to account for targeted malicious attacks on AI systems. Here are three compelling reasons highlighting the critical need for data security in AI systems:

- Threat of model poisoning: Model poisoning is a growing concern within AI systems. This nefarious practice involves malicious entities introducing misleading data into AI training sets, leading to skewed interpretations and, potentially, severe repercussions. In earlier stages of AI development, inaccurate data often led to misinterpretations. However, as AI evolves and becomes more sophisticated, these errors can be exploited for more malicious purposes, impacting businesses heavily in areas like fraud detection and code debugging. Model poisoning could even be used as a distraction, consuming resources while real threats remain unaddressed. Therefore, comprehensive data security is essential to protect businesses from such devastating attacks.

- Data privacy is paramount: As consumers become increasingly aware of their data privacy rights, businesses need to prioritize their data security measures. Companies must ensure their AI models respect privacy laws and demonstrate transparency in their use of data. However, not all companies currently communicate their data usage policies clearly. Simplifying privacy policies and clearly communicating data usage plans will build consumer trust and support regulatory compliance. Data security is crucial in preventing sensitive information from falling into the wrong hands.

- Mitigating insider threats: As AI continues to rise, there is an increased risk of resentment from employees displaced by automation, potentially leading to insider threats. Traditional cybersecurity measures that focus primarily on external threats are ill-equipped to deal with these internal issues. Adopting agile security practices, such as Zero Trust policies and time-limited access controls, can mitigate these risks. Moreover, a well-planned roadmap for AI adoption, along with transparent communication, can reassure employees and offer opportunities for upskilling or transitioning to new roles. It’s crucial to portray AI as an asset that enhances productivity rather than a threat to job security.

Industry-specific data security challenges for AI systems

Data security challenges in AI systems vary significantly across industries, each with its unique requirements and risks:

- Healthcare: The healthcare industry deals with highly sensitive patient data. AI systems in healthcare must comply with stringent regulations like HIPAA in the United States, ensuring the confidentiality and integrity of patient records. The risk is not just limited to data breaches affecting privacy but extends to potentially life-threatening situations if medical data is tampered with.

- Finance: Financial institutions use AI to process confidential financial data. Security concerns include protecting against fraud, ensuring transaction integrity, and complying with regulations such as SOX for financial reporting and GDPR for personal data. A breach can lead to significant financial loss and damage to customer trust.

- Retail: Retailers use AI for personalizing customer experiences, requiring them to safeguard customer data against identity theft and unauthorized access. Retail AI systems need robust security measures to prevent data breaches that can lead to loss of customer trust and legal repercussions.

- Automotive: In the automotive sector, especially in the development of autonomous vehicles, AI systems must be secured against hacking to ensure passenger safety. Data security in this industry is critical to prevent unauthorized access that could lead to accidents or misuse of vehicles.

- Manufacturing: AI in manufacturing involves sensitive industrial data and intellectual property. Security measures are needed to protect against industrial espionage and sabotage. Manufacturing AI systems often control critical infrastructure, making their security paramount.

- Education: AI in education handles student data and learning materials. Ensuring the security and privacy of educational data is crucial to protect students and comply with educational privacy laws.

- Energy and utilities: AI in this sector often deals with critical infrastructure data. Security challenges include protecting against attacks that could disrupt essential services, like power or water supply.

- Telecommunications: AI in telecom must protect customer data and maintain the integrity of communication networks. Security challenges include safeguarding against unauthorized access and ensuring the reliability of communication services.

- Agriculture: AI in agriculture might handle data related to crop yields, weather patterns, and farm operations. Ensuring the security of this data is crucial for the privacy and economic well-being of farmers and the food supply chain.

Understanding the types of threats

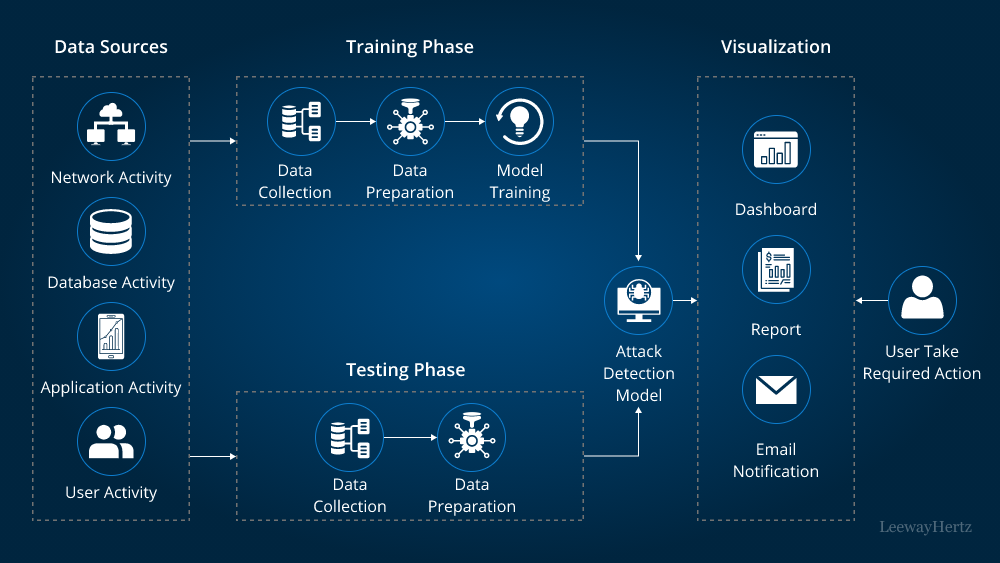

As the application of artificial intelligence becomes more pervasive in our everyday lives, understanding the nature of threats associated with data security is crucial. These threats can range from manipulation of AI models to privacy infringements, insider threats, and even AI-driven attacks. Let’s delve into these issues and shed some light on their significance and potential impact on AI systems.

- Model poisoning: This term refers to the manipulation of an AI model’s learning process. Adversaries can tamper with the data used in training, causing the AI to learn incorrectly and make faulty predictions or classifications. A closely related threat targets a model after training through adversarial examples: input data deliberately designed to cause the model to make a mistake. For instance, a well-crafted adversarial image might be indistinguishable from a regular image to a human but can cause an image recognition AI to misclassify it. Mitigating these attacks can be challenging. One suggested protection is ‘adversarial training’, which adds such tricky, misleading examples to the learning process so the model learns to handle them. Another is ‘defensive distillation’, which smooths the model’s decision-making and makes it more difficult for attackers to find misleading examples.

- Data privacy: Data privacy is a major concern as AI systems often rely on massive amounts of data to train. For example, a machine learning model used for personalizing user experiences on a platform might need access to sensitive user information, such as browsing histories or personal preferences. Breaches can lead to exposure of this sensitive data. Techniques like Differential Privacy can help in this context. Differential Privacy provides a mathematical framework for quantifying data privacy by adding a carefully calculated amount of random “noise” to the data. This approach can obscure the presence of any single individual within the dataset while preserving statistical patterns that can be learned from the data.

- Data tampering: Data tampering is a serious threat in the context of AI and ML because the integrity of data is crucial for these systems. An adversary could modify the data used for training or inference, causing the system to behave incorrectly. For instance, a self-driving car’s AI system could be tricked into misinterpreting road signs if the images it receives are altered. Data authenticity techniques like cryptographic signing can help ensure that data has not been tampered with. Also, solutions like secure multi-party computation can enable multiple parties to collectively compute a function over their inputs while keeping those inputs private.

- Insider threats: Insider threats are especially dangerous because insiders have authorized access to sensitive information. Insiders can misuse their access to steal data, cause disruptions, or conduct other harmful actions. Techniques to mitigate insider threats include monitoring for abnormal behavior, implementing least privilege policies, and using techniques like Role-Based Access Control (RBAC) or Attribute-Based Access Control (ABAC) to limit the access rights of users.

- Deliberate attacks: Deliberate attacks on AI systems can be especially damaging because of the high value and sensitivity of the data involved. For instance, an adversary might target a healthcare AI system to gain access to medical records. Robust cybersecurity measures, including encryption, intrusion detection systems, and secure software development practices, are essential in protecting against these threats. Also, techniques like AI fuzzing, which is a process that bombards an AI system with random inputs to find vulnerabilities, can help in improving the robustness of the system.

- Mass adoption: The mass adoption of AI and ML technologies brings an increased risk of security incidents simply because more potential targets are available. Also, as these technologies become more complex and interconnected, the attack surface expands. Secure coding practices, comprehensive testing, and continuous security monitoring can help in reducing the risks. It’s also crucial to maintain up-to-date knowledge about emerging threats and vulnerabilities, through means such as shared threat intelligence.

- AI-driven attacks: AI itself can be weaponized by threat actors. For example, machine learning algorithms can be used to discover vulnerabilities, craft attacks, or evade detection. Deepfakes, synthetic media created using AI, are another form of AI-driven threats, used to spread misinformation or conduct fraud. Defending against AI-driven attacks requires advanced detection systems, capable of identifying subtle patterns indicative of such attacks. Also, as AI-driven threats continue to evolve, the security community needs to invest in AI-driven defense mechanisms to match the sophistication of these attacks.
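The data-tampering item above mentions cryptographic signing. A minimal sketch of the same idea uses an HMAC, a keyed hash that here stands in for a public-key digital signature, to detect whether a training record changed after it was tagged. It assumes a secret key shared between the data producer and the training pipeline; the key, record fields and function names are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret; in practice it would come from a key-management service.
SECRET_KEY = b"replace-with-a-key-from-your-kms"

def tag_record(record: dict, key: bytes = SECRET_KEY) -> str:
    """Compute an HMAC-SHA256 tag over a canonical JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()

def verify_record(record: dict, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Recompute the tag and compare in constant time to detect tampering."""
    return hmac.compare_digest(tag_record(record, key), tag)

# Usage: tag a labeled training example, then detect a flipped label.
sample = {"image_id": "sign_0042", "label": "stop"}
tag = tag_record(sample)
print(verify_record(sample, tag))    # True
tampered = dict(sample, label="speed_limit_45")
print(verify_record(tampered, tag))  # False
```

The canonical JSON encoding (sorted keys, fixed separators) matters: two encodings of the same record must produce identical bytes, or verification would fail spuriously.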

Unmatched data safety with LeewayHertz’s AI development services

LeewayHertz follows stringent data security measures at every step of the AI development process, delivering robust and reliable solutions.

The role of regulations and compliance of data security in AI

Regulations and compliance play a crucial role in data security in AI systems. They serve as guidelines and rules that organizations need to adhere to while using AI and related technologies. Regulations provide a framework to follow, ensuring that companies handle data responsibly, safeguard individual privacy rights, and maintain ethical AI usage.

Let’s delve into some key aspects of how regulations and compliance shape data security in AI systems:

- Data protection: Regulatory measures like the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the United States enforce strict rules about how data should be collected, stored, processed, and shared. Under these regulations, organizations are expected to protect the data they use to train and run AI systems, for example by anonymizing or pseudonymizing it where appropriate, and to keep data processing activities transparent and justifiable under the legal grounds the regulations set out. Companies that violate these rules can face heavy fines, highlighting the role of regulation in driving data security efforts.

- Data sovereignty and localization: Many countries have enacted laws requiring data about their citizens to be stored within the country. This can pose challenges for global AI-driven services which may have to modify their data handling and storage practices to comply with these laws. Ensuring compliance can help prevent legal disputes and sanctions and can encourage the implementation of more robust data security measures.

- Ethical AI use: There’s an increasing push for regulations that ensure AI systems are used ethically, in a manner that respects human rights and does not lead to discrimination or unfairness. These regulations can influence how AI models are developed and trained. For example, AI systems must be designed to avoid bias, which can be introduced into a system via the data it is trained on. Regulatory compliance in this area can help prevent misuse of AI and enhance public trust in these systems.

- Auditing and accountability: Regulations often require organizations to be able to demonstrate compliance through audits. This need for transparency and accountability can encourage companies to implement more robust data security practices and to maintain thorough documentation of their data handling and AI model development processes.

- Cybersecurity standards: Certain industries, like healthcare or finance, have specific data security requirements, such as the Health Insurance Portability and Accountability Act (HIPAA) in the U.S., or the industry-mandated Payment Card Industry Data Security Standard (PCI DSS), which applies globally to organizations handling card data. These frameworks outline strict data security standards that must be adhered to when building and deploying AI systems.

Overall, regulatory compliance plays a fundamental role in ensuring data security in AI. It not only provides a set of standards to adhere to but also encourages transparency, accountability, and ethical practices. However, it’s crucial to note that as AI technology continues to evolve, regulations will need to keep pace to effectively mitigate risks and protect individuals and organizations.

Principles for ensuring data security in AI systems

In the realm of Artificial Intelligence (AI), data security principles are paramount. Let’s consider several key data security controls, including encryption, Data Loss Prevention (DLP), data classification, tokenization, data masking, and data-level access control.

Encryption

Numerous regulatory standards, like the Payment Card Industry Data Security Standard (PCI DSS) and the Health Insurance Portability and Accountability Act (HIPAA), require or strongly imply the necessity of data encryption, whether data is in transit or at rest. However, it’s important to use encryption as a control based on identified threats, not just compliance requirements. For example, it makes sense to encrypt mobile devices to prevent data loss in case of device theft, but one might question the necessity of encrypting data center servers unless there’s a specific reason for it. It becomes even more complex when considering public cloud instances where the threat model might involve another cloud user, an attacker with access to your instance, or a rogue employee of the cloud provider. Implementations of encryption should, therefore, be dependent on the specific threat model in each context, not simply treated as a compliance checkbox.

Data Loss Prevention (DLP)

Data Loss Prevention (DLP) is another important element in data security. However, the effectiveness of DLP often stirs up debate. Some argue that it only serves to prevent accidental data leaks by well-meaning employees, while others believe it can be an effective tool against more malicious activities. While DLP is rarely named explicitly in compliance documents, it is commonly used as an implied control for various regulations and standards, including PCI DSS and GDPR. However, DLP implementation can be a complex and operationally burdensome task. The nature of this implementation varies greatly based on the specific threat model, whether it’s about preventing accidental leaks, stopping malicious insiders, or supporting privacy in cloud-based environments.
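As a rough illustration of the pattern-matching core that many DLP tools build on, the sketch below scans outbound text for candidate payment card numbers and uses the Luhn checksum to cut false positives. The regex, the function names and the block/allow policy are assumptions for illustration; production DLP adds many more detectors, context rules and review workflows.

```python
import re

# Candidate card numbers: 13-19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")

def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def scan_for_card_numbers(text: str) -> list[str]:
    """Return Luhn-valid, card-like numbers found in text (separators stripped)."""
    hits = []
    for match in CARD_PATTERN.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.append(digits)
    return hits

def allow_outbound(text: str) -> bool:
    """Toy policy (hypothetical): block any message that appears to contain a card number."""
    return not scan_for_card_numbers(text)

print(allow_outbound("Ticket #12345 resolved"))             # True
print(allow_outbound("Card on file: 4111 1111 1111 1111"))  # False
```

The checksum step is what separates a plausible card number from an arbitrary long digit string, which is one reason pure keyword or regex rules tend to produce noisy alerts.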

Data classification

Data classification is pivotal in AI data security, enabling the identification, marking, and protection of sensitive data types. This categorization allows robust protection measures, such as stringent encryption and access controls, to be applied to the data that needs them. It aids in regulatory compliance (GDPR, CCPA, HIPAA) and enables effective role-based access controls and response strategies during security incidents. Data classification also supports data minimization, reducing the risk of data breaches. In AI, it can also help exclude irrelevant or unnecessary fields from training data, which may improve model performance. Importantly, it ensures that sensitive data receives the right protection measures, reducing breach risk while preserving data integrity and confidentiality.
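A minimal rule-based sketch of field-level classification, assuming a hypothetical three-tier scheme (`public`, `internal`, `restricted`) and simple regex detectors. Real classifiers usually combine such rules with metadata, data catalogs and ML-based detection. The labels then drive downstream controls, and the `minimize` helper shows how they can support data minimization before training.

```python
import re

# Hypothetical detectors, each mapped to the sensitivity tier it implies.
DETECTORS = {
    "email": (re.compile(r"^[^@\s]+@[^@\s]+\.[a-z]{2,}$", re.I), "restricted"),
    "us_ssn": (re.compile(r"^\d{3}-\d{2}-\d{4}$"), "restricted"),
    "phone": (re.compile(r"^\+?[\d\s().-]{7,15}$"), "internal"),
}
RANK = {"public": 0, "internal": 1, "restricted": 2}

def classify_value(value: str) -> str:
    """Return the highest sensitivity tier matched by any detector."""
    label = "public"
    for pattern, tier in DETECTORS.values():
        if pattern.match(value) and RANK[tier] > RANK[label]:
            label = tier
    return label

def classify_record(record: dict) -> dict:
    """Label every field of a record with a sensitivity tier."""
    return {field: classify_value(str(v)) for field, v in record.items()}

def minimize(record: dict, max_tier: str = "internal") -> dict:
    """Data minimization: keep only fields at or below max_tier."""
    labels = classify_record(record)
    return {f: v for f, v in record.items() if RANK[labels[f]] <= RANK[max_tier]}

record = {"email": "jane@example.com", "ssn": "123-45-6789",
          "phone": "555-010-0199", "country": "DE"}
print(classify_record(record))
print(minimize(record))  # {'phone': '555-010-0199', 'country': 'DE'}
```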

Tokenization

Tokenization enhances AI data security by replacing sensitive data with non-sensitive ‘tokens’. These tokens carry no exploitable meaning on their own, so the data is unusable to unauthorized individuals or systems. In case of a breach, tokenized data remains safe as long as the ‘token vault’ that maps tokens back to the original values is not also compromised. Tokenization also supports regulatory compliance, reducing the scope of systems covered by standards like PCI DSS. During data transfer in AI systems, tokenization minimizes risk by ensuring that only tokens, not actual sensitive data, are processed. It helps maintain privacy in AI applications dealing with sensitive data. It also enables secure data analysis: format-preserving tokenization transforms sensitive data into non-sensitive tokens without altering the original format, which suits AI models that must be trained on large sensitive datasets. Hence, tokenization is a powerful strategy in AI for protecting sensitive data, ensuring compliance, reducing data breach risks, and preserving data utility.
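A minimal sketch of vault-based tokenization: sensitive values are swapped for random tokens, and the mapping lives only in the vault. The in-memory dictionaries stand in for what would really be a hardened, access-controlled vault service, and the class and method names are hypothetical. Unlike the format-preserving variant mentioned above, these tokens do not keep the original format.

```python
import secrets

class TokenVault:
    """Toy token vault (hypothetical): random tokens stand in for sensitive values."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Deterministic per vault: the same value always gets the same token,
        # so joins and group-bys still work on tokenized data.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(8)
        while token in self._token_to_value:  # guard against an unlikely collision
            token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # In a real system, only tightly authorized services could call this.
        return self._token_to_value[token]

vault = TokenVault()
rows = [{"card": "4111111111111111", "amount": 42.0},
        {"card": "4111111111111111", "amount": 13.5}]
# Downstream AI pipelines only ever see the tokenized rows.
safe_rows = [dict(r, card=vault.tokenize(r["card"])) for r in rows]
print(safe_rows)
```

Because tokens are random rather than derived from the values, a leaked token reveals nothing without the vault. The deterministic mapping is a design trade-off: it preserves joins and counts but also reveals which records share a value.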

Data masking

Data masking is a security technique that replaces sensitive data with scrambled or artificial data while maintaining its original structure. This method allows AI systems to work on datasets without exposing sensitive data, ensuring privacy and aiding secure data analysis and testing. Data masking helps comply with privacy laws like GDPR and reduces the impact of data breaches, since masked copies do not contain the actual values. It also facilitates secure data sharing and collaboration, allowing safe analysis or AI model training. Masking can also be designed to retain key statistical properties of the data, so that it remains useful for AI systems. Thus, it plays an essential role in AI data security, regulatory compliance, and risk minimization.
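A sketch of static, format-preserving masking that keeps each value’s shape (separators, length, email domain) so schemas and format validators still accept the masked copy. The masking rules and field names are assumptions chosen for illustration; this simple approach does not by itself preserve the statistical properties of numeric columns, which needs dedicated techniques.

```python
def mask_digits(value: str, keep_last: int = 4) -> str:
    """Replace all but the last `keep_last` digits with 'X', keeping separators."""
    total = sum(ch.isdigit() for ch in value)
    seen = 0
    out = []
    for ch in value:
        if ch.isdigit():
            seen += 1
            out.append(ch if seen > total - keep_last else "X")
        else:
            out.append(ch)
    return "".join(out)

def mask_email(value: str) -> str:
    """Keep the first character of the local part and the full domain."""
    local, _, domain = value.partition("@")
    return local[:1] + "*" * max(len(local) - 1, 0) + "@" + domain

def mask_record(record: dict) -> dict:
    """Return a masked copy; hypothetical field names decide which rule applies."""
    masked = dict(record)
    if "email" in masked:
        masked["email"] = mask_email(masked["email"])
    for field in ("ssn", "card"):
        if field in masked:
            masked[field] = mask_digits(masked[field])
    return masked

print(mask_record({"email": "jane@example.com", "ssn": "123-45-6789", "country": "DE"}))
# {'email': 'j***@example.com', 'ssn': 'XXX-XX-6789', 'country': 'DE'}
```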

Data-level access control

Data-level access control is a pivotal security practice in AI systems, where detailed policies define who can access specific data and their permitted actions, thus minimizing data exposure. It provides a robust defense against unauthorized access, limiting potential data misuse. This method is instrumental in achieving regulatory compliance with data protection laws like GDPR and HIPAA. Features like auditing capabilities allow monitoring data access and detecting unusual patterns, indicating potential breaches. Furthermore, context-aware controls add another layer of security, regulating access based on factors like location or time. In AI, it’s especially useful when training models on sensitive datasets by restricting exposure to necessary data only. Therefore, data-level access control is vital for managing data access, reducing breach risks, and supporting regulatory compliance.
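A minimal attribute-based sketch of data-level access control: each policy names a role, a dataset, the permitted actions and a context condition (here, an allowed hour window), and every decision is written to an audit log. The policy structure, roles and dataset names are hypothetical; real deployments usually delegate this to a policy engine or the data platform’s native controls. Anything not explicitly allowed is denied.

```python
from dataclasses import dataclass, field
from datetime import datetime

@dataclass(frozen=True)
class Policy:
    role: str
    dataset: str
    actions: frozenset
    hours: tuple = (0, 24)  # context condition: allowed hours [start, end)

@dataclass
class AccessController:
    policies: list
    audit_log: list = field(default_factory=list)

    def check(self, user: str, role: str, dataset: str, action: str, when: datetime) -> bool:
        """Deny by default; allow only if a policy matches role, dataset, action and time."""
        allowed = any(
            p.role == role
            and p.dataset == dataset
            and action in p.actions
            and p.hours[0] <= when.hour < p.hours[1]
            for p in self.policies
        )
        # Record every decision so unusual access patterns can be reviewed later.
        self.audit_log.append((when.isoformat(), user, role, dataset, action, allowed))
        return allowed

acl = AccessController(policies=[
    Policy("ml_engineer", "patients_deidentified", frozenset({"read"}), hours=(8, 18)),
    Policy("data_steward", "patients_raw", frozenset({"read", "update"})),
])
office_hours = datetime(2024, 5, 6, 10, 30)
print(acl.check("alice", "ml_engineer", "patients_deidentified", "read", office_hours))  # True
print(acl.check("alice", "ml_engineer", "patients_raw", "read", office_hours))           # False
print(acl.check("alice", "ml_engineer", "patients_deidentified", "read",
                datetime(2024, 5, 6, 23, 0)))                                          # False
```

Training jobs running as `ml_engineer` can only ever see the de-identified dataset, which is the "restrict exposure to necessary data only" idea described above.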

Techniques and strategies for ensuring data security

This section will delve into the array of techniques and strategies essential for bolstering data security in AI systems, ensuring integrity, confidentiality, and availability of sensitive information.

AI model robustness

AI model robustness, in the context of data security, refers to the resilience of an AI system when confronted with variations in the input data or adversarial attacks intended to manipulate the model’s output. Robustness can be viewed from two perspectives: accuracy (ensuring that the model provides correct results in the face of noisy or manipulated inputs) and security (ensuring that the model isn’t vulnerable to attacks).

Here are a few techniques and strategies used to ensure AI model robustness:

- Adversarial training: This involves training the model on adversarial examples – inputs that have been intentionally designed to cause the model to make a mistake. By training on these examples, the model learns to make correct predictions even in the face of malicious inputs. However, adversarial training can be computationally expensive and doesn’t always ensure complete robustness against unseen attacks.

- Defensive distillation: In this technique, a second model (the ‘student’) is trained on the softened probability outputs of the original model (the ‘teacher’), typically produced at a high softmax temperature, which yields a smoother mapping of inputs to outputs. This smoother mapping can make it more difficult for an attacker to find inputs that will cause the student model to make mistakes.

- Feature squeezing: Feature squeezing reduces the complexity of the data that the model uses to make decisions. For example, it might reduce the color depth of images or round off decimal numbers to fewer places. By simplifying the data, feature squeezing can make it harder for attackers to manipulate the model’s inputs in a way that causes mistakes.

- Regularization: Regularization methods, such as L1 and L2, add a penalty to the loss function during training to prevent overfitting. By discouraging overly complex decision functions, regularization can make a model less sensitive to small changes in the input data, which may reduce, though not eliminate, its vulnerability to adversarial attacks.

- Privacy-preserving machine learning: Techniques like differential privacy and federated learning reduce the risk that the model leaks sensitive information from the training data, thereby enhancing data security.

- Input validation: This involves adding checks to ensure that the inputs to the model are valid before they are processed. For example, an image classification model might check that its inputs are actually images and that they are within the expected size and color range. This can prevent certain types of attacks where the model is given inappropriate inputs.

- Model hardening: This is the process of stress testing an AI model using different adversarial techniques. By doing so, we can discover vulnerabilities and fix them, thereby making the model more resilient.

These are just a few of the methods used to improve the robustness of AI models in the context of data security. By employing these techniques, it’s possible to develop models that are resistant to adversarial attacks and that maintain their accuracy even when they are fed noisy or manipulated data. However, no model can ever be 100% secure or accurate, so it’s important to consider these techniques as part of a larger security and accuracy strategy.
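To make adversarial training concrete, here is a small sketch that uses the fast gradient sign method (FGSM), one common way of generating adversarial examples, against a logistic-regression classifier written in plain Python. The dataset, `epsilon` values and learning rate are assumptions chosen for illustration; for deep networks the input gradient would come from a framework’s automatic differentiation instead of the closed form used here.

```python
import math
import random

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, epsilon):
    """FGSM: move each feature by epsilon in the direction that increases the loss.
    For logistic regression with cross-entropy loss, d(loss)/dx = (p - y) * w."""
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * math.copysign(1.0, g) if g != 0 else xi
            for xi, g in zip(x, grad)]

def train(data, epochs=50, lr=0.1, adv_epsilon=0.0, seed=0):
    """SGD on cross-entropy; if adv_epsilon > 0, also fit an FGSM copy of each example."""
    rng = random.Random(seed)
    data = list(data)
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            batch = [(x, y)]
            if adv_epsilon > 0:
                # Adversarial training: craft the attack against the current model.
                batch.append((fgsm(w, b, x, y, adv_epsilon), y))
            for xb, yb in batch:
                err = predict(w, b, xb) - yb
                w = [wi - lr * err * xi for wi, xi in zip(w, xb)]
                b -= lr * err
    return w, b

def accuracy(w, b, data, epsilon=0.0):
    """Accuracy on clean inputs, or on FGSM-perturbed inputs when epsilon > 0."""
    correct = 0
    for x, y in data:
        xe = fgsm(w, b, x, y, epsilon) if epsilon > 0 else x
        correct += (predict(w, b, xe) >= 0.5) == (y == 1)
    return correct / len(data)

def make_blobs(n=100, seed=1):
    """Hypothetical 2-D data: class 0 around (-2, -2), class 1 around (2, 2)."""
    rng = random.Random(seed)
    data = []
    for i in range(n):
        y = i % 2
        c = 2.0 if y == 1 else -2.0
        data.append(([rng.gauss(c, 0.5), rng.gauss(c, 0.5)], y))
    return data

train_set, test_set = make_blobs(seed=1), make_blobs(seed=2)
w_std, b_std = train(train_set)
w_adv, b_adv = train(train_set, adv_epsilon=0.5)
for name, (w, b) in [("standard", (w_std, b_std)), ("adversarial", (w_adv, b_adv))]:
    print(name, accuracy(w, b, test_set), accuracy(w, b, test_set, epsilon=1.0))
```

Comparing the two numbers printed for each model shows how much FGSM degrades accuracy. How much adversarial training helps depends heavily on the model and data; for a linear model on symmetric, well-separated clusters like these, the difference may be small.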

Secure multi-party computation

Secure Multi-party Computation (SMPC) is a subfield in cryptography focused on enabling multiple parties to compute a function over their inputs while keeping those inputs private.

SMPC is a crucial method for ensuring data security in scenarios where sensitive data must be processed without being fully disclosed. This could be for reasons like privacy concerns, competitive business interests, legal restrictions, or other factors.

Here is a simplified breakdown of how SMPC works:

- Input secret sharing: Each party starts by converting their private input into a number of “shares,” using a cryptographic method that ensures the shares reveal no information about the original input unless a certain number of them (a threshold) are combined. Each party then distributes their shares to the other parties in the computation.

- Computation: The parties perform the computation on the shares instead of the original data, so the original inputs are never revealed. Computation is generally built from addition and multiplication operations, which are the basis for more complex computations, and each operation is carried out in a way that preserves the secrecy of the inputs.

- Result reconstruction: After the computation has been completed, the parties combine their result shares to obtain the final output. Only this result is revealed; the individual inputs remain hidden, apart from whatever the output itself implies about them.
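The three steps above can be sketched with additive secret sharing over a prime field, assuming honest-but-curious parties and an n-out-of-n threshold (every share is needed to reconstruct). The sketch computes only a sum; secure multiplication requires extra machinery, such as Beaver triples, which is omitted here. The modulus, function names and input values are illustrative.

```python
import secrets

PRIME = 2**61 - 1  # field modulus (a Mersenne prime); all arithmetic is mod PRIME

def share(secret: int, n_parties: int) -> list[int]:
    """Step 1: split a secret into n additive shares; any n-1 of them look uniformly random."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares

def reconstruct(shares: list[int]) -> int:
    """Step 3: combine shares to reveal the value they encode."""
    return sum(shares) % PRIME

def secure_sum(inputs: list[int]) -> int:
    """Simulate all parties in one process (for illustration only)."""
    n = len(inputs)
    # Step 1: party i splits its input and sends share j to party j.
    all_shares = [share(x, n) for x in inputs]
    # Step 2: party j locally adds the shares it received, one from every party.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)]
    # Step 3: the partial sums are published; combining them reveals only the total.
    return reconstruct(partial_sums)

# Three hypothetical hospitals compute their total patient count.
print(secure_sum([1200, 3400, 560]))  # 5160
```

In a real deployment each party runs on separate infrastructure and only the step 1 and step 3 messages cross the network; simulating every party in one process, as here, provides no privacy at all.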

SMPC’s core principle is that no individual party should be able to determine anything about the other parties’ private inputs from the shares they receive or from the computation’s final output. To ensure this, SMPC protocols are designed to be secure against collusion, meaning even if some of the parties work together, they still can’t discover other parties’ inputs unless they meet the threshold number of colluders. In addition to its use in privacy-preserving data analysis, SMPC has potential applications in areas like secure voting, auctions, privacy-preserving data mining, and distributed machine learning.

However, it’s important to note that SMPC protocols can be complex and computationally intensive, and their implementation requires careful attention to ensure security is maintained at all stages of the computation. Moreover, many SMPC protocols assume that parties will follow the protocol correctly (the so-called semi-honest model); if a party deviates from it, security can be compromised unless a protocol designed for malicious adversaries is used. As such, SMPC should be part of a broader data security strategy and needs to be combined with other techniques to ensure complete data protection.

Differential privacy

Differential privacy is a framework for publicly sharing information about a dataset by describing the patterns of groups within the dataset while withholding information about individuals. It is a mathematical technique that provides guarantees that the privacy of individual data records is preserved, even when aggregate statistics are published.

Here is how differential privacy works:

- Noise addition: The primary mechanism of differential privacy is the addition of carefully calculated noise to the raw data or query results from the database. The noise is generally drawn from a specific type of probability distribution, such as a Laplace or Gaussian distribution.

- Privacy budget: Each differential privacy system has a measure called the ‘epsilon’ (ε), which represents the amount of privacy budget. A smaller epsilon means more privacy but less accuracy, while a larger epsilon means less privacy but more accuracy. Every time a query is made, some of the privacy budget is used up.

- Randomized algorithm: Differential privacy works by using a randomized algorithm when releasing statistical information. This algorithm takes into account the overall sensitivity of a function (how much the function’s output can change given a change in the input database) and the desired privacy budget to determine the amount of noise to be added.
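The three ingredients above come together in the Laplace mechanism, one standard way of achieving differential privacy for numeric queries: the noise scale is the query’s sensitivity divided by epsilon, and each answered query is charged against a total budget (simple sequential composition). The class name and budget policy are hypothetical; production systems should use a vetted differential privacy library, since naive floating-point noise generation has known pitfalls.

```python
import random

class PrivateCounter:
    """Answers counting queries with Laplace noise under a total epsilon budget."""

    def __init__(self, records, total_epsilon: float, seed=None):
        self.records = list(records)
        self.remaining = total_epsilon
        self.rng = random.Random(seed)

    def _laplace(self, scale: float) -> float:
        # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
        return self.rng.expovariate(1 / scale) - self.rng.expovariate(1 / scale)

    def count(self, predicate, epsilon: float) -> float:
        """Noisy count of records matching predicate; spends `epsilon` from the budget."""
        if epsilon <= 0 or epsilon > self.remaining:
            raise ValueError("privacy budget exhausted or invalid epsilon")
        self.remaining -= epsilon
        sensitivity = 1.0  # adding or removing one person changes a count by at most 1
        true_count = sum(1 for r in self.records if predicate(r))
        return true_count + self._laplace(sensitivity / epsilon)

ages = [23, 35, 41, 29, 52, 67, 38]
db = PrivateCounter(ages, total_epsilon=1.0)
print(db.count(lambda a: a > 40, epsilon=0.5))  # true answer is 3, plus random noise
print(db.remaining)  # 0.5
```

A smaller epsilon gives a larger noise scale, which is the privacy/accuracy trade-off described in the list; once the budget is spent, further queries are refused.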

Here is the core idea: when differential privacy is applied, the probability of any specific output of a database query changes only by a small, bounded amount, whether or not any individual’s data is included in the database. As a result, an observer cannot reliably determine whether any individual’s data was used in the query, which protects that individual’s privacy. Differential privacy has been applied in many domains, including statistical databases, machine learning, and data mining. It is one of the key techniques used by large tech companies like Apple and Google to collect user data in a privacy-preserving manner. For instance, Apple uses differential privacy to collect usage patterns of emoji while preserving the privacy of individual users.
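Formally, the core idea above is usually stated as follows, where M is the randomized mechanism, D and D′ are any two datasets that differ in one individual’s record, and S is any set of possible outputs. The second line gives the Laplace mechanism for a numeric query f whose sensitivity is Δf.

```latex
\Pr[\mathcal{M}(D) \in S] \le e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S]

\mathcal{M}(D) = f(D) + \mathrm{Lap}\!\left(\frac{\Delta f}{\varepsilon}\right),
\qquad
\Delta f = \max_{D, D'} \lvert f(D) - f(D') \rvert
```

A smaller ε forces the two probabilities closer together, which is why it means stronger privacy, and it also increases the noise scale Δf/ε, which is why it costs accuracy.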

However, it’s important to understand that the choice of epsilon and the noise distribution, as well as how they are implemented, can greatly affect the privacy guarantees of the system. Balancing privacy protection with utility (accuracy of the data) is one of the key challenges in implementing differential privacy.

Homomorphic encryption

Homomorphic encryption is a cryptographic method that allows computations to be performed on encrypted data without decrypting it first. The result of this computation, when decrypted, matches the result of the same operation performed on the original, unencrypted data.

This offers a powerful tool for data security and privacy because it means you can perform operations on sensitive data while it remains encrypted, thereby limiting the risk of exposure.

Here’s a simple explanation of how it works:

- Encryption: The data owner encrypts their data with a specific key. This encrypted data (ciphertext) can then be safely sent over unsecured networks or stored in an untrusted environment, because it’s meaningless without the decryption key.

- Computation: An algorithm (which could be controlled by a third-party, like a cloud server) performs computations directly on this ciphertext. The homomorphic property ensures that operations on the ciphertext correspond to the same operations on the plaintext.

- Decryption: The results of these computations, still in encrypted form, are sent back to the data owner. The owner uses their private decryption key to decrypt the result. The decrypted result is the same as if the computation had been done on the original, unencrypted data.

It’s important to note that there are different types of homomorphic encryption depending on the complexity of operations allowed on the ciphertext:

- Partially Homomorphic Encryption (PHE): This supports unlimited operations of a single type, either addition or multiplication, not both.

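- Somewhat Homomorphic Encryption (SHE): This supports both addition and multiplication, but only for a limited number of operations, because noise accumulates in the ciphertext with each operation (especially multiplication) until it can no longer be decrypted correctly.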

- Fully Homomorphic Encryption (FHE): This supports unlimited operations of both types on ciphertexts. It remained a theoretical concept for many years until Craig Gentry introduced the first FHE scheme in 2009.

Homomorphic encryption is a promising technique for ensuring data privacy in many applications, especially cloud computing and machine learning on encrypted data. However, the computational overhead for fully homomorphic encryption is currently high, which limits its practical usage. As research continues in this field, more efficient implementations may be discovered, enabling broader adoption of this powerful cryptographic tool.

Federated learning

Federated learning is a machine learning approach that allows a model to be trained across multiple decentralized devices or servers holding local data samples, without exchanging the data itself. This method is used to ensure data privacy and reduce communication costs in scenarios where data can’t or shouldn’t be shared due to privacy concerns, regulatory constraints, or simply the amount of bandwidth required to send the data.

Here is how federated learning works:

- Local training: Each participant (which could be a server or a device like a smartphone) trains a model on its local data. This means the raw data never leaves the device, which preserves privacy.

- Model sharing: After training on local data, each participant sends a summary of their locally updated model (not the data) to a central server. This summary often takes the form of model weights or gradients.

- Aggregation: The central server collects the updates from all participants and aggregates them to form a global model. The aggregation process typically involves computing an average, though other methods can be used.

- Global model distribution: The updated global model is then sent back to all participants. The participants replace their local models with the updated global model.

- Repeat: The preceding steps are repeated over multiple rounds until the model’s performance reaches a satisfactory level.
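
The loop above can be sketched as a toy simulation of Federated Averaging (FedAvg), a widely used algorithm in which the server averages client weights, weighted by each client’s sample count. The one-feature linear model, the synthetic client data, and all hyperparameters are assumptions for illustration; only weights, never the raw (x, y) pairs, reach the aggregation step.

```python
import random

# Toy FedAvg simulation with a one-feature linear model y = w * x + b.
# Client data, learning rate, epochs, and round count are illustrative assumptions.


def local_train(weights, data, lr=0.05, epochs=5):
    """Local training: gradient descent on one client's private data (MSE loss)."""
    w, b = weights
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w, b = w - lr * grad_w, b - lr * grad_b
    return (w, b)


def aggregate(updates):
    """Aggregation: average client weights, weighted by client sample counts."""
    total = sum(count for _, count in updates)
    w = sum(weights[0] * count for weights, count in updates) / total
    b = sum(weights[1] * count for weights, count in updates) / total
    return (w, b)


def make_client(num_samples, rng):
    """Synthetic private data drawn from y = 3x + 1 plus noise."""
    xs = [rng.uniform(-1, 1) for _ in range(num_samples)]
    return [(x, 3 * x + 1 + rng.gauss(0, 0.1)) for x in xs]


rng = random.Random(0)
clients = [make_client(n, rng) for n in (20, 50, 80)]  # uneven data sizes
global_model = (0.0, 0.0)

for _ in range(50):  # repeat for a fixed number of rounds
    # Distribution + local training: each client starts from the global model
    # and shares only its updated weights and sample count.
    updates = [(local_train(global_model, data), len(data)) for data in clients]
    global_model = aggregate(updates)  # server builds the new global model

print(global_model)  # should be close to (3.0, 1.0)
```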

The main benefit of federated learning is privacy preservation since raw data doesn’t need to be shared among participants or with the central server. It’s especially useful when data is sensitive, like in healthcare settings, or when data is large and difficult to centrally collect, like in IoT networks.

However, federated learning also presents challenges. There can be significant variability in the number of data samples, data distribution across devices, and the computational capabilities of each device. Coordinating learning across numerous devices can also be complex.

For data security, federated learning alone isn’t enough, because model updates can still leak information about the data they were trained on. Additional security measures, such as secure multi-party computation or differential privacy, may be used to further protect individual model updates during transmission and prevent the central server from inferring sensitive information from these updates.
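
As one illustration of the secure multi-party computation route, here is a toy version of secure aggregation by pairwise masking: every pair of clients agrees on a random mask that one adds and the other subtracts, so the server can recover the sum of the updates without seeing any individual update. It assumes integer-quantized updates, no client dropouts, and masks agreed privately between each pair (for example, through a key exchange); for brevity the masks are simulated in one dictionary, which a real protocol would never do.

```python
import secrets

# Toy secure aggregation by pairwise additive masking. Assumptions: updates
# are already quantized to integers, no client drops out, and each pair of
# clients agreed on its mask privately. Holding all masks in one dictionary,
# as done here, is only a simulation shortcut.
MOD = 2**32


def pairwise_masks(num_clients):
    """One random mask per client pair (i, j) with i < j."""
    return {(i, j): secrets.randbelow(MOD)
            for i in range(num_clients) for j in range(i + 1, num_clients)}


def mask_update(client_id, update, masks, num_clients):
    """Add masks shared with higher-numbered clients, subtract those with lower ones."""
    masked = update
    for other in range(num_clients):
        if client_id < other:
            masked += masks[(client_id, other)]
        elif client_id > other:
            masked -= masks[(other, client_id)]
    return masked % MOD


updates = [17, 42, 5]  # each client's private, quantized update
masks = pairwise_masks(len(updates))
masked = [mask_update(i, u, masks, len(updates)) for i, u in enumerate(updates)]
# The server sees only the masked values, yet the masks cancel in the sum.
print(sum(masked) % MOD)  # 64
```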

Best practices for AI in data security

Be specific to your need

To ensure data security in AI, collect only what is necessary. Adhering to the principle of “need to know” limits the risks associated with sensitive data, because data you never collect cannot be lost or breached. Even when there is a legitimate need for data collection, gather the absolute minimum required to accomplish the task at hand. Stockpiling excess data may seem tempting, but it significantly increases your exposure to cybersecurity incidents. By strictly adhering to the “take only what you need” approach, organizations can limit the damage any breach can cause and keep data security at the center of their AI operations.

Know your data and eliminate redundant records

Begin by conducting a thorough assessment of your current data, examining the sensitivity of each dataset. Dispose of any unnecessary data to minimize risks. Additionally, take proactive measures to mitigate potential vulnerabilities in retained data. For instance, consider removing or redacting unstructured text fields that may contain sensitive information like names and phone numbers. It is crucial to not only consider your own interests but also empathize with individuals whose data you possess. By adopting this perspective, you can make informed decisions regarding data sensitivity and prioritize data security in AI operations.
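
A minimal sketch of regex-based redaction for unstructured text fields follows. The patterns are assumptions that cover common email addresses and North American phone formats only; names and street addresses need more than regular expressions, such as named-entity recognition or manual review.

```python
import re

# Illustrative patterns only: common email formats and North American phone
# numbers. They will miss many other formats and do nothing about names.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"(?:\+?1[\s.-]?)?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}\b")


def redact(text):
    """Replace emails and phone numbers in a free-text field with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


note = "Call Jane at (555) 123-4567 or email jane.doe@example.com."
print(redact(note))  # Call Jane at [PHONE] or email [EMAIL].
```

Note that “Jane” survives, which is why pattern matching alone should not be trusted to remove names.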

Encrypt data

Encrypting your data, whether at rest or in transit, is not a foolproof safety net, but it is usually a cost-effective way to protect that data if your network or hard disk is compromised. Unless your work depends on exceptionally high-speed applications, the performance cost of encryption is no longer a significant concern. Thus, if you are handling confidential data, enabling encryption should be your default approach.

The argument that encryption hurts performance is losing its relevance, as many modern, high-speed applications and services now include encryption as a built-in feature. For instance, Microsoft’s Azure SQL Database provides encryption at rest through Transparent Data Encryption (TDE).

Opt for secure file sharing services

While quick and simple methods of file sharing might suffice for submitting academic papers or sharing adorable pet photos, they pose risks when it comes to distributing sensitive data. Therefore, it’s advisable to employ a service that’s specifically engineered for the secure transfer of files. Some individuals might prefer using a permission-regulated S3 bucket on AWS, where encrypted files can be securely shared with other AWS users, or an SFTP server, which enables safe file transfers over an encrypted connection.

However, even a simple switch to platforms such as Dropbox or Google Drive can enhance security. Although these services are not designed primarily for security, they still offer stronger baseline protection, such as encrypting files at rest, and finer-grained access control than sending files by email or storing them on a poorly secured server.

For those seeking a higher level of security than Dropbox or Google Drive can provide, SpiderOak One is a worthy alternative. It offers end-to-end encryption for both file storage and sharing, coupled with a user-friendly interface and an affordable pricing structure, making it accessible for nearly everyone.

Ensure security for cloud services

Avoid falling into the trap of thinking that if someone else manages the servers, you don’t need to worry about security. The reverse is true: you need to follow numerous best practices to safeguard these systems, and it pays to review the security recommendations published by the providers of such services.

These precautions include requiring authentication for S3 buckets and other file storage systems rather than leaving them publicly accessible, closing every server port that isn’t needed, and restricting access to your services to authorized IP addresses or to connections through a VPN tunnel.
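
The IP restriction can be sketched with Python’s standard `ipaddress` module. The allowed ranges are hypothetical (203.0.113.0/24 is reserved for documentation), and in practice this kind of rule is usually enforced in firewalls, security groups, or bucket policies rather than in application code.

```python
import ipaddress

# Hypothetical allowlist: an office range and a VPN address pool.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),  # documentation range, stands in for an office
    ipaddress.ip_network("10.8.0.0/16"),     # e.g., addresses assigned by a VPN
]


def is_allowed(client_ip: str) -> bool:
    """Return True only if the client address falls inside an allowed network."""
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        return False  # reject malformed input instead of failing open
    return any(addr in net for net in ALLOWED_NETWORKS)


for ip in ("203.0.113.42", "10.8.3.7", "198.51.100.9", "not-an-ip"):
    print(ip, "allowed" if is_allowed(ip) else "denied")
```

Rejecting malformed input, rather than letting it through, keeps the check failing closed.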

Practice thoughtful sharing

When dealing with sensitive information, assign access rights to individual users (be they internal or external) for specific datasets, rather than granting mass authorization. Grant access only when it is genuinely necessary, and only for a specific purpose and duration.
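
A minimal sketch of per-user, per-dataset, purpose- and time-bound access follows, assuming a hypothetical `Grant` record and a deny-by-default check. A real system would keep grants in an access-management service and log every decision.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    """Hypothetical access grant: one user, one dataset, one purpose, one expiry."""
    user: str
    dataset: str
    purpose: str
    expires_at: datetime


def can_access(grants, user, dataset, purpose, now=None):
    """Deny by default; allow only if an unexpired grant matches all three fields."""
    if now is None:
        now = datetime.now(timezone.utc)
    return any(
        g.user == user and g.dataset == dataset and g.purpose == purpose
        and now < g.expires_at
        for g in grants
    )


start = datetime(2024, 1, 1, tzinfo=timezone.utc)
grants = [Grant("alice", "claims_2023", "fraud-model-training",
                expires_at=start + timedelta(days=30))]

print(can_access(grants, "alice", "claims_2023", "fraud-model-training", now=start))  # True
print(can_access(grants, "alice", "claims_2023", "marketing", now=start))             # False
print(can_access(grants, "bob", "claims_2023", "fraud-model-training", now=start))    # False
print(can_access(grants, "alice", "claims_2023", "fraud-model-training",
                 now=start + timedelta(days=31)))                                     # False
```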

It is also beneficial to have your collaborators sign nondisclosure and data usage agreements. While these may not always be rigorously enforced, they help set clear guidelines for how others should handle the data to which you have granted them access. Regular log checks are also crucial to ensure that the data is being used as intended.

Ensure holistic security: Data, applications, backups, and analytics

Essentially, every component that interacts with your data needs to be safeguarded. Otherwise, your security efforts may prove futile: an impeccably secure database can still be compromised through an unprotected dashboard server that caches its data. Similarly, remember that system backups duplicate your data files, so the backups persist even after the original files are removed; that is the very essence of a backup.

Therefore, these backups need to be not only defended, but also discarded when they have served their purpose. If neglected, these backups may turn into a hidden cache for hackers – why would they struggle with your diligently maintained operational database when all the data they need exists on an unprotected backup drive?

Ensure no raw data leakage in shared outputs

Certain machine learning models encapsulate data, such as terminology and expressions from source documents, within the trained model structure. As a result, sharing such a model or its outputs can inadvertently disclose training data. Similarly, raw data can be embedded in the final product of dashboards, graphs, or maps, even when only aggregate results are visible on the surface.

Even if you’re only distributing a static chart image, bear in mind that tools exist that can reconstruct the data behind it, so never assume you’re concealing raw data merely because you’re not sharing tables. It’s vital to understand exactly what you’re sharing and to anticipate how ill-intentioned individuals might misuse it.
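
One way to keep raw records out of a dashboard is to compute aggregates on the server and ship only those, suppressing groups too small to hide an individual (small-cell suppression, a common disclosure-control practice swapped in here as an illustration). The threshold of 10 and the field names are assumptions.

```python
from collections import defaultdict

MIN_GROUP_SIZE = 10  # assumed suppression threshold; set it per your own policy


def dashboard_payload(records, group_key, value_key, min_size=MIN_GROUP_SIZE):
    """Build chart data from per-group averages only; raw records never leave.

    Groups smaller than min_size are suppressed, because an average over a
    handful of people can reveal an individual's value.
    """
    groups = defaultdict(list)
    for rec in records:
        groups[rec[group_key]].append(rec[value_key])
    payload = {}
    for group, values in groups.items():
        if len(values) >= min_size:
            payload[group] = round(sum(values) / len(values), 1)
        else:
            payload[group] = None  # suppressed
    return payload


records = ([{"region": "North", "salary": 50_000 + i * 100} for i in range(12)]
           + [{"region": "South", "salary": 91_000}])  # a single employee
print(dashboard_payload(records, "region", "salary"))  # {'North': 50550.0, 'South': None}
```

Suppression has limits: if the dashboard also shows an overall total, a suppressed value can sometimes be recovered by subtraction, so totals need the same scrutiny.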

Understand the privacy impacts of ‘de-identifying’ data

Eliminating personally identifiable information (PII) from a dataset, especially when you don’t need it, is an effective way to mitigate the potential fallout of a data breach. Moreover, it’s a crucial step to take prior to making data public. However, erasing PII doesn’t necessarily shield the identities in your dataset. Could your data be re-associated with identities if matched with other data? Are the non-PII attributes distinct enough to pinpoint specific individuals?

A simple hashing method might not suffice: hashing direct identifiers leaves every other attribute untouched, and hashes of predictable values such as phone numbers can be reversed by hashing all possible values and comparing. For instance, you might receive a supposedly “anonymized” consumer data file only to identify yourself swiftly based on a unique blend of age, race, gender, and length of residence in a Census block. With minimal effort, you could potentially discover the records of many others. Pairing this with a publicly available voter registration file could enable you to match most records to individuals’ names, addresses, and birth dates.
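
Whether the non-PII attributes are distinct enough to pinpoint individuals can be tested with a k-anonymity check: every combination of quasi-identifiers should be shared by at least k records. In this minimal sketch the column names, the sample rows, and k = 5 are assumptions; the hashed `id_hash` column shows that hashing identifiers does nothing for a record that is unique on its other attributes.

```python
from collections import Counter

# Sketch of a k-anonymity check. The quasi-identifier columns and k are
# assumptions to be set for your own data and risk tolerance.
QUASI_IDENTIFIERS = ("age", "gender", "census_block")


def quasi_key(row, quasi_ids=QUASI_IDENTIFIERS):
    """The combination of quasi-identifier values for one record."""
    return tuple(row[q] for q in quasi_ids)


def risky_records(rows, quasi_ids=QUASI_IDENTIFIERS, k=5):
    """Return rows whose quasi-identifier combination is shared by fewer than k rows."""
    counts = Counter(quasi_key(row, quasi_ids) for row in rows)
    return [row for row in rows if counts[quasi_key(row, quasi_ids)] < k]


# Hypothetical "anonymized" file: names replaced by hashes, other fields kept.
rows = [{"id_hash": f"h{i}", "age": 34, "gender": "F", "census_block": "1001"}
        for i in range(5)]
rows.append({"id_hash": "h5", "age": 71, "gender": "M", "census_block": "1001"})

print([row["id_hash"] for row in risky_records(rows)])  # ['h5']
```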

While there isn’t a flawless standard for de-identification, if privacy protection is a concern and you’re relying on de-identification, it’s strongly recommended to follow the U.S. Department of Health and Human Services (HHS) guidance for de-identifying protected health information under HIPAA, which describes the Safe Harbor and Expert Determination methods. Although this doesn’t guarantee absolute privacy protection, it’s your best bet for maintaining useful data while striving for maximum privacy.

Understand your potential worst-case outcomes

Despite all preventive measures, risk can never be eliminated completely, and no system is impervious to threats. It’s therefore essential to consider the gravest possible consequences if your data were breached, and then revisit the first two practices: collect only what you need, and dispose of data you don’t need. If the potential risks are unacceptable, it’s best not to retain the sensitive data to begin with.

Future trends in AI data security

Technological advancements for enhanced data security

As data security and privacy take center stage in today’s digital landscape, several transformative technological advancements are emerging. According to Forrester’s analysis, key innovations include Cloud Data Protection (CDP) and Tokenization, which protect sensitive data by encrypting it before transit to the cloud and by replacing it with randomly generated tokens, respectively. Big Data Encryption further fortifies databases against cyberattacks and data leaks, while Data Access Governance offers much-needed visibility into data locations and access activities.

Simultaneously, Consent/Data Subject Rights Management and Data Privacy Management Solutions address personal privacy concerns, ensuring organizations manage consent and enforce individuals’ rights over shared data while adhering to privacy processes and compliance requirements. Advanced techniques such as Data Discovery and Flow Mapping, Data Classification, and Enterprise Key Management (EKM) play pivotal roles in identifying, classifying, and prioritizing sensitive data, and managing diverse encryption key life-cycles. Lastly, Application-level Encryption provides robust, fine-grained encryption policies, securing data within applications before database storage. Each of these innovations serves as a crucial tool in enhancing an organization’s data security framework, ensuring privacy, compliance, and protection against potential cyber threats.

The role of blockchain technology in AI data security

At present, blockchain is recognized as one of the most robust technologies for data protection. With the digital landscape rapidly evolving, new data security challenges have emerged, demanding stronger authentication and cryptography mechanisms. Blockchain is efficiently tackling these challenges by providing secure data storage and deterring malicious cyber-attacks. The global blockchain market is projected to reach approximately $20 billion by 2024, with applications spanning multiple sectors, including healthcare, finance, and sports.

Unlike traditional methods, blockchain technology has motivated companies to reevaluate and redesign their security measures, instilling a sense of trust in data management. Blockchain’s distributed ledger system provides a high level of security, which is advantageous for establishing secure data networks. Businesses in the consumer products and services industry are adopting blockchain to record consumer data securely.

As one of this century’s significant technological breakthroughs, blockchain lets parties transact without relying on a trusted third party, opening new opportunities to disrupt business services and consumer solutions. In the future, this technology is expected to lead global services across various sectors.

Blockchain’s use of cryptographic hashing and digital signatures supports robust data management by making it possible to verify that data hasn’t been tampered with. When smart contracts are used in conjunction with blockchain, specific validations run automatically when certain conditions are met. Any alteration to the data must be verified by the nodes in the network, each of which holds its own copy of the ledger.
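
The ability to verify that data hasn’t been tampered with rests largely on cryptographic hashing, which can be illustrated with a toy hash chain built on SHA-256 from Python’s standard library: each block records the hash of its predecessor, so editing an earlier block breaks the link to the next one. This is a simplified sketch, not how any particular blockchain is implemented; it omits consensus, signatures, and replication across nodes, and the function names are hypothetical.

```python
import hashlib
import json


def block_hash(block):
    """SHA-256 over a canonical JSON encoding of the block."""
    payload = json.dumps(block, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()


def append_block(chain, data):
    """Append a block that commits to the hash of the previous block."""
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "data": data, "prev_hash": prev})


def is_valid(chain):
    """Check that every block points at the hash of its predecessor."""
    return all(chain[i]["prev_hash"] == block_hash(chain[i - 1])
               for i in range(1, len(chain)))


chain = []
for record in ("consent granted: user 17", "model v2 trained", "dataset v3 archived"):
    append_block(chain, record)

print(is_valid(chain))  # True
chain[0]["data"] = "consent revoked: user 17"  # tamper with history
print(is_valid(chain))  # False
```

On its own, a hash chain only makes tampering detectable: someone able to rewrite every later block could recompute the hashes. That is why real networks keep copies of the ledger on many nodes and require them to agree.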

For secure data storage, especially of shared community data, blockchain’s capabilities are particularly strong. Combined with encryption of the stored data, they can ensure that no unauthorized entity can read or interfere with it. This technology also helps public services maintain decentralized and secure public records. Moreover, businesses can store a cryptographic hash or signature of their data on a blockchain, which later lets them demonstrate that the data hasn’t been altered. In blockchain-based distributed storage software, large amounts of data are broken into encrypted chunks and spread across a network, which helps secure the data.

Lastly, its decentralized, cryptographically secured, and cross-verified nature makes blockchain highly resistant to hacking and tampering. Blockchain’s distributed ledger technology offers a crucial feature known as data immutability, which significantly enhances security by ensuring that actions or transactions recorded on the blockchain cannot practically be altered or falsified. Every transaction is validated by multiple nodes on the network, further bolstering security.

Endnote

In today’s interconnected world, trust is rapidly becoming an elusive asset. As interactions within organizations grow more complex, with human and machine entities, including Artificial Intelligence (AI) and Machine Learning (ML) systems, closely integrated, establishing trust presents a considerable challenge. This calls for an urgent and thorough reform of our trust systems, adapting them to this dynamic landscape.

In the coming years, data will surge in importance and value. This rise will inevitably draw the attention of hackers intent on exploiting our data, services, and servers. Furthermore, the very nature of cyber threats is changing, with AI- and ML-enabled machines increasingly replacing humans in orchestrating sophisticated attacks, making prevention, detection, and response considerably more complex.

In light of these evolving trends, the importance of data security cannot be overstated. Because AI systems handle vast amounts of data, they are attractive targets for cyber threats. Integrating robust security measures, such as advanced encryption techniques, secure data storage, and stringent authentication protocols, into AI and ML systems should therefore be central to any data management strategy.

The future success of organizations will pivot on their commitment to data security. Investments in AI and ML systems should coincide with substantial investments in data security, creating a secure infrastructure for these advanced technologies to operate. An organization’s dedication to data security not only safeguards sensitive information but also reinforces its reputation and trustworthiness. Only by prioritizing data security can we fully unleash the transformative potential of AI and ML, guiding our organizations towards a secure and prosperous future. It’s time to fortify our defenses and ensure the safety and security of our data in the dynamic landscape of artificial intelligence.

Secure your AI systems with our advanced data security solutions or benefit from expert consultations for data security in AI systems tailored to your needs. Contact LeewayHertz today!
